I. deployment of flannel

1.1 installation of flannel

Kubernetes requires that all nodes in the cluster (including the master nodes) can reach one another over the Pod network segment. flannel uses VXLAN to create an interoperable Pod network across the nodes. It uses UDP port 8472.

When flanneld starts for the first time, it obtains the configured Pod network segment information from etcd, assigns an unused address segment to the node, and then creates the flannel.1 network interface (the name may differ, for example flannel1).

flannel writes the Pod segment information assigned to the node to the /run/flannel/docker file. Docker then uses the environment variables in this file to configure the docker0 bridge, so that all Pod containers on the node are assigned IPs from this address segment.
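As a rough illustration of the file that Docker consumes, the sketch below extracts the bridge address from an options file of the kind mk-docker-opts.sh produces. The sample content, including the 172.30.32.1/21 address and the MTU, is an illustrative assumption rather than output captured from a real node, and the helper name `docker_bip` is hypothetical.

```shell
# Sketch: read the --bip value out of a mk-docker-opts.sh style options file.
# docker_bip is a hypothetical helper; $1 is the path to the options file
# (on a real node this would be /run/flannel/docker).
docker_bip() {
    sed -n 's/^DOCKER_OPT_BIP="\{0,1\}--bip=\([^"]*\)"\{0,1\}$/\1/p' "$1"
}

# Illustrative sample content (assumed layout, not taken from a real node).
sample=$(mktemp)
cat > "$sample" <<'EOF'
DOCKER_OPT_BIP="--bip=172.30.32.1/21"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.30.32.1/21 --ip-masq=false --mtu=1450"
EOF

docker_bip "$sample"
rm -f "$sample"
```

On a deployed node the same function could be pointed at /run/flannel/docker to confirm which address docker0 will receive.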

For more information on flannel, please refer to 008.Docker Flannel+Etcd distributed network deployment.

    [root@k8smaster01 ~]# cd /opt/k8s/work/
    [root@k8smaster01 work]# mkdir flannel
    [root@k8smaster01 work]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
    [root@k8smaster01 work]# tar -xzvf flannel-v0.11.0-linux-amd64.tar.gz -C flannel

1.2 distribution of flannel

    [root@k8smaster01 ~]# cd /opt/k8s/work
    [root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
      do
        echo ">>> ${master_ip}"
        scp flannel/{flanneld,mk-docker-opts.sh} root@${master_ip}:/opt/k8s/bin/
        ssh root@${master_ip} "chmod +x /opt/k8s/bin/*"
      done

1.3 create a flannel certificate and key

    # Create the certificate signing request (CSR) file for flanneld
    [root@k8smaster01 ~]# cd /opt/k8s/work
    [root@k8smaster01 work]# cat > flanneld-csr.json <<EOF
    {
      "CN": "flanneld",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

Interpretation:

The certificate will only be used by flanneld as a client certificate when it connects to etcd, so the hosts field is empty.

    [root@k8smaster01 ~]# cd /opt/k8s/work
    [root@k8smaster01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
    -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json \
    -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld    # Generate the flanneld private key (flanneld-key.pem) and certificate (flanneld.pem)

1.4 distribute certificate and private key

    [root@k8smaster01 ~]# cd /opt/k8s/work
    [root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
      do
        echo ">>> ${master_ip}"
        ssh root@${master_ip} "mkdir -p /etc/flanneld/cert"
        scp flanneld*.pem root@${master_ip}:/etc/flanneld/cert
      done

1.5 write cluster Pod segment information

    [root@k8smaster01 ~]# cd /opt/k8s/work
    [root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 work]# etcdctl \
      --endpoints=${ETCD_ENDPOINTS} \
      --ca-file=/opt/k8s/work/ca.pem \
      --cert-file=/opt/k8s/work/flanneld.pem \
      --key-file=/opt/k8s/work/flanneld-key.pem \
      mk ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 21, "Backend": {"Type": "vxlan"}}'

Note: this step only needs to be performed once.

Tip: the current version of flanneld (v0.11.0) does not support etcd v3, so use the etcd v2 API to write the configuration key and network segment data.

The prefix length of the Pod network segment ${CLUSTER_CIDR} (such as /16) must be smaller than SubnetLen, and the segment must be consistent with the --cluster-cidr parameter value of kube-controller-manager.
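The relationship between the cluster prefix and SubnetLen determines how many nodes can receive a subnet and how large each subnet is. The arithmetic can be sketched as follows; the function names `subnet_count` and `subnet_size` are hypothetical helpers, and the /16 and 21 values are the ones used in this article.

```shell
# Sketch: how many node subnets fit into the cluster Pod CIDR.
# $1: prefix length of the cluster CIDR, $2: SubnetLen
subnet_count() {
    if [ "$2" -le "$1" ] || [ "$2" -gt 32 ]; then
        echo "SubnetLen must be larger than the cluster prefix and at most 32" >&2
        return 1
    fi
    echo $(( 1 << ($2 - $1) ))
}

# Number of addresses inside one node subnet.
# $1: SubnetLen
subnet_size() {
    echo $(( 1 << (32 - $1) ))
}

subnet_count 16 21   # node subnets available in a /16 with SubnetLen 21
subnet_size 21       # addresses in each /21 node subnet
```

With a /16 cluster segment and SubnetLen 21 this gives 32 node subnets of 2048 addresses each, which is why the cluster prefix has to be shorter than SubnetLen.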

1.6 create the systemd unit file for flanneld

    [root@k8smaster01 ~]# cd /opt/k8s/work
    [root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 work]# cat > flanneld.service << EOF
    [Unit]
    Description=Flanneld overlay address etcd agent
    After=network.target
    After=network-online.target
    Wants=network-online.target
    After=etcd.service
    Before=docker.service

    [Service]
    Type=notify
    ExecStart=/opt/k8s/bin/flanneld \\
      -etcd-cafile=/etc/kubernetes/cert/ca.pem \\
      -etcd-certfile=/etc/flanneld/cert/flanneld.pem \\
      -etcd-keyfile=/etc/flanneld/cert/flanneld-key.pem \\
      -etcd-endpoints=${ETCD_ENDPOINTS} \\
      -etcd-prefix=${FLANNEL_ETCD_PREFIX} \\
      -iface=${IFACE} \\
      -ip-masq
    ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
    Restart=always
    RestartSec=5
    StartLimitInterval=0

    [Install]
    WantedBy=multi-user.target
    RequiredBy=docker.service
    EOF

Interpretation:

mk-docker-opts.sh: this script writes the Pod subnet information assigned to flanneld to the /run/flannel/docker file. When Docker starts, it uses the environment variables in this file to configure the docker0 bridge;

flanneld: by default it uses the interface that carries the system default route to communicate with other nodes. For nodes with multiple network interfaces (such as an internal network and a public network), you can use the -iface parameter to specify the communication interface;

flanneld: root permission is required at runtime;

-ip-masq: flanneld sets the SNAT rules for traffic leaving the Pod network, and sets the --ip-masq variable passed to Docker (in the /run/flannel/docker file) to false, so that Docker no longer creates SNAT rules. When Docker's --ip-masq is true, the SNAT rules it creates are rather "violent": every request initiated by a Pod on the node that does not go to the docker0 interface is SNATed. As a result, the source IP of requests to Pods on other nodes is set to the IP of the flannel.1 interface, and the destination Pod cannot see the real source Pod IP. The SNAT rules created by flanneld are milder: only requests to non-Pod network segments are SNATed.
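The difference described above comes down to one question per packet: is the destination inside the cluster Pod segment or not? The sketch below models only that decision in plain shell arithmetic. It is not how flanneld implements its iptables rules, and the function names are hypothetical.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# The split on "." happens in a subshell so the caller's IFS is untouched.
ip_to_int() {
    (
        IFS=.
        set -- $1
        echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
    )
}

# Succeeds when address $1 lies inside CIDR $2 (for example 172.30.0.0/16).
in_cidr() {
    net=${2%/*}
    len=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Models the "mild" policy: SNAT only when the destination is outside the Pod CIDR.
# $1: destination IP, $2: cluster Pod CIDR
needs_snat() {
    if in_cidr "$1" "$2"; then echo no; else echo yes; fi
}

needs_snat 172.30.128.5 172.30.0.0/16   # Pod on another node
needs_snat 172.24.8.72 172.30.0.0/16    # node address outside the Pod segment
```

Under this model, Pod-to-Pod traffic keeps its source address while traffic leaving the Pod segment is rewritten, which matches the behaviour the text attributes to flanneld.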

1.7 distribute the flanneld systemd unit file

    [root@k8smaster01 ~]# cd /opt/k8s/work
    [root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
      do
        echo ">>> ${master_ip}"
        scp flanneld.service root@${master_ip}:/etc/systemd/system/
      done

II. Start and verify

2.1 start flannel

    [root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
      do
        echo ">>> ${master_ip}"
        ssh root@${master_ip} "systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld"
      done

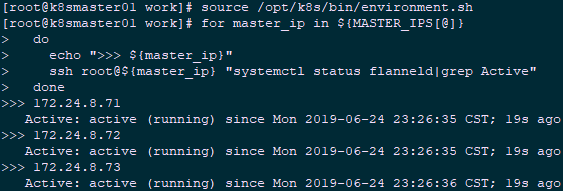

2.2 check the startup of flannel

    [root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
      do
        echo ">>> ${master_ip}"
        ssh root@${master_ip} "systemctl status flanneld|grep Active"
      done

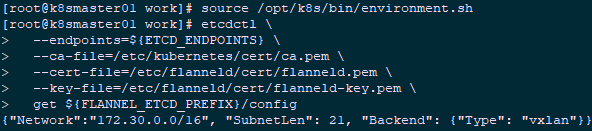

2.3 check pod segment information

    [root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 ~]# etcdctl \
      --endpoints=${ETCD_ENDPOINTS} \
      --ca-file=/etc/kubernetes/cert/ca.pem \
      --cert-file=/etc/flanneld/cert/flanneld.pem \
      --key-file=/etc/flanneld/cert/flanneld-key.pem \
      get ${FLANNEL_ETCD_PREFIX}/config    # View the cluster Pod segment (/16)

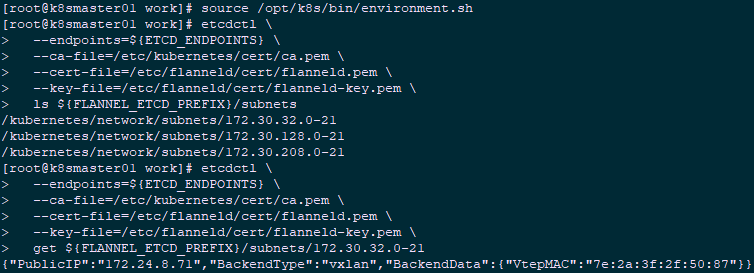

    [root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 ~]# etcdctl \
      --endpoints=${ETCD_ENDPOINTS} \
      --ca-file=/etc/kubernetes/cert/ca.pem \
      --cert-file=/etc/flanneld/cert/flanneld.pem \
      --key-file=/etc/flanneld/cert/flanneld-key.pem \
      ls ${FLANNEL_ETCD_PREFIX}/subnets    # View the list of allocated Pod subnet segments (/21)
    [root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 ~]# etcdctl \
      --endpoints=${ETCD_ENDPOINTS} \
      --ca-file=/etc/kubernetes/cert/ca.pem \
      --cert-file=/etc/flanneld/cert/flanneld.pem \
      --key-file=/etc/flanneld/cert/flanneld-key.pem \
      get ${FLANNEL_ETCD_PREFIX}/subnets/172.30.32.0-21    # View the node IP and flannel interface address corresponding to a Pod network segment

Interpretation:

172.30.32.0/21 is assigned to node k8smaster01 (172.24.8.71);

VtepMAC is the MAC address of the flannel.1 network card of k8smaster01 node.
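The subnet key and its value follow a simple pattern: the slash of the subnet is replaced by a dash in the key name, and the value is a small JSON document. The sketch below builds such a key and pulls the node IP out of a value. The prefix /kubernetes/network, the MAC address, and the helper names are illustrative assumptions, since the real prefix comes from ${FLANNEL_ETCD_PREFIX} and the real value from etcd.

```shell
# Build the etcd key under which flannel stores a node subnet.
# $1: etcd prefix, $2: subnet in CIDR form (172.30.32.0/21 -> 172.30.32.0-21)
subnet_key() {
    echo "$1/subnets/$(echo "$2" | tr '/' '-')"
}

# Read a subnet value (JSON) on stdin and print its PublicIP field.
public_ip() {
    sed -n 's/.*"PublicIP" *: *"\([^"]*\)".*/\1/p'
}

# Hypothetical prefix; use ${FLANNEL_ETCD_PREFIX} on a real cluster.
subnet_key /kubernetes/network 172.30.32.0/21

# Illustrative value with a made-up VtepMAC.
echo '{"PublicIP":"172.24.8.71","BackendType":"vxlan","BackendData":{"VtepMAC":"aa:bb:cc:dd:ee:ff"}}' | public_ip
```

On a real cluster the output of the etcdctl get command shown earlier could be piped into `public_ip` instead of the sample string.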

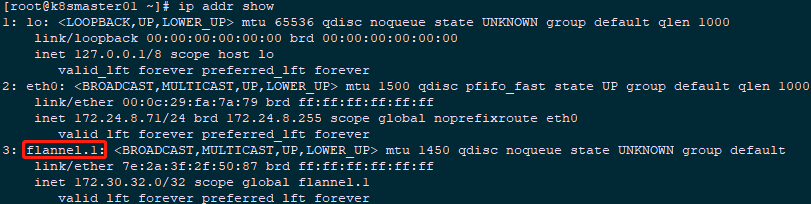

2.4 check the flannel network information

    [root@k8smaster01 ~]# ip addr show

Explanation: the address of the flannel.1 network card is the first IP (.0) of the allocated Pod subnet segment, with a /32 mask.

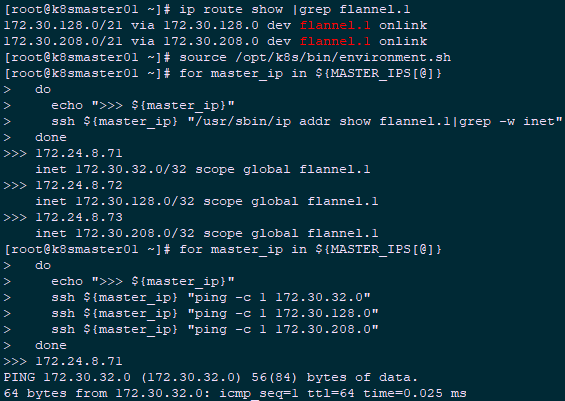

    [root@k8smaster01 ~]# ip route show |grep flannel.1
    172.30.128.0/21 via 172.30.128.0 dev flannel.1 onlink
    172.30.208.0/21 via 172.30.208.0 dev flannel.1 onlink

Interpretation:

Requests to other nodes' Pod network segments are forwarded to the flannel.1 network card;

flanneld uses the subnet information stored in etcd, such as ${FLANNEL_ETCD_PREFIX}/subnets/172.30.32.0-21, to determine which node's interconnection IP a request should be sent to.
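To see at a glance which remote Pod segments a node knows about, the route listing can be reduced to destination and next-hop pairs. The following is a small sketch that filters routing-table text by device name; `remote_pod_subnets` is a hypothetical helper, and the sample input is the output shown above.

```shell
# Print "destination next-hop" for every route that leaves via the given device.
# $1: device name (for example flannel.1); the routing table is read from stdin.
# Assumes the "<dest> via <gateway> dev <device> ..." layout shown above.
remote_pod_subnets() {
    awk -v dev="$1" '{
        for (i = 1; i < NF; i++)
            if ($i == "dev" && $(i + 1) == dev) { print $1, $3 }
    }'
}

# Sample input copied from the listing above.
remote_pod_subnets flannel.1 <<'EOF'
172.30.128.0/21 via 172.30.128.0 dev flannel.1 onlink
172.30.208.0/21 via 172.30.208.0 dev flannel.1 onlink
EOF
```

On a node, `ip route show | remote_pod_subnets flannel.1` would produce the same kind of list from the live routing table.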

2.5 verify the flannel of each node

After deploying flannel on each node, check whether the flannel interface is created (the name may be flannel0, flannel.0, flannel.1, etc.):

    [root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
      do
        echo ">>> ${master_ip}"
        ssh ${master_ip} "/usr/sbin/ip addr show flannel.1|grep -w inet"
      done

Output:

    >>> 172.24.8.71
        inet 172.30.32.0/32 scope global flannel.1
    >>> 172.24.8.72
        inet 172.30.128.0/32 scope global flannel.1
    >>> 172.24.8.73
        inet 172.30.208.0/32 scope global flannel.1

Ping all the flannel interface IPs from each node to ensure that they are reachable:

    [root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
    [root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
      do
        echo ">>> ${master_ip}"
        ssh ${master_ip} "ping -c 1 172.30.32.0"
        ssh ${master_ip} "ping -c 1 172.30.128.0"
        ssh ${master_ip} "ping -c 1 172.30.208.0"
      done
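The three addresses in the ping loop are hard-coded from the earlier output. As a possible refinement, they could be extracted from that output instead, so the check keeps working when flanneld assigns different subnets. The sketch below shows only the extraction step; `flannel_ips` is a hypothetical helper and the sample input is the output shown above.

```shell
# Read "ip addr show flannel.1 | grep -w inet" style lines on stdin
# and print the bare IPv4 addresses, one per line.
flannel_ips() {
    awk '$1 == "inet" { sub(/\/.*/, "", $2); print $2 }'
}

# Sample input copied from the output above.
flannel_ips <<'EOF'
>>> 172.24.8.71
    inet 172.30.32.0/32 scope global flannel.1
>>> 172.24.8.72
    inet 172.30.128.0/32 scope global flannel.1
>>> 172.24.8.73
    inet 172.30.208.0/32 scope global flannel.1
EOF
```

The resulting list could then drive the inner ping commands of the loop in place of the literal addresses.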