I. Flannel component

1.1 Introduction to Flannel

Kubernetes' network model assumes that all Pods live in a flat network space in which they can reach each other directly. To satisfy this assumption, Docker containers on different nodes must be able to reach each other before Kubernetes can run on top of them. Many open source components currently support this container network model, such as Flannel, Open vSwitch, direct routing and Calico.

Flannel can build the underlying network that Kubernetes relies on because it does the following two things:

- It helps Kubernetes assign non-conflicting IP addresses to every Docker container on every node.

- It builds an overlay network between these IP addresses, through which packets are delivered to the target container.

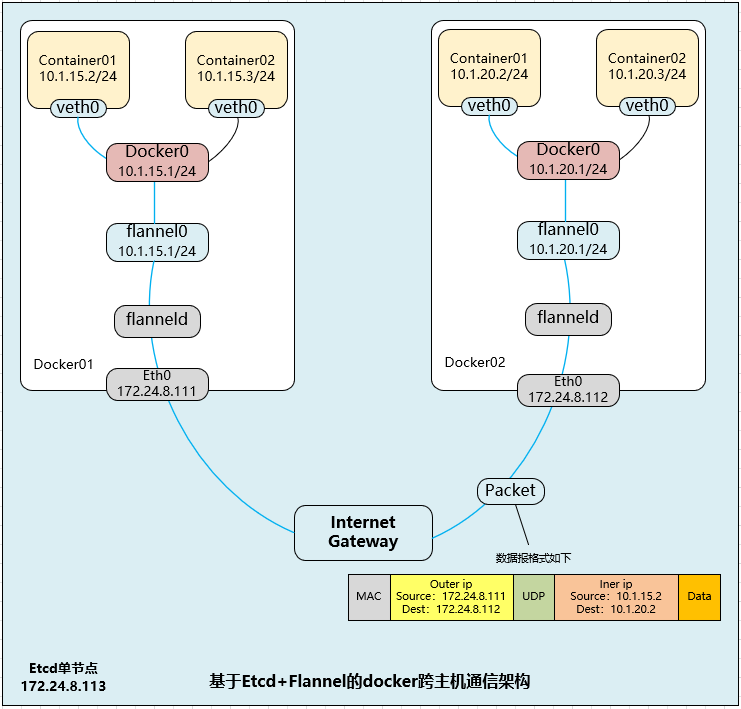

Flannel architecture:

As shown in the figure above, Flannel first creates a network interface named flannel0 (a TUN device when the UDP backend is used). Traffic from the docker0 bridge is routed to flannel0, and the other side of flannel0 is handled by a service process called flanneld. flanneld connects to etcd, which it uses to manage the pool of allocatable address segments; it watches the address allocations recorded in etcd and builds a routing table in memory that maps Pod subnets to nodes. flanneld thus links docker0 to the physical network: it uses this in-memory table to encapsulate the packets that docker0 hands to it, and sends them over the physical network to the flanneld on the target node, which enables direct Pod-to-Pod communication by IP address.

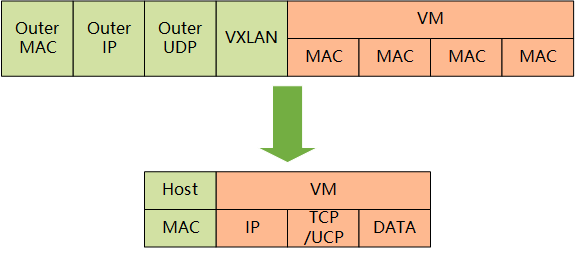
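To make the in-memory routing table concrete, here is a minimal Python sketch. It assumes each entry is a (node Pod subnet → node physical IP) pair, which is how the lease data described above can be modelled; the subnets and node addresses are hypothetical, and flanneld's real data structures differ.

```python
import ipaddress

# Hypothetical leases: Pod subnet of a node -> that node's physical IP address.
LEASES = {
    "172.17.18.0/24": "192.168.1.11",
    "172.17.42.0/24": "192.168.1.12",
}


def build_route_table(leases):
    """Turn the lease map into (network, node_ip) pairs held in memory."""
    return [(ipaddress.ip_network(subnet), node_ip) for subnet, node_ip in leases.items()]


def next_hop(route_table, pod_ip):
    """Return the physical IP of the node whose subnet contains pod_ip, or None."""
    addr = ipaddress.ip_address(pod_ip)
    matches = [(net, node_ip) for net, node_ip in route_table if addr in net]
    if not matches:
        return None
    # Longest-prefix match: prefer the most specific subnet.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


table = build_route_table(LEASES)
print(next_hop(table, "172.17.42.7"))  # 192.168.1.12
print(next_hop(table, "10.0.0.1"))     # None
```

A packet for 172.17.42.7 would therefore be encapsulated and sent to 192.168.1.12, where the peer flanneld unwraps it.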

Flannel supports several backends for the communication between flanneld instances, including UDP, VXLAN and AWS VPC. The source flanneld encapsulates each packet and the target flanneld decapsulates it, so docker0 on the target node finally receives the original data. The whole process is transparent to container applications, which are not aware of Flannel in between.
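As an illustration of the encapsulate/decapsulate step, the sketch below builds and strips the 8-byte VXLAN header defined in RFC 7348 (flags byte with the "I" bit, reserved bytes, 24-bit VNI). It models only the VXLAN case; VNI 1 is used purely as an example, and in a real deployment the kernel's VXLAN device, not user code, adds this header together with the outer Ethernet, IP and UDP headers (14 + 20 + 8 + 8 = 50 bytes of overhead in total).

```python
import struct

# Flags byte, 3 reserved bytes, then a 32-bit word holding the 24-bit VNI and 1 reserved byte.
VXLAN_HEADER = struct.Struct("!B3xI")
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag from RFC 7348


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend a VXLAN header to an inner Ethernet frame."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return VXLAN_HEADER.pack(VXLAN_FLAG_VNI_VALID, vni << 8) + inner_frame


def vxlan_decap(payload: bytes):
    """Strip the VXLAN header and return (vni, inner_frame)."""
    flags, word = VXLAN_HEADER.unpack_from(payload)
    if not flags & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word >> 8, payload[VXLAN_HEADER.size:]


inner = b"original frame from docker0"
packet = vxlan_encap(inner, vni=1)
print(len(packet) - len(inner))  # 8
print(vxlan_decap(packet))       # (1, b'original frame from docker0')
```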

Flannel allocates the address segment for every node from the same shared pool, so the IP addresses assigned to Pods on different nodes never conflict. Once flannel has allocated a segment, the rest of the work is done by Docker: flannel hands the segment to Docker by modifying Docker's startup parameters, for example:

--bip=172.17.18.1/24

In this way, flannel controls the docker0 address segment on each node, which guarantees that the IP addresses of all Pods lie in one flat network without conflicts. Flannel fully supports the Kubernetes network model, but it introduces several extra network components: traffic has to be switched to the flannel0 interface and then to the user-space flanneld process, and the receiving end goes through the same steps in reverse, so some extra network latency is introduced. In addition, flannel uses UDP as the underlying transport by default, and UDP itself is unreliable. Although TCP between the two endpoints still provides reliable transmission, thorough testing is recommended for high-traffic, high-concurrency scenarios.
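The per-node allocation and the resulting --bip value can be sketched as follows. This is a simplified, in-memory model under stated assumptions: subnets are handed out sequentially from a shared pool (flannel's real allocator keeps its leases in etcd and need not allocate in order), and docker0 takes the first host address of the node's subnet, matching the --bip=172.17.18.1/24 example above. The node names and the already-taken subnet are hypothetical.

```python
import ipaddress


def allocate_subnets(pool_cidr, subnet_prefix, nodes, taken=()):
    """Give each node a distinct subnet from the shared pool, skipping ones already leased."""
    pool = ipaddress.ip_network(pool_cidr)
    used = {ipaddress.ip_network(s) for s in taken}
    free = (s for s in pool.subnets(new_prefix=subnet_prefix) if s not in used)
    leases = {}
    for node in nodes:
        subnet = next(free, None)
        if subnet is None:
            raise RuntimeError("address pool exhausted")
        leases[node] = subnet
    return leases


def docker_bip(subnet):
    """docker0 gets the first host address of the node's subnet."""
    first_host = next(subnet.hosts())
    return f"--bip={first_host}/{subnet.prefixlen}"


leases = allocate_subnets("172.17.0.0/16", 24, ["node1", "node2"], taken=["172.17.0.0/24"])
print(leases["node1"], docker_bip(leases["node1"]))  # 172.17.1.0/24 --bip=172.17.1.1/24
```

Because every subnet comes from one pool and is handed out only once, no two nodes can end up with overlapping docker0 ranges.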

Note: for more on implementing and deploying flannel, see "008. Docker Flannel+Etcd distributed network deployment".

II. Calico components

2.1 Introduction to Calico

Calico is a pure Layer 3 network solution based on BGP that integrates well with cloud platforms such as OpenStack, Kubernetes, AWS and GCE. On every compute node, Calico uses the Linux kernel to implement an efficient vRouter that forwards data. Each vRouter advertises the routes of the containers running on its node to the entire Calico network through BGP, and the corresponding routing and forwarding rules are set up automatically on the other nodes.

Calico ensures that all traffic between containers is carried purely by IP routing. Calico nodes can use the data center's existing network fabric (L2 or L3) directly. In pure BGP mode no extra NAT, tunnel or overlay network is needed, so there is no additional encapsulation and decapsulation, which saves CPU and improves network efficiency.

In small clusters, Calico nodes can peer with each other directly; in large clusters, the peering can be done through additional BGP route reflectors. Calico also provides rich iptables-based network policies, implements Kubernetes' NetworkPolicy, and can restrict network reachability between containers.
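The reason route reflectors matter for large clusters is simple arithmetic on the number of BGP sessions. The sketch below uses a simplified model, which is an assumption rather than Calico's exact topology: in a full mesh every node peers with every other node, and with route reflectors every other node peers with each reflector while the reflectors form a full mesh among themselves.

```python
def full_mesh_sessions(nodes: int) -> int:
    """Every node peers with every other node: n(n-1)/2 BGP sessions."""
    return nodes * (nodes - 1) // 2


def route_reflector_sessions(nodes: int, reflectors: int) -> int:
    """Clients peer with every reflector; reflectors are fully meshed among themselves."""
    clients = nodes - reflectors
    return clients * reflectors + full_mesh_sessions(reflectors)


for n in (10, 100, 500):
    print(n, full_mesh_sessions(n), route_reflector_sessions(n, 2))
# 10 45 17
# 100 4950 197
# 500 124750 997
```

The full mesh grows quadratically with the number of nodes, whereas with a fixed number of reflectors the session count grows linearly.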

2.2 Calico architecture

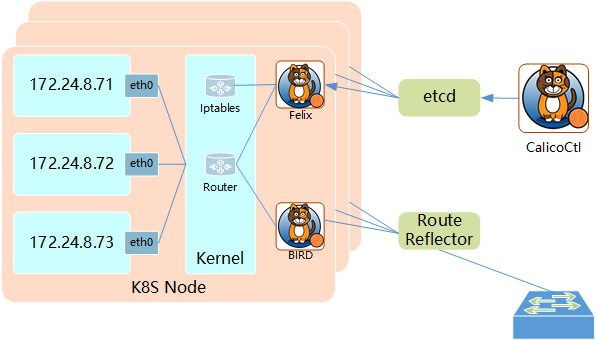

Main components of Calico:

- Felix: the Calico agent. It runs on every node and sets up the network resources for containers (IP addresses, routes, iptables rules, etc.) so that containers on different hosts can reach each other.

- etcd: the backend storage used by Calico.

- BGP Client: advertises the routes that Felix sets up on each node to the rest of the Calico network via BGP.

- Route Reflector: one or more BGP route reflectors that distribute routes hierarchically in large clusters.

- calicoctl: Calico's command-line management tool.

2.3 Calico deployment steps

The main steps to deploy Calico in Kubernetes are as follows:

- Modify the startup parameters of the Kubernetes services and restart them:

  - On the Master, set the kube-apiserver startup parameter --allow-privileged=true (because calico-node must run in privileged mode on every node).

  - On every node, set the kubelet startup parameter --network-plugin=cni (to use a CNI network plugin).

- Create the Calico services, mainly calico-node and the calico policy controller. The following resource objects are created:

  - The ConfigMap calico-config, which holds the configuration parameters Calico needs.

  - The Secret calico-etcd-secrets, used to connect to etcd over TLS.

  - The calico/node container, which runs on every node and is deployed as a DaemonSet.

  - The Calico CNI binaries and network configuration on every node (installed by the install-cni container).

  - A Deployment named calico/kube-policy-controller, which integrates with the Network Policies set for Pods in the Kubernetes cluster.

2.4 Deployment

[root@k8smaster01 ~]# curl https://docs.projectcalico.org/v3.6/getting-started/kubernetes/installation/hosted/canal/canal.yaml -O

[root@k8smaster01 ~]# POD_CIDR="<your-pod-cidr>"

[root@k8smaster01 ~]# sed -i -e "s?10.10.0.0/16?$POD_CIDR?g" canal.yaml
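The sed command performs a plain text substitution and does not check the value it inserts, so an empty or mistyped POD_CIDR silently produces a broken manifest. The sketch below does the same substitution in Python but validates the CIDR first. It is an illustrative alternative, not part of the Calico tooling; the function name and the manifest snippet are hypothetical.

```python
import ipaddress

DEFAULT_CIDR = "10.10.0.0/16"  # the string the sed command replaces


def set_pod_cidr(manifest: str, pod_cidr: str) -> str:
    """Validate pod_cidr, then replace every literal DEFAULT_CIDR in the manifest text."""
    # ip_network raises ValueError for empty or malformed input, or when host bits are set.
    network = ipaddress.ip_network(pod_cidr)
    if network.version != 4:
        raise ValueError("CALICO_IPV4POOL_CIDR expects an IPv4 network")
    return manifest.replace(DEFAULT_CIDR, str(network))


snippet = '- name: CALICO_IPV4POOL_CIDR\n  value: "10.10.0.0/16"\n'
print(set_pod_cidr(snippet, "192.168.0.0/16"))
```

To apply it to the real file, read canal.yaml into a string, call set_pod_cidr, and write the result back.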

The downloaded YAML file contains all the configuration Calico requires. The key parts are analyzed section by section below:

- ConfigMap analysis

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  typha_service_name: "none"
  calico_backend: "bird"
  veth_mtu: "1440"
  cni_network_config: |-
    {
      "name": "k8s-pod-network",
      "cniVersion": "0.3.0",
      "plugins": [
        {
          "type": "calico",
          "log_level": "info",
          "datastore_type": "kubernetes",
          "nodename": "__KUBERNETES_NODE_NAME__",
          "mtu": __CNI_MTU__,
          "ipam": {
              "type": "calico-ipam"
          },
          "policy": {
              "type": "k8s"
          },
          "kubernetes": {
              "kubeconfig": "__KUBECONFIG_FILEPATH__"
          }
        },
        {
          "type": "portmap",
          "snat": true,
          "capabilities": {"portMappings": true}
        }
      ]
    }
```

The main parameters are described as follows.

etcd_endpoints: when etcd is used as Calico's datastore, Calico stores the network topology and state there, and this parameter sets the address of the etcd service. (The ConfigMap above uses the Kubernetes datastore, "datastore_type": "kubernetes", so it has no such entry.)

calico_backend: Calico's networking backend; the default is bird.

cni_network_config: a network configuration that conforms to the CNI specification. type=calico means that kubelet looks for an executable named calico in /opt/cni/bin and calls it to set up the container network. type=calico-ipam in the ipam section means that an executable named calico-ipam in /opt/cni/bin is called (by the calico plugin) to allocate the container's IP address.
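The placeholders in cni_network_config (__KUBERNETES_NODE_NAME__, __CNI_MTU__, __KUBECONFIG_FILEPATH__) are filled in on each node before the file is written to /etc/cni/net.d. The sketch below mimics that substitution on a trimmed copy of the template and lists the binaries whose names come from the type fields. It is an illustration only: the node name and kubeconfig path are hypothetical, and the real install-cni script may differ in detail.

```python
import json

# Trimmed copy of cni_network_config from the ConfigMap above.
TEMPLATE = """{
  "name": "k8s-pod-network",
  "cniVersion": "0.3.0",
  "plugins": [
    {
      "type": "calico",
      "datastore_type": "kubernetes",
      "nodename": "__KUBERNETES_NODE_NAME__",
      "mtu": __CNI_MTU__,
      "ipam": {"type": "calico-ipam"},
      "kubernetes": {"kubeconfig": "__KUBECONFIG_FILEPATH__"}
    },
    {"type": "portmap", "snat": true, "capabilities": {"portMappings": true}}
  ]
}"""


def render_cni_config(template: str, node_name: str, mtu: int, kubeconfig: str) -> dict:
    """Fill the placeholders and parse the result, failing fast if it is not valid JSON."""
    text = (template.replace("__KUBERNETES_NODE_NAME__", node_name)
                    .replace("__CNI_MTU__", str(mtu))
                    .replace("__KUBECONFIG_FILEPATH__", kubeconfig))
    return json.loads(text)


def plugin_binaries(config: dict, bin_dir: str = "/opt/cni/bin") -> list:
    """Executables named by each plugin 'type', plus the IPAM 'type' where present."""
    paths = []
    for plugin in config["plugins"]:
        paths.append(f"{bin_dir}/{plugin['type']}")
        if "ipam" in plugin:
            paths.append(f"{bin_dir}/{plugin['ipam']['type']}")
    return paths


cfg = render_cni_config(TEMPLATE, "k8smaster01", 1440, "/etc/cni/net.d/calico-kubeconfig")
print(plugin_binaries(cfg))
# ['/opt/cni/bin/calico', '/opt/cni/bin/calico-ipam', '/opt/cni/bin/portmap']
```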

Tip: if the etcd service uses TLS authentication, you must also provide the corresponding CA, certificate and key files.

- Secret analysis

```yaml
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system
data:
  # Populate the following with etcd TLS configuration if desired, but leave blank if
  # not using TLS for etcd.
  # The keys below should be uncommented and the values populated with the base64
  # encoded contents of each file that would be associated with the TLS data.
  # Example command for encoding a file contents: cat <file> | base64 -w 0
  # If TLS is configured, you need to set the corresponding certificate and key file path
  # etcd-key: null
  # etcd-cert: null
  # etcd-ca: null
```
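The Secret expects each TLS file as single-line base64, as the `cat <file> | base64 -w 0` hint in the comments shows. A Python equivalent is sketched below. The helper names and the certificate paths in the commented usage line are hypothetical; only the three key names come from the Secret above.

```python
import base64
from pathlib import Path


def encode_for_secret(path: str) -> str:
    """Equivalent of `cat <file> | base64 -w 0`: base64 on one line, no trailing newline."""
    return base64.b64encode(Path(path).read_bytes()).decode("ascii")


def secret_data(ca: str, cert: str, key: str) -> dict:
    """Build the data section of calico-etcd-secrets from the three TLS files."""
    return {
        "etcd-ca": encode_for_secret(ca),
        "etcd-cert": encode_for_secret(cert),
        "etcd-key": encode_for_secret(key),
    }


# Hypothetical paths; adjust to where your etcd certificates actually live.
# data = secret_data("/etc/etcd/ssl/ca.pem", "/etc/etcd/ssl/etcd.pem", "/etc/etcd/ssl/etcd-key.pem")
```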

- DaemonSet analysis

```yaml
kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      nodeSelector:
        beta.kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        - effect: NoSchedule
          operator: Exists
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      terminationGracePeriodSeconds: 0
      initContainers:
        - name: upgrade-ipam
          image: calico/cni:v3.6.0
          command: ["/opt/cni/bin/calico-ipam", "-upgrade"]
          env:
            - name: KUBERNETES_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
          volumeMounts:
            - mountPath: /var/lib/cni/networks
              name: host-local-net-dir
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
        - name: install-cni
          image: calico/cni:v3.6.0
          command: ["/install-cni.sh"]
          env:
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
            - name: KUBERNETES_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CNI_MTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
      containers:
        - name: calico-node
          image: calico/node:v3.6.0
          env:
            - name: DATASTORE_TYPE
              value: "kubernetes"
            - name: WAIT_FOR_DATASTORE
              value: "true"
            - name: NODENAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            - name: IP
              value: "autodetect"
            - name: IP_AUTODETECTION_METHOD
              value: "can-reach=172.24.8.2"
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            - name: CALICO_IPV4POOL_CIDR
              value: "10.10.0.0/16"
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            - name: FELIX_IPV6SUPPORT
              value: "false"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "info"
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          livenessProbe:
            httpGet:
              path: /liveness
              port: 9099
              host: localhost
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
          readinessProbe:
            exec:
              command:
                - /bin/calico-node
                - -bird-ready
                - -felix-ready
            periodSeconds: 10
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /run/xtables.lock
              name: xtables-lock
              readOnly: false
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
      volumes:
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        - name: host-local-net-dir
          hostPath:
            path: /var/lib/cni/networks
```

Besides the upgrade-ipam init container, the Pod contains the following two containers:

- install-cni: installs the CNI binaries into /opt/cni/bin on the node and the corresponding network configuration file into /etc/cni/net.d. It is defined under initContainers and exits once it has finished.

- calico-node: the Calico service, which sets up the Pod network and ensures connectivity between the Pod network and every node. It runs in host network mode (hostNetwork: true), using the host's network directly.

The main parameters of the calico-node container are as follows.

- CALICO_IPV4POOL_CIDR: the IP address pool of Calico IPAM, from which Pod IP addresses are allocated.

- CALICO_IPV4POOL_IPIP: whether IPIP mode is enabled. When it is, Calico creates a virtual tunnel interface named tunl0 on the node.

- IP_AUTODETECTION_METHOD: how the node's IP address is detected. By default, the IP address of the first network interface found is used. On nodes with several NICs, a regular expression can select the right one; for example, "interface=eth.*" selects the address of the NIC whose name starts with eth. The manifest above uses "can-reach=172.24.8.2", which selects the address of the interface used to reach 172.24.8.2.

- FELIX_IPV6SUPPORT: whether IPv6 is enabled.

- FELIX_LOGSEVERITYSCREEN: the log level for screen output.

- securityContext.privileged=true: run in privileged mode.
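The two selection methods described above can be sketched as follows. This is a simplified model and not Calico's implementation: the exclusion list for first-found is a partial, hypothetical one (the real method skips more interface names), the regex is matched from the start of the interface name, and can-reach is not modelled because it needs a real routing lookup. The interface names and addresses are hypothetical.

```python
import re

# Partial, hypothetical exclusion list; the real first-found method skips more names.
EXCLUDED_PREFIXES = ("lo", "docker")


def autodetect_ip(interfaces: dict, method: str = "first-found"):
    """Pick the node IP from {interface_name: ipv4_address} using a simplified method."""
    if method == "first-found":
        for name, ip in interfaces.items():
            if not name.startswith(EXCLUDED_PREFIXES):
                return ip
        return None
    if method.startswith("interface="):
        pattern = re.compile(method[len("interface="):])
        for name, ip in interfaces.items():
            if pattern.match(name):  # regex anchored at the start of the name
                return ip
        return None
    raise ValueError(f"method not modelled in this sketch: {method}")


nics = {"lo": "127.0.0.1", "docker0": "172.17.0.1", "ens33": "172.24.8.71", "eth1": "10.0.0.5"}
print(autodetect_ip(nics))                     # 172.24.8.71
print(autodetect_ip(nics, "interface=eth.*"))  # 10.0.0.5
```

On a multi-NIC host the two methods can disagree, which is why pinning the method explicitly is safer than relying on the default.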

In addition, if RBAC is enabled, set the ServiceAccount for the Pod (serviceAccountName: calico-node in the manifest above).

The IP pool can work in one of two modes: BGP or IPIP. To use IPIP mode, set CALICO_IPV4POOL_IPIP="Always"; to disable it, set CALICO_IPV4POOL_IPIP="Off", and BGP mode is used instead.
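The practical difference between the two modes shows up in the routes each node installs for other nodes' Pod address blocks. The sketch below produces route strings in the general shape printed by `ip route` for BIRD-programmed routes. It is an approximation for illustration: the block 10.10.32.128/26, the node IP 172.24.8.72 and the NIC name eth0 are hypothetical, and the CrossSubnet mode is not modelled.

```python
def calico_route(pod_block: str, node_ip: str, ipip_mode: str, nic: str = "eth0") -> str:
    """Sketch of the route another node installs for pod_block, depending on CALICO_IPV4POOL_IPIP."""
    if ipip_mode == "Always":
        # IPIP: packets are encapsulated and sent through the tunl0 device.
        return f"{pod_block} via {node_ip} dev tunl0 proto bird onlink"
    if ipip_mode == "Off":
        # Pure BGP: packets leave through the physical NIC without encapsulation.
        return f"{pod_block} via {node_ip} dev {nic} proto bird"
    raise ValueError(f"mode not modelled in this sketch: {ipip_mode}")


print(calico_route("10.10.32.128/26", "172.24.8.72", "Always"))
print(calico_route("10.10.32.128/26", "172.24.8.72", "Off"))
```

Pure BGP mode requires the nodes to be directly reachable at Layer 2 (or the underlying routers to know the Pod routes); IPIP hides the Pod addresses inside packets addressed to the node IPs.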

- calico-kube-controllers analysis

The calico-kube-controllers container integrates Calico with the Network Policies set for Pods in the Kubernetes cluster:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: calico-kube-controllers
  namespace: kube-system
  labels:
    k8s-app: calico-kube-controllers
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ''
spec:
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      nodeSelector:
        beta.kubernetes.io/os: linux
      tolerations:
        - key: CriticalAddonsOnly
          operator: Exists
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      serviceAccountName: calico-kube-controllers
      containers:
        - name: calico-kube-controllers
          image: calico/kube-controllers:v3.6.0
          env:
            - name: ENABLED_CONTROLLERS
              value: node
            - name: DATASTORE_TYPE
              value: kubernetes
          readinessProbe:
            exec:
              command:
                - /usr/bin/check-status
                - -r
```

If RBAC is enabled, set the ServiceAccount as well. When a user defines Network Policies for Pods in the Kubernetes cluster, calico-kube-controllers automatically notifies the calico-node service on every node, which sets the corresponding iptables rules on the host and thereby enforces the network access policy between Pods.

[root@k8smaster01 ~]# docker pull calico/cni:v3.9.0

[root@k8smaster01 ~]# docker pull calico/node:v3.9.0

[root@k8smaster01 ~]# docker pull calico/kube-controllers:v3.9.0 # pulling the images in advance is recommended

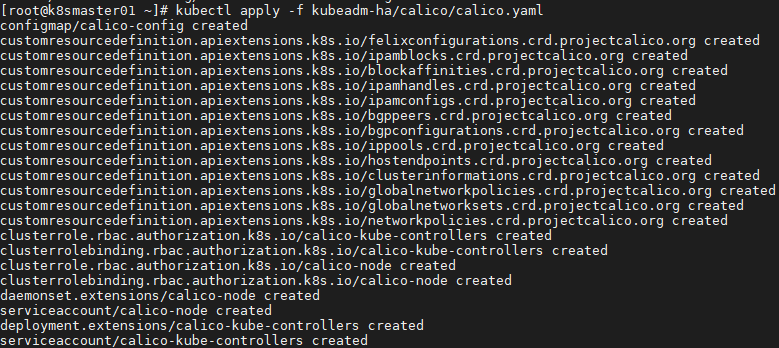

[root@k8smaster01 ~]# kubectl apply -f calico.yaml

2.5 Verification

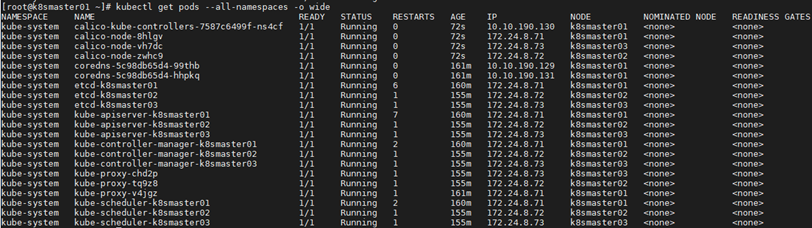

[root@k8smaster01 ~]# kubectl get pods --all-namespaces -o wide # view the deployment

[root@k8smaster01 ~]# ll /etc/cni/net.d/

Once calico-node is running normally, the following files and directories are generated in /etc/cni/net.d/ according to the CNI specification, and the binaries calico and calico-ipam are installed in /opt/cni/bin/ for kubelet to call.

- 10-calico.conflist: the CNI-compliant network configuration, where type=calico indicates that the plugin binary is named calico.

- calico-kubeconfig: the kubeconfig file used by Calico.

- calico-tls directory: files for connecting to etcd over TLS.

[root@k8smaster01 ~]# ifconfig tunl0

```
tunl0: flags=193<UP,RUNNING,NOARP> mtu 1440
        inet 10.10.190.128 netmask 255.255.255.255
        tunnel txqueuelen 1000 (IPIP Tunnel)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
```

Checking the server's network interfaces shows a new interface named tunl0 with the address 10.10.190.128/32. This IP is allocated from the IP address pool configured for calico-node (CALICO_IPV4POOL_CIDR="10.10.0.0/16"). From now on, Kubernetes no longer uses docker0 to assign Pod IP addresses.
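The relationship between the tunl0 address and the pool can be checked with a few lines of Python. The sketch assumes Calico IPAM's default block size of /26, which is worth confirming against the blockSize of your IP pool. Under that assumption, the pool is split into per-node blocks, and the tunl0 address and Pod addresses fall into the block that their node owns.

```python
import ipaddress

POOL = ipaddress.ip_network("10.10.0.0/16")  # CALICO_IPV4POOL_CIDR from the manifest
BLOCK_PREFIX = 26                            # assumption: Calico IPAM's default block size


def block_of(ip: str, prefix: int = BLOCK_PREFIX) -> ipaddress.IPv4Network:
    """Return the IPAM block (owned by one node) that contains ip."""
    addr = ipaddress.ip_address(ip)
    if addr not in POOL:
        raise ValueError(f"{ip} is outside the pool {POOL}")
    return ipaddress.ip_network(f"{ip}/{prefix}", strict=False)


print(block_of("10.10.190.128"))  # 10.10.190.128/26
print(block_of("10.10.32.129"))   # 10.10.32.128/26
```

With /26 blocks, the /16 pool provides 1024 blocks of 64 addresses each.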

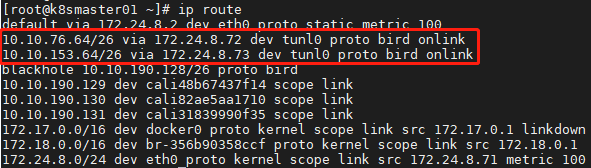

[root@k8smaster01 ~]# ip route # view the routing table

As shown above, there are routes to the Pod address ranges of the other nodes, which means that Calico has set up the container network between nodes. When Pods are created later, kubelet calls Calico through the CNI interface to configure each Pod's network, including its IP address, routes and iptables rules.
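To check programmatically which remote Pod blocks a node can reach, the `ip route` output can be parsed. The sample lines below are hypothetical and only imitate the typical shape of Calico/BIRD routes in IPIP mode. They include a blackhole route for the node's own block, which the sketch deliberately ignores, as it does default and directly connected routes.

```python
import ipaddress

# Hypothetical `ip route` output in the shape Calico/BIRD typically installs.
SAMPLE = """\
default via 172.24.8.2 dev ens33 proto static metric 100
10.10.32.128/26 via 172.24.8.72 dev tunl0 proto bird onlink
blackhole 10.10.190.128/26 proto bird
10.10.154.0/26 via 172.24.8.73 dev tunl0 proto bird onlink
172.24.8.0/24 dev ens33 proto kernel scope link src 172.24.8.71 metric 100
"""


def pod_routes(route_text: str, pool: str = "10.10.0.0/16") -> dict:
    """Map each remote Pod block inside the pool to the node IP it is routed via."""
    net = ipaddress.ip_network(pool)
    routes = {}
    for line in route_text.splitlines():
        fields = line.split()
        # Keep only "<dest> via <gateway> ..." routes; skip blackhole and directly connected ones.
        if len(fields) < 3 or fields[1] != "via" or fields[0] == "default":
            continue
        dest = ipaddress.ip_network(fields[0], strict=False)
        if dest.subnet_of(net):
            routes[str(dest)] = fields[2]
    return routes


print(pod_routes(SAMPLE))
# {'10.10.32.128/26': '172.24.8.72', '10.10.154.0/26': '172.24.8.73'}
```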

If CALICO_IPV4POOL_IPIP="Off" is set, that is, IPIP mode is not used, Calico does not create the tunl0 interface, and the routes forward traffic directly through the host's physical NIC.

2.6 Functional test

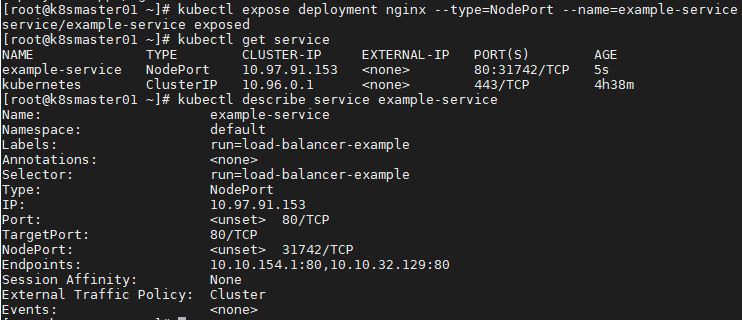

[root@k8smaster01 ~]# kubectl run nginx --replicas=2 --labels="run=load-balancer-example" --image=nginx --port=80

[root@k8smaster01 ~]# kubectl expose deployment nginx --type=NodePort --name=example-service

[root@k8smaster01 ~]# kubectl get service # view the Service status

[root@k8smaster01 ~]# kubectl describe service example-service # view the Service details

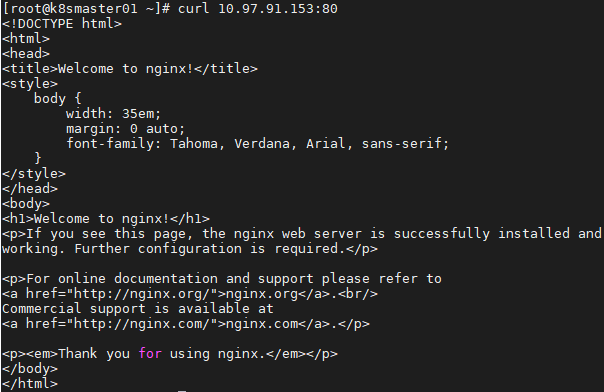

[root@k8smaster01 ~]# curl 10.97.91.153:80

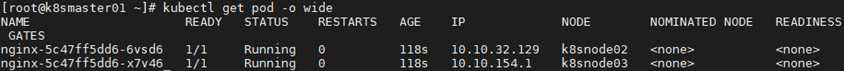

[root@k8smaster01 ~]# kubectl get pods -o wide # view the endpoints

[root@k8smaster01 ~]# curl 10.10.32.129:80 # access an endpoint directly; the result is the same as accessing the Service IP

[root@k8smaster01 ~]# curl 10.10.154.1:80

Deployment reference documents: https://docs.projectcalico.org/v3.6/getting-started/kubernetes/installation/flannel

https://github.com/projectcalico/calico/blob/master/v3.8/getting-started/kubernetes/installation/hosted/calico.yaml