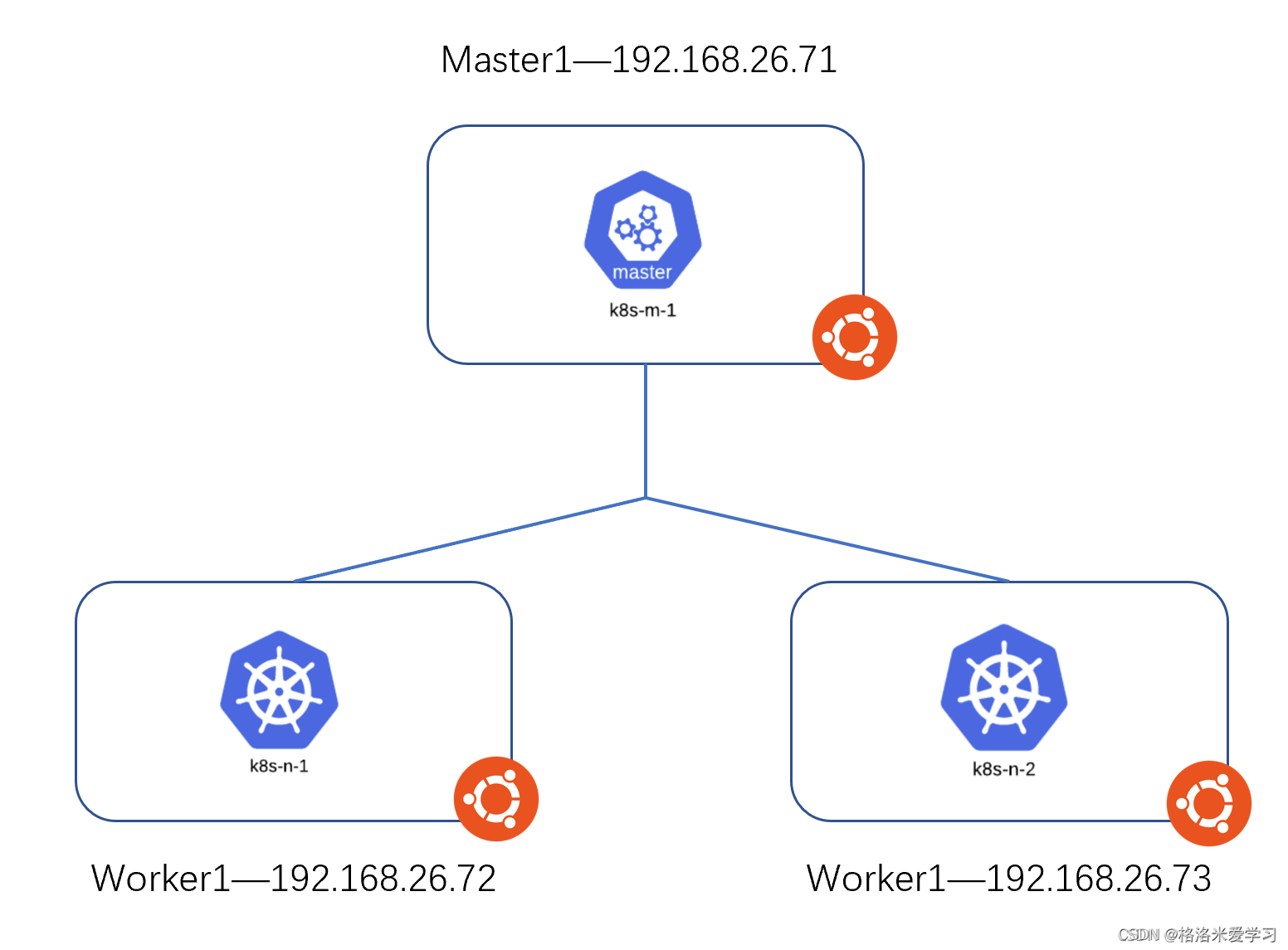

1, Experimental environment

The underlying system is Ubuntu 18.04, with K8s installed on each node to form a cluster. The IP address of the Master node is 192.168.26.71/24, and the two worker nodes use 192.168.26.72/24 and 192.168.26.73/24.

2, PSP Foundation

1. PSP overview

Pod Security Policy (PSP) is a cluster-level resource: it does not belong to any Namespace, although permission to use a given PSP can be limited to a specific Namespace through RBAC. It controls the security-sensitive aspects of a pod specification, such as privileged containers, use of host namespaces, permitted volume types, and the user and group IDs that containers run as.

A Pod can be deployed normally only if it meets the requirements of a PSP. A PSP consists of settings and strategies that control which security features a Pod may use. These settings fall into three categories:

- Boolean-based control: fields of this type default to the most restrictive value;

- Allowed-value-set control: the Pod's value is checked against the set to confirm that it is allowed;

- Strategy-based control: a strategy provides a mechanism to generate the value and a mechanism to ensure that a specified value falls within the set of allowed values.
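
As an illustration of the three categories, here is a minimal sketch of a PSP that uses one or two fields of each kind. The policy name and the specific values (capability, UID range) are assumptions chosen for the example, not settings used elsewhere in this note.

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: control-types-demo        # hypothetical name, for illustration only
spec:
  # Boolean-based controls: when omitted they default to false, the most restrictive value.
  privileged: false
  hostNetwork: false
  # Allowed-value-set controls: the pod's values are checked against these lists.
  volumes:
  - configMap
  - secret
  - emptyDir
  allowedCapabilities:
  - NET_BIND_SERVICE              # example value only
  # Strategy-based controls: a rule validates the value and can generate a default.
  runAsUser:
    rule: MustRunAs
    ranges:
    - min: 1000                   # example range only
      max: 2000
  fsGroup:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
```

With a policy like this, a pod that requests a hostPath volume or a UID outside the range would be expected to fail validation.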

Also note that, because it proved difficult to use, PodSecurityPolicy was deprecated in Kubernetes v1.21 and removed in v1.25, where it is replaced by the simpler Pod Security admission controller. For details, see https://kubernetes.io/blog/2021/04/06/podsecuritypolicy-deprecation-past-present-and-future/. On versions that still support it, PSP must be enabled as an admission plugin on the API server before it can be used.
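
For comparison only, the replacement mechanism is configured with labels on a Namespace rather than with a separate policy object. The sketch below assumes a cluster where Pod Security admission is available; the namespace name is hypothetical and this mechanism is not used in the experiments in this note.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: psa-demo                  # hypothetical namespace name
  labels:
    # Reject pods that violate the "baseline" Pod Security Standard.
    pod-security.kubernetes.io/enforce: baseline
    # Only warn about pods that would violate the stricter "restricted" standard.
    pod-security.kubernetes.io/warn: restricted
```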

2. PSP workflow

Whether a pod is created by a user account or by a service account (such as the one used on behalf of a Deployment), the request passes through the admission controller. If PSP is enabled there and no rules have been defined, every pod-creation request from a K8s account (including admin) is rejected. Once PSP rules exist, a request must satisfy a PSP rule before the pod is admitted; otherwise it is still rejected.

Note that if a User Account or Service Account that we created has not been granted access to a PSP, no PSP is available to validate its requests, so they are rejected. The default K8s admin account has all permissions and can therefore use every PSP in the cluster.

Therefore, when PSP is enabled, deploying a pod requires all of the following:

- The K8s user has permission to create pods;

- The K8s user has permission to use a PSP;

- The Pod passes validation against a PSP that the user can use.
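
The first two conditions are plain RBAC permissions. As a minimal sketch, they could be expressed in a single ClusterRole like the one below; the role name and the PSP name `example-psp` are hypothetical. The third condition is not an RBAC rule: it is checked by the PSP admission plugin when the pod is submitted.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-creator-with-psp      # hypothetical name
rules:
# Condition 1: permission to create (and manage) pods.
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["create", "get", "list", "watch", "delete"]
# Condition 2: permission to use one specific PSP.
- apiGroups: ["policy"]
  resources: ["podsecuritypolicies"]
  resourceNames: ["example-psp"]  # hypothetical PSP name
  verbs: ["use"]
```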

3. Enabling PSP

Enable PSP only after confirming that the K8s cluster is working normally. If PSP is enabled before the cluster's system pods have started, none of them can be created and the cluster will not work.

On the Master, edit the /etc/kubernetes/manifests/kube-apiserver.yaml file and add PodSecurityPolicy to the --enable-admission-plugins flag under command:

    command:
    - --enable-admission-plugins=NodeRestriction,PodSecurityPolicy
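
To show where that flag sits in the file, here is an abbreviated sketch of the static pod manifest, assuming the usual kubeadm layout. All other flags and fields are omitted and must be left unchanged in the real file.

```yaml
# /etc/kubernetes/manifests/kube-apiserver.yaml (excerpt, other fields omitted)
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    # Keep every existing flag; only the plugin list on this line changes.
    - --enable-admission-plugins=NodeRestriction,PodSecurityPolicy
```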

Then restart kubelet and check whether it starts successfully:

    systemctl restart kubelet.service
    root@vms71:~/psp# kubectl get psp
    Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
    NAME         PRIV    CAPS      SELINUX    RUNASUSER   FSGROUP     SUPGROUP    READONLYROOTFS   VOLUMES
    controller   false             RunAsAny   MustRunAs   MustRunAs   MustRunAs   true             configMap,secret,emptyDir
    speaker      true    NET_RAW   RunAsAny   RunAsAny    RunAsAny    RunAsAny    true             configMap,secret,emptyDir

Delete the two existing PSP rules so that they do not interfere with the following experiments:

    root@vms71:~/psp# kubectl delete psp controller
    Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
    podsecuritypolicy.policy "controller" deleted
    root@vms71:~/psp# kubectl delete psp speaker
    Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
    podsecuritypolicy.policy "speaker" deleted

Next, we try to create a pod with the admin account and check the results:

    root@vms71:~/psp# kubectl run pod1 --image=nginx --image-pull-policy=IfNotPresent
    Error from server (Forbidden): pods "pod1" is forbidden: PodSecurityPolicy: no providers available to validate pod request

Even with the administrator account, the pod cannot be created. The error message "PodSecurityPolicy: no providers available to validate pod request" shows that the request was blocked by PodSecurityPolicy, because no PSP exists to validate it.

3, Practice 1: restrict the deployment of privileged pods

The K8s account used in this experiment is testuser, which was created in a previous note (https://blog.csdn.net/tushanpeipei/article/details/121877797?spm=1001.2014.3001.5501); its kubeconfig file is kc1. At this point, testuser does not have any permissions.

Now use the administrator account to create a PSP that forbids privileged pods. The yaml file is as follows:

    root@vms71:~/psp# cat privileged.yaml
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: deny-privilege
    spec:
      privileged: false  # Don't allow privileged pods!
      # The rest fills in some required fields.
      seLinux:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      runAsUser:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      volumes:
      - '*'
    root@vms71:~/psp# kubectl apply -f privileged.yaml
    Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
    podsecuritypolicy.policy/deny-privilege created

This policy places few restrictions on anything else: a pod may mount volumes of any type, and there are no limits on the UID or GID used in the pod. However, privileged is set to false, which means that pods with privileged containers are not allowed.

Next, we continue to create a clusterrole for testuser using the following yaml file:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      creationTimestamp: null
      name: crole-pod
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
      - delete
      - list
      - create
      - watch

This clusterrole allows common operations on pods. Next, we need to bind it to testuser. The command is as follows:

    kubectl create clusterrolebinding crolebinding-pod --clusterrole=crole-pod --user=testuser
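
For readers who prefer to keep bindings in files, the same binding can be written declaratively. This is a sketch of the manifest that should be equivalent to the command above, using the names from this note; it can be applied with kubectl apply -f.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: crolebinding-pod
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: crole-pod
subjects:
# Bind to the user account, not to a service account.
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: testuser
```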

When testuser now tries to create a non-privileged pod, the request still fails, because testuser does not have permission to use the PSP we created:

    root@vms71:~/psp# kubectl run pod1 --image=nginx --image-pull-policy=IfNotPresent --kubeconfig=kc1
    Error from server (Forbidden): pods "pod1" is forbidden: PodSecurityPolicy: unable to admit pod: []

Therefore, we also need a clusterrole that grants permission to use our PSP, and we need to bind it to testuser. The following shows how to generate the yaml file and its content:

    root@vms71:~/psp# kubectl create clusterrole psp-deny-privilege --verb=use --resource=psp --resource-name=deny-privilege --dry-run=client -o yaml > cluster-role-psp-deny-privilege.yaml
    root@vms71:~/psp# cat cluster-role-psp-deny-privilege.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      creationTimestamp: null
      name: psp-deny-privilege
    rules:
    - apiGroups:
      - policy
      resourceNames:
      - deny-privilege
      resources:
      - podsecuritypolicies
      verbs:
      - use

After creating the psp-deny-privilege clusterrole from the yaml file above, create a clusterrolebinding that binds it to testuser:

    kubectl create clusterrolebinding crolebinding-psp-privilege --clusterrole=psp-deny-privilege --user=testuser
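
A ClusterRoleBinding lets testuser use the PSP in every namespace. If the intent is to allow it in one namespace only, a RoleBinding that references the same ClusterRole is an alternative. The sketch below assumes the default namespace and uses a hypothetical binding name; it is not part of the original experiment.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbinding-psp-privilege    # hypothetical name
  namespace: default              # PSP use is granted only for pods in this namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: psp-deny-privilege
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: testuser
```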

Now use testuser to create a pod again and see whether it succeeds:

    root@vms71:~/psp# kubectl run pod1 --image=nginx --image-pull-policy=IfNotPresent --kubeconfig=kc1
    pod/pod1 created

The pod is now created normally. Next, define a privileged pod with the following yaml file:

    apiVersion: v1
    kind: Pod
    metadata:
      name: privileged
    spec:
      containers:
      - name: pod1
        image: nginx
        securityContext:
          privileged: true

Use testuser to create this pod, and the results are as follows:

    root@vms71:~/psp# kubectl apply -f privileged-pod.yaml --kubeconfig=kc1
    Error from server (Forbidden): error when creating "privileged-pod.yaml": pods "privileged" is forbidden: PodSecurityPolicy: unable to admit pod: [spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed]

The error message "Invalid value: true: Privileged containers are not allowed" shows that the PSP rule blocked the request to create a privileged pod.

4, Practice 2: deploy pod with Deployment

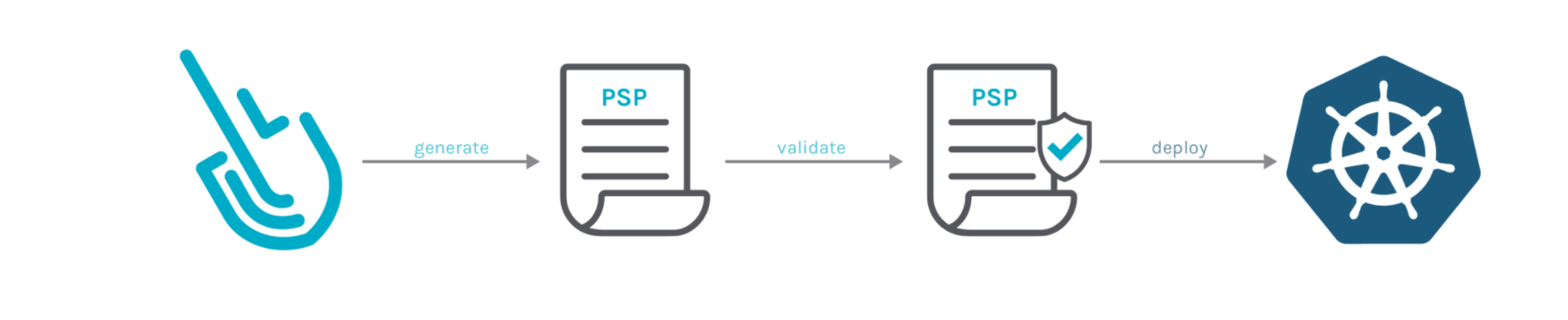

A K8s user can also deploy pods indirectly, through a Deployment or another controller. After receiving our request, the controller uses its own Service Account (SA) to carry out the subsequent operations. The figure above shows the specific steps.

Consequently, if the controller's SA is not authorized to use a PSP that admits the pods, the pods cannot be created, even when the Deployment itself is submitted with the default admin account. The verification steps are as follows:

Currently, the deny-privilege PSP still exists in the environment:

    root@vms71:~/psp# kubectl get psp
    Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
    NAME             PRIV    CAPS   SELINUX    RUNASUSER   FSGROUP    SUPGROUP   READONLYROOTFS   VOLUMES
    deny-privilege   false          RunAsAny   RunAsAny    RunAsAny   RunAsAny   false            *

Then, with the admin account, create a Deployment from the following yaml file:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: web1
    spec:
      replicas: 3
      selector:
        matchLabels:
          app1: web1
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app1: web1
            app2: web1
        spec:
          containers:
          - image: nginx
            name: nginx
            imagePullPolicy: IfNotPresent
            resources: {}
    status: {}

After this yaml file is applied, the web1 Deployment is created successfully, but listing the Deployment and the pods shows that no pod has been deployed:

    root@vms71:~/psp# kubectl get deployments.apps web1
    NAME   READY   UP-TO-DATE   AVAILABLE   AGE
    web1   0/3     0            0           10s
    root@vms71:~/psp# kubectl get pods
    No resources found in default namespace.

The reason is that the SA used by the controller does not have permission to use the PSP, so we need to grant it. First, create a clusterrole with permission to use the PSP (it is the same psp-deny-privilege clusterrole as in Practice 1). The yaml file is as follows:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      creationTimestamp: null
      name: psp-deny-privilege
    rules:
    - apiGroups:
      - policy
      resourceNames:
      - deny-privilege
      resources:
      - podsecuritypolicies
      verbs:
      - use

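Then use a clusterrolebinding to bind this clusterrole to the system:serviceaccounts group. Note that this group contains every SA in every namespace (including the SA used by the controller), and that the -n kube-system flag has no effect here because a clusterrolebinding is cluster-scoped. If the crolebinding-psp-privilege binding from Practice 1 still exists, delete it first or choose a different name:

    kubectl create clusterrolebinding crolebinding-psp-privilege --clusterrole=psp-deny-privilege --group=system:serviceaccounts -n kube-system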
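
If the goal is to grant PSP access only to the service accounts in the kube-system namespace, the group name can carry the namespace as a suffix. The following is a sketch with a hypothetical binding name. Whether this narrower binding is enough for the Deployment to create its pods depends on how the controller manager authenticates in a given cluster, and it was not tested in this experiment.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: crolebinding-psp-kube-system-sa   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: psp-deny-privilege
subjects:
# Only service accounts in kube-system, instead of all service accounts.
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:serviceaccounts:kube-system
```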

After completing the configuration, reapply the yaml file of the Deployment and check whether the pods are deployed normally:

    root@vms71:~/psp# kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    web1-6748654d8d-6f26l   1/1     Running   0          5s
    web1-6748654d8d-6zzb8   1/1     Running   0          5s
    web1-6748654d8d-ph89j   1/1     Running   0          5s
    root@vms71:~/psp# kubectl get deployments.apps web1
    NAME   READY   UP-TO-DATE   AVAILABLE   AGE
    web1   3/3     3            3           10s

It can be seen that the Deployment has successfully deployed the pod and the test is successful.

5, Other PSP policies

The following restrictive policy comes from the official K8s documentation. It requires containers to run as a non-root user, blocks possible escalation to root, and requires the use of several security mechanisms.

    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: restricted
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default,runtime/default'
        apparmor.security.beta.kubernetes.io/allowedProfileNames: 'runtime/default'
        seccomp.security.alpha.kubernetes.io/defaultProfileName: 'runtime/default'
        apparmor.security.beta.kubernetes.io/defaultProfileName: 'runtime/default'
    spec:
      privileged: false
      # Required to prevent escalations to root.
      allowPrivilegeEscalation: false
      # This is redundant with non-root + disallow privilege escalation,
      # but we can provide it for defense in depth.
      requiredDropCapabilities:
        - ALL
      # Allow core volume types.
      volumes:
        - 'configMap'
        - 'emptyDir'
        - 'projected'
        - 'secret'
        - 'downwardAPI'
        # Assume that persistentVolumes set up by the cluster admin are safe to use.
        - 'persistentVolumeClaim'
      hostNetwork: false
      hostIPC: false
      hostPID: false
      runAsUser:
        # Require the container to run without root privileges.
        rule: 'MustRunAsNonRoot'
      seLinux:
        # This policy assumes the nodes are using AppArmor rather than SELinux.
        rule: 'RunAsAny'
      supplementalGroups:
        rule: 'MustRunAs'
        ranges:
          # Forbid adding the root group.
          - min: 1
            max: 65535
      fsGroup:
        rule: 'MustRunAs'
        ranges:
          # Forbid adding the root group.
          - min: 1
            max: 65535
      readOnlyRootFilesystem: false

For the explanation of specific fields, please refer to: https://kubernetes.io/zh/docs/concepts/policy/pod-security-policy/#example-policies
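
To make the restricted policy more concrete, here is a sketch of a pod that would be expected to pass it. The pod name, image, command, and the specific UID and GID values are assumptions chosen for illustration, and the manifest has not been verified against a live cluster.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: restricted-demo           # hypothetical name
spec:
  securityContext:
    runAsNonRoot: true            # satisfies MustRunAsNonRoot
    runAsUser: 1000               # any non-zero UID
    fsGroup: 2000                 # within the allowed 1-65535 range
    supplementalGroups: [3000]    # within the allowed 1-65535 range
  containers:
  - name: app
    image: busybox                # example image only
    command: ["sleep", "3600"]
    securityContext:
      allowPrivilegeEscalation: false
      capabilities:
        drop: ["ALL"]             # matches requiredDropCapabilities
```

A pod whose image runs as root and sets no runAsUser, such as the default nginx image, would be expected to fail to start under this policy.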

Reference sources:

K8s pod-security-policy: https://kubernetes.io/zh/docs/concepts/policy/pod-security-policy/

Sysdig: https://sysdig.com/blog/psp-in-production/

Old section CKS course