Chapter 4 Using the IDEA Environment

The Spark shell is mostly used for testing and validating programs. In production, programs are usually written in an IDE, packaged into a jar, and submitted to the cluster. The most common approach is to create a Maven project and let Maven manage the jar dependencies.

4.1 Writing a WordCount Program in IDEA

1) Create a Maven project named WordCount and add the following to its pom.xml

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.11</artifactId>
        <version>2.1.1</version>
    </dependency>
</dependencies>

<build>
    <finalName>WordCount</finalName>
    <plugins>
        <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.2</version>
            <executions>
                <execution>
                    <goals>
                        <goal>compile</goal>
                        <goal>testCompile</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-assembly-plugin</artifactId>
            <version>3.0.0</version>
            <configuration>
                <archive>
                    <manifest>
                        <!-- Replace with the fully qualified name of your main class -->
                        <mainClass>com.atguigu.WordCount</mainClass>
                    </manifest>
                </archive>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
```

2) Write the code

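```scala
package com.atguigu

import org.apache.spark.{SparkConf, SparkContext}

object WordCount {
  def main(args: Array[String]): Unit = {
    // Create a SparkConf and set the application name
    val conf = new SparkConf().setAppName("WC")

    // Create the SparkContext, the entry point for submitting a Spark application
    val sc = new SparkContext(conf)

    // Use sc to create an RDD, apply the transformations, then run the action
    // args(0): input path, args(1): output path
    sc.textFile(args(0))
      .flatMap(_.split(" "))     // split each line into words
      .map((_, 1))               // pair each word with a count of 1
      .reduceByKey(_ + _, 1)     // sum the counts per word into 1 partition
      .sortBy(_._2, false)       // sort by count, descending
      .saveAsTextFile(args(1))   // action: write the result to the output path

    sc.stop()
  }
}
```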
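To see what each step of the pipeline computes without a cluster, here is a plain-Scala sketch that applies the same chain to an in-memory `Seq[String]`. It is an analogue, not Spark code: `groupBy` followed by a per-key sum stands in for `reduceByKey`, which Spark performs per partition before shuffling. The object name `LocalWordCount` is hypothetical.

```scala
// Plain Scala collections analogue of the RDD pipeline in WordCount (no Spark needed).
object LocalWordCount {
  def wordCount(lines: Seq[String]): Seq[(String, Int)] =
    lines
      .flatMap(_.split(" "))                                    // like flatMap: one element per word
      .map((_, 1))                                              // like map: pair each word with 1
      .groupBy(_._1)                                            // group the pairs by word...
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // ...and sum them (reduceByKey analogue)
      .toSeq
      .sortBy(-_._2)                                            // descending count, like sortBy(_._2, false)

  def main(args: Array[String]): Unit =
    wordCount(Seq("hello spark", "hello scala")).foreach(println)
}
```

Running `main` prints `(hello,2)` first; the two words with count 1 follow in no guaranteed order, because `groupBy` produces an unordered `Map`.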

3) Package the project and test it on the cluster

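```bash
bin/spark-submit \
  --class com.atguigu.WordCount \
  --master spark://hadoop102:7077 \
  WordCount.jar \
  /word.txt \
  /out
```

The output directory `/out` must not already exist, or `saveAsTextFile` fails.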
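The two values after the jar name, `/word.txt` and `/out`, reach the program as `args(0)` and `args(1)`. If one is missing, indexing `args` throws an `ArrayIndexOutOfBoundsException`, but only after the `SparkContext` has already started. A small guard such as the hypothetical `WordCountArgs.parse` below (not part of the original program, and shown without the Spark calls) fails fast with a usage message instead.

```scala
// Hypothetical argument guard for WordCount: expects <input path> <output path>.
object WordCountArgs {
  final case class Paths(input: String, output: String)

  def parse(args: Array[String]): Either[String, Paths] = args match {
    case Array(input, output) => Right(Paths(input, output))
    case _                    => Left("Usage: WordCount <input path> <output path>")
  }

  def main(args: Array[String]): Unit = parse(args) match {
    case Right(paths) =>
      // In the real program, create the SparkContext here and use paths.input / paths.output
      println(s"input=${paths.input}, output=${paths.output}")
    case Left(usage) =>
      System.err.println(usage)
      sys.exit(1)
  }
}
```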

4.2 Local Debugging

Debugging a Spark program locally requires local mode, in which the local machine acts as the whole cluster (the driver and the executors run in a single local JVM). You can then set breakpoints and debug directly, as follows.

When creating the SparkConf, also set the master so that the program runs locally:

```scala
val conf = new SparkConf().setAppName("WC").setMaster("local[*]")
```
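One caveat: properties set directly on a `SparkConf` take precedence over `spark-submit` flags, so a hardcoded `setMaster("local[*]")` would also override `--master` if the same jar were submitted to the cluster. Spark's `SparkConf.setIfMissing("spark.master", "local[*]")` keeps a master supplied by `spark-submit` and falls back to local mode otherwise. The sketch below models that behavior on a plain `Map` so it runs without Spark; `ConfSketch` is a hypothetical name.

```scala
// Models SparkConf.setIfMissing on an immutable Map (no Spark dependency).
object ConfSketch {
  // Keep an existing value for `key`; otherwise add the default
  def setIfMissing(conf: Map[String, String], key: String, value: String): Map[String, String] =
    if (conf.contains(key)) conf else conf + (key -> value)

  def main(args: Array[String]): Unit = {
    // Run from IDEA: no master supplied, so local mode is used
    println(setIfMissing(Map.empty, "spark.master", "local[*]"))
    // Submitted with --master spark://hadoop102:7077: the supplied value is kept
    println(setIfMissing(Map("spark.master" -> "spark://hadoop102:7077"), "spark.master", "local[*]"))
  }
}
```

In Spark itself, the equivalent would be `new SparkConf().setAppName("WC").setIfMissing("spark.master", "local[*]")`.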

On Windows, if the program uses Hadoop functionality, such as writing files to HDFS, the following exception occurs:

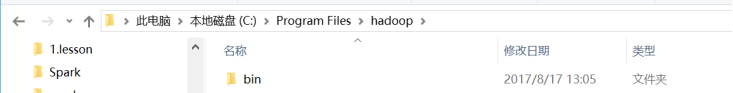

This is not a bug in the program; it happens because the Hadoop client libraries expect Hadoop's Windows binaries to be available. To fix it, unzip the accompanying hadoop-common-bin-2.7.3-x64.zip to any directory.

Then, in IDEA's Run Configuration, add an environment variable HADOOP_HOME that points to the unzipped directory.
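Before launching, it can help to confirm that the setting is actually visible to the program. The sketch below resolves the Hadoop home in the same order as Hadoop 2.x's `Shell` utility (the `hadoop.home.dir` system property first, then the `HADOOP_HOME` environment variable) and checks for `bin\winutils.exe`. It assumes the unzipped archive contains that file; `HadoopHomeCheck` is a hypothetical helper, not part of Spark or Hadoop.

```scala
import java.nio.file.{Files, Paths}

// Hypothetical pre-flight check for local runs on Windows.
object HadoopHomeCheck {
  // Same lookup order as Hadoop 2.x: system property, then environment variable
  def hadoopHome(): Option[String] =
    sys.props.get("hadoop.home.dir").orElse(sys.env.get("HADOOP_HOME"))

  // Assumes the unzipped archive provides bin\winutils.exe
  def winutilsPresent(home: String): Boolean =
    Files.isRegularFile(Paths.get(home, "bin", "winutils.exe"))

  def main(args: Array[String]): Unit = hadoopHome() match {
    case Some(home) if winutilsPresent(home) => println(s"OK: $home")
    case Some(home) => println(s"winutils.exe not found under $home\\bin")
    case None       => println("Neither hadoop.home.dir nor HADOOP_HOME is set")
  }
}
```

Setting `System.setProperty("hadoop.home.dir", ...)` at the start of `main` is an alternative to the environment variable, as long as it runs before the SparkContext is created.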

4.3 Remote Debugging

Remote debugging from IDEA runs the application's driver inside IDEA, while the executors run on the cluster. Configure it as follows:

Modify the SparkConf: set the Master URL to submit to, and add the path of the jar containing the program, which Spark ships to the executors:

```scala
val conf = new SparkConf().setAppName("WC")
  .setMaster("spark://hadoop102:7077")
  .setJars(List("E:\\SparkIDEA\\spark_test\\target\\WordCount.jar"))
```
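Because the jar path is hardcoded, the jar must be rebuilt with `mvn package` after every code change; otherwise the executors load outdated classes. A hypothetical check such as `JarCheck` below (plain Scala, not a Spark API) fails fast when a file passed to `setJars` does not exist.

```scala
import java.io.File

// Hypothetical guard: verify that every jar intended for setJars exists locally.
object JarCheck {
  def missingJars(jars: Seq[String]): Seq[String] =
    jars.filterNot(path => new File(path).isFile)

  def main(args: Array[String]): Unit = {
    val jars = List("E:\\SparkIDEA\\spark_test\\target\\WordCount.jar")
    val missing = missingJars(jars)
    if (missing.nonEmpty) {
      System.err.println(s"Build the jar first (mvn package); missing: ${missing.mkString(", ")}")
      sys.exit(1)
    }
    // Otherwise continue with: new SparkConf().setAppName("WC").setMaster(...).setJars(jars)
    println("All jars found")
  }
}
```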

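Then set breakpoints and start debugging. Breakpoints in driver code are hit in IDEA; code inside RDD operations such as `flatMap` runs on the executors and does not stop at IDEA breakpoints.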