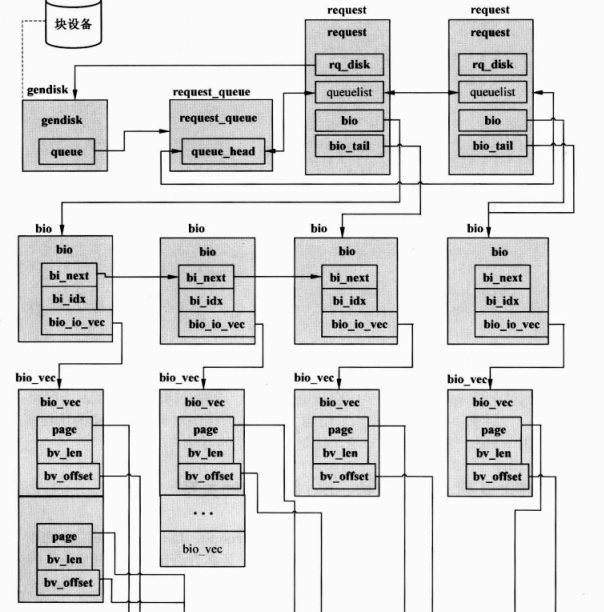

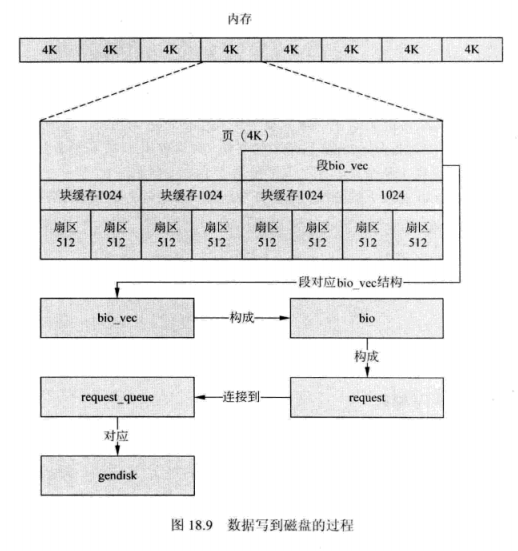

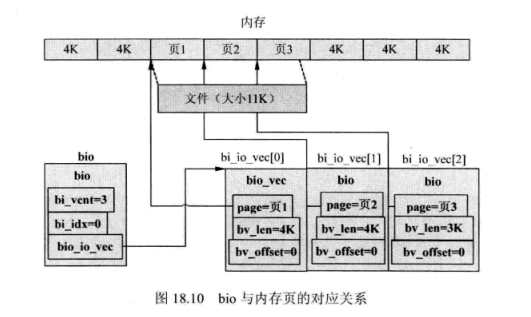

Memory is a linear structure, and Linux divides it into pages. A page can be as large as 64KB, but 4KB pages are the norm on current mainstream systems. The data in each page is wrapped in a segment, which is represented by a bio_vec. Multiple segments form an array of bio_vec elements, which a bio reaches through its bi_io_vec pointer.
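
To make the page-to-segment mapping concrete, here is a minimal user-space sketch that splits a byte range into per-page segments. The names `sketch_bio_vec`, `sketch_build_vecs` and `SKETCH_PAGE_SIZE` are hypothetical; the kernel's bio_vec stores a page pointer rather than a page index, and a 4KB page size is assumed.

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_PAGE_SIZE 4096u /* assumed page size, as in the text */

/* Simplified stand-in for the kernel's bio_vec (hypothetical layout). */
struct sketch_bio_vec {
    size_t page_index;   /* which page holds the segment */
    unsigned int len;    /* number of bytes in the segment */
    unsigned int offset; /* byte offset of the data inside the page */
};

/*
 * Split the byte range [start, start + len) into one segment per page.
 * Returns the number of segments written, or 0 if len is 0 or more
 * than max_vecs segments would be needed.
 */
static size_t sketch_build_vecs(size_t start, size_t len,
                                struct sketch_bio_vec *vecs, size_t max_vecs)
{
    size_t n = 0;

    assert(vecs != NULL);
    while (len > 0) {
        unsigned int off = (unsigned int)(start % SKETCH_PAGE_SIZE);
        size_t room = SKETCH_PAGE_SIZE - off;   /* bytes left in this page */
        size_t chunk = len < room ? len : room;

        if (n == max_vecs)
            return 0;
        vecs[n].page_index = start / SKETCH_PAGE_SIZE;
        vecs[n].offset = off;
        vecs[n].len = (unsigned int)chunk;
        n++;
        start += chunk;
        len -= chunk;
    }
    return n;
}
```

A range that starts in the middle of a page produces a short first segment, then full pages, then a short tail, which is why each segment carries its own offset and length.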

bi_io_vec is a pointer field in the bio. One or more bios make up a request descriptor (request). A new request is either linked into the request queue (request_queue) or merged into a request that is already in that queue. Two requests can be merged when the sector ranges they cover are adjacent.

1. Block I/O Request (bio)

Moving data from memory to disk, or from disk to memory, is called an I/O operation. The kernel describes each block I/O operation with a core data structure, the bio. The bio points to an array of segments (bi_io_vec), which hold the data to be transferred.

    /*
     * main unit of I/O for the block layer and lower layers (ie drivers and
     * stacking drivers)
     */
    struct bio {
        /* first sector to be transferred */
        sector_t bi_sector; /* device address in 512 byte sectors */
        /* next bio in the same request */
        struct bio *bi_next; /* request queue link */
        struct block_device *bi_bdev; /* block device this bio targets */
        unsigned long bi_flags; /* status, command, etc */
        unsigned long bi_rw; /* bottom bits READ/WRITE,
                              * top bits priority
                              */
        unsigned short bi_vcnt; /* how many bio_vec's */
        unsigned short bi_idx; /* current index into bvl_vec */

        /* Number of segments in this BIO after
         * physical address coalescing is performed.
         */
        unsigned short bi_phys_segments;

        /* Number of segments after physical and DMA remapping
         * hardware coalescing is performed.
         */
        unsigned short bi_hw_segments;

        unsigned int bi_size; /* residual I/O count */

        /*
         * To keep track of the max hw size, we account for the
         * sizes of the first and last virtually mergeable segments
         * in this bio
         */
        unsigned int bi_hw_front_size;
        unsigned int bi_hw_back_size;

        unsigned int bi_max_vecs; /* max bvl_vecs we can hold */
        struct bio_vec *bi_io_vec; /* the actual vec list */

        bio_end_io_t *bi_end_io;
        atomic_t bi_cnt; /* pin count */
        void *bi_private;
        bio_destructor_t *bi_destructor; /* destructor */
    };
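
How bi_idx, bi_vcnt and bi_io_vec work together can be shown with a small user-space model. The `sketch_*` types and functions are hypothetical and keep only the fields used here; the 512-byte sector size comes from the bi_sector comment above.

```c
#include <assert.h>
#include <stddef.h>

/* Reduced stand-ins for bio_vec and bio (hypothetical, user-space only). */
struct sketch_bio_vec {
    unsigned int bv_len;    /* bytes in this segment */
    unsigned int bv_offset; /* offset of the data inside its page */
};

struct sketch_bio {
    unsigned short bi_vcnt;           /* number of segments */
    unsigned short bi_idx;            /* index of the current segment */
    struct sketch_bio_vec *bi_io_vec; /* the segment array */
};

/* Bytes still to transfer: segments from bi_idx up to bi_vcnt. */
static unsigned int sketch_bio_remaining(const struct sketch_bio *bio)
{
    unsigned int total = 0;

    for (unsigned short i = bio->bi_idx; i < bio->bi_vcnt; i++)
        total += bio->bi_io_vec[i].bv_len;
    return total;
}

/* 512-byte sectors in the current segment. */
static unsigned int sketch_bio_cur_sectors(const struct sketch_bio *bio)
{
    assert(bio->bi_idx < bio->bi_vcnt);
    return bio->bi_io_vec[bio->bi_idx].bv_len >> 9;
}
```

In this model the value returned by `sketch_bio_remaining` plays the role of bi_size, the residual I/O count: as bi_idx advances past completed segments, the remaining byte count shrinks.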

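bio-related macros

    /* get the page pointer of the current segment */
    bio_page(bio)
    /* get the offset of the data within the current page */
    bio_offset(bio)
    /* get the number of sectors to transfer in the current segment */
    bio_cur_sectors(bio)

2. Request structure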

Several consecutive pages form a bio, and several adjacent bios form a request. Because the sectors covered by one request are contiguous, the disk head does not have to move far, which shortens the I/O operation.
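
The way a request chains its bios can be modelled as a singly linked list with head and tail pointers. The sketch below is a user-space illustration with hypothetical `sketch_*` names; it keeps only the fields needed to show the chain and assumes 512-byte sectors.

```c
#include <assert.h>
#include <stddef.h>

/* Reduced stand-ins for bio and request (hypothetical, user-space only). */
struct sketch_bio {
    unsigned long long bi_sector; /* first sector of this bio */
    unsigned int bi_size;         /* size of this bio in bytes */
    struct sketch_bio *bi_next;   /* next bio in the same request */
};

struct sketch_request {
    unsigned long long sector;  /* first sector of the request */
    unsigned long nr_sectors;   /* total sectors in the request */
    struct sketch_bio *bio;     /* first bio in the chain */
    struct sketch_bio *biotail; /* last bio in the chain */
};

/* Start a request from a single bio. */
static void sketch_rq_init(struct sketch_request *rq, struct sketch_bio *bio)
{
    bio->bi_next = NULL;
    rq->bio = rq->biotail = bio;
    rq->sector = bio->bi_sector;
    rq->nr_sectors = bio->bi_size >> 9;
}

/* Append bio at the tail if it starts where the request ends.
 * Returns 1 on success and 0 if the bio is not contiguous. */
static int sketch_rq_append(struct sketch_request *rq, struct sketch_bio *bio)
{
    if (rq->sector + rq->nr_sectors != bio->bi_sector)
        return 0;
    bio->bi_next = NULL;
    rq->biotail->bi_next = bio;
    rq->biotail = bio;
    rq->nr_sectors += bio->bi_size >> 9;
    return 1;
}

/* Walk the chain from the first bio and count its elements. */
static size_t sketch_rq_count_bios(const struct sketch_request *rq)
{
    size_t n = 0;

    for (const struct sketch_bio *b = rq->bio; b != NULL; b = b->bi_next)
        n++;
    return n;
}
```

Keeping a tail pointer makes appending a contiguous bio a constant-time operation, which is the role biotail plays in the structure shown next.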

    /*
     * try to put the fields that are referenced together in the same cacheline
     */
    /* request structure */
    struct request {
        struct list_head queuelist; /* links this request into the request_queue */
        struct list_head donelist;

        request_queue_t *q;

        unsigned int cmd_flags;
        enum rq_cmd_type_bits cmd_type;

        /* Maintain bio traversal state for part by part I/O submission.
         * hard_* are block layer internals, no driver should touch them!
         */
        sector_t sector; /* next sector to submit */
        sector_t hard_sector; /* next sector to complete */
        unsigned long nr_sectors; /* no. of sectors left to submit */
        unsigned long hard_nr_sectors; /* no. of sectors left to complete */
        /* no. of sectors left to submit in the current segment */
        unsigned int current_nr_sectors;

        /* no. of sectors left to complete in the current segment */
        unsigned int hard_cur_sectors;

        struct bio *bio; /* first bio that has not yet completed */
        struct bio *biotail; /* last bio in the request's list */

        struct hlist_node hash; /* merge hash */
        /*
         * The rb_node is only used inside the io scheduler, requests
         * are pruned when moved to the dispatch queue. So let the
         * completion_data share space with the rb_node.
         */
        union {
            struct rb_node rb_node; /* sort/lookup */
            void *completion_data;
        };

        /*
         * two pointers are available for the IO schedulers, if they need
         * more they have to dynamically allocate it.
         */
        void *elevator_private; /* first private data pointer for the I/O scheduler */
        void *elevator_private2; /* second private data pointer for the I/O scheduler */

        struct gendisk *rq_disk; /* disk this request is directed at */
        unsigned long start_time;

        /* Number of scatter-gather DMA addr+len pairs after
         * physical address coalescing is performed.
         */
        unsigned short nr_phys_segments; /* number of physical segments in the request */

        /* Number of scatter-gather addr+len pairs after
         * physical and DMA remapping hardware coalescing is performed.
         * This is the number of scatter-gather entries the driver
         * will actually have to deal with after DMA mapping is done.
         */
        unsigned short nr_hw_segments;

        unsigned short ioprio;

        void *special;
        char *buffer;

        int tag;
        int errors;

        int ref_count;

        /*
         * when request is used as a packet command carrier
         */
        unsigned int cmd_len;
        unsigned char cmd[BLK_MAX_CDB];

        unsigned int data_len;
        unsigned int sense_len;
        void *data;
        void *sense;

        unsigned int timeout;
        int retries;

        /*
         * completion callback.
         */
        rq_end_io_fn *end_io;
        void *end_io_data;
    };

3. Request queue

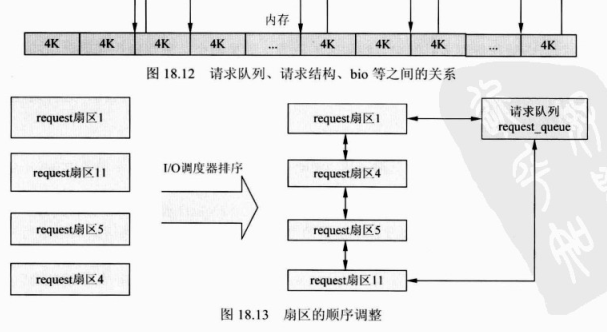

The request queue mainly links together the request structures destined for the same device. It also holds device-related information such as the request types the block device supports, the number of requests, the segment size, and the hardware sector limits.
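
The linking itself can be illustrated with a user-space model of a circular doubly linked list, in the style of the kernel's list_head. All `sketch_*` names are hypothetical, and plain first-in, first-out order is an assumption made for brevity; a real queue lets the I/O scheduler decide the order.

```c
#include <assert.h>
#include <stddef.h>

/* Minimal circular doubly linked list node (hypothetical stand-in). */
struct sketch_list_head {
    struct sketch_list_head *next, *prev;
};

struct sketch_request {
    struct sketch_list_head queuelist; /* links the request into the queue */
    unsigned long long sector;
};

struct sketch_request_queue {
    struct sketch_list_head queue_head; /* list of pending requests */
    unsigned long nr_queued;            /* number of requests in the list */
};

static void sketch_queue_init(struct sketch_request_queue *q)
{
    q->queue_head.next = q->queue_head.prev = &q->queue_head;
    q->nr_queued = 0;
}

/* Link rq in at the tail of the queue. */
static void sketch_enqueue(struct sketch_request_queue *q,
                           struct sketch_request *rq)
{
    struct sketch_list_head *node = &rq->queuelist;
    struct sketch_list_head *head = &q->queue_head;

    node->prev = head->prev;
    node->next = head;
    head->prev->next = node;
    head->prev = node;
    q->nr_queued++;
}

/* Unlink and return the request at the head, or NULL if the queue is empty. */
static struct sketch_request *sketch_dequeue(struct sketch_request_queue *q)
{
    struct sketch_list_head *node = q->queue_head.next;

    if (node == &q->queue_head)
        return NULL;
    node->prev->next = node->next;
    node->next->prev = node->prev;
    node->next = node->prev = node;
    q->nr_queued--;
    /* Recover the enclosing request from its embedded list node. */
    return (struct sketch_request *)
        ((char *)node - offsetof(struct sketch_request, queuelist));
}
```

Embedding the list node inside the request, instead of allocating separate list cells, means a request can be linked and unlinked without any memory allocation.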

    /* The kernel implements the request queue (request_queue) as a
     * doubly linked list that links request structures.
     */
    struct request_queue
    {
        /*
         * Together with queue_head for cacheline sharing
         */
        struct list_head queue_head; /* list of request structures,
                                      * i.e. the pending requests
                                      */
        struct request *last_merge;
        elevator_t *elevator; /* pointer to the elevator (I/O scheduler) */

        /*
         * the queue request freelist, one for reads and one for writes
         */
        struct request_list rq; /* used for allocating request descriptors */

        /* driver function that processes requests */
        request_fn_proc *request_fn;
        /* inserts a new request into the request queue */
        make_request_fn *make_request_fn;
        prep_rq_fn *prep_rq_fn;
        unplug_fn *unplug_fn;
        merge_bvec_fn *merge_bvec_fn;
        issue_flush_fn *issue_flush_fn;
        prepare_flush_fn *prepare_flush_fn;
        softirq_done_fn *softirq_done_fn;

        /*
         * Dispatch queue sorting
         */
        sector_t end_sector;
        struct request *boundary_rq;

        /*
         * Auto-unplugging state
         */
        struct timer_list unplug_timer;
        int unplug_thresh; /* After this many requests */
        unsigned long unplug_delay; /* After this many jiffies */
        struct work_struct unplug_work;

        struct backing_dev_info backing_dev_info;

        /*
         * The queue owner gets to use this for whatever they like.
         * ll_rw_blk doesn't touch it.
         */
        void *queuedata; /* private data of the block device driver */

        /*
         * queue needs bounce pages for pages above this limit
         */
        unsigned long bounce_pfn;
        gfp_t bounce_gfp;

        /*
         * various queue flags, see QUEUE_* below
         */
        unsigned long queue_flags;

        /*
         * protects queue structures from reentrancy. ->__queue_lock should
         * _never_ be used directly, it is queue private. always use
         * ->queue_lock.
         */
        spinlock_t __queue_lock;
        spinlock_t *queue_lock;

        /*
         * queue kobject
         */
        struct kobject kobj;

        /*
         * queue settings
         */
        unsigned long nr_requests; /* Max # of requests */
        unsigned int nr_congestion_on;
        unsigned int nr_congestion_off;
        unsigned int nr_batching;

        unsigned int max_sectors;
        unsigned int max_hw_sectors;
        unsigned short max_phys_segments;
        unsigned short max_hw_segments;
        unsigned short hardsect_size;
        unsigned int max_segment_size;

        unsigned long seg_boundary_mask;
        unsigned int dma_alignment;

        struct blk_queue_tag *queue_tags;

        unsigned int nr_sorted;
        unsigned int in_flight;

        /*
         * sg stuff
         */
        unsigned int sg_timeout;
        unsigned int sg_reserved_size;
        int node;
    #ifdef CONFIG_BLK_DEV_IO_TRACE
        struct blk_trace *blk_trace;
    #endif
        /*
         * reserved for flush operations
         */
        unsigned int ordered, next_ordered, ordseq;
        int orderr, ordcolor;
        struct request pre_flush_rq, bar_rq, post_flush_rq;
        struct request *orig_bar_rq;
        unsigned int bi_size;

        struct mutex sysfs_lock;
    };

4. Summary

Relationships among request queue, request structure, bio, etc.