In order to better query the relevant information of enterprises, I crawled the enterprises in Anhui Province. The problems encountered and the technologies used are as follows:

1. Problems encountered:

1 > Enterprise Check PC version data only shows the first 500 pages. In order to maximize the crawl site data, this crawl is crawled according to the city level, totally crawling 80,000 enterprise information in 16 urban areas of Anhui Province.

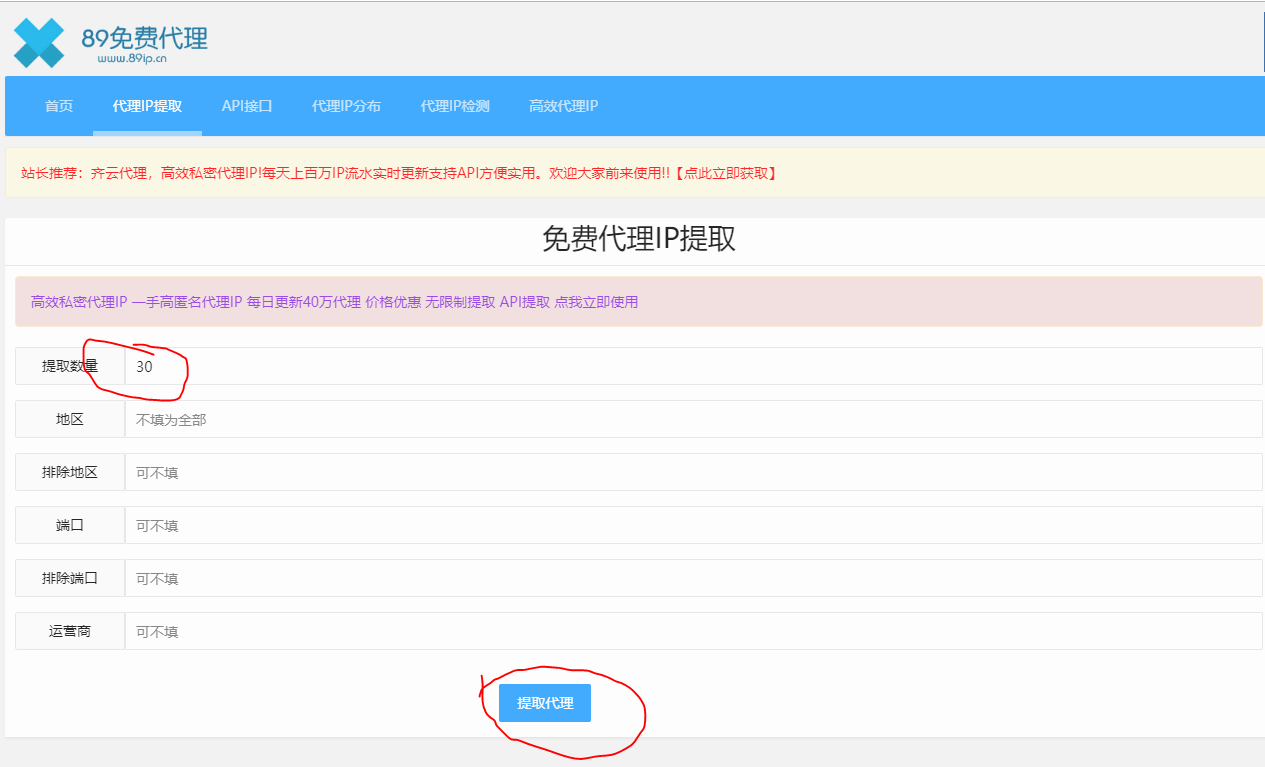

2 > When crawling website data, if the crawling speed is too fast, there will be manual verification function. In order to solve manual verification, and to avoid blockade, the IP proxy can directly replace IP proxy randomly. IP proxy can obtain free proxy account in "89 Free Agent" website. The address is: http://www.89ip.cn/ 30 proxy IP can be obtained at one time, if not enough.

The agent pool can be extracted many times, and then constructed. I tried it. The free agent of the website is much better than the free agent of the Western agent and the fast agent website, as follows:

2. Technology used:

1 > Request module: requests request, in order to avoid anti-crawling, using random proxy, and using fake_user ragent to generate user-agent randomly;

2 > Parsing libraries: using xpath and regular expressions

3 > Speed-up optimization: multi-threading is adopted, and the crawled data is saved once to avoid frequent IO disks.

3. The core code is as follows:

import requests from lxml import etree from queue import Queue from threading import Thread from fake_useragent import UserAgent import csv import os import re import random import time from ippools import ProxySpider from proxy_ip import IP_LIST class QichachaSpider: def __init__(self): self.url = 'https://www.qichacha.com/gongsi_area.html?prov={}&city={}&p={}' self.q = Queue() self.company_info = [] self.headers = { 'Host': 'www.qichacha.com', 'Referer': 'https: // www.qichacha.com /', 'X-Requested-With': 'XMLHttpRequest' } # random User-Agent def random_ua(self): ua = UserAgent() return ua.random # Random IP def random_proxy(self): proxy_list = ProxySpider().get_training_ip('https://www.qichacha.com/') return proxy_list # Climb the target into the queue def put_url(self): self.headers['User-Agent'] = self.random_ua() url = 'https://www.qichacha.com/' html = requests.get(url, headers=self.headers).content.decode('utf-8', 'ignore') parse_html = etree.HTML(html) r_list = parse_html.xpath('//div[@class="areacom"]/div[2]/div[2]/a/@href') for r in r_list: link = r.split('_')[1:] for i in range(1, 501): url = self.url.format(link[0], link[1], i) print(url) self.q.put(url) # Getting first-level page data def get_data(self): while True: if not self.q.empty(): url = self.q.get() self.headers['User-Agent'] = self.random_ua() # proxies = self.random_proxy() proxies = random.choice(IP_LIST) try: html = requests.get(url, headers=self.headers, proxies=proxies, timeout=3).content.decode('utf-8', 'ignore') # html = requests.get(url, headers=self.headers).content.decode('utf-8', 'ignore') # time.sleep(random.uniform(0.5, 1.5)) parse_html = etree.HTML(html) company_list = parse_html.xpath('//table[@class="m_srchList"]/tbody/tr') if company_list is not None: for company in company_list: try: company_name = company.xpath('./td[2]/a/text()')[0].strip() company_link = 'https://www.qichacha.com' + company.xpath('./td[2]/a/@href')[0].strip() company_type, company_industry, company_business_scope = self.get_company_info( company_link) company_person = company.xpath('./td[2]/p[1]/a/text()')[0].strip() company_money = company.xpath('./td[2]/p[1]/span[1]/text()')[0].split(': ')[-1].strip() company_time = company.xpath('./td[2]/p[1]/span[2]/text()')[0].split(': ')[-1].strip() company_email = company.xpath('./td[2]/p[2]/text()')[0].split(': ')[-1].strip() company_phone = company.xpath('td[2]/p[2]/span/text()')[0].split(': ')[-1].strip() company_address = company.xpath('td[2]/p[3]/text()')[0].split(': ')[-1].strip() company_status = company.xpath('td[3]/span/text()')[0].strip() company_dict = { 'Corporate name': company_name, 'Corporate Links': company_link, 'Type of company': company_type, 'B.': company_industry, 'Scope of Business': company_business_scope, 'Corporate Legal Person': company_person, 'registered capital': company_money, 'Registration time': company_time, 'mailbox': company_email, 'Telephone': company_phone, 'address': company_address, 'Does it survive?': company_status, } print(company_dict) # self.company_info.append( # (company_name, company_link, company_type, company_industry, company_business_scope, # company_person, company_money, company_time, company_email, company_phone, # company_address, company_status)) info_list = [company_name, company_link, company_type, company_industry, company_business_scope, company_person, company_money, company_time, company_email, company_phone, company_address, company_status] self.save_data(info_list) except: with open('./bad.csv', 'a', encoding='utf-8', newline='') as f: writer = csv.writer(f) writer.writerow(url) continue except: self.q.put(url) else: break # Getting secondary page data def get_company_info(self, company_link): headers = {'User-Agent': UserAgent().random} html = requests.get(company_link, headers=headers, proxies=random.choice(IP_LIST), timeout=3).content.decode( 'utf-8', 'ignore') while True: if 'Enterprise type' not in html: html = requests.get(company_link, headers=headers, proxies=random.choice(IP_LIST), timeout=3).content.decode( 'utf-8', 'ignore') else: break try: company_type = re.findall(r'Enterprise type</td> <td class="">(.*?)</td>', html, re.S)[0].strip() company_industry = re.findall(r'B.</td> <td class="">(.*?)</td>', html, re.S)[0].strip() company_business_scope = re.findall(r'Scope of Business.*?"3">(.*?)</td>', html, re.S)[0].strip() return company_type, company_industry, company_business_scope except: return 'nothing', 'nothing', 'nothing' # Save data def save_data(self, info): with open('./1111.csv', 'a', encoding='utf-8', newline='') as f: writer = csv.writer(f) writer.writerow(info) def main(self): if os.path.exists('./1111.csv'): os.remove('./1111.csv') with open('./1111.csv', 'a', encoding='utf-8', newline='') as f: writer = csv.writer(f) writer.writerow( ['Corporate name', 'Corporate Links', 'Type of company', 'B.', 'Scope of Business', 'Corporate Legal Person', 'registered capital', 'Registration time', 'mailbox', 'Telephone', 'address', 'Does it survive?']) self.put_url() t_list = [] for i in range(0, 10): t = Thread(target=self.get_data) t_list.append(t) t.start() for j in t_list: j.join() if __name__ == "__main__": spider = QichachaSpider() spider.main()