Environment preparation:

A k8s environment managed by Rancher 2.3

Jenkins (you can run it in Docker or install it directly. If you are not familiar with k8s, installing Jenkins with helm is not recommended, because many settings are then inconvenient to change. I ran it in Docker, but in that case you have to remember to mount the docker command into the container. Installing it directly on the host is recommended, which also avoids problems with passwordless, key-based ssh when releasing.)

An Alibaba Cloud image registry (you can register an Alibaba Cloud account for free)

A local Nexus proxy server for Maven (convenient for managing jars within the company)

1. For Maven packaging and for building and publishing the Docker image, I use:

    <groupId>com.spotify</groupId>
    <artifactId>docker-maven-plugin</artifactId>
    <version>1.2.0</version>

This plugin has been superseded by dockerfile-maven-plugin, but I did not use the newer one because my local development machine has no Docker client installed. The newer plugin appears to require at least a local Docker client, and the Docker environment variables must be configured. With docker-maven-plugin, a development machine can also run the docker build directly from Maven and push the image to the Alibaba registry, which is convenient for upgrading applications in the development environment.

I ran into a bug while using docker-maven-plugin:

My Jenkins was installed with Docker, and I had not mounted the docker command before starting the container, so I later regenerated the image from that container. After that, I added the login credentials for the Alibaba image registry to the user's home directory (that is, docker's config.json was placed under /root/.docker/; search for it if you are not familiar with this file). As a result, the serverId configured in docker-maven-plugin conflicted with config.json, and the image could not be pushed to the Alibaba image registry.

Debugging this took me two days and forced me to read through the plugin's source code. In the end I found the problem.

Solution:

Use the docker command directly and pass the registry username and password in the pipeline. That is why I deploy with a pipeline.
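
As a minimal sketch of that approach, the following shell functions could be called from a pipeline `sh` step. `REGISTRY_USER` and `REGISTRY_PASS` are hypothetical variable names (for example, supplied by a Jenkins credentials binding), and the `run` helper with its `DRY_RUN` switch is an illustrative addition so the commands can be previewed without a Docker daemon. It is not the author's actual script.

```shell
#!/bin/sh
# Registry host taken from the image name used later in the Jenkinsfile.
REGISTRY="registry.cn-hangzhou.aliyuncs.com"

# Run a command, or only print it when DRY_RUN=1 (illustrative helper).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Log in, build and push one image. $1 = full image name including tag.
# REGISTRY_USER / REGISTRY_PASS are assumed to be set by the caller.
push_image() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ docker login --username ${REGISTRY_USER} --password-stdin ${REGISTRY}"
    else
        # --password-stdin keeps the password off the command line.
        printf '%s' "${REGISTRY_PASS}" |
            docker login --username "${REGISTRY_USER}" --password-stdin "${REGISTRY}" || return 1
    fi
    run docker build -t "$1" . || return 1
    run docker push "$1"
}
```

A call might look like `push_image "registry.cn-hangzhou.aliyuncs.com/ddyk/message-service:1.0.0"`, where the tag is only an example value.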

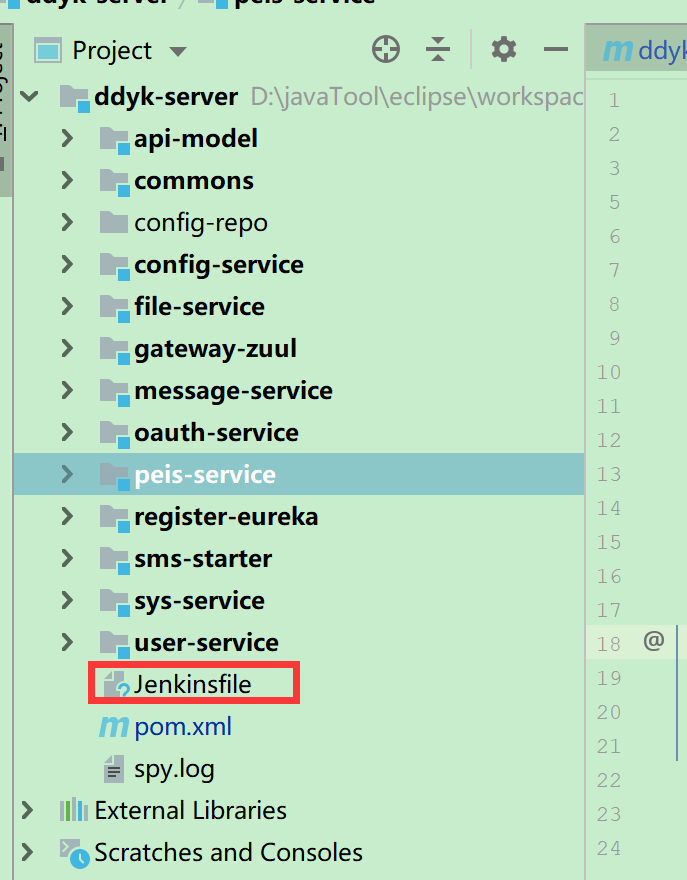

2. First place the Jenkinsfile in the root directory of the project (note: not in the Maven module directory), as shown in the figure:

Jenkinsfile content reference:

    properties([
        parameters([
            string(name: 'PORT', defaultValue: '1111', description: 'Program running port'),
            choice(name: 'ACTIVE_TYPE', choices: ['local', 'dev', 'test', 'prod'], description: 'Program packaging environment'),
            choice(name: 'ENV_TYPE', choices: ['offline', 'online'], description: 'Or offline or online environment'),
            booleanParam(name: 'All_COMPILE', defaultValue: false, description: 'Need to recompile the whole module'),
            booleanParam(name: 'DEPLOYMENT', defaultValue: true, description: 'Deployment'),
            booleanParam(name: 'ON_PINPOINT', defaultValue: false, description: 'Whether to add Pinpoint Monitor'),
            booleanParam(name: 'ON_PROMETHEUS', defaultValue: false, description: 'Whether to add Prometheus Monitor'),
            string(name: 'EMAIL', defaultValue: '******@tintinhealth.com', description: 'Packaging result notification')
        ])
    ])

    node {
        stage('Prepare') {
            echo "1.Prepare Stage"
            def MVNHOME = tool 'maven-3.6.3'
            // Add variables to environment variables
            //env.PATH = "${jdk77}/bin:${MVNHOME}/bin:${env.PATH}"
            env.PATH = "${MVNHOME}/bin:${env.PATH}"
            MVNCONFIG = '/var/jenkins_home/maven/settings.xml'
            //echo "UUID=${UUID.randomUUID().toString()}"
            checkout scm

            // When there are multiple projects to be processed, sub projects shall be entered first
            projectwk = "."
            mainpom = readMavenPom file: 'pom.xml'
            repostory = "${mainpom.properties['docker.repostory']}"

            // When there are multiple modules, select one to compile
            // if(mainpom.modules.size() > 0 ) {
            //     echo "project has modules = = ${mainpom.modules}"
            //     timeout(time: 10, unit: 'MINUTES') {
            //         def selproj = input message: 'please select the project to be processed', parameters: [choice(choices: mainpom.modules, description: 'please select the project to be processed', name: 'selproj')] //, submitterParameter: 'project'
            //         projectwk = selproj
            //         echo "select project = ${projectwk}"
            //     }
            // }
            projectwk = "${JOB_NAME}"

            dir("${projectwk}") {
                pom = readMavenPom file: 'pom.xml'
                echo "group: ${pom.groupId}, artifactId: ${pom.artifactId}, version: ${pom.version} ,description: ${pom.description}"
                artifactId = "${pom.artifactId}"
                version = "${pom.version}"
                description = "${pom.description}"
            }

            // The module pom has no version of its own: fall back to the parent pom
            if (version == 'null') {
                version = "${mainpom.version}"
                echo "Using father version:${version}"
            }

            script {
                GIT_TAG = sh(returnStdout: true, script: 'git rev-parse --short HEAD').trim()
                echo "GIT_TAG== ${GIT_TAG}"
            }

            image = "registry.cn-hangzhou.aliyuncs.com/ddyk/${artifactId}:${version}"
            // if (params.ENV_TYPE == 'offline' || params.ENV_TYPE == null) {
            //     sh "sed -i 's#39.95.40.97:5000#10.3.80.50:5000#g' pom.xml"
            //     image = "registry.cn-hangzhou.aliyuncs.com/ddyk/${artifactId}:${version}"
            // }
        }

        if (params.All_COMPILE) {
            if (mainpom.modules.size() > 0) {
                stage('Compile master project') {
                    sh "mvn -s ${MVNCONFIG} -DskipTests clean install"
                }
            }
        }

        dir("${projectwk}") {
            stage('Compiler module') {
                echo "2.Compiler module ${artifactId}"
                def jarparam = ''
                def pinname = artifactId
                if (pinname.length() > 23) {
                    pinname = artifactId.substring(0, 23)
                }
                // Add pinpoint ({pinname} is a placeholder replaced by sed below)
                if (params.ON_PINPOINT) {
                    jarparam = '"-javaagent:/app/pinpoint-agent/pinpoint-bootstrap-1.8.0.jar","-Dpinpoint.agentId={pinname}", "-Dpinpoint.applicationName={pinname}",'
                }
                // Add prometheus
                if (params.ON_PROMETHEUS) {
                    jarparam = jarparam + '"-javaagent:/app/prometheus/jmx_prometheus_javaagent-0.11.0.jar=1234:/app/prometheus/jmx.yaml",'
                }
                sh "sed -i 's#{jarparam}#${jarparam}#g;s#{port}#${params.PORT}#g' Dockerfile"
                sh "sed -i 's#{description}#${description}#g;s#{artifactId}#${artifactId}#g;s#{version}#${version}#g;s#{active}#${params.ACTIVE_TYPE}#g;s#{pinname}#${pinname}#g' Dockerfile"
                sh "sed -i 's#{artifactId}#${artifactId}#g;s#{version}#${version}#g;s#{port}#${params.PORT}#g;s#{image}#${image}#g' Deployment.yaml"
                sh "mvn -s ${MVNCONFIG} -DskipTests clean package"
                stash includes: 'target/*.jar', name: 'app'
            }

            stage('Docker Pack') {
                echo "3.Docker Pack"
                unstash 'app'
                sh "mvn -s ${MVNCONFIG} docker:build"
            }

            stage('Push mirror') {
                echo "4.Push Docker Image Stage"
                sh "mvn -s ${MVNCONFIG} docker:push"
            }

            // timeout(time: 10, unit: 'MINUTES') {
            //     input 'are you sure you want to deploy? '
            // }

            if (params.DEPLOYMENT) {
                stage('Release') {
                    if (params.ENV_TYPE == 'offline' || params.ENV_TYPE == null) {
                        sshagent(credentials: ['deploy_ssh_key_dev']) {
                            sh "scp -r Deployment.yaml root@192.168.1.108:/home/sam/devops/kubernetes/manifests/workTools/ddyk/Deployment-${artifactId}.yaml"
                            sh "ssh root@192.168.1.108 'kubectl apply -f /home/sam/devops/kubernetes/manifests/workTools/ddyk/Deployment-${artifactId}.yaml && kubectl set env deploy/${artifactId} DEPLOY_DATE=${env.BUILD_ID}'"
                        }
                    } else {
                        sshagent(credentials: ['deploy_ssh_key_238']) {
                            sh "scp -P 22 -r Deployment.yaml root@192.168.1.108:/home/sam/devops/kubernetes/manifests/workTools/ddyk/Deployment-${artifactId}.yaml"
                            sh "ssh -p 22 root@192.168.1.108 'kubectl apply -f /home/sam/devops/kubernetes/manifests/workTools/ddyk/Deployment-${artifactId}.yaml && kubectl set env deploy/${artifactId} DEPLOY_DATE=${env.BUILD_ID}'"
                        }
                    }
                    echo "Release completed"
                }
            }
        }

        stage('Inform the person in charge') {
            // emailext body: "build project: ${artifactId} \r\nbuild complete", subject: 'build result notification [success]', to: "${EMAIL}"
            // echo "build project: ${description} \r\nbuild complete"
            echo "Construction project:${artifactId}\r\n Completion of construction"
        }
    }

The above contents can be changed and deleted as required.
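
The pipeline fills in the Dockerfile and Deployment.yaml by replacing `{name}` placeholders with sed. As a rough illustration of that technique, here is a small shell function that applies the same kind of substitutions. The function name `render_template` and its argument order are made up for this sketch, and unlike the pipeline's `sed -i` it writes the result to standard output so the template file stays unchanged.

```shell
#!/bin/sh
# Replace {artifactId}, {version}, {port} and {active} in a template file.
# $1 = template file, $2 = artifactId, $3 = version, $4 = port, $5 = active profile
# '#' is the sed delimiter (as in the pipeline), so values may contain '/'.
render_template() {
    sed -e "s#{artifactId}#$2#g" \
        -e "s#{version}#$3#g" \
        -e "s#{port}#$4#g" \
        -e "s#{active}#$5#g" \
        "$1"
}
```

For example, `render_template Dockerfile message-service 1.0.0 9108 dev > Dockerfile.rendered` would produce a filled-in copy. Values that themselves contain `#` or `&` would need escaping, because sed treats those characters specially in this expression.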

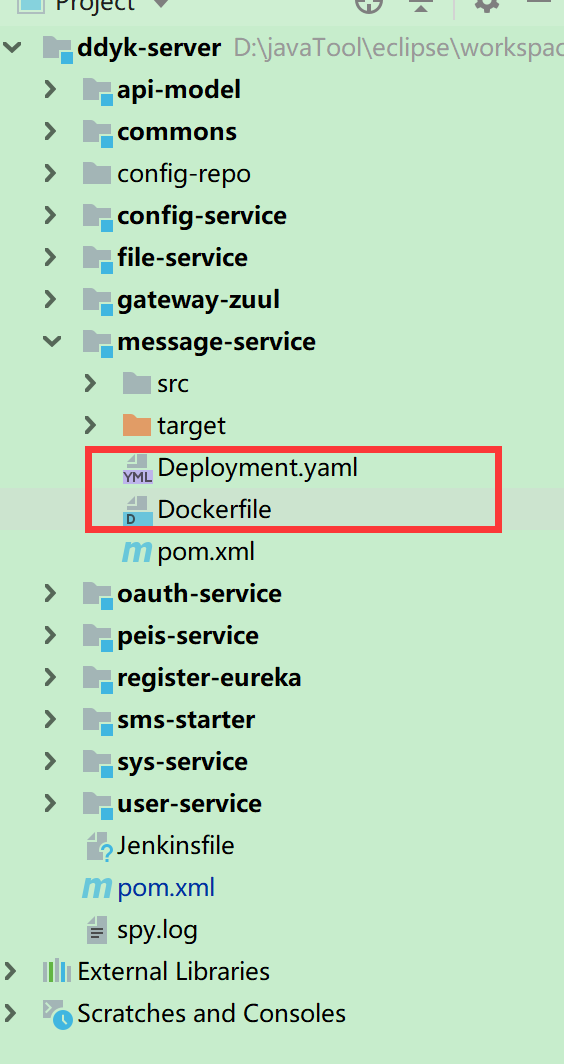

3. Place the following files in the Maven module:

Dockerfile content reference:

    FROM openjdk:8-alpine
    MAINTAINER ddyk gsj "*****@tintinhealth.com"
    ENV WORK_PATH /app
    #ENV APP_NAME @project.build.finalName@.@project.packaging@
    EXPOSE 9108
    #Unified time zone
    RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    COPY /app/message-service*.jar $WORK_PATH/app.jar
    WORKDIR $WORK_PATH
    ENTRYPOINT ["java", "-Xmx512m","-Dspring.profiles.active={active}","-Dserver.port={port}",{jarparam} "-jar", "/app/app.jar"]

Deployment.yaml content reference:

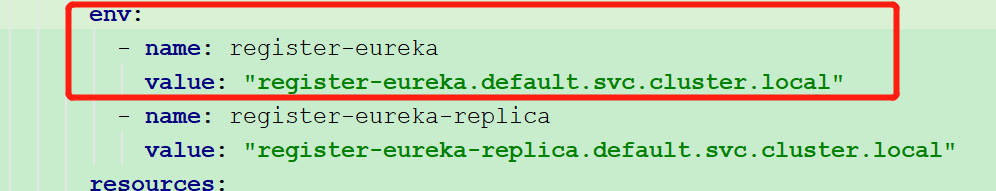

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {artifactId}
      namespace: default
      labels:
        app: {artifactId}
        version: {version}
    spec:
      selector:
        matchLabels:
          app: {artifactId}
      replicas: 1
      template:
        metadata:
          labels:
            app: {artifactId}
          annotations:
            prometheus.io.jmx: "true"
            prometheus.io.jmx.port: "1234"
        spec:
          hostAliases:
          - ip: "172.20.246.2"
            hostnames:
            - "node-1"
          - ip: "172.20.246.3"
            hostnames:
            - "node-2"
          containers:
          - name: {artifactId}
            image: {image}
            # IfNotPresent\Always
            imagePullPolicy: Always
            ports:
            - name: prometheusjmx
              containerPort: 1234
            livenessProbe:
              # kubernetes thinks that the pod is alive. If not, it needs to be restarted
              httpGet:
                path: /actuator/health
                port: {port}
                scheme: HTTP
              initialDelaySeconds: 60 ## Set to the maximum time required for the system to fully start + seconds
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              # kubernetes believes that the pod was started successfully
              httpGet:
                path: /actuator/health
                port: {port}
                scheme: HTTP
              initialDelaySeconds: 40 ## Set to the minimum time required for the system to fully start
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            env:
            - name: register-eureka
              value: "register-eureka.default.svc.cluster.local"
            - name: register-eureka-replica
              value: "register-eureka-replica.default.svc.cluster.local"
            resources:
              # 5% CPU time and 700MiB memory
              requests:
                # cpu: 50m
                memory: 250Mi
              # Allow it to use at most
              limits:
                # cpu: 100m
                memory: 1000Mi
            # Specify the path to mount in the container
            volumeMounts:
            - name: logs-volume
              mountPath: /logs
            - name: host-time
              mountPath: /etc/localtime
              readOnly: true
            - name: host-timezone
              mountPath: /etc/timezone
              readOnly: true
            # - name: pinpoint-config
            #   mountPath: /app/pinpoint-agent/pinpoint.config
          imagePullSecrets:
          - name: registrykey-m2-1
          volumes:
          - name: logs-volume
            hostPath:
              # Directory on host
              path: /logs
          - name: host-time
            hostPath:
              path: /etc/localtime
          - name: host-timezone
            hostPath:
              path: /usr/share/zoneinfo/Asia/Shanghai
          # Run on the node with the specified label, if the node is marked with: kubectl label nodes 192.168.0.113 edgenode=flow
          # nodeSelector:
          #   edgenode: flow
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: {artifactId}
      namespace: default
      labels:
        app: {artifactId}
        version: {version}
    spec:
      selector:
        app: {artifactId}
      # type: NodePort
      ports:
      - name: tcp-{port}-{port}
        protocol: TCP
        port: {port}
        targetPort: {port}
        # nodePort: 31111

Note: I do not actually use Prometheus. You can modify the deployment file as needed while debugging.
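
When editing the deployment file for debugging, it is easy to add a `{name}` placeholder that no sed expression replaces. One possible safeguard, sketched here as an assumption and not part of the original pipeline, is to check the rendered file for leftover placeholders before running `kubectl apply`. The function name `check_placeholders` is hypothetical.

```shell
#!/bin/sh
# Succeed only if the file contains no leftover {name} placeholders.
# Offending lines are printed with their line numbers.
# $1 = rendered file, e.g. Deployment.yaml after the sed step
check_placeholders() {
    if grep -n '{[A-Za-z][A-Za-z]*}' "$1"; then
        return 1
    fi
    return 0
}
```

In a pipeline this could run as `check_placeholders Deployment.yaml` right after the sed step, so the build stops before an unrendered manifest reaches the cluster.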

About deploying register-eureka: its Service name is also register-eureka, which matches the value set in the env section above.

Note: the name of the project (job) built in Jenkins must be the same as the Maven module name. Otherwise, you need to modify the deployment-related parts of the Jenkinsfile.
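
The reason is that the Jenkinsfile sets `projectwk` to `JOB_NAME` and then reads `pom.xml` from that directory. A minimal sketch of a guard for this, assuming it is run from a shell step before the build, is shown below. The function name `module_dir_exists` is invented for illustration.

```shell
#!/bin/sh
# Succeed only if <workspace>/<job name>/pom.xml exists.
# $1 = workspace root, $2 = Jenkins job name (expected to equal the module directory)
module_dir_exists() {
    [ -f "$1/$2/pom.xml" ]
}
```

For example, `module_dir_exists "$WORKSPACE" "$JOB_NAME" || echo "job name does not match a Maven module"`. Note that jobs inside Jenkins folders have a `JOB_NAME` containing slashes, so this naming rule only works for top-level jobs.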

If anything is unclear, feel free to ask. In fact, this deployment setup consists mainly of these script files.

By the way, automatic deployment (triggered automatically after code is pushed to git) should only be used in the development environment, because the program has no way of knowing when a build should go to the production environment (for now, you can also distinguish release builds from development builds by the version). See my previous article for details.
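
The article does not say how versions are told apart. As one possible approach, assuming the usual Maven convention that development versions end in `-SNAPSHOT`, a pipeline shell step could decide like this. The function name `is_release_version` is hypothetical.

```shell
#!/bin/sh
# Succeed for release versions, fail for development (-SNAPSHOT) versions.
# Assumption: development builds follow the Maven -SNAPSHOT naming convention.
# $1 = version string read from pom.xml
is_release_version() {
    case "$1" in
        *-SNAPSHOT) return 1 ;;
        *) return 0 ;;
    esac
}
```

A build could then deploy automatically only when `is_release_version "$version"` fails, and require a manual confirmation for anything it reports as a release.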