Back to CNN convolutional neural network directory

Previous chapter: Depth part -- CNN convolutional neural network (3) On ROI pooling and ROI Align and interpolation

In this section, I will walk through a demonstration project that recognizes MNIST handwritten digits with a CNN built in TensorFlow.

GitHub code for this project: https://github.com/wandaoyi/tf_cnn_mnist_pro

5. TF CNN MNIST handwritten digit code demonstration

(1) Preface

Earlier, in the "In depth -- neural network" series, we covered ANN and DNN, and now we have covered CNN. To put what we have learned into practice, we will build a convolutional neural network.

(2) Define requirements

The requirement of the project is to recognize pictures of handwritten Arabic numerals from 0 to 9, for example the numbers on an invoice. The first handwritten digit recognition system is said to have been commissioned by a bank in the United States in 1989 and built on LeNet, the convolutional network family that LeNet-5 belongs to; it was used to read the handwritten numbers on checks. Earlier, in "In depth part -- neural network (7) detailed description of DNN Neural Network handwritten digit code demonstration", we worked through a DNN case study; now let's use LeNet-5 for a case study.

Training a network is inseparable from data, so we first download the data, which has been uploaded to Baidu cloud disk for you: link: https://pan.baidu.com/s/13OokGc0h3F5rGrxuSLYj9Q extraction code: qfj6.

(3) Build the project

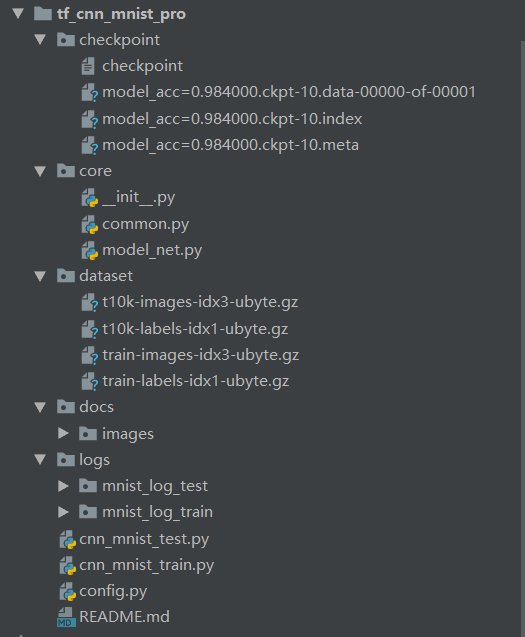

The project structure is as follows:

The checkpoint shown above comes from a casual 10-epoch training run, with an accuracy of 0.984000. The DNN we used earlier reached only a little over 0.96 after 10 epochs, so the accuracy has risen by about 2 percentage points. Some people may find that unimpressive. Looked at the other way round, though, the error rate has dropped from just under 4% to 1.6%, which is more than half, so the improvement is considerable. When working on projects in a company, being able to do the work matters, but so does being able to present it well.
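The arithmetic behind "the error rate is more than halved" can be checked with a short sketch. It assumes 0.96 as the DNN accuracy (the text only says "0.96 +"), and the helper name `relative_error_reduction` is made up for this illustration.

```python
def relative_error_reduction(acc_before, acc_after):
    """Return the fraction by which the error rate dropped."""
    err_before = 1.0 - acc_before
    err_after = 1.0 - acc_after
    return (err_before - err_after) / err_before


dnn_acc = 0.96   # assumed DNN accuracy; the text only gives "0.96 +"
cnn_acc = 0.984  # CNN accuracy after 10 epochs, from the text

reduction = relative_error_reduction(dnn_acc, cnn_acc)
print("absolute gain: {:.1f} points".format((cnn_acc - dnn_acc) * 100))
print("relative error reduction: {:.0%}".format(reduction))
```

If the DNN accuracy was in fact somewhat above 0.96, the relative reduction is correspondingly smaller, which is why "roughly half" is the safer way to state it.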

(4) Environment dependencies

Environment dependencies:

    pip install numpy==1.16
    pip install easydict
    conda install tensorflow-gpu==1.13.1  # TF 2.0 is not recommended here; it has many pitfalls

The installation of tensorflow is explained in detail in my previous blog post, "Fragmented part -- Installation of tensorflow gpu version". If you have not installed it yet, see that post for instructions.

README.md file:

    # tf_cnn_mnist_pro
    tf_cnn handwritten digit prediction 2020-02-09

    - Project download address: https://github.com/wandaoyi/tf_cnn_mnist_pro
    - Please go to Baidu cloud disk to download the training data required for the project:
    - Link: https://pan.baidu.com/s/13OokGc0h3F5rGrxuSLYj9Q extraction code: qfj6

    ## Parameter settings
    - Before training or prediction, we need to set the parameters
    - Open the config.py file, where parameters and paths are set.

    ## Model
    - Model code: model_net.py
    - Here, we use the lenet-5 network model to extract features

    ## Training the model
    - Run cnn_mnist_train.py; simple operation, just right-click and run
    - The training results are as follows:
    - acc_train: 1.0
    - epoch: 10, acc_test: 0.984000
    - These are the results of a casual training run. If you want better results, train for more epochs
    - You can also add early stopping yourself; it is not a problem

    ## Prediction
    - Run cnn_mnist_test.py; simple operation, just right-click and run
    - After running, some prediction results are printed on the console
    - The prediction results are as follows:
    - predicted value: [7 2 1 0 4]
    - True value: [7 2 1 0 4]

    ## TensorBoard log
    - The advantage of the TensorBoard log is that it is real-time: you can watch the curves while training.
    - In a cmd command window, enter the following command:
    - tensorboard --logdir=G:\work_space\python_space\pro2018_space\wandao\mnist_pro\logs\mnist_log_train --host=localhost
    - --logdir= is followed by the folder path of the log,
    - --host= specifies the ip. If you don't write it, you can only use the address of the computer instead of localhost
    - Open the TensorBoard log in the Google browser: http://localhost:6006/
    - Model acc
    - model structure

The files and code below contain comments.

(5) config.py

    #!/usr/bin/env python
    # _*_ coding:utf-8 _*_
    # ============================================
    # @Time : 2020/02/08 19:23
    # @Author : WanDaoYi
    # @FileName : config.py
    # ============================================

    from easydict import EasyDict as edict
    import os

    __C = edict()

    cfg = __C

    # Common options
    __C.COMMON = edict()
    # Absolute path of the directory containing this file, which makes it
    # convenient to run the project from a Windows command prompt
    __C.COMMON.BASE_PATH = os.path.abspath(os.path.dirname(__file__))
    # # Path of the current working directory. When using Linux, switch to this,
    # # or an error will be reported. (Windows can also use this)
    # __C.COMMON.BASE_PATH = os.getcwd()

    __C.COMMON.DATA_PATH = os.path.join(__C.COMMON.BASE_PATH, "dataset")

    # Shape of the image
    __C.COMMON.DATA_RESHAPE = [-1, 28, 28, 1]
    # Size the image is resized to
    __C.COMMON.DATA_RESIZE = (32, 32)

    # Training configuration
    __C.TRAIN = edict()

    # Learning rate
    __C.TRAIN.LEARNING_RATE = 0.01
    # batch_size
    __C.TRAIN.BATCH_SIZE = 32
    # Number of epochs
    __C.TRAIN.N_EPOCH = 10

    # Model save path; a relative path is used so the project is easy to move
    __C.TRAIN.MODEL_SAVE_PATH = "./checkpoint/model_"
    # Dropout keep probability: 0.7 means 70% of the nodes are kept
    __C.TRAIN.KEEP_PROB_DROPOUT = 0.7

    # Test configuration
    __C.TEST = edict()

    # Path of the saved model used for testing
    __C.TEST.CKPT_MODEL_SAVE_PATH = "./checkpoint/model_acc=0.984000.ckpt-10"

    # Log configuration
    __C.LOG = edict()
    # Log save path; "train" or "test" is appended, for example mnist_log_train
    __C.LOG.LOG_SAVE_PATH = "./logs/mnist_log_"
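To make the `KEEP_PROB_DROPOUT = 0.7` setting concrete, here is a minimal pure-Python sketch of what a keep probability means. It mirrors the documented behaviour of `tf.nn.dropout` in TF 1.x (kept elements are scaled by `1 / keep_prob`, the rest become 0), but it is only an illustration: the function name `inverted_dropout`, the seed and the sample size are assumptions, not part of the project.

```python
import random


def inverted_dropout(values, keep_prob, rng):
    """Keep each value with probability keep_prob and rescale the survivors."""
    out = []
    for v in values:
        if rng.random() < keep_prob:
            # Scale up so the expected value of the output stays unchanged.
            out.append(v / keep_prob)
        else:
            out.append(0.0)
    return out


rng = random.Random(0)  # fixed seed, chosen only to make the sketch repeatable
activations = [1.0] * 10000
dropped = inverted_dropout(activations, keep_prob=0.7, rng=rng)

kept_fraction = sum(1 for v in dropped if v != 0.0) / len(dropped)
mean_after = sum(dropped) / len(dropped)
print("kept fraction:", round(kept_fraction, 3))
print("mean after dropout:", round(mean_after, 3))
```

About 70% of the values survive, and because of the rescaling the mean stays close to 1. At evaluation time the keep probability is normally set to 1.0, which leaves the values untouched.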

(6) common.py

    #!/usr/bin/env python
    # _*_ coding:utf-8 _*_
    # ============================================
    # @Time : 2020/02/08 19:26
    # @Author : WanDaoYi
    # @FileName : common.py
    # ============================================

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data
    from config import cfg
    import numpy as np


    class Common(object):

        def __init__(self):
            # Data path
            self.data_file_path = cfg.COMMON.DATA_PATH

        # Read data
        def read_data(self):
            # Data download address: http://yann.lecun.com/exdb/mnist/
            mnist_data = input_data.read_data_sets(self.data_file_path, one_hot=True)
            train_image = mnist_data.train.images
            train_label = mnist_data.train.labels
            _, n_feature = train_image.shape
            _, n_label = train_label.shape

            return mnist_data, n_feature, n_label

        # Batch normalization
        def deal_bn(self, input_data, train_flag=True):
            bn_info = tf.layers.batch_normalization(input_data, beta_initializer=tf.zeros_initializer(),
                                                    gamma_initializer=tf.ones_initializer(),
                                                    moving_mean_initializer=tf.zeros_initializer(),
                                                    moving_variance_initializer=tf.ones_initializer(),
                                                    training=train_flag)
            return bn_info

        # Pooling
        def deal_pool(self, input_data, ksize=(1, 2, 2, 1), strides=(1, 2, 2, 1),
                      padding="VALID", name="avg_pool"):
            pool_info = tf.nn.avg_pool(value=input_data, ksize=ksize,
                                       strides=strides, padding=padding,
                                       name=name)
            tf.summary.histogram('pooling', pool_info)
            return pool_info

        # Dropout
        def deal_dropout(self, hidden_layer, keep_prob):
            with tf.name_scope("dropout"):
                tf.summary.scalar('dropout_keep_probability', keep_prob)
                dropped = tf.nn.dropout(hidden_layer, keep_prob)
                tf.summary.histogram('dropped', dropped)
                return dropped

        # Record summaries of a parameter
        def variable_summaries(self, param):
            with tf.name_scope('summaries'):
                mean = tf.reduce_mean(param)
                tf.summary.scalar('mean', mean)
                with tf.name_scope('stddev'):
                    stddev = tf.sqrt(tf.reduce_mean(tf.square(param - mean)))
                tf.summary.scalar('stddev', stddev)
                tf.summary.scalar('max', tf.reduce_max(param))
                tf.summary.scalar('min', tf.reduce_min(param))
                tf.summary.histogram('histogram', param)

        # Fully connected layer
        def neural_layer(self, x, n_neuron, name="fc"):
            # Groups all computing nodes of this layer; the name scope is optional
            with tf.name_scope(name=name):
                n_input = int(x.get_shape()[1])
                stddev = 2 / np.sqrt(n_input)

                # w in this layer can be seen as a two-dimensional array: each neuron has its own set of weights.
                # A truncated normal distribution yields smaller values than a regular normal distribution,
                # so there are no very large weights and training stays slow and steady.
                # Using this standard deviation makes convergence faster.
                # w must be initialized randomly, not to 0; otherwise the output is 0 and the updates are insignificant.
                with tf.name_scope("weights"):
                    init_w = tf.truncated_normal((n_input, n_neuron), stddev=stddev)
                    w = tf.Variable(init_w, name="weight")
                    self.variable_summaries(w)

                with tf.name_scope("biases"):
                    b = tf.Variable(tf.zeros([n_neuron]), name="bias")
                    self.variable_summaries(b)

                with tf.name_scope("wx_plus_b"):
                    z = tf.matmul(x, w) + b
                    tf.summary.histogram('pre_activations', z)

                return z

        # Convolution
        def conv2d(self, input_data, filter_shape, strides_shape=(1, 1, 1, 1),
                   padding="VALID", train_flag=True, name="conv2d"):
            with tf.variable_scope(name):
                weight = tf.get_variable(name="weight", dtype=tf.float32,
                                         trainable=train_flag,
                                         shape=filter_shape,
                                         initializer=tf.random_normal_initializer(stddev=0.01))

                conv = tf.nn.conv2d(input=input_data, filter=weight,
                                    strides=strides_shape, padding=padding)

                conv_2_bn = self.deal_bn(conv, train_flag=train_flag)

                return conv_2_bn
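The comments in `neural_layer` say that a truncated normal distribution avoids very large initial weights. As a rough illustration, the pure-Python sketch below redraws any sample that lies more than two standard deviations from the mean, which is the documented behaviour of `tf.truncated_normal`. The helper name `truncated_normal`, the seed and the sample count are assumptions made for this example; `n_input = 120` corresponds to the input of `fc_1` in the model.

```python
import math
import random


def truncated_normal(n, stddev, rng):
    """Draw n samples from N(0, stddev^2), redrawing any beyond 2 stddev."""
    samples = []
    while len(samples) < n:
        value = rng.gauss(0.0, stddev)
        if abs(value) <= 2.0 * stddev:
            samples.append(value)
    return samples


n_input = 120                    # number of inputs to fc_1 in the LeNet-5 model
stddev = 2 / math.sqrt(n_input)  # same formula as in neural_layer
rng = random.Random(0)           # fixed seed, only for repeatability
weights = truncated_normal(1000, stddev, rng)

print("stddev:", round(stddev, 4))
print("largest |w|:", round(max(abs(w) for w in weights), 4))
```

No weight can exceed `2 * stddev` in magnitude, so the more inputs a layer has, the tighter the bound on its initial weights.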

(7) Model code

    #!/usr/bin/env python
    # _*_ coding:utf-8 _*_
    # ============================================
    # @Time : 2020/02/08 22:26
    # @Author : WanDaoYi
    # @FileName : model_net.py
    # ============================================

    import tensorflow as tf
    from core.common import Common


    class ModelNet(object):

        def __init__(self):
            self.common = Common()

        def lenet_5(self, input_data, n_label=10, keep_prob=1.0, train_flag=True):
            with tf.variable_scope("lenet-5"):
                # 32 x 32 x 1 -> 28 x 28 x 6 -> 14 x 14 x 6
                conv_1 = self.common.conv2d(input_data, (5, 5, 1, 6), name="conv_1")
                tanh_1 = tf.nn.tanh(conv_1, name="tanh_1")
                avg_pool_1 = self.common.deal_pool(tanh_1, name="avg_pool_1")

                # 14 x 14 x 6 -> 10 x 10 x 16 -> 5 x 5 x 16
                conv_2 = self.common.conv2d(avg_pool_1, (5, 5, 6, 16), name="conv_2")
                tanh_2 = tf.nn.tanh(conv_2, name="tanh_2")
                avg_pool_2 = self.common.deal_pool(tanh_2, name="avg_pool_2")

                # 5 x 5 x 16 -> 1 x 1 x 120
                conv_3 = self.common.conv2d(avg_pool_2, (5, 5, 16, 120), name="conv_3")
                tanh_3 = tf.nn.tanh(conv_3, name="tanh_3")

                reshape_data = tf.reshape(tanh_3, [-1, 120])

                dropout_1 = self.common.deal_dropout(reshape_data, keep_prob)

                fc_1 = self.common.neural_layer(dropout_1, 84, name="fc_1")
                tanh_4 = tf.nn.tanh(fc_1, name="tanh_4")

                dropout_2 = self.common.deal_dropout(tanh_4, keep_prob)

                fc_2 = self.common.neural_layer(dropout_2, n_label, name="fc_2")
                scale_2 = self.common.deal_bn(fc_2, train_flag=train_flag)
                result_info = tf.nn.softmax(scale_2, name="result_info")

                return result_info

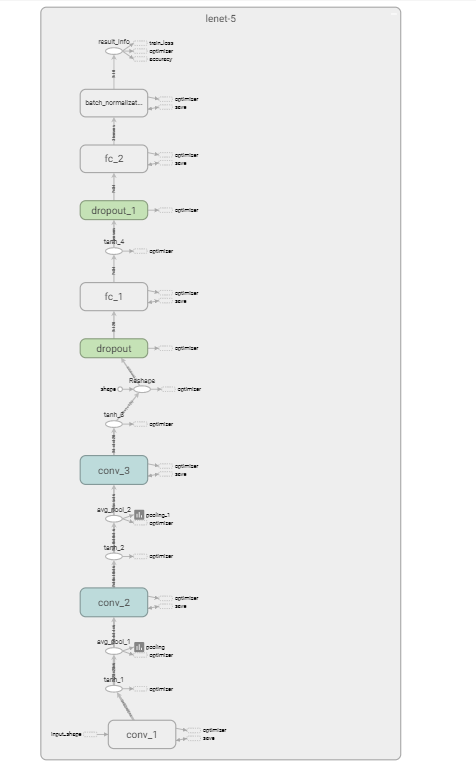

The model used here is LeNet-5; swapping in another model later is also fine. LeNet-5 expects a 32 x 32 input image. With a smaller image, the feature map reaching the last convolution is smaller than its 5 x 5 kernel and the model reports an error, so the 28 x 28 MNIST images are resized to 32 x 32.
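The size requirement can be traced with a few lines of arithmetic. The sketch below assumes the layer settings used in `lenet_5` (5 x 5 convolutions with stride 1, 2 x 2 average pooling with stride 2, all with VALID padding); the helper names `valid_output_size` and `lenet5_sizes` are made up for this illustration.

```python
def valid_output_size(size, kernel, stride):
    """Spatial output size of a VALID (no padding) convolution or pooling."""
    if size < kernel:
        raise ValueError("input {} is smaller than kernel {}".format(size, kernel))
    return (size - kernel) // stride + 1


def lenet5_sizes(input_size):
    """Trace the feature-map side length through the layers of lenet_5."""
    layers = [
        ("conv_1", 5, 1), ("avg_pool_1", 2, 2),
        ("conv_2", 5, 1), ("avg_pool_2", 2, 2),
        ("conv_3", 5, 1),
    ]
    sizes = []
    size = input_size
    for name, kernel, stride in layers:
        size = valid_output_size(size, kernel, stride)
        sizes.append((name, size))
    return sizes


print(lenet5_sizes(32))
try:
    lenet5_sizes(28)
except ValueError as err:
    print("28 x 28 input fails:", err)
```

With a 32 x 32 input the side length goes 28, 14, 10, 5, 1, so `conv_3` produces a 1 x 1 x 120 tensor that reshapes cleanly to `[-1, 120]`. With a 28 x 28 input only a 4 x 4 map reaches `conv_3`, which is smaller than the 5 x 5 kernel.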

(8) Training code

    #!/usr/bin/env python
    # _*_ coding:utf-8 _*_
    # ============================================
    # @Time : 2020/02/08 19:24
    # @Author : WanDaoYi
    # @FileName : cnn_mnist_train.py
    # ============================================

    from datetime import datetime
    import tensorflow as tf
    from config import cfg
    from core.common import Common
    from core.model_net import ModelNet


    class CnnMnistTrain(object):

        def __init__(self):
            # Model save path
            self.model_save_path = cfg.TRAIN.MODEL_SAVE_PATH
            self.log_path = cfg.LOG.LOG_SAVE_PATH

            self.learning_rate = cfg.TRAIN.LEARNING_RATE
            self.batch_size = cfg.TRAIN.BATCH_SIZE
            self.n_epoch = cfg.TRAIN.N_EPOCH

            self.data_shape = cfg.COMMON.DATA_RESHAPE
            self.data_resize = cfg.COMMON.DATA_RESIZE

            self.common = Common()
            self.model_net = ModelNet()
            # Read data and dimensions
            self.mnist_data, self.n_feature, self.n_label = self.common.read_data()

            # Build the graph
            with tf.name_scope(name="input_data"):
                self.x = tf.placeholder(dtype=tf.float32, shape=(None, self.n_feature), name="input_data")
                self.y = tf.placeholder(dtype=tf.float32, shape=(None, self.n_label), name="input_labels")

            with tf.name_scope(name="input_shape"):
                # Reshape the 784-dimensional vectors back into images.
                # -1 is the number of incoming pictures, 28 x 28 is the height and width, 1 is the color channel
                image_shaped_input = tf.reshape(self.x, self.data_shape)
                # Resize the input image to the size required by the network
                image_resize = tf.image.resize_images(image_shaped_input, self.data_resize)
                tf.summary.image('input', image_resize, self.n_label)

            self.keep_prob_dropout = cfg.TRAIN.KEEP_PROB_DROPOUT
            self.keep_prob = tf.placeholder(tf.float32)

            # Output of the last layer of LeNet-5.
            # The placeholder is passed in (not the config constant) so that
            # feed_dict can switch dropout off with keep_prob = 1.0 at evaluation time.
            self.result_info = self.model_net.lenet_5(image_resize, n_label=self.n_label,
                                                      keep_prob=self.keep_prob)

            # Compute the loss
            with tf.name_scope(name="train_loss"):
                # Define the loss function
                self.cross_entropy = tf.reduce_mean(-tf.reduce_sum(self.y * tf.log(self.result_info),
                                                                   reduction_indices=[1]))
                tf.summary.scalar("train_loss", self.cross_entropy)

            with tf.name_scope(name="optimizer"):
                self.optimizer = tf.train.AdamOptimizer(learning_rate=self.learning_rate)
                self.train_op = self.optimizer.minimize(self.cross_entropy)

            with tf.name_scope(name="accuracy"):
                self.correct_pred = tf.equal(tf.argmax(self.result_info, 1), tf.argmax(self.y, 1))
                self.acc = tf.reduce_mean(tf.cast(self.correct_pred, tf.float32))
                tf.summary.scalar("accuracy", self.acc)

            # Many tf.summary operations were defined above and running them one by one is tedious,
            # so tf.summary.merge_all() collects all of them for later execution
            self.merged = tf.summary.merge_all()

            self.sess = tf.InteractiveSession()
            # Saver for the trained model
            self.saver = tf.train.Saver()

            # Two tf.summary.FileWriter recorders with different subdirectories store the training and test logs.
            # The session graph sess.graph is passed to the training writer so that TensorBoard can display it
            self.train_writer = tf.summary.FileWriter(self.log_path + 'train', self.sess.graph)
            self.test_writer = tf.summary.FileWriter(self.log_path + 'test')

        # Build the feed dictionary
        def feed_dict(self, train_flag=True):
            if train_flag:
                # Training: get the next batch of samples
                x_data, y_data = self.mnist_data.train.next_batch(self.batch_size)
                keep_prob = self.keep_prob_dropout
            else:
                # Evaluation: use the whole test set, with dropout switched off
                x_data, y_data = self.mnist_data.test.images, self.mnist_data.test.labels
                keep_prob = 1.0

            return {self.x: x_data, self.y: y_data, self.keep_prob: keep_prob}

        def do_train(self):
            # Initialize variables
            init = tf.global_variables_initializer()
            self.sess.run(init)

            test_acc = None
            for epoch in range(self.n_epoch):
                # Total number of training samples
                batch_number = self.mnist_data.train.num_examples
                # Number of batches per epoch
                size_number = int(batch_number / self.batch_size)

                for number in range(size_number):
                    summary, _ = self.sess.run([self.merged, self.train_op], feed_dict=self.feed_dict())

                    # Global step count
                    i = epoch * size_number + number + 1
                    self.train_writer.add_summary(summary, i)

                    if number == size_number - 1:
                        # At the end of the epoch, measure accuracy on one more training batch
                        x_batch, y_batch = self.mnist_data.train.next_batch(self.batch_size)
                        acc_train = self.acc.eval(feed_dict={self.x: x_batch, self.y: y_batch,
                                                             self.keep_prob: 1.0})
                        print("acc_train: {}".format(acc_train))

                # Evaluate on the test set; sess.run (used here) and acc.eval (used above) are interchangeable
                test_summary, acc_test = self.sess.run([self.merged, self.acc], feed_dict=self.feed_dict(False))
                print("epoch: {}, acc_test: {}".format(epoch + 1, acc_test))
                self.test_writer.add_summary(test_summary, epoch + 1)

                test_acc = acc_test

            save_path = self.model_save_path + "acc={:.6f}".format(test_acc) + ".ckpt"
            # Save the model
            self.saver.save(self.sess, save_path, global_step=self.n_epoch)

            self.train_writer.close()
            self.test_writer.close()


    if __name__ == "__main__":
        # Code start time
        start_time = datetime.now()
        print("start time: {}".format(start_time))

        demo = CnnMnistTrain()
        demo.do_train()

        # Code end time
        end_time = datetime.now()
        print("End time: {}, Training model time consuming: {}".format(end_time, end_time - start_time))
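One detail of the loss in the training code is worth a note: it takes `tf.log` of the softmax output directly, so if a predicted probability ever underflows to exactly 0, the logarithm is undefined and the loss can turn into NaN. A common guard is to clip the probabilities away from 0 first. The pure-Python sketch below shows the idea; the function name `cross_entropy` and the value `eps=1e-10` are assumptions for this example. In TensorFlow 1.x, `tf.clip_by_value` or a fused op such as `tf.nn.softmax_cross_entropy_with_logits_v2` could play the same role, although the project itself does not use them.

```python
import math


def cross_entropy(y_true, y_prob, eps=1e-10):
    """Mean cross-entropy over a batch of one-hot labels and probabilities."""
    total = 0.0
    for labels, probs in zip(y_true, y_prob):
        # Clip each probability away from 0 so log() never sees exactly 0.
        total += -sum(t * math.log(max(p, eps)) for t, p in zip(labels, probs))
    return total / len(y_true)


labels = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
# In the second row the true class was assigned probability 0.
probs = [[0.1, 0.8, 0.1], [0.0, 0.5, 0.5]]

loss = cross_entropy(labels, probs)
print("loss:", round(loss, 4))
```

The loss is large for the badly wrong second sample, but it stays finite, so training can continue.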

The training code is deliberately rough: there is no grid search, no fine-tuning, and no early stopping. If you are interested, you can add them yourself.
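For readers who want to add early stopping, the logic is independent of TensorFlow and could look roughly like this. The class name `EarlyStopping`, the `patience` and `min_delta` parameters and the accuracy values are all assumptions made for this sketch; none of them exist in the project.

```python
class EarlyStopping(object):
    """Stop when the monitored accuracy has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_acc = None
        self.bad_epochs = 0

    def should_stop(self, acc):
        if self.best_acc is None or acc > self.best_acc + self.min_delta:
            # New best value: remember it and reset the counter.
            self.best_acc = acc
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up accuracies, used only to exercise the logic.
history = [0.95, 0.97, 0.98, 0.979, 0.98, 0.978, 0.981]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, acc in enumerate(history, start=1):
    if stopper.should_stop(acc):
        stopped_at = epoch
        break
print("stopped at epoch:", stopped_at, "best acc:", stopper.best_acc)
```

In `do_train`, the check would go right after the per-epoch test accuracy is computed. Ideally it would monitor a separate validation set rather than the test set, and the checkpoint would be saved whenever a new best value is seen.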

(9) Test code

    #!/usr/bin/env python
    # _*_ coding:utf-8 _*_
    # ============================================
    # @Time : 2020/02/08 19:24
    # @Author : WanDaoYi
    # @FileName : cnn_mnist_test.py
    # ============================================

    from datetime import datetime
    import tensorflow as tf
    import numpy as np
    from config import cfg
    from core.common import Common
    from core.model_net import ModelNet


    class CnnMnistTest(object):

        def __init__(self):
            self.common = Common()
            self.model_net = ModelNet()
            # Read data and dimensions
            self.mnist_data, self.n_feature, self.n_label = self.common.read_data()

            # ckpt model
            self.test_ckpt_model = cfg.TEST.CKPT_MODEL_SAVE_PATH
            print("test_ckpt_model: {}".format(self.test_ckpt_model))

            # tf.reset_default_graph()
            # Build the graph
            with tf.name_scope(name="input"):
                self.x = tf.placeholder(dtype=tf.float32, shape=(None, self.n_feature), name="input_data")
                self.y = tf.placeholder(dtype=tf.float32, shape=(None, self.n_label), name="input_labels")

            self.data_shape = cfg.COMMON.DATA_RESHAPE
            self.data_resize = cfg.COMMON.DATA_RESIZE

            with tf.name_scope(name="input_shape"):
                # Reshape the 784-dimensional vectors back into images.
                # -1 is the number of incoming pictures, 28 x 28 is the height and width, 1 is the color channel
                self.image_shaped_input = tf.reshape(self.x, self.data_shape)
                # Resize the input image to the 32 x 32 size required by the network
                self.image_resize = tf.image.resize_images(self.image_shaped_input, self.data_resize)

            # Output of the last layer of LeNet-5
            self.result_info = self.model_net.lenet_5(self.image_resize, n_label=self.n_label)

        # Prediction
        def do_ckpt_test(self):
            saver = tf.train.Saver()

            with tf.Session() as sess:
                saver.restore(sess, self.test_ckpt_model)

                # Predict
                output = self.result_info.eval(feed_dict={self.x: self.mnist_data.test.images})

                # Convert the one-hot style predictions to digits
                y_pred = np.argmax(output, axis=1)
                print("predicted value: {}".format(y_pred[: 5]))

                # True values
                y_true = np.argmax(self.mnist_data.test.labels, axis=1)
                print("True value: {}".format(y_true[: 5]))


    if __name__ == "__main__":
        # Code start time
        start_time = datetime.now()
        print("start time: {}".format(start_time))

        demo = CnnMnistTest()
        # Test with the ckpt model
        demo.do_ckpt_test()

        # Code end time
        end_time = datetime.now()
        print("End time: {}, Prediction time consuming: {}".format(end_time, end_time - start_time))
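The test script only works if `cfg.TEST.CKPT_MODEL_SAVE_PATH` matches the name that training actually produced. As a small sketch of how that name comes about: the training script builds the path from `MODEL_SAVE_PATH` and the final test accuracy, and `tf.train.Saver.save` appends `-<global_step>` to it. The helper name `checkpoint_prefix` below is made up for this illustration.

```python
def checkpoint_prefix(model_save_path, test_acc, global_step):
    """Rebuild the checkpoint prefix that the training script produces."""
    save_path = model_save_path + "acc={:.6f}".format(test_acc) + ".ckpt"
    # tf.train.Saver.save appends "-<global_step>" to the path it is given.
    return "{}-{}".format(save_path, global_step)


prefix = checkpoint_prefix("./checkpoint/model_", 0.984, 10)
print(prefix)
```

Because the accuracy is part of the file name, a new training run will usually produce a different name, and `CKPT_MODEL_SAVE_PATH` in config.py has to be updated to match before running the test script.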

(10) View the log output

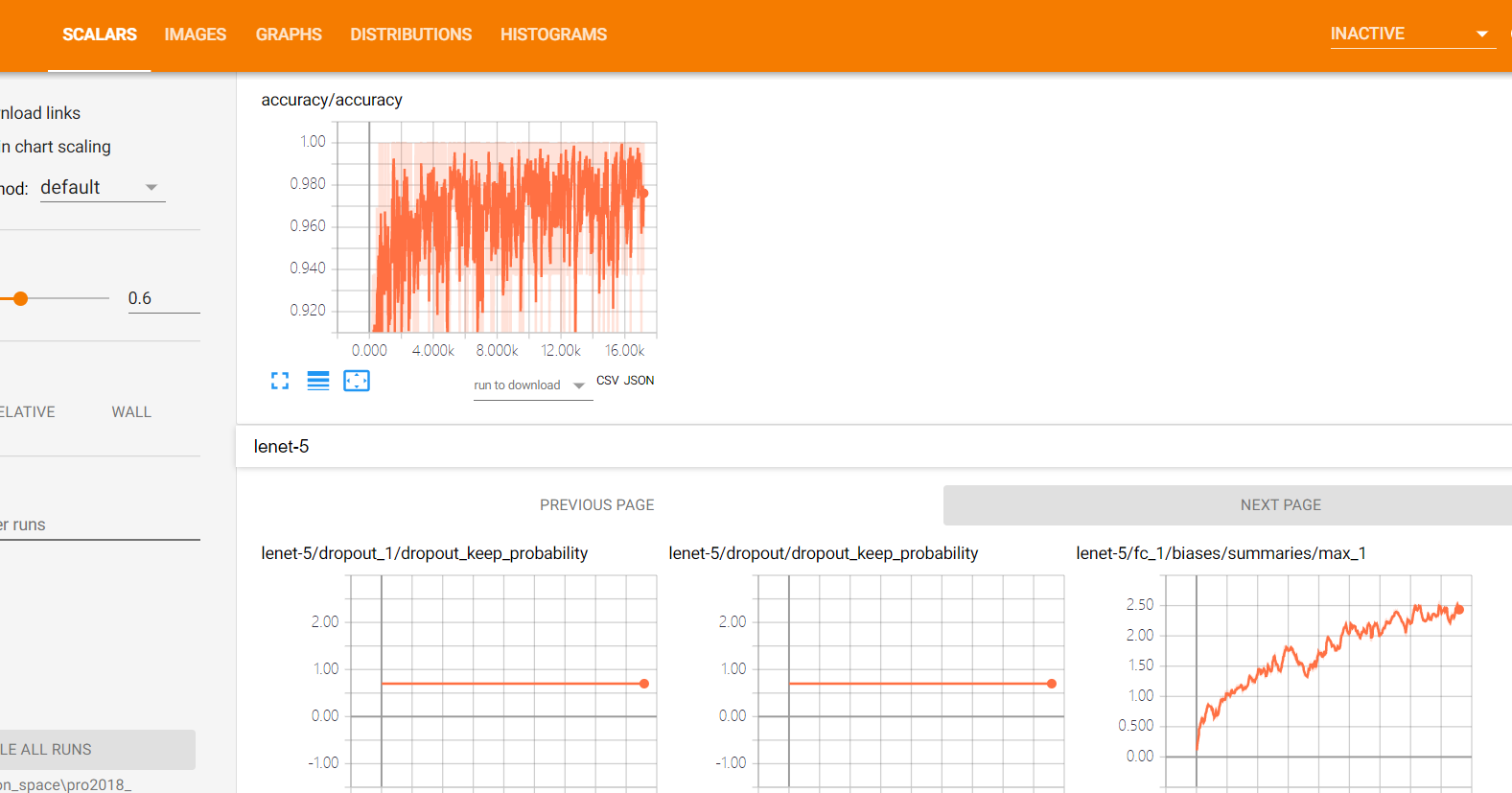

Accuracy (acc) curve:

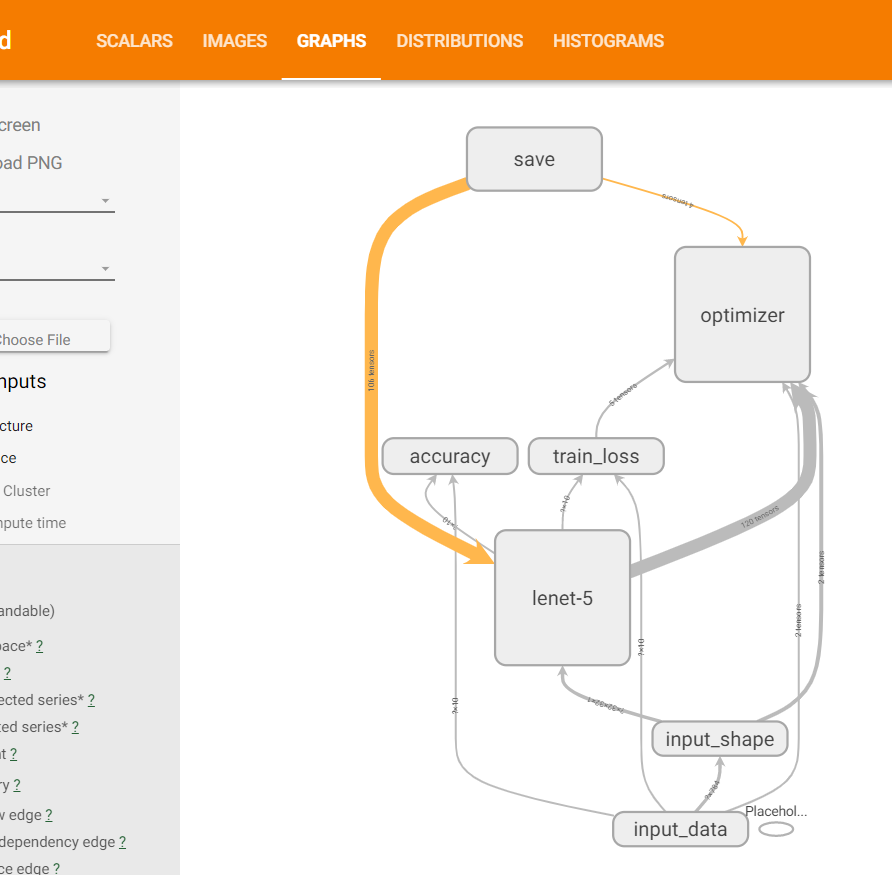

Graphs view:

In the TensorBoard graphs view, you can double-click the lenet-5 node to expand the model structure.

After opening the graphs view, you can zoom in to see the image clearly.

Comparing the DNN and CNN training runs, it is not hard to see that the CNN does better than the DNN at predicting from image data: after 10 epochs the DNN reaches only about 96% accuracy, while the CNN reaches about 98%. Of course, this is only an early-stage result and is not conclusive on its own. Still, with enough training the CNN will remain somewhat better, which is why image processing mostly uses CNNs rather than pure DNNs.
