Attention mechanism

In the Encoder-Decoder (seq2seq) section, the decoder relies on the same context vector at every time step to obtain information about the input sequence. When the encoder is a recurrent neural network, this context vector is the hidden state of its final time step. The information from the source sequence is encoded in the recurrent unit's state and then passed to the decoder, which generates the target sequence. However, this structure has problems, in particular the vanishing of gradients over long ranges in RNNs. For long sentences, we can hardly expect that converting the input sequence into a fixed-length vector will preserve all of the useful information. As the sentences to be translated get longer, the performance of this structure degrades significantly.

In addition, a target word produced during decoding may depend on only some of the input words, not on all of them. For example, when "Hello world" is translated into "Bonjour le monde", "Hello" maps to "Bonjour" and "world" maps to "monde". In the seq2seq model, the decoder can only select the relevant information implicitly, from the encoder's final state. Attention mechanisms, by contrast, model this selection explicitly.

Attention mechanism framework

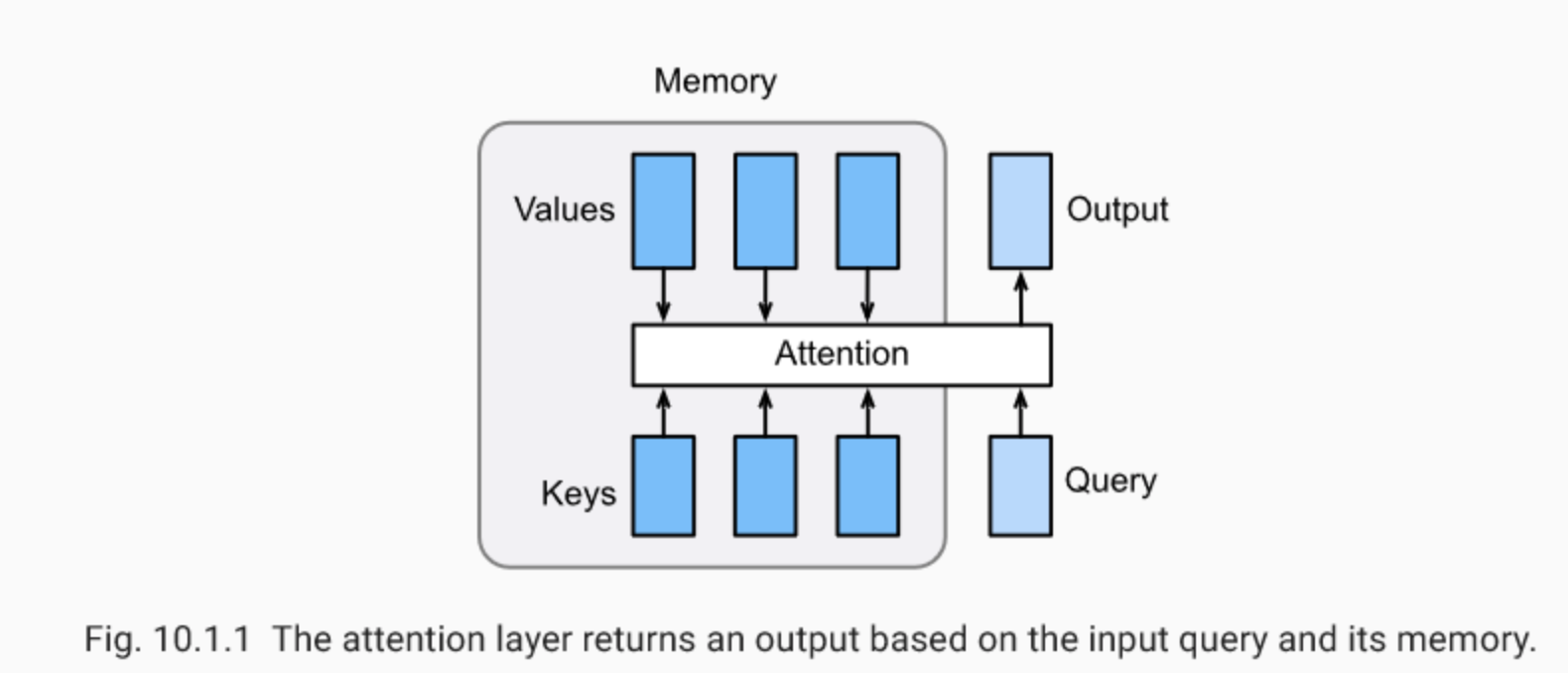

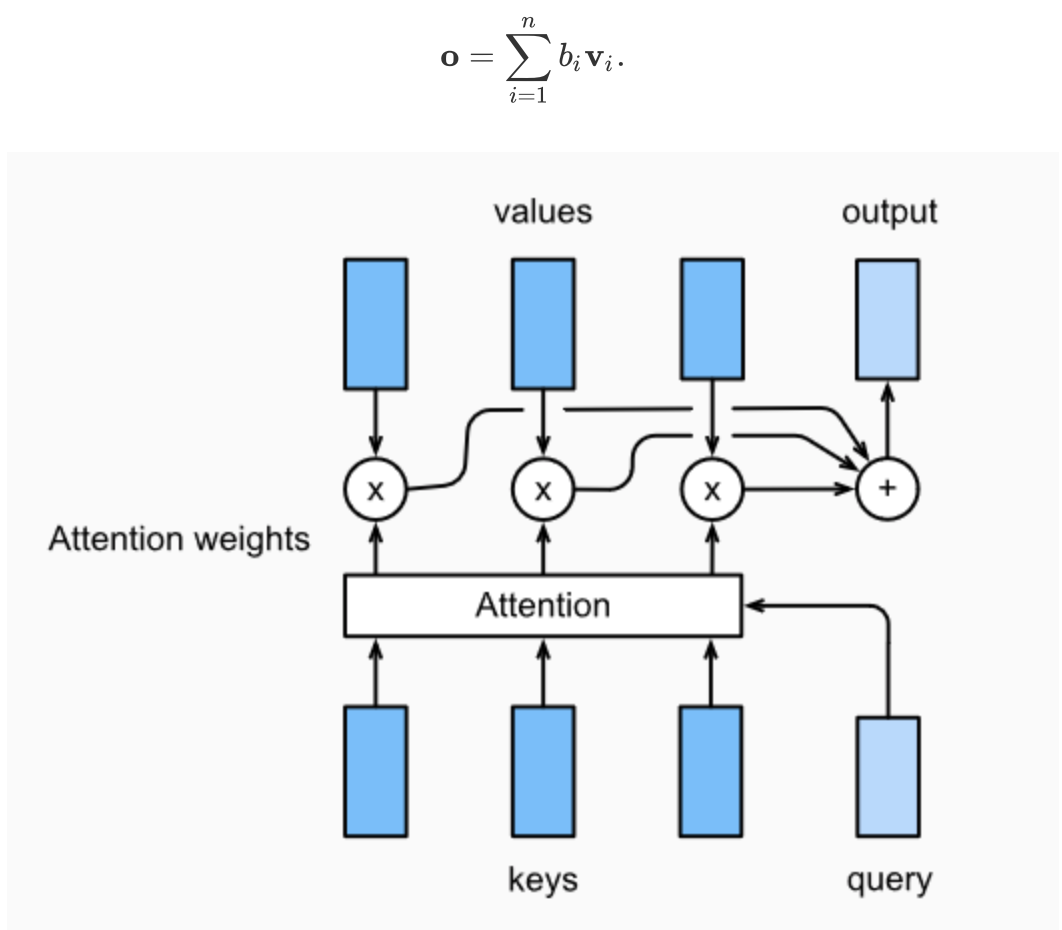

Attention is a general weighted-pooling method. Its input consists of two parts: a query and $n$ key-value pairs $(\mathbf{k}_i, \mathbf{v}_i)$, where $\mathbf{k}_i \in \mathbb{R}^{d_k}$ and $\mathbf{v}_i \in \mathbb{R}^{d_v}$. Given a query $\mathbf{q} \in \mathbb{R}^{d_q}$, the attention layer returns an output $\mathbf{o} \in \mathbb{R}^{d_v}$ with the same dimension as the values. For a query, the attention layer computes an attention score with each key and normalizes the scores into weights. The output $\mathbf{o}$ is the weighted sum of the values, and the weight computed from each key is paired one-to-one with the corresponding value.

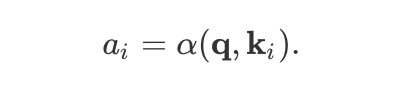

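To compute the output, we first assume a score function $\alpha$ that measures the similarity between the query and a key. We can then compute all attention scores $a_1, \ldots, a_n$ by

$$a_i = \alpha(\mathbf{q}, \mathbf{k}_i).$$

We use the softmax function to obtain the attention weights:

$$b_i = \frac{\exp(a_i)}{\sum_{j=1}^{n} \exp(a_j)}, \quad \mathbf{b} = [b_1, \ldots, b_n]^\top.$$

The final output is the weighted sum of the values:

$$\mathbf{o} = \sum_{i=1}^{n} b_i \mathbf{v}_i.$$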
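The three equations can be traced with a few tensor operations. The sketch below is a minimal single-query version, not the batched layers implemented later. The helper names `attention_pooling` and `neg_sq_dist` are hypothetical. The negative squared distance is only an illustrative choice of $\alpha$ and is not one of the score functions discussed in this section.

```python
import torch


def attention_pooling(query, keys, values, score_fn):
    """Weighted pooling o = sum_i softmax(a)_i * v_i for a single query.

    query: (d_q,), keys: (n, d_k), values: (n, d_v);
    score_fn(q, k) returns a scalar tensor (the score function alpha).
    """
    # a_i = alpha(q, k_i) for every key
    scores = torch.stack([score_fn(query, k) for k in keys])
    # b = softmax(a): non-negative weights that sum to 1
    weights = torch.softmax(scores, dim=0)
    # o = sum_i b_i v_i, a vector with the same dimension as the values
    return weights @ values, weights


# Hypothetical score function for illustration: negative squared distance
def neg_sq_dist(q, k):
    return -((q - k) ** 2).sum()


query = torch.tensor([1.0, 0.0])
keys = torch.tensor([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
values = torch.tensor([[10.0], [20.0], [30.0]])
output, weights = attention_pooling(query, keys, values, neg_sq_dist)
print(weights)
print(output)
```

The first key equals the query, so it receives the largest weight (about 0.87). The output is therefore pulled toward that key's value of 10.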

Attention layers differ from one another in their choice of score function. In the remainder of this section, we discuss two common attention layers: dot-product attention and multilayer perceptron attention. We then implement a seq2seq model with attention and train and test it on English-French translation data.

```python
import math
import torch
import torch.nn as nn
```

```python
import os

def file_name_walk(file_dir):
    for root, dirs, files in os.walk(file_dir):
        # print("root", root)  # current directory path
        print("dirs", dirs)    # all subdirectories under the current path
        print("files", files)  # all non-directory files under the current path

file_name_walk("/home/kesci/input/fraeng6506")
```

```
dirs []
files ['_about.txt', 'fra.txt']
```

Masked Softmax

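Before diving into the implementation, let's first introduce a masking operation for the softmax operator. Positions beyond each sequence's valid length are filled with a large negative value, so their weights after the softmax are effectively zero.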

```python
def SequenceMask(X, X_len, value=-1e6):
    # X: 2-D tensor of shape (rows, maxlen); X_len: valid length of each row
    maxlen = X.size(1)
    # mask[i, j] is True for positions j at or beyond the valid length of row i
    mask = torch.arange((maxlen), dtype=torch.float)[None, :] >= X_len[:, None]
    X[mask] = value  # note: modifies X in place
    return X
```

```python
def masked_softmax(X, valid_length):
    # X: 3-D tensor, valid_length: 1-D or 2-D tensor
    softmax = nn.Softmax(dim=-1)
    if valid_length is None:
        return softmax(X)
    else:
        shape = X.shape
        if valid_length.dim() == 1:
            # repeat each valid length once per query, e.g. [2, 3] -> [2, 2, 3, 3];
            # .cpu() makes the NumPy conversion work for CPU and GPU tensors alike
            valid_length = torch.FloatTensor(
                valid_length.cpu().numpy().repeat(shape[1], axis=0))
        else:
            valid_length = valid_length.reshape((-1,))
        # fill masked elements with a large negative, whose exp is 0
        X = SequenceMask(X.reshape((-1, shape[-1])), valid_length)
        return softmax(X).reshape(shape)
```

```python
masked_softmax(torch.rand((2, 2, 4), dtype=torch.float), torch.FloatTensor([2, 3]))
```

```
tensor([[[0.5423, 0.4577, 0.0000, 0.0000],
         [0.5290, 0.4710, 0.0000, 0.0000]],

        [[0.2969, 0.2966, 0.4065, 0.0000],
         [0.3607, 0.2203, 0.4190, 0.0000]]])
```
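For a 2-D score matrix, the same masking idea can be written with `Tensor.masked_fill`. Unlike `SequenceMask`, which assigns into `X` in place, `masked_fill` returns a new tensor. This is a sketch that assumes `valid_len` is an integer tensor on the same device as the scores. The name `masked_softmax_2d` is hypothetical.

```python
import torch


def masked_softmax_2d(scores, valid_len):
    """Softmax over the last axis of scores (batch, n), ignoring positions >= valid_len.

    valid_len: 1-D integer tensor of shape (batch,), on the same device as scores.
    """
    n = scores.shape[-1]
    # mask[b, j] is True where j is beyond the valid length of row b
    mask = torch.arange(n, device=scores.device)[None, :] >= valid_len[:, None]
    # a large negative value makes exp(.) underflow to 0 inside the softmax
    return torch.softmax(scores.masked_fill(mask, -1e6), dim=-1)


scores = torch.zeros(2, 4)
probs = masked_softmax_2d(scores, torch.tensor([2, 3]))
print(probs)  # rows: [0.5, 0.5, 0, 0] and [1/3, 1/3, 1/3, 0]
```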

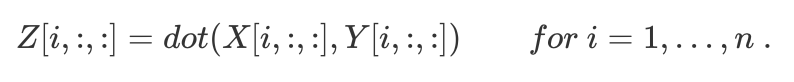

Batched Matrix Multiplication

Let X and Y be tensors of shape (b, n, m) and (b, m, k), respectively. Batched matrix multiplication computes b independent two-dimensional matrix products and yields a tensor Z of shape (b, n, k):

$$Z[i,:,:] = X[i,:,:]\,Y[i,:,:], \quad i = 1, \ldots, b.$$

```python
torch.bmm(torch.ones((2, 1, 3), dtype=torch.float), torch.ones((2, 3, 2), dtype=torch.float))
```

```
tensor([[[3., 3.]], [[3., 3.]]])
```
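To see that `torch.bmm` is just b independent matrix products, we can compare it against an explicit loop over the batch. Below is a small check on random inputs. The shapes are arbitrary.

```python
import torch

torch.manual_seed(0)
b, n, m, k = 3, 2, 4, 5
X = torch.randn(b, n, m)
Y = torch.randn(b, m, k)

Z = torch.bmm(X, Y)  # shape (b, n, k)
# The same result as b separate 2-D matrix products, one per batch element
Z_loop = torch.stack([X[i] @ Y[i] for i in range(b)])
print(Z.shape)                                # torch.Size([3, 2, 5])
print(torch.allclose(Z, Z_loop, atol=1e-6))   # True
```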

Dot product attention

Dot-product attention assumes that the query and the keys have the same dimension, i.e., $\mathbf{q}, \mathbf{k}_i \in \mathbb{R}^d$. The attention score is the inner product of the query and the key. It is usually divided by $\sqrt{d}$ to reduce the dependence of the score on the dimension $d$:

$$\alpha(\mathbf{q}, \mathbf{k}) = \frac{\langle \mathbf{q}, \mathbf{k} \rangle}{\sqrt{d}}.$$
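Here is a quick numeric check of the scaling rationale. Assume the entries of $\mathbf{q}$ and $\mathbf{k}$ are independent, each with mean 0 and variance 1. The inner product then has variance $d$, so unscaled scores grow with the dimension, while dividing by $\sqrt{d}$ keeps their variance near 1. The sample size and dimensions below are arbitrary choices for illustration.

```python
import torch

torch.manual_seed(0)
num_samples = 10000
variances = {}
for d in (4, 64, 256):
    # q and k with independent standard-normal entries (mean 0, variance 1)
    q = torch.randn(num_samples, d)
    k = torch.randn(num_samples, d)
    dots = (q * k).sum(dim=1)   # <q, k> for each sample
    scaled = dots / d ** 0.5    # <q, k> / sqrt(d)
    variances[d] = (dots.var().item(), scaled.var().item())
    print(f"d={d}: var(<q,k>)={variances[d][0]:.1f}, var(scaled)={variances[d][1]:.2f}")
```

The unscaled variance comes out close to $d$ in each case, while the scaled variance stays close to 1.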

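Assuming $\mathbf{Q} \in \mathbb{R}^{m \times d}$ contains $m$ queries and $\mathbf{K} \in \mathbb{R}^{n \times d}$ contains $n$ keys, we can compute all $mn$ scores with a single matrix operation:

$$\alpha(\mathbf{Q}, \mathbf{K}) = \frac{\mathbf{Q}\mathbf{K}^\top}{\sqrt{d}}.$$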
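The matrix form can be checked against the per-pair definition. This sketch uses plain 2-D tensors (no batch axis) with arbitrary sizes.

```python
import math
import torch

torch.manual_seed(0)
m, n, d = 3, 5, 8
Q = torch.randn(m, d)   # m queries
K = torch.randn(n, d)   # n keys

scores = Q @ K.T / math.sqrt(d)   # all m*n scores at once, shape (m, n)
# Element (i, j) should equal alpha(q_i, k_j) = <q_i, k_j> / sqrt(d)
manual = torch.tensor([[torch.dot(Q[i], K[j]).item() / math.sqrt(d) for j in range(n)]
                       for i in range(m)])
print(scores.shape)                                  # torch.Size([3, 5])
print(torch.allclose(scores, manual, atol=1e-6))     # True
```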

Now let's implement this layer, which takes a batch of queries and key-value pairs. It also applies dropout to the attention weights as regularization, randomly zeroing some of them.

```python
# Save to the d2l package.
class DotProductAttention(nn.Module):
    def __init__(self, dropout, **kwargs):
        super(DotProductAttention, self).__init__(**kwargs)
        self.dropout = nn.Dropout(dropout)

    # query: (batch_size, #queries, d)
    # key: (batch_size, #kv_pairs, d)
    # value: (batch_size, #kv_pairs, dim_v)
    # valid_length: either (batch_size, ) or (batch_size, xx)
    def forward(self, query, key, value, valid_length=None):
        d = query.shape[-1]
        # key.transpose(1, 2) swaps the last two dimensions of key
        scores = torch.bmm(query, key.transpose(1, 2)) / math.sqrt(d)
        attention_weights = self.dropout(masked_softmax(scores, valid_length))
        print("attention_weight\n", attention_weights)  # printed for illustration
        return torch.bmm(attention_weights, value)
```

test

Below, we create a batch of two examples, each with one query and 10 key-value pairs. Through valid_length, we specify that the first example attends only to the first two key-value pairs, while the second attends to the first six. As a result, the two outputs differ even though both examples have the same query and key-value pairs.

```python
atten = DotProductAttention(dropout=0)
keys = torch.ones((2, 10, 2), dtype=torch.float)
values = torch.arange((40), dtype=torch.float).view(1, 10, 4).repeat(2, 1, 1)
atten(torch.ones((2, 1, 2), dtype=torch.float), keys, values, torch.FloatTensor([2, 6]))
```

```
attention_weight
tensor([[[0.5000, 0.5000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]],
        [[0.1667, 0.1667, 0.1667, 0.1667, 0.1667, 0.1667, 0.0000, 0.0000, 0.0000, 0.0000]]])
tensor([[[ 2.0000, 3.0000, 4.0000, 5.0000]],
        [[10.0000, 11.0000, 12.0000, 13.0000]]])
```
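The printed outputs can be verified by hand. All keys are identical, so every valid position receives the same score. The weights are therefore uniform, and each output is simply the mean of the first `valid_length` rows of `values`.

```python
import torch

values = torch.arange(40, dtype=torch.float).view(10, 4)
# Uniform weights: 1/2 over the first 2 rows, 1/6 over the first 6 rows
expected = torch.stack([values[:n].mean(dim=0) for n in (2, 6)])
print(expected)  # rows: [2., 3., 4., 5.] and [10., 11., 12., 13.]
```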

Multilayer Perceptron Attention

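In multilayer perceptron (MLP) attention, we first project the query and keys into $\mathbb{R}^h$. More specifically, given learnable parameters $\mathbf{W}_k \in \mathbb{R}^{h \times d_k}$, $\mathbf{W}_q \in \mathbb{R}^{h \times d_q}$, and $\mathbf{v} \in \mathbb{R}^h$, the score function is defined as

$$\alpha(\mathbf{k}, \mathbf{q}) = \mathbf{v}^\top \tanh(\mathbf{W}_k \mathbf{k} + \mathbf{W}_q \mathbf{q}).$$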

Intuitively, this amounts to concatenating the key and the query along the feature dimension and feeding the result into a single-hidden-layer perceptron. The hidden layer has size $h$, uses the tanh activation function, and has no bias. The output layer has size 1.
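The equivalence between the formula and the concatenation view can be checked numerically. Applying one bias-free hidden layer with weight matrix $[\mathbf{W}_k, \mathbf{W}_q]$ to the concatenated vector $[\mathbf{k}; \mathbf{q}]$ gives exactly $\mathbf{W}_k\mathbf{k} + \mathbf{W}_q\mathbf{q}$. The sketch uses random parameters. In the formula, $d_q$ and $d_k$ may differ, whereas the `MLPAttention` class that follows assumes a single shared input dimension `ipt_dim`.

```python
import torch

torch.manual_seed(0)
d_q, d_k, h = 3, 4, 8
W_q = torch.randn(h, d_q)
W_k = torch.randn(h, d_k)
v = torch.randn(h)
q = torch.randn(d_q)
k = torch.randn(d_k)

# Score as written: v^T tanh(W_k k + W_q q)
score = v @ torch.tanh(W_k @ k + W_q @ q)

# Same score from one bias-free hidden layer applied to the concatenation [k; q],
# using the stacked weight matrix [W_k, W_q] of shape (h, d_k + d_q)
W_concat = torch.cat([W_k, W_q], dim=1)
score_concat = v @ torch.tanh(W_concat @ torch.cat([k, q]))
print(torch.allclose(score, score_concat, atol=1e-6))  # True
```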

```python
# Save to the d2l package.
class MLPAttention(nn.Module):
    def __init__(self, units, ipt_dim, dropout, **kwargs):
        super(MLPAttention, self).__init__(**kwargs)
        # Query and key share the input dimension ipt_dim; both are projected to `units`.
        self.W_k = nn.Linear(ipt_dim, units, bias=False)
        self.W_q = nn.Linear(ipt_dim, units, bias=False)
        self.v = nn.Linear(units, 1, bias=False)
        self.dropout = nn.Dropout(dropout)

    def forward(self, query, key, value, valid_length):
        # Project the query with W_q and the keys with W_k
        query, key = self.W_q(query), self.W_k(key)
        # expand query to (batch_size, #queries, 1, units), and key to
        # (batch_size, 1, #kv_pairs, units). Then plus them with broadcast.
        features = query.unsqueeze(2) + key.unsqueeze(1)
        # tanh is required: without it, the query term is the same for every key
        # and cancels in the softmax. Scores: (batch_size, #queries, #kv_pairs)
        scores = self.v(torch.tanh(features)).squeeze(-1)
        attention_weights = self.dropout(masked_softmax(scores, valid_length))
        return torch.bmm(attention_weights, value)
```

test

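Although MLPAttention contains an additional MLP, given the same inputs and keys we obtain the same output as with DotProductAttention. This is because all keys here are identical, so every key receives the same score and the weights are uniform over the valid positions.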

```python
atten = MLPAttention(ipt_dim=2, units=8, dropout=0)
atten(torch.ones((2, 1, 2), dtype=torch.float), keys, values, torch.FloatTensor([2, 6]))
```

```
tensor([[[ 2.0000, 3.0000, 4.0000, 5.0000]],
        [[10.0000, 11.0000, 12.0000, 13.0000]]], grad_fn=<BmmBackward>)
```

summary

- The attention layer explicitly selects relevant information.

- The memory of the attention layer consists of key-value pairs, so its output is close to the values whose keys are similar to the query.

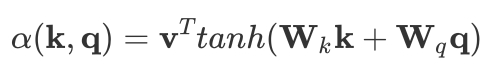

Seq2seq Model with Attention Mechanism

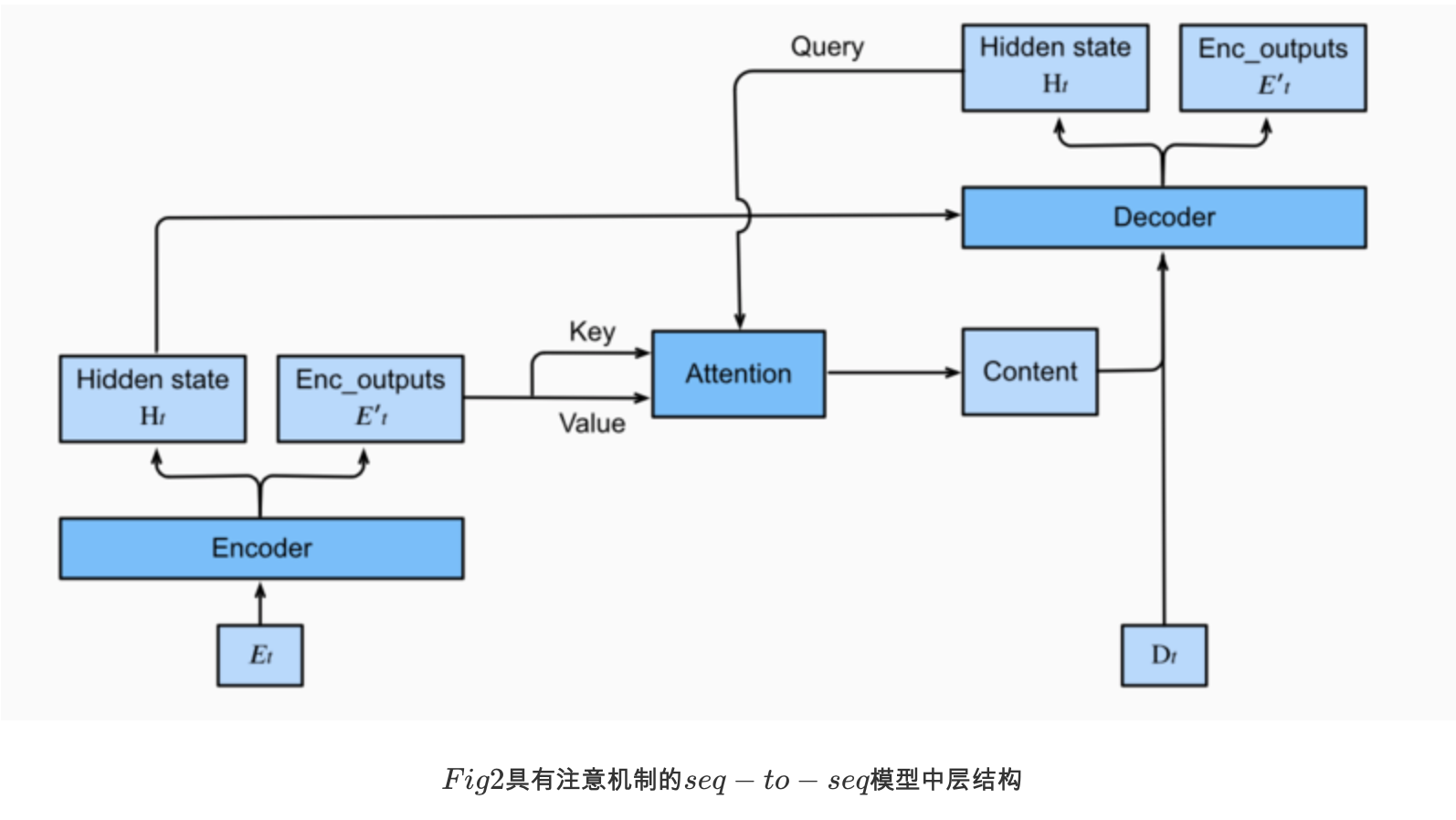

In this section, we add the attention mechanism to the sequence-to-sequence model so that it explicitly aggregates states using weights. The following figure shows the model structure for encoding and decoding at time step t. The attention layer now holds all the information the encoder has seen, namely the encoder output at every time step. During decoding, the decoder's hidden state at time step t serves as the query, and the encoder's hidden states at all time steps serve as both the keys and the values. The output of the attention layer serves as the context vector. It is concatenated with the decoder input $D_t$, and the result is fed into the decoder.
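One decoding step can be traced at the level of tensor shapes. For brevity, this sketch uses scaled dot-product scoring instead of the `MLPAttention` layer that the decoder uses. All tensors are random placeholders with assumed sizes.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
batch_size, num_steps, num_hiddens, embed_size = 2, 5, 16, 8
enc_outputs = torch.randn(batch_size, num_steps, num_hiddens)  # keys and values
dec_hidden = torch.randn(batch_size, num_hiddens)               # decoder state at step t
d_t = torch.randn(batch_size, embed_size)                       # embedded decoder input D_t

query = dec_hidden.unsqueeze(1)                                  # (batch_size, 1, num_hiddens)
# Scaled dot-product scores, used here in place of MLP attention
scores = torch.bmm(query, enc_outputs.transpose(1, 2)) / num_hiddens ** 0.5  # (batch_size, 1, num_steps)
weights = F.softmax(scores, dim=-1)
context = torch.bmm(weights, enc_outputs)                        # (batch_size, 1, num_hiddens)
# Concatenate the context vector with D_t on the feature dimension (context first)
rnn_input = torch.cat((context, d_t.unsqueeze(1)), dim=-1)       # (batch_size, 1, num_hiddens + embed_size)
print(rnn_input.shape)  # torch.Size([2, 1, 24])
```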

The following diagram shows how all the layers of the seq2seq model with attention fit together, including the layer structure of the encoder and the decoder.

```python
import sys
sys.path.append('/home/kesci/input/d2len9900')
import d2l
```

Decoder

The encoder of the seq2seq model with attention is the same as the Seq2SeqEncoder from the previous chapter, so we focus only on the decoder here. We add an MLP attention layer (MLPAttention) whose hidden size equals that of the LSTM layers in the decoder. We then initialize the decoder's state by passing it three items from the encoder:

- the encoder outputs at all time steps: used as the memory of the attention layer, with identical keys and values;
- the hidden state of the encoder's final time step: used to initialize the decoder's hidden state;
- the encoder valid length: lets the attention layer ignore the padding tokens in the encoder outputs.
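The three items can be assembled from the return values of an `nn.LSTM`. In this sketch, a bare `nn.LSTM` stands in for `d2l.Seq2SeqEncoder`. This is an assumption: the sketch relies only on the encoder returning time-major outputs plus an `(h, c)` pair. The valid lengths are made up.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
num_steps, batch_size, embed_size, num_hiddens, num_layers = 7, 4, 8, 16, 2
encoder_rnn = nn.LSTM(embed_size, num_hiddens, num_layers)  # stand-in for the encoder's RNN
embedded = torch.randn(num_steps, batch_size, embed_size)     # time-major input
outputs, (h, c) = encoder_rnn(embedded)
enc_valid_len = torch.tensor([7, 5, 3, 6])                    # hypothetical valid lengths

# The three items passed to the decoder, with outputs made batch-major
state = (outputs.permute(1, 0, 2), (h, c), enc_valid_len)
print(state[0].shape)     # torch.Size([4, 7, 16]): (batch_size, num_steps, num_hiddens)
print(state[1][0].shape)  # torch.Size([2, 4, 16]): (num_layers, batch_size, num_hiddens)
```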

At each decoding time step, the query of the attention layer is the output of the decoder's last RNN layer from the previous time step, that is, that layer's final hidden state. The output of the attention model is then concatenated with the input embedding vector and fed into the RNN layer. The RNN hidden state also carries historical information from the decoder. The attention output, however, explicitly selects encoder outputs within enc_valid_len, so the attention mechanism excludes as much irrelevant information as possible.
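The decoder code takes the query from `hidden_state[0][-1]`, the final hidden state of the last LSTM layer. For a unidirectional `nn.LSTM`, this coincides with the last layer's output at the most recent time step. The check below confirms this on random inputs. The sizes are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
num_layers, batch_size, input_size, num_hiddens = 2, 3, 5, 6
rnn = nn.LSTM(input_size, num_hiddens, num_layers)
x = torch.randn(1, batch_size, input_size)   # a single time step
out, (h, c) = rnn(x)

# Output of the last layer at this step equals the hidden state of the last layer
print(torch.allclose(out[-1], h[-1]))  # True
query = h[-1].unsqueeze(1)             # (batch_size, 1, num_hiddens), as in the decoder
print(query.shape)                     # torch.Size([3, 1, 6])
```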

```python
class Seq2SeqAttentionDecoder(d2l.Decoder):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 dropout=0, **kwargs):
        super(Seq2SeqAttentionDecoder, self).__init__(**kwargs)
        self.attention_cell = MLPAttention(num_hiddens, num_hiddens, dropout)
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.LSTM(embed_size + num_hiddens, num_hiddens, num_layers,
                           dropout=dropout)
        self.dense = nn.Linear(num_hiddens, vocab_size)

    def init_state(self, enc_outputs, enc_valid_len, *args):
        outputs, hidden_state = enc_outputs
        # Transpose outputs to (batch_size, seq_len, hidden_size)
        return (outputs.permute(1, 0, -1), hidden_state, enc_valid_len)

    def forward(self, X, state):
        enc_outputs, hidden_state, enc_valid_len = state
        # (batch_size, seq_len) -> (seq_len, batch_size, embed_size)
        X = self.embedding(X).transpose(0, 1)
        outputs = []
        for x in X:
            # query shape: (batch_size, 1, hidden_size)
            # select hidden state of the last rnn layer as query
            query = hidden_state[0][-1].unsqueeze(1)
            # context has same shape as query
            context = self.attention_cell(query, enc_outputs, enc_outputs,
                                          enc_valid_len)
            # Concatenate on the feature dimension
            x = torch.cat((context, x.unsqueeze(1)), dim=-1)
            # Reshape x to (1, batch_size, embed_size+hidden_size)
            out, hidden_state = self.rnn(x.transpose(0, 1), hidden_state)
            outputs.append(out)
        outputs = self.dense(torch.cat(outputs, dim=0))
        return outputs.transpose(0, 1), [enc_outputs, hidden_state, enc_valid_len]
```

Now we can test the seq2seq model with attention. To be consistent with the model in section 9.7, we use the same values for the hyperparameters vocab_size, embed_size, num_hiddens, and num_layers. As a result, the decoder output has the same shape as before, but the structure of the state changes.

```python
encoder = d2l.Seq2SeqEncoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
decoder = Seq2SeqAttentionDecoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
X = torch.zeros((4, 7), dtype=torch.long)
print("batch size=4\nseq_length=7\nhidden dim=16\nnum_layers=2\n")
print('encoder output size:', encoder(X)[0].size())
print('encoder hidden size:', encoder(X)[1][0].size())
print('encoder memory size:', encoder(X)[1][1].size())
state = decoder.init_state(encoder(X), None)
out, state = decoder(X, state)
out.shape, len(state), state[0].shape, len(state[1]), state[1][0].shape
```

```
batch size=4
seq_length=7
hidden dim=16
num_layers=2

encoder output size: torch.Size([7, 4, 16])
encoder hidden size: torch.Size([2, 4, 16])
encoder memory size: torch.Size([2, 4, 16])
(torch.Size([4, 7, 10]), 3, torch.Size([4, 7, 16]), 2, torch.Size([2, 4, 16]))
```

train

As in section 9.7.4, we try a toy model using the same training hyperparameters and the same training loss. The results show that the additional attention layer does not bring a significant improvement, because the sequences in the training dataset are relatively short. The attention layer also adds computational overhead on top of the encoder and decoder, which makes this model much slower than the seq2seq model without attention.

```python
import collections
import torch
from torch.utils import data


class Vocab(object):  # This class is saved in d2l.
    def __init__(self, tokens, min_freq=0, use_special_tokens=False):
        # sort by frequency and token
        counter = collections.Counter(tokens)
        token_freqs = sorted(counter.items(), key=lambda x: x[0])
        token_freqs.sort(key=lambda x: x[1], reverse=True)
        if use_special_tokens:
            # padding, begin of sentence, end of sentence, unknown
            self.pad, self.bos, self.eos, self.unk = (0, 1, 2, 3)
            tokens = ['<pad>', '<bos>', '<eos>', '<unk>']
        else:
            self.unk = 0
            tokens = ['<unk>']
        tokens += [token for token, freq in token_freqs if freq >= min_freq]
        self.idx_to_token = []
        self.token_to_idx = dict()
        for token in tokens:
            self.idx_to_token.append(token)
            self.token_to_idx[token] = len(self.idx_to_token) - 1

    def __len__(self):
        return len(self.idx_to_token)

    def __getitem__(self, tokens):
        if not isinstance(tokens, (list, tuple)):
            return self.token_to_idx.get(tokens, self.unk)
        else:
            return [self.__getitem__(token) for token in tokens]

    def to_tokens(self, indices):
        if not isinstance(indices, (list, tuple)):
            return self.idx_to_token[indices]
        else:
            return [self.idx_to_token[index] for index in indices]


def load_data_nmt(batch_size, max_len, num_examples=1000):
    """Load the NMT dataset from a local file, return its vocabularies and data iterator."""
    # Preprocess: normalize spaces and put a space before punctuation
    def preprocess_raw(text):
        text = text.replace('\u202f', ' ').replace('\xa0', ' ')
        out = ''
        for i, char in enumerate(text.lower()):
            if char in (',', '!', '.') and text[i-1] != ' ':
                out += ' '
            out += char
        return out

    with open('/home/kesci/input/fraeng6506/fra.txt', 'r') as f:
        raw_text = f.read()
    text = preprocess_raw(raw_text)

    # Tokenize
    source, target = [], []
    for i, line in enumerate(text.split('\n')):
        if i >= num_examples:
            break
        parts = line.split('\t')
        if len(parts) >= 2:
            source.append(parts[0].split(' '))
            target.append(parts[1].split(' '))

    # Build vocab
    def build_vocab(tokens):
        tokens = [token for line in tokens for token in line]
        return Vocab(tokens, min_freq=3, use_special_tokens=True)

    # Convert to index arrays
    def pad(line, max_len, padding_token):
        if len(line) > max_len:
            return line[:max_len]
        return line + [padding_token] * (max_len - len(line))

    def build_array(lines, vocab, max_len, is_source):
        lines = [vocab[line] for line in lines]
        if not is_source:
            lines = [[vocab.bos] + line + [vocab.eos] for line in lines]
        array = torch.tensor([pad(line, max_len, vocab.pad) for line in lines])
        valid_len = (array != vocab.pad).sum(1)
        return array, valid_len

    src_vocab, tgt_vocab = build_vocab(source), build_vocab(target)
    src_array, src_valid_len = build_array(source, src_vocab, max_len, True)
    tgt_array, tgt_valid_len = build_array(target, tgt_vocab, max_len, False)
    train_data = data.TensorDataset(src_array, src_valid_len, tgt_array, tgt_valid_len)
    train_iter = data.DataLoader(train_data, batch_size, shuffle=True)
    return src_vocab, tgt_vocab, train_iter
```
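The padding step and the valid-length computation in `build_array` can be exercised in isolation. The sketch copies the `pad` helper as a standalone function. The index sequences are hypothetical, and index 0 plays the role of `vocab.pad`.

```python
import torch


def pad(line, max_len, padding_token):
    """Truncate or pad a list of token indices to exactly max_len."""
    if len(line) > max_len:
        return line[:max_len]
    return line + [padding_token] * (max_len - len(line))


# Hypothetical index sequences; 0 stands in for vocab.pad
pad_idx, max_len = 0, 5
lines = [[4, 5], [6, 7, 8, 9, 10, 11]]
array = torch.tensor([pad(line, max_len, pad_idx) for line in lines])
valid_len = (array != pad_idx).sum(1)   # count of non-padding tokens per row
print(array)      # tensor([[ 4,  5,  0,  0,  0], [ 6,  7,  8,  9, 10]])
print(valid_len)  # tensor([2, 5])
```

The second line is truncated to `max_len`, so its valid length is 5 rather than 6. Lengths like these are what the masking step relies on to ignore padding.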

```python
embed_size, num_hiddens, num_layers, dropout = 32, 32, 2, 0.0
batch_size, num_steps = 64, 10
lr, num_epochs, ctx = 0.005, 500, d2l.try_gpu()

src_vocab, tgt_vocab, train_iter = load_data_nmt(batch_size, num_steps)
encoder = d2l.Seq2SeqEncoder(
    len(src_vocab), embed_size, num_hiddens, num_layers, dropout)
decoder = Seq2SeqAttentionDecoder(
    len(tgt_vocab), embed_size, num_hiddens, num_layers, dropout)
model = d2l.EncoderDecoder(encoder, decoder)
```

Training and Prediction

```python
d2l.train_s2s_ch9(model, train_iter, lr, num_epochs, ctx)
```

```
epoch 50,loss 0.104, time 54.7 sec
epoch 100,loss 0.046, time 54.8 sec
epoch 150,loss 0.031, time 54.7 sec
epoch 200,loss 0.027, time 54.3 sec
epoch 250,loss 0.025, time 54.3 sec
epoch 300,loss 0.024, time 54.4 sec
epoch 350,loss 0.024, time 54.4 sec
epoch 400,loss 0.024, time 54.5 sec
epoch 450,loss 0.023, time 54.4 sec
epoch 500,loss 0.023, time 54.7 sec
```

```python
for sentence in ['Go .', 'Good Night !', "I'm OK .", 'I won !']:
    print(sentence + ' => ' + d2l.predict_s2s_ch9(
        model, sentence, src_vocab, tgt_vocab, num_steps, ctx))
```

```
Go . => va !
Good Night ! => !
I'm OK . => ça va .
I won ! => j'ai gagné !
```