Contents:

Introduction to RBD

Common RBD Commands

RBD Configuration Operation

Mounting RBD on the Operating System

Snapshot Configuration

Exporting and Importing RBD Images

Introduction to RBD

RBD is short for RADOS Block Device. Block storage is the most stable and most commonly used Ceph storage type. An RBD block device can be mounted like a disk. RBD block devices support snapshots, multiple replicas, clones, and consistency, and their data is striped across multiple OSDs in the Ceph cluster. A snapshot records the state of an image at a point in time: for example, you take a snapshot of an image RBD1, keep writing data to it, and later roll back to the snapshot, which makes snapshots a way of taking backups. Although we write files to a block device, Ceph actually stores the data internally as objects.

RBD is the block device in Ceph. A 4 TB block device behaves like a 4 TB SATA disk, and a mapped RBD image can be used as a disk; in short, you can think of a block device as a disk.

resizable: a block device can be large or small, and it can be resized and expanded after it has been created.

data striped: inside Ceph, a block device is cut into small pieces for storage; otherwise, how could a 1 PB block device be stored? For example, a cluster with 1 PB of capacity can hold block devices whose nominal sizes add up to 55 PB.

thin-provisioned: space is allocated on demand, so a 1 TB cluster can create any number of 1 PB block devices. The nominal size of a block device is unrelated to the space it actually occupies in Ceph. A newly created block device takes up almost no space; it consumes space in Ceph only as data is written to it. For example, if a 32 GB USB drive holds a 2 GB movie, the 32 GB corresponds to the RBD image size and the 2 GB corresponds to the space actually used in Ceph.
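The gap between nominal size and space actually used can be observed on any Linux machine with a sparse file, which behaves in a similar way. This is only a local analogy and does not touch Ceph; the temporary file and the 64 MiB size are made up for illustration. On a cluster, `rbd du <image>` reports the provisioned and used sizes of an image.

```shell
#!/bin/sh
# Local analogy for thin provisioning: a sparse file has a large nominal
# size but occupies almost no disk space until data is written to it.
# (Illustrative only; nothing here talks to Ceph.)
img=$(mktemp)

# Give the file a nominal size of 64 MiB without writing any data.
truncate -s 64M "$img"

nominal_bytes=$(wc -c < "$img" | tr -d ' ')   # nominal size, like the RBD image size
used_kib=$(du -k "$img" | cut -f1)            # space actually allocated on disk

echo "nominal: $nominal_bytes bytes"
echo "used:    $used_kib KiB"

rm -f "$img"
```

On a file system that supports sparse files, the used figure stays far below the nominal one, just as a new RBD image occupies far less than its declared size.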

Block storage essentially maps a raw disk, or a raw-disk-like device such as an LVM volume, to a host, which can then format it and read and write data. Block devices are fast but do not support sharing.

Block storage is fast, but it is not designed for shared access; if it were, CephFS would not be needed. Many companies build their own services on top of Ceph block and object storage.

Ceph provides block devices through a kernel module and through the librbd library. With the kernel module, a client can map an RBD image and then format and use it just like a regular hard disk. Client applications can also access Ceph block devices through librbd; the typical example is the block storage service of a cloud platform (see the figure below). Cloud platforms can use RBD as their storage backend to provide image storage, volumes, or guest system boot disks.

Use cases:

Cloud platforms (for example, OpenStack uses RBD as a storage backend to provide image storage)

K8s containers (automatic PV provisioning)

Direct use of a mapped block device: request a block device from storage, map it to a disk that the system recognizes (for example, ls /dev shows the RBD device), and mount it locally. To share it, export it with the help of another tool, such as iSCSI. Each machine also needs the Ceph client installed so that the rbd command is available for creating block devices.

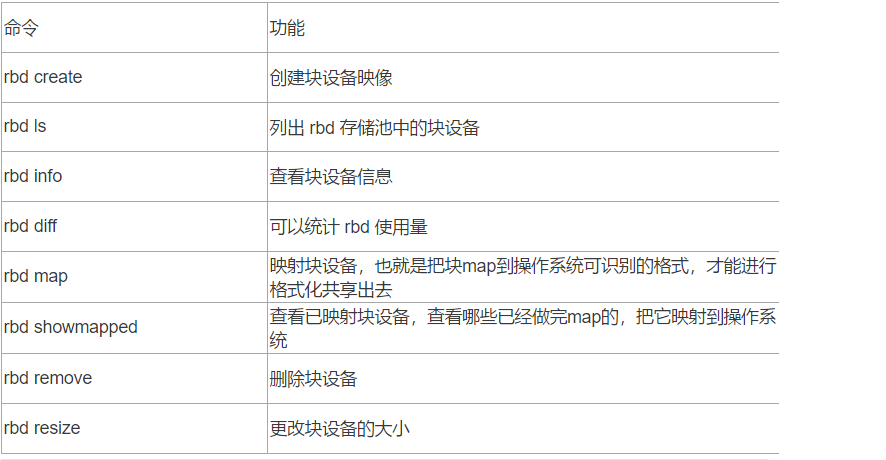

Common RBD Commands

RBD Configuration Operation

Mounting RBD on the Operating System

1. Create the pool used by RBD

When you create an RBD image, it is placed in this pool (named rbd) by default; to place it in a different pool, specify that pool's name. These commands are not specific to k8s. For example, if one of our services temporarily needs some space mounted locally for its files, you can use this method to carve space out of the storage cluster for it.

32 32: these are the PG and PGP counts, that is, the pool is created with 32 PGs and 32 PGPs. The PG count can be increased later as capacity grows. In production, plan the PG count in advance based on the number of OSDs and the amount of data.
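As a starting point for that planning, a widely used rule of thumb takes about 100 PGs per OSD, divides by the replica count, and rounds up to the next power of two. The sketch below implements only that rule of thumb; the function name `suggest_pg_num` and the sample numbers are illustrative assumptions, not part of Ceph.

```shell
#!/bin/sh
# Rule-of-thumb PG count: (OSDs * 100) / replicas, rounded up to a power of two.
# suggest_pg_num is a hypothetical helper, not a Ceph command.
suggest_pg_num() {
    osds=$1
    replicas=$2
    target=$(( osds * 100 / replicas ))
    pg=1
    # Double until the power of two reaches the target.
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggest_pg_num 3 3     # target 100, prints 128
suggest_pg_num 9 3     # target 300, prints 512
```

The result is a budget for all pools that share those OSDs, which is why a single small test pool can reasonably use a lower value such as 32.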

Once created, the new rbd pool can be seen with ceph osd lspools

#ceph osd pool create rbd 32 32

#ceph osd lspools

.rgw.root

default.rgw.control

default.rgw.meta

default.rgw.log

rbd

//View the pool details to see pg_num and pgp_num. pg_num is the number of PGs that hold the pool's objects, and pgp_num describes how those PGs are placed; the two values correspond one to one

#ceph osd pool ls detail

pool 5 'rbd' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode warn last_change 29 flags hashpspool stripe_width 0

//Mark this pool as an rbd pool

#ceph osd pool application enable rbd rbd

//Once this has been executed, you can use the rbd ls command

[root@cephnode01 my-cluster]# rbd ls

2. Create a block device image

#rbd create --size 10240 image01

3. List the block device images and view their details

#rbd ls

#rbd info image01

rbd image 'image01':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11bb8aff0615

block_name_prefix: rbd_data.11bb8aff0615

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Mon Mar 2 15:14:01 2020

access_timestamp: Mon Mar 2 15:14:01 2020

modify_timestamp: Mon Mar 2 15:14:01 2020
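The object count in this output follows directly from the image size and the order: order 22 means objects of 2^22 bytes (4 MiB), and 10240 MiB divided by 4 MiB is 2560. A minimal sketch of that arithmetic, with variable names chosen for illustration:

```shell
#!/bin/sh
# Reproduce "size 10 GiB in 2560 objects" from the image size and order.
size_mib=10240          # value passed to: rbd create --size 10240 image01
order=22                # from the info output: order 22 (4 MiB objects)

object_bytes=$(( 1 << order ))
size_bytes=$(( size_mib * 1024 * 1024 ))
# Round up so that a partial last object is still counted.
objects=$(( (size_bytes + object_bytes - 1) / object_bytes ))

echo "object size: $(( object_bytes / 1024 / 1024 )) MiB"
echo "objects:     $objects"
```

This is the maximum number of data objects the image can have; because the image is thin-provisioned, an object is only created once data is written to its range.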

//Use the rados command to view the underlying rbd

#rados -p rbd ls --all

rbd_id.image01

rbd_directory

rbd_object_map.11bb8aff0615

rbd_header.11bb8aff0615

rbd_info

4. Map the block device into the system kernel

#rbd map image01

If this command reports an error, the current system kernel does not support some of the image's features, and the following step is needed

5. Disable features not supported by the current system kernel, then remap

#rbd feature disable image01 exclusive-lock object-map fast-diff deep-flatten

Run rbd info image01 again; the details show that these features have been turned off

#rbd map image01

/dev/rbd0

//Listing the device shows that there is now one more device, /dev/rbd0

#ls /dev/rbd0

/dev/rbd0

6. Format the block device image. The device can now be used because it is mapped into the system kernel, but it must be formatted before use

#mkfs.xfs /dev/rbd0

meta-data=/dev/rbd0 isize=512 agcount=16, agsize=163840 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=2621440, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

7. Mount locally: mount the device on the system's /mnt directory, or on any other directory

#mount /dev/rbd0 /mnt

//Check the mounted file systems

#df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 36G 5.6G 30G 16% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs 1.9G 13M 1.9G 1% /run

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda1 1014M 170M 845M 17% /boot

tmpfs 378M 8.0K 378M 1% /run/user/42

tmpfs 378M 44K 378M 1% /run/user/0

/dev/sr0 4.2G 4.2G 0 100% /run/media/root/CentOS 7 x86_64

tmpfs 1.9G 52K 1.9G 1% /var/lib/ceph/osd/ceph-0

/dev/rbd0 10G 33M 10G 1% /mnt

8. Create a file under /mnt, the mount directory, as a test. Then use the rados command to see that the data is stored as RBD objects

#cd /mnt

#echo "welcome to use rbd" > test.txt

#rados -p rbd ls --all

rbd_data.11bb8aff0615.0000000000000001

rbd_data.11bb8aff0615.00000000000006e0

rbd_data.11bb8aff0615.00000000000008c0

rbd_data.11bb8aff0615.0000000000000501

rbd_data.11bb8aff0615.0000000000000140

rbd_data.11bb8aff0615.0000000000000960

rbd_id.image01

rbd_data.11bb8aff0615.0000000000000780

rbd_data.11bb8aff0615.0000000000000640

rbd_directory

rbd_data.11bb8aff0615.0000000000000820

rbd_data.11bb8aff0615.00000000000001e0

rbd_header.11bb8aff0615

rbd_info

rbd_data.11bb8aff0615.00000000000005a0

rbd_data.11bb8aff0615.00000000000009ff

rbd_data.11bb8aff0615.0000000000000280
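Each rbd_data object name ends in the object's index within the image, written as 16 hexadecimal digits. Assuming the layout shown by rbd info (4 MiB objects, no custom striping), multiplying the index by the object size gives the offset in the image that the object covers. The helper name `object_offset_mib` below is hypothetical and exists only for this illustration.

```shell
#!/bin/sh
# Map an rbd_data object name to the image offset (in MiB) that it covers.
# Assumes 4 MiB objects (order 22) and no custom striping.
object_offset_mib() {
    name=$1
    hex=${name##*.}          # text after the last dot: the hex object index
    index=$(( 0x$hex ))      # convert the hex index to decimal
    echo $(( index * 4 ))    # 4 MiB per object
}

object_offset_mib rbd_data.11bb8aff0615.0000000000000001   # prints 4
object_offset_mib rbd_data.11bb8aff0615.00000000000006e0   # prints 7040
object_offset_mib rbd_data.11bb8aff0615.00000000000009ff   # prints 10236
```

Only a handful of the 2560 possible objects appear in the listing because objects are created when their range is first written; here that is presumably the metadata laid down by mkfs.xfs across the device plus the small test file.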

//Unmount using umount

#umount /mnt

9. Unmap the block device from the kernel

View the current mappings

#rbd showmapped

id pool namespace image snap device

rbd image01 - /dev/rbd0

//Unmap the block device

#rbd unmap image01

//Viewing the image info again shows that it is still in place: data is not lost after umount and unmap; it is lost only when the block device is deleted with rm

#rbd info image01

rbd image 'image01':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11bb8aff0615

block_name_prefix: rbd_data.11bb8aff0615

format: 2

features: layering

op_features:

flags:

create_timestamp: Mon Mar 2 15:14:01 2020

access_timestamp: Mon Mar 2 15:14:01 2020

modify_timestamp: Mon Mar 2 15:14:01 2020

10. Delete the RBD block device

#rbd rm image01

This is how a local machine mounts RBD as a file system. It only works on a machine where the rbd command is available and can reach the Ceph cluster. To use the rbd command on a machine, install the Ceph client package (ceph-common) there, along with the cluster's configuration file and keyring

Snapshot Configuration

1. Create snapshots

#rbd create --size 10240 image02

#rbd snap create image02@image02_snap01

2. List the snapshots created

#rbd snap list image02

SNAPID NAME SIZE PROTECTED TIMESTAMP

image02_snap01 10 GiB Mon Mar 2 16:44:37 2020

//or

#rbd ls -l

NAME SIZE PARENT FMT PROT LOCK

image02 10 GiB 2

image02@image02_snap01 10 GiB 2

3. View snapshot details

#rbd info image02@image02_snap01

rbd image 'image02':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 1

id: 12315524dc09

block_name_prefix: rbd_data.12315524dc09

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Mon Mar 2 16:32:50 2020

access_timestamp: Mon Mar 2 16:32:50 2020

modify_timestamp: Mon Mar 2 16:32:50 2020

protected: False

4. Clone the snapshot (a snapshot must be protected before it can be cloned)

Put the snapshot into the protected state

#rbd snap protect image02@image02_snap01

Here you first need to create the kube pool

#ceph osd pool create kube 16 16

//Clone to kube/image02_clone01

#rbd clone rbd/image02@image02_snap01 kube/image02_clone01

#rbd ls -p kube

image02_clone01

5. View the children (clones) of the image's snapshots

#rbd children image02

kube/image02_clone01

6. Flatten the clone to detach it from its parent snapshot; once the relationship is removed, the clone no longer appears as a child when you look again

#rbd flatten kube/image02_clone01

Image flatten: 100% complete...done.

7. Restore a snapshot, that is, roll back. For example, if you took a snapshot when the image held a 1 GB file, you can later roll back to that snapshot to return the image to that earlier state

#rbd snap rollback image02@image02_snap01

8. Delete the snapshot

Remove the protection first, then delete the snapshot

#rbd snap unprotect image02@image02_snap01

#rbd snap remove image02@image02_snap01

View the snapshot list after deletion

#rbd snap ls image02
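The order of these snapshot operations matters: a snapshot must be protected before it can be cloned, and it cannot be unprotected while a clone still depends on it, so the clone is flattened (or deleted) first. The sketch below strings the steps from this section together. It defaults to a dry run that only prints the commands; the `run` wrapper, the `snapshot_lifecycle` function, and the `DRY_RUN` variable are assumptions made for this example, not part of Ceph.

```shell
#!/bin/sh
# Snapshot lifecycle from this section, in dependency order.
# DRY_RUN=1 (the default) prints each command instead of executing it.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

snap=rbd/image02@image02_snap01
clone=kube/image02_clone01

snapshot_lifecycle() {
    run rbd snap create "$snap"       # take the snapshot
    run rbd snap protect "$snap"      # required before cloning
    run rbd clone "$snap" "$clone"    # clone into the kube pool
    run rbd flatten "$clone"          # detach the clone from its parent
    run rbd snap unprotect "$snap"    # possible once no clone depends on it
    run rbd snap rm "$snap"           # delete the snapshot
}

snapshot_lifecycle
```

Running the script with DRY_RUN=0 on a machine with access to the cluster would execute the commands for real, so check the image and pool names first.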

9. Export and import RBD images

Export an RBD image

#rbd export image02 /tmp/image02

Exporting image: 100% complete...done.

Import an RBD image

Delete the existing image first

#rbd ls

image02

#rbd remove image02

Removing image: 100% complete...done.

Import

#rbd import /tmp/image02 rbd/image02 --image-format 2

Summary:

To use RBD with k8s, you can likewise create a block device, map it to a device the system recognizes, format it, mount it locally, and then share it through an export, for example by serving the mounted RBD over NFS

Command for expanding an RBD image: rbd --image image03 resize --size 15240

1. To try out expansion, first create a new RBD image

#rbd create --size 10240 image03

#rbd ls

2. View block storage details

#rbd info image03

3. Use rados to view the underlying RBD objects

#rados -p rbd ls

4. Disable features not supported by the current system kernel

#rbd feature disable image03 exclusive-lock object-map fast-diff deep-flatten

5. Map the image so that it becomes a device the system recognizes

#rbd map image03

6. Format the device

#mkfs.xfs /dev/rbd0

7. Mount to Local

#mount /dev/rbd0 /mnt

8. Use df -h to confirm that the disk is mounted

#df -h

9. Expand capacity

#rbd --image image03 resize --size 15240

10. View the size of the RBD image. It has been expanded to 15 GiB, but it does not immediately occupy 15 GiB; space is consumed only as it is used, because the image is thin-provisioned

#rbd info image03

rbd image 'image03':

size 15 GiB in 3810 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 134426f02050

block_name_prefix: rbd_data.134426f02050

format: 2

features: layering

op_features:

flags:

create_timestamp: Mon Mar 2 18:26:29 2020

access_timestamp: Mon Mar 2 18:26:29 2020

modify_timestamp: Mon Mar 2 18:26:29 2020
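Two details of the expansion step are easy to miss. First, --size is given in MiB, so 15240 is slightly less than 15 GiB (rbd info rounds the figure for display); a whole 15 GiB would be 15360. Second, growing the image does not by itself grow the XFS file system already mounted from it; that usually takes an additional xfs_growfs /mnt on the client. The sketch below only checks the arithmetic, with variable names picked for illustration:

```shell
#!/bin/sh
# --size is given in MiB; check what 15240 MiB means in objects and GiB.
size_mib=15240
object_mib=4                        # order 22 means 4 MiB objects

# Round up so that a partial last object is still counted.
objects=$(( (size_mib + object_mib - 1) / object_mib ))
whole_gib=$(( size_mib / 1024 ))    # complete GiB contained in the size
rest_mib=$(( size_mib % 1024 ))     # MiB left over

echo "objects: $objects"                          # 3810, as in the rbd info output
echo "size:    $whole_gib GiB + $rest_mib MiB"    # 14 GiB + 904 MiB
echo "15 GiB would be --size $(( 15 * 1024 ))"    # 15360
```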