Scene description

To form Qlik automatic sampling.

Qlik is required to automatically go to the source database to obtain data according to a configuration table (excel table) and store it as a qvd file. Data should support incremental mode (in fact, ETL work: obtain isomorphic table from source database)

In the future, we need to add and reduce isomorphic tables. We only need to add and delete records in the configuration table, and mark the extraction methods (incremental, full, etc.), which greatly reduces the workload.

program development

Theme code structure

- [Main]: system constant setting, application automatically generated, no change

- [Const]: program constant declaration, such as DB connection name, file root path name, etc

- [SubProgs]: subroutine declaration, defining some reusable blocks

- [LoadData]: sampling the main program to obtain the source database data and store it in qvd

- [CombineData]: other personalized data processing. Here is the union(concatenate) of two DB sources and tables with the same name

1, Constant declaration Const

// Global constant Set DefaultDBConn = 'LIS_NodeA'; //Default source DB library connection name Set SenseRootPath = 'lib://QlikSense '; / / stores the connection name of the root directory such as extraction log and configuration file // configuration file Set ConfigPath = '$(SenseRootPath)/Config/'; Set LogFileName = 'ATSLoadLog.qvd'; //qvd name of the log file Set DataPath = '$(SenseRootPath)/Data/'; // qvd file path of each view & Table Set ViewType = 'Interface'; // 'logs' data table type: logs / interface // Front end configuration files for processing collection expressions Set DBEffDays = 10;

2, Subroutine declaration

Define reusable subroutine block. This code can be put on the server in the form of file (txt), and application can call the block program. Especially, log can be developed for other applications

// Create log file (when log does not exist)

Sub CreateLogFile(p_ErpLogPath,p_LogFileName)

LogTable:

Load * Inline [ViewType,SourceViewName,StatusFlag,ExeTime,Comments,CreationDate];

Store LogTable Into [$(p_ErpLogPath)$(p_LogFileName)](qvd);

End Sub;

// Store table load records. Incremental data needs to be determined according to the log time

Sub StoreLogs(p_ViewType, p_DwViewName, p_ErpLogPath, p_LogFileName, p_StatusFlag, p_ThisExeTime)

Let LogComments = Replace(ScriptErrorList,chr(10),';');

ScriptErrorList = ''; // Clear error list

Let LogCreationTime = Text(Now());

LogTable:

Load * From [$(p_ErpLogPath)$(p_LogFileName)](qvd);

Concatenate (LogTable)

Load * Inline [ViewType ,SourceViewName ,StatusFlag ,ExeTime ,Comments ,CreationDate

'$(p_ViewType)','$(p_DwViewName)',$(p_StatusFlag),'$(p_ThisExeTime)','$(LogComments)','$(LogCreationTime)'];

Store LogTable Into [$(p_ErpLogPath)$(p_LogFileName)](qvd);

Drop Table LogTable;

End Sub;

// Required variables: viewtype (module name), dwviewname (table name to be stored), ErpQvdPath(qvd save path), qvdname (save name)

// Erplogpath, logfilename, thisexetime

// Optional variable: PreviousErrorCount the last error count when traversing multiple

Sub StoreQvd(p_ViewType, p_DwViewName, p_ErpQvdPath, p_QvdName, p_PreviousErrorCount, p_ErpLogPath, p_LogFileName, p_ThisExeTime)

// Save Qvd file & write log file

Let LogNotExists = IsNull(FileTime('$(p_ErpLogPath)$(p_LogFileName)'));

If $(LogNotExists) = -1 Then

LogTable:

Load * Inline [ViewType,SourceViewName,StatusFlag,ExeTime,Comments,CreationDate];

Store LogTable Into [$(p_ErpLogPath)$(p_LogFileName)](qvd);

Drop Table LogTable;

End If;

trace 'ScriptErrorCount=$(ScriptErrorCount)';

trace 'p_PreviousErrorCount=$(p_PreviousErrorCount)';

If (If(IsNull($(p_PreviousErrorCount)), $(ScriptErrorCount), $(p_PreviousErrorCount)-$(ScriptErrorCount))) = 0 Then

Store $(p_DwViewName) into [$(p_ErpQvdPath)$(p_QvdName).qvd](qvd);

Call StoreLogs('$(p_ViewType)', '$(p_DwViewName)', '$(p_ErpLogPath)', '$(p_LogFileName)', 'S', '$(p_ThisExeTime)');

Else

Call StoreLogs('$(p_ViewType)', '$(p_DwViewName)', '$(p_ErpLogPath)', '$(p_LogFileName)', 'E', '$(p_ThisExeTime)');

End If;

End Sub;

3, Extract data main code (key)

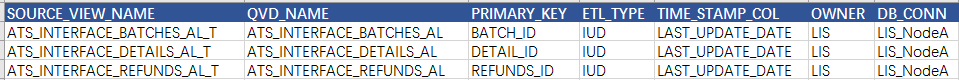

When extracting data, read the excel table, which records the tables / views to be extracted and the corresponding extraction methods. The contents are as follows

- Source [view] name: the name of the table / view to be extracted (go to the source library and execute the select statement according to the name, so the name must not be wrong)

- qvd_name: the name of the file when it is stored as a qvd file

- primary_key: the primary key of the table, which is required for incremental query

- etl_type: extraction method

- IUD: when the original table record has the operations of insert, update and delete, extract by IUD

- IU: when the original table record is only added (Insert) or updated (Update), use IU to extract (the table record will not be deleted)

- I: when the original table record is only added (Insert), select by [i]

- Time stamp col: a time stamp field. It is required for incremental update. The data change time is determined by the time stamp to determine the incremental record

- owner: the db user to which the table / view belongs

- db_conn: database connection name defined in sense

After the connection name is configured in the sense editor, you can write the code to ETL the data

// Read excel table configuration

SourceViews:

LOAD

SOURCE_VIEW_NAME,

QVD_NAME,

PRIMARY_KEY,

ETL_TYPE,

TIME_STAMP_COL,

OWNER,

DB_CONN

FROM [$(ConfigPath)ATS_Tables.xlsx]

(ooxml, embedded labels, table is Intf);

Let SourceViewsCnt = NoOfRows('SourceViews');

Trace '==> DefaultDBConn = $(DefaultDBConn)';

// Load DB data

Let DBConn = '$(DefaultDBConn)'; // Configure default DB connection

LIB CONNECT TO [$(DBConn)];

For i = 0 to $(SourceViewsCnt)-1

Let RecordErrorCount = ScriptErrorCount;

// Let LogComments = 'Success';

//Begin: get basic data information

Let Owner = peek('OWNER',i,'SourceViews')&'.';

Let DwViewName = peek('SOURCE_VIEW_NAME',i,'SourceViews');

Let PrimaryKey = peek('PRIMARY_KEY',i,'SourceViews');

Let QvdName = peek('QVD_NAME',i,'SourceViews');

Let EtlType = 'ALL';//peek('ETL_TYPE',i,'SourceViews');

Let TimeStampCol = peek('TIME_STAMP_COL',i,'SourceViews');

Let ViewDbConn = peek('DB_CONN',i,'SourceViews');

If '$(DBConn)' = '$(ViewDbConn)' Then

Trace '==> DB Connection is $(DBConn)';

Else

DisConnect;

Let DBConn = '$(ViewDbConn)';

LIB CONNECT TO [$(DBConn)];

End If;

Trace ' => DwViewName = $(DwViewName); EtlType = $(EtlType)';

Let QvdNotExist = IsNull(QvdCreateTime('$(DataPath)'&'$(QvdName)'&'.qvd'));

Let LogNotExist = IsNull(QvdCreateTime('$(DataPath)'&'$(LogFileName)'));

If $(LogNotExist) = -1 Then

Call CreateLogFile('$(DataPath)','$(LogFileName)');

End If;

//End: get basic data information

Trace ' => QvdNotExist = $(QvdNotExist)';

//Begin: determine the start and end time of data

LastExeTime:

Load Text(Date(Max(ExeTime),'YYYY/MM/DD hh:mm:ss')) AS ExeTime From [$(DataPath)$(LogFileName)](qvd)

Where SourceViewName = '$(DwViewName)'

And StatusFlag = 'S';

Let LastExeTime = If(IsNull(FieldValue('ExeTime',1)),'1990/01/01 00:00:00',Text(FieldValue('ExeTime',1)));

Let ThisExeTime = Text(Now());

Drop Table LastExeTime;

//Begin: determine the start and end time of data

Let NowTime = Text(Now());

Trace '==> Processing table $(DwViewName) start time $(NowTime) ';

//Begin judges the total amount of data increment according to the configuration

If $(QvdNotExist) = 0 Then

If (EtlType = 'IUD') Then

$(DwViewName):

Select p.*

From $(Owner)$(DwViewName) p

,(Select $(PrimaryKey)

From $(Owner)$(DwViewName)

Where $(TimeStampCol) > TO_DATE('$(LastExeTime)','YYYY/MM/DD HH24:MI:SS')

And $(TimeStampCol) <= TO_DATE('$(ThisExeTime)','YYYY/MM/DD HH24:MI:SS')) temp

Where p.$(PrimaryKey) = temp.$(PrimaryKey);

Concatenate ($(DwViewName))

Load *

From [$(DataPath)$(QvdName).qvd](qvd)

Where Not Exists ($(PrimaryKey));

Inner Join ($(DwViewName))

Select $(PrimaryKey)

From $(Owner)$(DwViewName);

ElseIf (EtlType = 'IU') Then

$(DwViewName):

Select p.*

From $(Owner)$(DwViewName) p

,(Select $(PrimaryKey)

From $(Owner)$(DwViewName)

Where $(TimeStampCol) > TO_DATE('$(LastExeTime)','YYYY/MM/DD HH24:MI:SS')

And $(TimeStampCol) <= TO_DATE('$(ThisExeTime)','YYYY/MM/DD HH24:MI:SS')) temp

Where p.$(PrimaryKey) = temp.$(PrimaryKey);

Concatenate ($(DwViewName))

Load *

From [$(DataPath)$(QvdName).qvd](qvd)

Where Not Exists ($(PrimaryKey));

ElseIf (EtlType = 'I') Then

$(DwViewName):

Select p.*

From $(Owner)$(DwViewName) p

,(Select $(PrimaryKey)

From $(Owner)$(DwViewName)

Where $(TimeStampCol) > TO_DATE('$(LastExeTime)','YYYY/MM/DD HH24:MI:SS')

And $(TimeStampCol) <= TO_DATE('$(ThisExeTime)','YYYY/MM/DD HH24:MI:SS')) temp

Where p.$(PrimaryKey) = temp.$(PrimaryKey);

Concatenate ($(DwViewName))

Load *

From [$(DataPath)$(QvdName).qvd](qvd);

ElseIf (EtlType = 'ALL') Then

$(DwViewName):

Select *

From $(Owner)$(DwViewName);

End If;

Else

$(DwViewName):

Select *

From $(Owner)$(DwViewName);

End If;

//End makes full judgment of data increment according to configuration

Let NowTime = Text(Now());

Trace '==> Processing table $(DwViewName) End time $(NowTime) ';

// Save data to qvd & write log

Call StoreQvd('$(ViewType)', '$(DwViewName)', '$(DataPath)', '$(QvdName)', '$(RecordErrorCount)', '$(DataPath)', '$(LogFileName)', '$(ThisExeTime)');

Drop Table $(DwViewName);

Next i;

Set ErrorMode = 1;

Drop Table SourceViews;

//End: Load Data

DisConnect;

4, Data consolidation (personalized, negligible)

The code corresponds to as like as two peas in the scene, which are N data sources (DB). The table structure of some tables is exactly the same in each source library, but the table name difference (business is the different sub categories of the same business, and the background table is divided into libraries). Use code for automatic union to form a large table.

Set filePrefixSet = 'ATS_INTERFACE_BATCHES,ATS_INTERFACE_DETAILS,ATS_INTERFACE_REFUNDS';

Set ViewType = 'QlikInterface';

Let fileTypeCnt = SubStringCount('$(filePrefixSet)',',') + 1;

For fileTypeIdx = 1 to fileTypeCnt

Let filePrefix = SubField('$(filePrefixSet)',',',fileTypeIdx);

Let prefixLen = Len('$(DataPath)')+Len('$(filePrefix)')+2;

Set fileCnt = 0;

For Each file in FileList('$(DataPath)'&'$(filePrefix)'&'_*.qvd')

Let fileCnt = fileCnt + 1;

Let srcSysIdf = Replace(Mid('$(file)',prefixLen),'.qvd','');

If fileCnt = 1 Then

$(filePrefix):

Load '$(srcSysIdf)' AS SRC_SYS_IDF,*

From [$(file)](qvd);

Else

Concatenate ($(filePrefix))

Load '$(srcSysIdf)' AS SRC_SYS_IDF,*

From [$(file)](qvd);

End If;

Next

Store $(filePrefix) Into [$(DataPath)$(filePrefix).qvd](qvd);

Drop Table $(filePrefix);

Next