1, Cache overview

The MyBatis cache system follows the single-responsibility principle, the open-closed principle, and a high degree of decoupling. It is elegantly designed and cleanly layered, and its ideas carry over to many similar business scenarios. This article gives a brief analysis of the main parts of the caching system. Studying it also lets us explore two practical patterns: dispatching work through a chain of responsibility, and avoiding infinite loading loops when objects depend on each other circularly.

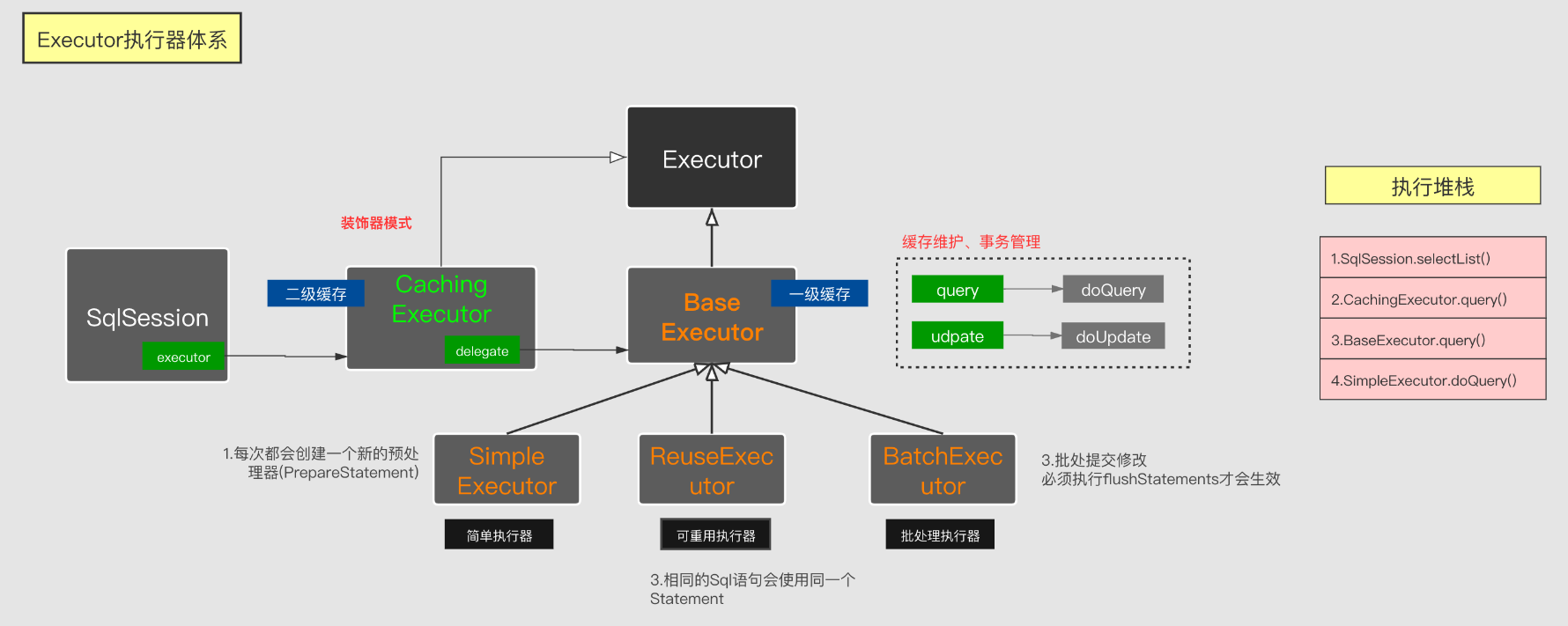

Let's start with the diagram of the execution architecture from the previous part:

Comparing against this execution diagram, it is easy to see that a MyBatis query call flows through SqlSession -> SimpleExecutor / ReuseExecutor / BatchExecutor -> JDBC, and that caching is a layer of request-intercepting logic between SqlSession and Executor. At a macro level this is easy to understand. CachingExecutor is a decorator placed in front of BaseExecutor, and the behavior it adds is to check whether the cache is hit. On a hit, the call is not dispatched to BaseExecutor at all.

    public class CachingExecutor implements Executor {
      // The wrapped BaseExecutor
      private final Executor delegate;
      // Staging area for second-level cache writes (field of the real class, left out of the original excerpt)
      private final TransactionalCacheManager tcm = new TransactionalCacheManager();

      public CachingExecutor(Executor delegate) {
        this.delegate = delegate;
        delegate.setExecutorWrapper(this);
      }

      @Override
      public <E> List<E> query(MappedStatement ms, Object parameterObject, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql)
          throws SQLException {
        Cache cache = ms.getCache();
        if (cache != null) {
          flushCacheIfRequired(ms);
          if (ms.isUseCache() && resultHandler == null) {
            ensureNoOutParams(ms, boundSql);
            List<E> list = (List<E>) tcm.getObject(cache, key);
            if (list == null) {
              // Second-level cache miss: dispatch to the BaseExecutor, then stage the result
              list = delegate.query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);
              tcm.putObject(cache, key, list); // issue #578 and #116
            }
            return list;
          }
        }
        // No second-level cache applies to this statement: dispatch straight to the BaseExecutor
        return delegate.query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);
      }
    }

From this point of view, a MyBatis query first tries to hit the second-level cache in CachingExecutor. If that misses, the call is dispatched to BaseExecutor, which then tries the first-level cache. Since the first-level cache is the simpler of the two, let's look at it first.

2, First level cache overview

As mentioned in the earlier part on executors, a MyBatis Executor and its SqlSession are in a one-to-one relationship:

    public class DefaultSqlSession implements SqlSession {
      // ...
      private final Executor executor;
      // ...
    }

Each executor holds a member variable that serves as the cache container:

    public abstract class BaseExecutor implements Executor {
      // ...
      protected PerpetualCache localCache;
      // ...
    }

In other words, once the SqlSession is closed and the object destroyed, its BaseExecutor is destroyed too, and the first-level cache container goes with it. It follows that the first-level cache is a SqlSession-level cache: to hit it, the query must go through the same SqlSession, and that session must still be open.

Let's see how the first-level cache is read and populated:

     1  public abstract class BaseExecutor implements Executor {
     2    protected PerpetualCache localCache;
     3    @Override
     4    public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler) throws SQLException {
     5      BoundSql boundSql = ms.getBoundSql(parameter);
     6      CacheKey key = createCacheKey(ms, parameter, rowBounds, boundSql);
     7      return query(ms, parameter, rowBounds, resultHandler, key, boundSql);
     8    }
     9    @Override
    10    public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {
    11      ErrorContext.instance().resource(ms.getResource()).activity("executing a query").object(ms.getId());
    12      if (closed) {
    13        throw new ExecutorException("Executor was closed.");
    14      }
    15      if (queryStack == 0 && ms.isFlushCacheRequired()) {
    16        clearLocalCache();
    17      }
    18      List<E> list;
    19      try {
    20        queryStack++;
    21        list = resultHandler == null ? (List<E>) localCache.getObject(key) : null;
    22        if (list != null) {
    23          handleLocallyCachedOutputParameters(ms, key, parameter, boundSql);
    24        } else {
    25          list = queryFromDatabase(ms, parameter, rowBounds, resultHandler, key, boundSql);
    26        }
    27      } finally {
    28        queryStack--;
    29      }
    30      if (queryStack == 0) {
    31        for (DeferredLoad deferredLoad : deferredLoads) {
    32          deferredLoad.load();
    33        }
    34        // issue #601
    35        deferredLoads.clear();
    36        if (configuration.getLocalCacheScope() == LocalCacheScope.STATEMENT) {
    37          // issue #482
    38          clearLocalCache();
    39        }
    40      }
    41      return list;
    42    }
    43  }

From this source code you can see that the cache key is built at line 6 and the cache lookup is attempted at line 21. The cache key depends on four things: MappedStatement (the same statementId), parameter (the same query parameters), RowBounds (the same row range), and BoundSql (the same SQL). Adding the SqlSession condition from above, the conditions for hitting the first-level cache are: the same SqlSession, statementId, parameters, row range, and SQL.
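
To make these hit conditions concrete, here is a minimal sketch of a composite key in plain Java. `SimpleCacheKey`, its fields, and `CacheKeyDemo` are hypothetical names used only for illustration; the real MyBatis `CacheKey` is built differently, so treat this as a model of the idea rather than the library's implementation.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Hypothetical, simplified stand-in for a first-level cache key (not MyBatis's CacheKey).
final class SimpleCacheKey {
    private final String statementId;
    private final int offset;
    private final int limit;
    private final String sql;
    private final List<Object> parameters;

    SimpleCacheKey(String statementId, int offset, int limit, String sql, Object... parameters) {
        this.statementId = statementId;
        this.offset = offset;
        this.limit = limit;
        this.sql = sql;
        this.parameters = Arrays.asList(parameters);
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof SimpleCacheKey)) return false;
        SimpleCacheKey other = (SimpleCacheKey) o;
        // Two keys match only if every dimension matches.
        return offset == other.offset
            && limit == other.limit
            && statementId.equals(other.statementId)
            && sql.equals(other.sql)
            && parameters.equals(other.parameters);
    }

    @Override
    public int hashCode() {
        return Objects.hash(statementId, offset, limit, sql, parameters);
    }
}

class CacheKeyDemo {
    public static void main(String[] args) {
        // The map plays the role of one session's local cache.
        Map<SimpleCacheKey, String> localCache = new HashMap<>();
        String sql = "select * from teacher where id = ?";
        localCache.put(new SimpleCacheKey("selectHeadmasterById", 0, 100, sql, 1), "teacher#1");

        // Same statement, row range, SQL and parameter: hit (prints true).
        System.out.println(localCache.containsKey(
            new SimpleCacheKey("selectHeadmasterById", 0, 100, sql, 1)));
        // Different parameter: miss (prints false).
        System.out.println(localCache.containsKey(
            new SimpleCacheKey("selectHeadmasterById", 0, 100, sql, 2)));
    }
}
```

Because the map lives inside one session object, "same SqlSession" is modeled simply by using the same map instance.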

A short digression: when MyBatis is integrated with Spring, management of the SqlSession is handed over to the Spring framework. For every MyBatis query, Spring creates a new SqlSession, so it looks as if the first-level cache can never take effect. The solution is to run the queries inside a transaction. Within a transaction, Spring makes sure MyBatis is always given the same SqlSession object.

Next, let's look at when the first-level cache is cleared, again from the source code:

     1  public abstract class BaseExecutor implements Executor {
     2    protected PerpetualCache localCache;
     3    @Override
     4    public void close(boolean forceRollback) {
     5      try {
     6        try {
     7          rollback(forceRollback);
     8        } finally {
     9          if (transaction != null) {
    10            transaction.close();
    11          }
    12        }
    13      } catch (SQLException e) {
    14        log.warn("Unexpected exception on closing transaction. Cause: " + e);
    15      } finally {
    16        transaction = null;
    17        deferredLoads = null;
    18        localCache = null;
    19        localOutputParameterCache = null;
    20        closed = true;
    21      }
    22    }
    23    @Override
    24    public int update(MappedStatement ms, Object parameter) throws SQLException {
    25      ErrorContext.instance().resource(ms.getResource()).activity("executing an update").object(ms.getId());
    26      if (closed) {
    27        throw new ExecutorException("Executor was closed.");
    28      }
    29      clearLocalCache();
    30      return doUpdate(ms, parameter);
    31    }
    32    @Override
    33    public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {
    34      if (queryStack == 0 && ms.isFlushCacheRequired()) {
    35        clearLocalCache();
    36      }
    37      if (configuration.getLocalCacheScope() == LocalCacheScope.STATEMENT) {
    38        clearLocalCache();
    39      }
    40    }
    41    @Override
    42    public void commit(boolean required) throws SQLException {
    43      clearLocalCache();
    44    }
    45
    46    @Override
    47    public void rollback(boolean required) throws SQLException {
    48      if (!closed) {
    49        try {
    50          clearLocalCache();
    51          flushStatements(true);
    52        } finally {
    53          if (required) {
    54            transaction.rollback();
    55          }
    56        }
    57      }
    58    }
    59
    60    @Override
    61    public void clearLocalCache() {
    62      if (!closed) {
    63        localCache.clear();
    64        localOutputParameterCache.clear();
    65      }
    66    }
    67  }

In this source code (an abridged excerpt), the thing to focus on is where clearLocalCache is called:

The first-level cache is cleared by an update operation (line 29), by a statement configured with flushCache=true (line 35), by a local cache scope of STATEMENT (line 38), by commit (line 43), by rollback (line 50), and by closing the executor (line 7, which calls rollback).
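
The clearing rules can be modeled with a small toy session. This is only a sketch under simplifying assumptions: `ToySession`, `databaseHits`, and the in-memory `database` map are hypothetical and stand in for the executor, and only the "a write or commit clears the local cache" behavior is modeled.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical toy session: models only when the local cache is cleared, not MyBatis itself.
class ToySession {
    private final Map<Integer, String> database;
    private final Map<Integer, String> localCache = new HashMap<>();
    private boolean closed;
    int databaseHits; // how many reads actually reached the "database"

    ToySession(Map<Integer, String> database) {
        this.database = database;
    }

    String query(int id) {
        if (closed) throw new IllegalStateException("Session was closed.");
        if (localCache.containsKey(id)) {
            return localCache.get(id); // served from the local cache
        }
        databaseHits++;
        String value = database.get(id);
        localCache.put(id, value);
        return value;
    }

    void update(int id, String value) {
        if (closed) throw new IllegalStateException("Session was closed.");
        localCache.clear(); // any write invalidates the whole local cache
        database.put(id, value);
    }

    void commit() {
        localCache.clear();
    }

    void close() {
        localCache.clear();
        closed = true;
    }
}

class ToySessionDemo {
    public static void main(String[] args) {
        Map<Integer, String> db = new HashMap<>();
        db.put(1, "Alice");
        ToySession session = new ToySession(db);

        session.query(1);
        session.query(1);                         // second read is a cache hit
        System.out.println(session.databaseHits); // 1

        session.update(1, "Bob");                 // clears the cache
        System.out.println(session.query(1));     // Bob
        System.out.println(session.databaseHits); // 2
        session.close();
    }
}
```

Clearing the whole cache on every write is coarse, but it keeps the invalidation logic trivial and correct, which matches the behavior visible in the excerpt above.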

3, How the first-level cache resolves circular dependencies in nested subqueries

Circular dependencies are everywhere. For example, a head teacher is in charge of a class with many students, and each student in turn has a corresponding head teacher.

For head teachers and students, this is a typical nested query in MyBatis. When MyBatis processes a nested query, it runs the outer query and then, while setting properties on the result, issues a subquery for any property mapped to one. Without special handling, this scenario would fall into an infinite loop of property assignments triggering queries.

    <select id="selectHeadmasterById" resultMap="teacherMap">
      select * from teacher where id = #{id}
    </select>
    <resultMap id="teacherMap" type="Teacher" autoMapping="true">
      <result column="name" property="name"/>
      <collection property="students" column="id" select="selectStudentsByTeacherId" fetchType="eager"/>
    </resultMap>
    <select id="selectStudentsByTeacherId" resultMap="studentMap">
      select * from student where teacher_id = #{teacherId}
    </select>
    <resultMap id="studentMap" type="Student">
      <association property="teacher" column="teacher_id" select="selectHeadmasterById" fetchType="eager"/>
    </resultMap>

MyBatis handles this case cleverly by combining a temporary placeholder in the first-level cache with deferred loading (which is not the same thing as lazy loading) to break the infinite query loop. Let's go straight to the source code:

For each query, if MyBatis does not find a real (non-placeholder) entry in the cache, it writes a placeholder into the first-level cache before querying. Once the database query completes, the placeholder is replaced with the real data.

     1  public abstract class BaseExecutor implements Executor {
     2    protected ConcurrentLinkedQueue<DeferredLoad> deferredLoads;
     3    protected PerpetualCache localCache;
     4    protected int queryStack;
     5    @Override
     6    public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {
     7      ErrorContext.instance().resource(ms.getResource()).activity("executing a query").object(ms.getId());
     8      if (closed) {
     9        throw new ExecutorException("Executor was closed.");
    10      }
    11      if (queryStack == 0 && ms.isFlushCacheRequired()) {
    12        clearLocalCache();
    13      }
    14      List<E> list;
    15      try {
    16        queryStack++;
    17        list = resultHandler == null ? (List<E>) localCache.getObject(key) : null;
    18        if (list != null) {
    19          handleLocallyCachedOutputParameters(ms, key, parameter, boundSql);
    20        } else {
    21          // Cache miss: query the database
    22          list = queryFromDatabase(ms, parameter, rowBounds, resultHandler, key, boundSql);
    23        }
    24      } finally {
    25        queryStack--;
    26      }
    27      if (queryStack == 0) {
    28        for (DeferredLoad deferredLoad : deferredLoads) {
    29          deferredLoad.load();
    30        }
    31        deferredLoads.clear();
    32        if (configuration.getLocalCacheScope() == LocalCacheScope.STATEMENT) {
    33          clearLocalCache();
    34        }
    35      }
    36      return list;
    37    }
    38  }

Line 22 of the query method above calls queryFromDatabase:

    private <E> List<E> queryFromDatabase(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {
      List<E> list;
      // Write the placeholder into the cache before querying the database
      localCache.putObject(key, EXECUTION_PLACEHOLDER);
      try {
        list = doQuery(ms, parameter, rowBounds, resultHandler, boundSql);
      } finally {
        localCache.removeObject(key);
      }
      // Replace the placeholder with the real result
      localCache.putObject(key, list);
      if (ms.getStatementType() == StatementType.CALLABLE) {
        localOutputParameterCache.putObject(key, parameter);
      }
      return list;
    }

BaseExecutor.queryFromDatabase triggers the database query and then enters the logic that sets values on the result. That logic first checks whether a property is mapped to a nested subquery. If it is, the outer query pauses at that point and the subquery is started immediately.

     1  private Object getNestedQueryMappingValue(ResultSet rs, MetaObject metaResultObject, ResultMapping propertyMapping, ResultLoaderMap lazyLoader, String columnPrefix)
     2      throws SQLException {
     3    final String nestedQueryId = propertyMapping.getNestedQueryId();
     4    final String property = propertyMapping.getProperty();
     5    final MappedStatement nestedQuery = configuration.getMappedStatement(nestedQueryId);
     6    final Class<?> nestedQueryParameterType = nestedQuery.getParameterMap().getType();
     7    final Object nestedQueryParameterObject = prepareParameterForNestedQuery(rs, propertyMapping, nestedQueryParameterType, columnPrefix);
     8    Object value = null;
     9    if (nestedQueryParameterObject != null) {
    10      final BoundSql nestedBoundSql = nestedQuery.getBoundSql(nestedQueryParameterObject);
    11      final CacheKey key = executor.createCacheKey(nestedQuery, nestedQueryParameterObject, RowBounds.DEFAULT, nestedBoundSql);
    12      final Class<?> targetType = propertyMapping.getJavaType();
    13      // Is this subquery the same as an enclosing query (real entry or placeholder already in the cache)?
    14      if (executor.isCached(nestedQuery, key)) {
    15        executor.deferLoad(nestedQuery, metaResultObject, property, key, targetType);
    16        value = DEFERRED;
    17      } else {
    18        final ResultLoader resultLoader = new ResultLoader(configuration, executor, nestedQuery, nestedQueryParameterObject, targetType, key, nestedBoundSql);
    19        if (propertyMapping.isLazy()) {
    20          lazyLoader.addLoader(property, metaResultObject, resultLoader);
    21          value = DEFERRED;
    22        } else {
    23          // Issue the subquery immediately
    24          value = resultLoader.loadResult();
    25        }
    26      }
    27    }
    28    return value;
    29  }

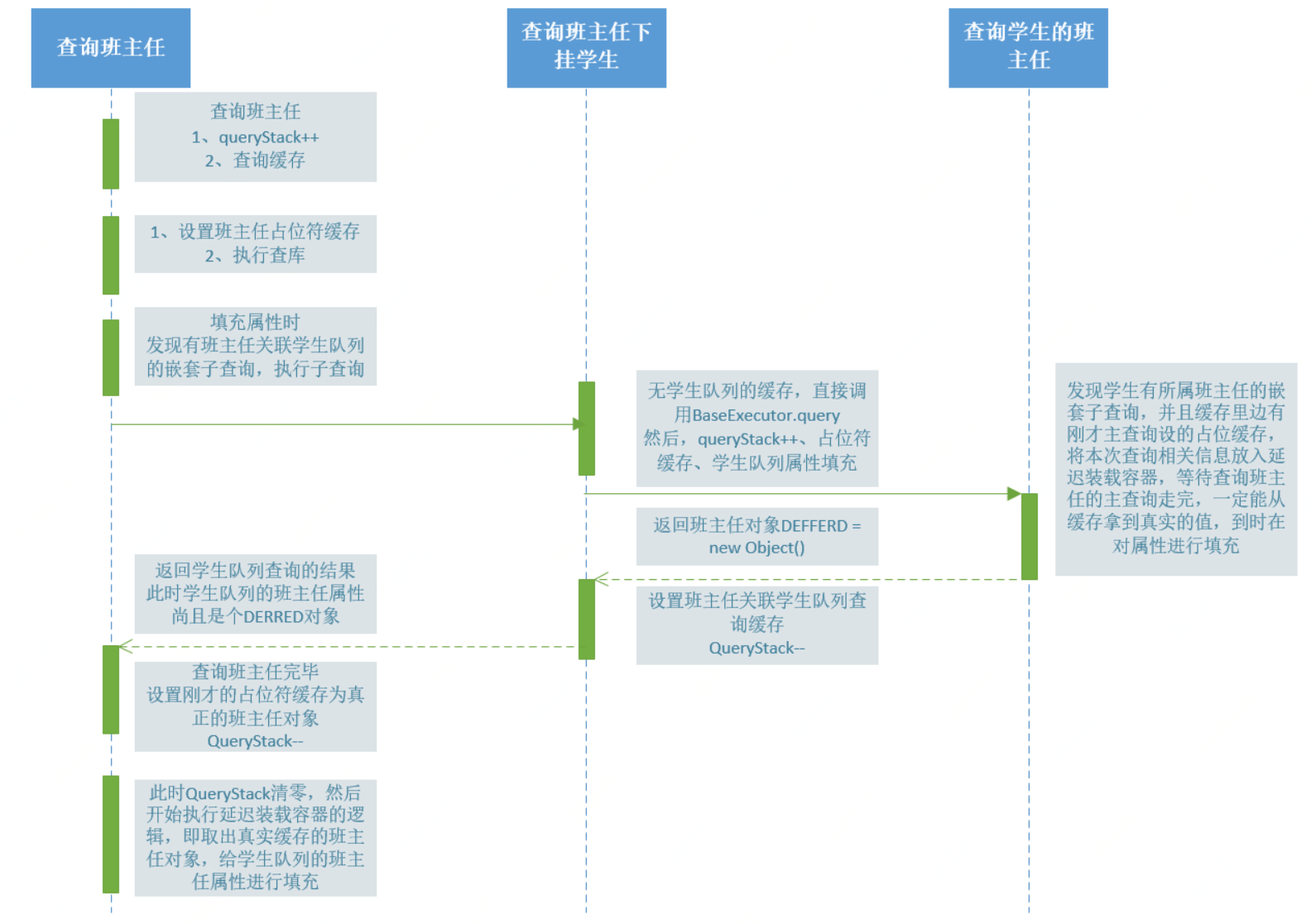

The key points are lines 13 and 23. The immediate load after line 23 recurses back into line 22 of the BaseExecutor.query snippet above. If getNestedQueryMappingValue instead takes the branch at line 15, the deferred load is executed later, at line 28 of the BaseExecutor.query snippet. This recursion is fairly convoluted, so here is a plain-language walkthrough:

The main query for the head teacher first writes a placeholder into the first-level cache, then queries the database, then sets properties. If there were no nested subquery, the populated result would simply overwrite the placeholder in the first-level cache and the flow would be complete.

Here, however, there is a nested subquery. The head teacher query pauses at the property-setting step and starts another query for the students, which then enters its own property-setting step.

While setting the students' properties, MyBatis finds another nested query, this time from student back to head teacher. It finds that the first-level cache already holds the placeholder written by the enclosing head teacher query. So instead of querying the database, it puts this subquery into the deferred-load container, and the subquery ends. The previous query (teacher to students) then finishes as well.

Next, the head teacher query finishes setting its properties, and its result overwrites the placeholder written earlier. At that point the deferred-load logic kicks in: it takes the head teacher just stored in the first-level cache and sets it, through the MetaObject, as the property on the results of the second-step subquery (the students).

To put it briefly: the main query (head teacher) writes a placeholder and pauses; the first nested subquery (teacher to students) starts and pauses in turn; the student-to-teacher query starts but finds the placeholder left in the first-level cache by the main query, so it is added to the deferred-load queue and terminates; once the main query completes, the deferred loader takes the data from the cache and assigns it to the results of the first subquery (teacher to students).
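
The walkthrough above can be simulated in plain Java. The sketch below is a toy model, not MyBatis code: `ToyExecutor`, `Teacher`, `Student`, `resolveTeacher`, and the string cache keys are all hypothetical, and the "database" is faked by constructing objects in place. It only demonstrates how a placeholder plus a deferred-load queue breaks the cycle.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.function.Supplier;

class Teacher {
    final int id;
    List<Student> students;
    Teacher(int id) { this.id = id; }
}

class Student {
    final int id;
    Teacher teacher;
    Student(int id) { this.id = id; }
}

// Hypothetical toy executor: models only the placeholder and deferred-load idea.
class ToyExecutor {
    private static final Object PLACEHOLDER = new Object();
    private final Map<String, Object> localCache = new HashMap<>();
    private final Queue<Runnable> deferredLoads = new ArrayDeque<>();
    private int queryStack;
    int databaseQueries; // counts simulated database round trips

    private Object query(String key, Supplier<Object> loadFromDatabase) {
        Object result;
        queryStack++;
        try {
            result = localCache.get(key);
            if (result == null) {
                localCache.put(key, PLACEHOLDER); // mark this query as in progress
                try {
                    databaseQueries++;
                    result = loadFromDatabase.get(); // may recurse into query()
                } finally {
                    localCache.remove(key);
                }
                localCache.put(key, result); // real data replaces the placeholder
            }
        } finally {
            queryStack--;
        }
        if (queryStack == 0) {
            // Back at the outermost query: no placeholders remain, so run deferred loads.
            Runnable load;
            while ((load = deferredLoads.poll()) != null) {
                load.run();
            }
        }
        return result;
    }

    Teacher selectTeacher(int id) {
        return (Teacher) query("teacher:" + id, () -> {
            Teacher teacher = new Teacher(id);     // pretend this row came from the database
            teacher.students = selectStudents(id); // eager nested subquery
            return teacher;
        });
    }

    @SuppressWarnings("unchecked")
    List<Student> selectStudents(int teacherId) {
        return (List<Student>) query("students:" + teacherId, () -> {
            List<Student> students = new ArrayList<>();
            for (int i = 1; i <= 2; i++) {         // pretend two rows come back
                Student student = new Student(teacherId * 10 + i);
                resolveTeacher(student, teacherId);
                students.add(student);
            }
            return students;
        });
    }

    private void resolveTeacher(Student student, int teacherId) {
        String key = "teacher:" + teacherId;
        Object cached = localCache.get(key);
        if (cached == PLACEHOLDER) {
            // The teacher query is still running further up the stack: defer the assignment.
            deferredLoads.add(() -> student.teacher = (Teacher) localCache.get(key));
        } else if (cached != null) {
            student.teacher = (Teacher) cached;
        } else {
            student.teacher = selectTeacher(teacherId);
        }
    }
}

class CircularDemo {
    public static void main(String[] args) {
        ToyExecutor executor = new ToyExecutor();
        Teacher teacher = executor.selectTeacher(1);
        System.out.println(teacher.students.size());                    // 2
        System.out.println(teacher.students.get(0).teacher == teacher); // true
        System.out.println(executor.databaseQueries);                   // 2
    }
}
```

Only two simulated database queries run (one for the teacher, one for the students), and every student ends up pointing at the same `Teacher` instance, which is the outcome the deferred-load queue is there to guarantee.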

After all that, your head is probably spinning, so here is a sequence diagram:

To summarize:

1. The placeholder identifies an enclosing query that is identical to the current nested query. For example, when the subquery for a student's head teacher finds that an enclosing query is already loading that same head teacher, it does not run the query again. Once the enclosing query completes, the value can simply be read from the cache, so the property assignment is put on the deferred-load queue instead.

2. queryStack records the nesting depth of the current query. When queryStack == 0, the whole call has unwound back to the original outer query, and every object whose loading was deferred along the way can now actually be loaded.

3. The first-level cache is essential for resolving circular dependencies between nested subqueries, so it cannot be switched off entirely. What we can do is set LocalCacheScope.STATEMENT so that the first-level cache is cleared promptly after each statement. See the source code:

    public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {
      // ...
      try {
        queryStack++;
        list = resultHandler == null ? (List<E>) localCache.getObject(key) : null;
        if (list != null) {
          handleLocallyCachedOutputParameters(ms, key, parameter, boundSql);
        } else {
          list = queryFromDatabase(ms, parameter, rowBounds, resultHandler, key, boundSql);
        }
      } finally {
        queryStack--;
      }
      if (queryStack == 0) {
        for (DeferredLoad deferredLoad : deferredLoads) {
          deferredLoad.load();
        }
        deferredLoads.clear();
        // With LocalCacheScope.STATEMENT, the cache is cleared as soon as the outermost query finishes
        if (configuration.getLocalCacheScope() == LocalCacheScope.STATEMENT) {
          clearLocalCache();
        }
      }
      return list;
    }

4, Second-level cache

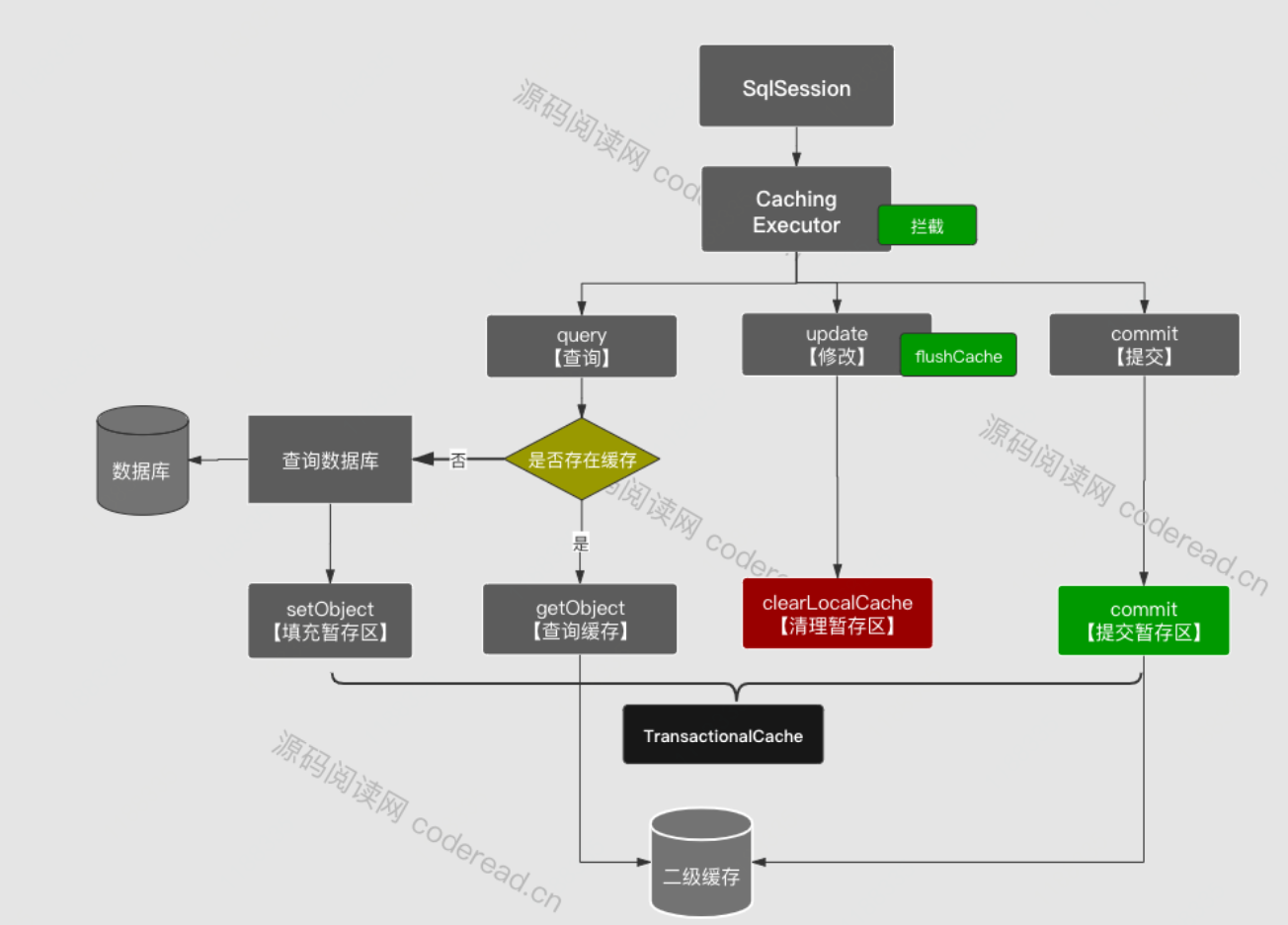

Let's start with the execution flow of the second-level cache:

The second-level cache is implemented in CachingExecutor, the decorator placed in front of BaseExecutor. If the cache is hit in CachingExecutor, the call is not dispatched to BaseExecutor (line 14 below).

     1  public <E> List<E> query(MappedStatement ms, Object parameterObject, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql)
     2      throws SQLException {
     3    Cache cache = ms.getCache();
     4    if (cache != null) {
     5      flushCacheIfRequired(ms);
     6      if (ms.isUseCache() && resultHandler == null) {
     7        ensureNoOutParams(ms, parameterObject, boundSql);
     8        @SuppressWarnings("unchecked")
     9        List<E> list = (List<E>) tcm.getObject(cache, key);
    10        if (list == null) {
    11          list = delegate.<E> query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);
    12          tcm.putObject(cache, key, list); // issue #578 and #116
    13        }
    14        return list;
    15      }
    16    }
    17    return delegate.<E> query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);
    18  }

The difference between line 11 and line 17 is whether the second-level cache is in play. When it is, the results returned by BaseExecutor are first written to a staging area (line 12, TransactionalCacheManager), and they are only flushed into the real second-level cache when the transaction commits. Next, we focus on the cache reads and writes (lines 9 and 12). The objects that actually do the work are a series of Cache implementations covering thread safety, logging, scheduled clearing, eviction on overflow, serialization, the storage itself, and so on. This is where the design of the second-level cache shines: each responsibility lives in its own class, fully decoupled from the others.
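
The staging idea can be sketched in a few lines of plain Java. `StagedCache` and its methods are hypothetical names chosen for this illustration; the real TransactionalCacheManager has more to it, so this is only a model of "write to a staging area, publish on commit".

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of a per-session staging area in front of a shared cache.
class StagedCache {
    private final Map<String, Object> sharedCache;                       // visible to every session
    private final Map<String, Object> pendingEntries = new HashMap<>();  // visible only after commit

    StagedCache(Map<String, Object> sharedCache) {
        this.sharedCache = sharedCache;
    }

    Object get(String key) {
        return sharedCache.get(key); // reads never see uncommitted entries
    }

    void put(String key, Object value) {
        pendingEntries.put(key, value); // staged, not yet shared
    }

    void commit() {
        sharedCache.putAll(pendingEntries); // publish staged entries
        pendingEntries.clear();
    }

    void rollback() {
        pendingEntries.clear(); // discard staged entries
    }
}

class StagedCacheDemo {
    public static void main(String[] args) {
        Map<String, Object> shared = new HashMap<>();
        StagedCache sessionA = new StagedCache(shared);
        StagedCache sessionB = new StagedCache(shared);

        sessionA.put("teacher:1", "Alice");
        System.out.println(sessionB.get("teacher:1")); // null, not committed yet
        sessionA.commit();
        System.out.println(sessionB.get("teacher:1")); // Alice
    }
}
```

Staging keeps data from an uncommitted (and possibly rolled back) transaction out of the cache that other sessions read from.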

Next, let's look at how the cache responsibility chain is initialized by default:

    public Cache useNewCache(Class<? extends Cache> typeClass,
        Class<? extends Cache> evictionClass,
        Long flushInterval,
        Integer size,
        boolean readWrite,
        boolean blocking,
        Properties props) {
      Cache cache = new CacheBuilder(currentNamespace)
          // The default storage is in-memory (PerpetualCache)
          .implementation(valueOrDefault(typeClass, PerpetualCache.class))
          // The default eviction policy is LRU
          .addDecorator(valueOrDefault(evictionClass, LruCache.class))
          .clearInterval(flushInterval)
          .size(size)
          .readWrite(readWrite)
          .blocking(blocking)
          .properties(props)
          .build();
      configuration.addCache(cache);
      currentCache = cache;
      return cache;
    }

Then comes the build step itself:

     1  public Cache build() {
     2    setDefaultImplementations();
     3    Cache cache = newBaseCacheInstance(implementation, id);
     4    setCacheProperties(cache);
     5    // issue #352, do not apply decorators to custom caches
     6    if (PerpetualCache.class.equals(cache.getClass())) {
     7      for (Class<? extends Cache> decorator : decorators) {
     8        cache = newCacheDecoratorInstance(decorator, cache);
     9        setCacheProperties(cache);
    10      }
    11      cache = setStandardDecorators(cache);
    12    } else if (!LoggingCache.class.isAssignableFrom(cache.getClass())) {
    13      cache = new LoggingCache(cache);
    14    }
    15    return cache;
    16  }
    17  private Cache setStandardDecorators(Cache cache) {
    18    try {
    19      MetaObject metaCache = SystemMetaObject.forObject(cache);
    20      if (size != null && metaCache.hasSetter("size")) {
    21        metaCache.setValue("size", size);
    22      }
    23      if (clearInterval != null) {
    24        cache = new ScheduledCache(cache);
    25        ((ScheduledCache) cache).setClearInterval(clearInterval);
    26      }
    27      if (readWrite) {
    28        cache = new SerializedCache(cache);
    29      }
    30      cache = new LoggingCache(cache);
    31      cache = new SynchronizedCache(cache);
    32      if (blocking) {
    33        cache = new BlockingCache(cache);
    34      }
    35      return cache;
    36    } catch (Exception e) {
    37      throw new CacheException("Error building standard cache decorators. Cause: " + e, e);
    38    }
    39  }

The decorators are created at lines 3, 8, 24, 28, 30, 31 and 33. Splitting behavior by responsibility and nesting the pieces like this is a mature, widely used way to decouple concerns. Decorating in a loop, as line 8 does, can also be seen in many open source frameworks, for example in Dubbo's AOP mechanism.
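
The loop-based nesting can be shown with a self-contained sketch. Everything here (`ToyCache`, `MapCache`, `CountingCache`, `SynchronizedToyCache`, `ToyCacheBuilder`) is a hypothetical, stripped-down analogue written for this article, not the MyBatis classes; the `toString` methods exist only to make the nesting order visible.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.UnaryOperator;

interface ToyCache {
    void put(Object key, Object value);
    Object get(Object key);
}

// Innermost layer: plain in-memory storage.
class MapCache implements ToyCache {
    private final Map<Object, Object> store = new HashMap<>();
    public void put(Object key, Object value) { store.put(key, value); }
    public Object get(Object key) { return store.get(key); }
    @Override public String toString() { return "Map"; }
}

// Decorator that counts read requests.
class CountingCache implements ToyCache {
    private final ToyCache delegate;
    int requests;
    CountingCache(ToyCache delegate) { this.delegate = delegate; }
    public void put(Object key, Object value) { delegate.put(key, value); }
    public Object get(Object key) { requests++; return delegate.get(key); }
    @Override public String toString() { return "Counting(" + delegate + ")"; }
}

// Decorator that serializes access with the object's monitor.
class SynchronizedToyCache implements ToyCache {
    private final ToyCache delegate;
    SynchronizedToyCache(ToyCache delegate) { this.delegate = delegate; }
    public synchronized void put(Object key, Object value) { delegate.put(key, value); }
    public synchronized Object get(Object key) { return delegate.get(key); }
    @Override public String toString() { return "Synchronized(" + delegate + ")"; }
}

class ToyCacheBuilder {
    private final List<UnaryOperator<ToyCache>> decorators = new ArrayList<>();

    ToyCacheBuilder addDecorator(UnaryOperator<ToyCache> decorator) {
        decorators.add(decorator);
        return this;
    }

    ToyCache build() {
        ToyCache cache = new MapCache();
        // Each decorator wraps the result of the previous one, so the last added is outermost.
        for (UnaryOperator<ToyCache> decorator : decorators) {
            cache = decorator.apply(cache);
        }
        return cache;
    }
}

class DecoratorDemo {
    public static void main(String[] args) {
        ToyCache cache = new ToyCacheBuilder()
            .addDecorator(CountingCache::new)
            .addDecorator(SynchronizedToyCache::new)
            .build();
        cache.put("teacher:1", "Alice");
        System.out.println(cache.get("teacher:1")); // Alice
        System.out.println(cache);                  // Synchronized(Counting(Map))
    }
}
```

Because every layer implements the same interface, callers never know how many decorators sit between them and the storage, and layers can be added or removed without touching the others.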

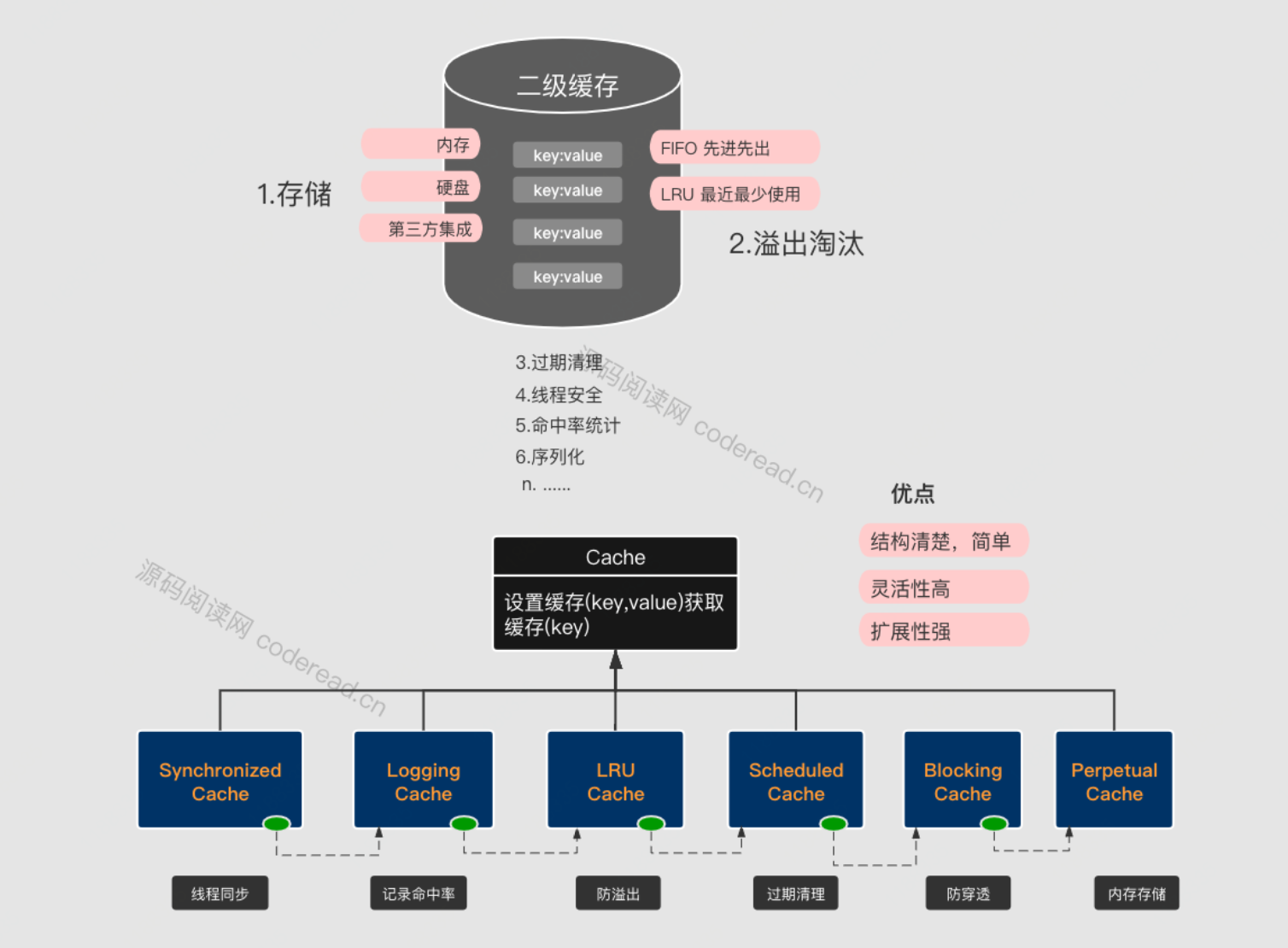

Here are the functions covered by MyBatis's second-level cache design, together with a structure chart showing how the responsibilities are chained:

As the code above shows, when blocking is set the outermost layer is BlockingCache, and the layer directly beneath it is SynchronizedCache. Both exist for thread safety, and BlockingCache additionally guards against cache penetration by making concurrent readers of the same missing key wait rather than all querying the database.

    public class BlockingCache implements Cache {
      private final Cache delegate;
      private final ConcurrentHashMap<Object, ReentrantLock> locks;

      public BlockingCache(Cache delegate) {
        this.delegate = delegate;
        this.locks = new ConcurrentHashMap<Object, ReentrantLock>();
      }

      @Override
      public void putObject(Object key, Object value) {
        try {
          delegate.putObject(key, value);
        } finally {
          // The lock taken in getObject on a miss is released once the value is stored
          releaseLock(key);
        }
      }

      @Override
      public Object getObject(Object key) {
        acquireLock(key);
        Object value = delegate.getObject(key);
        if (value != null) {
          // Hit: release immediately. On a miss the lock stays held until putObject
          releaseLock(key);
        }
        return value;
      }
      // acquireLock(key), releaseLock(key) and the other Cache methods are omitted in this excerpt
    }

    public class SynchronizedCache implements Cache {
      private Cache delegate;

      @Override
      public synchronized void putObject(Object key, Object object) {
        delegate.putObject(key, object);
      }

      @Override
      public synchronized Object getObject(Object key) {
        return delegate.getObject(key);
      }
    }

Next, look at LruCache, which handles eviction when the cache overflows:

    public class LruCache implements Cache {
      private final Cache delegate;
      private Map<Object, Object> keyMap;
      // The key that has to be evicted when the cache overflows
      private Object eldestKey;
      // (constructor and the other Cache methods are omitted in this excerpt)

      public void setSize(final int size) {
        // accessOrder is true, so every accessed entry moves to the tail and the head is the eviction candidate
        keyMap = new LinkedHashMap<Object, Object>(size, .75F, true) {
          @Override
          protected boolean removeEldestEntry(Map.Entry<Object, Object> eldest) {
            boolean tooBig = size() > size;
            if (tooBig) {
              eldestKey = eldest.getKey();
            }
            return tooBig;
          }
        };
      }

      @Override
      public void putObject(Object key, Object value) {
        delegate.putObject(key, value);
        cycleKeyList(key);
      }

      private void cycleKeyList(Object key) {
        keyMap.put(key, key);
        if (eldestKey != null) {
          delegate.removeObject(eldestKey);
          eldestKey = null;
        }
      }
    }
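
The LinkedHashMap mechanism that LruCache relies on can be tried in isolation. The following is a minimal standalone sketch; `LruDemo` and `lruMap` are hypothetical names, and unlike LruCache this version stores the values directly in the access-ordered map instead of tracking keys for a delegate.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class LruDemo {
    // Builds an access-ordered map that drops its least recently used entry beyond maxSize.
    static <K, V> Map<K, V> lruMap(final int maxSize) {
        return new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > maxSize;
            }
        };
    }

    public static void main(String[] args) {
        Map<String, Integer> cache = lruMap(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");    // touching "a" makes "b" the eldest entry
        cache.put("c", 3); // exceeds the limit, so "b" is evicted
        System.out.println(cache.keySet()); // [a, c]
    }
}
```

The third constructor argument (`true`) is what switches the map from insertion order to access order; without it the map would evict "a" instead.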

To wrap up, here are the conditions for hitting the second-level cache:

1. The cacheEnabled switch is on (it is by default), and the session that produced the data has committed.

2. The same statementId.

3. The same SQL, parameters, and row range.

4. Hits can occur across Mapper calls.

5, Summary

In today's distributed application scenarios, both the first-level and second-level caches carry a high risk of dirty reads, so their use is generally forbidden. Even so, MyBatis's design for this local scenario is remarkably well crafted. The handling of circular nested queries, for example, is a mature solution that Spring also applies (it faces the same problem with circular object injection). The responsibility-based decoration is likewise used by Dubbo. There is a lot of inspiration to take from this. For instance, if a given business scenario needs thread safety added, could we add a layer of responsibility decoration and dispatch through it, without intruding into the business code?