```java
                mExecutorService.submit(new Runnable() {
                    @Override
                    public void run() {
                        AVUtils.audioPlay(input_video_file_path);
                    }
                });
                break;
        }
    }

    @Override
    public void onFinish() {
        runOnUiThread(new Runnable() {
            @Override
            public void run() {
                if (mProgressDialog.isShowing()) {
                    mProgressDialog.dismiss();
                }
                Toast.makeText(MainActivity.this, "Decoding complete", Toast.LENGTH_SHORT).show();
            }
        });
    }

    @Override
    protected void onDestroy() {
        super.onDestroy();
        mExecutorService.shutdown();
    }
}
```

native-lib.c

```c
#include <jni.h>
#include <string.h>
#include <android/log.h>
#include <stdio.h>
#include <libavutil/time.h>
// Codecs (encoding/decoding)
#include "include/libavcodec/avcodec.h"
// Container (encapsulation) format handling
#include "include/libavformat/avformat.h"
// Pixel format conversion and scaling
#include "include/libswscale/swscale.h"

#define LOGI(FORMAT, ...) __android_log_print(ANDROID_LOG_INFO, "haohao", FORMAT, ##__VA_ARGS__)
#define LOGE(FORMAT, ...) __android_log_print(ANDROID_LOG_ERROR, "haohao", FORMAT, ##__VA_ARGS__)

// Convert a UTF-8 C string (e.g. one containing Chinese characters) to a Java String
jstring charsToUTF8String(JNIEnv *env, char *s) {
    jclass string_cls = (*env)->FindClass(env, "java/lang/String");
    // Constructor String(byte[] bytes, String charsetName)
    jmethodID mid = (*env)->GetMethodID(env, string_cls, "<init>", "([BLjava/lang/String;)V");
    jsize len = (jsize) strlen(s);
    jbyteArray jb_arr = (*env)->NewByteArray(env, len);
    (*env)->SetByteArrayRegion(env, jb_arr, 0, len, (const jbyte *) s);
    jstring charset = (*env)->NewStringUTF(env, "UTF-8");
    return (*env)->NewObject(env, string_cls, mid, jb_arr, charset);
}
```

```c
JNIEXPORT void JNICALL
Java_com_haohao_ffmpeg_AVUtils_videoDecode(JNIEnv *env, jclass type, jstring input_,
                                           jstring output_) {
    // Look up the static Java callback
    jmethodID mid = (*env)->GetStaticMethodID(env, type, "onNativeCallback", "()V");
    // Input video file to decode and output YUV file
    const char *input = (*env)->GetStringUTFChars(env, input_, 0);
    const char *output = (*env)->GetStringUTFChars(env, output_, 0);

    // Register all components
    av_register_all();

    // Format context: the global structure holding information about the container format
    AVFormatContext *pFormatCtx = avformat_alloc_context();

    // Open the input video file
    if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {
        LOGE("%s", "Unable to open input video file");
        return;
    }

    // Read stream information, such as the width and height of the video
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("%s", "Unable to get video file information");
        return;
    }

    // Find the index of the video stream by iterating over all streams
    // (audio, video, subtitle, ...)
    int v_stream_idx = -1;
    int i = 0;
    for (; i < pFormatCtx->nb_streams; i++) {
        // Is this a video stream?
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            v_stream_idx = i;
            break;
        }
    }
    if (v_stream_idx == -1) {
        LOGE("%s", "No video stream found\n");
        return;
    }

    // Codec context of the video stream: tells us how the video is encoded
    AVCodecContext *pCodecCtx = pFormatCtx->streams[v_stream_idx]->codec;
    // Find the decoder matching the codec id in the codec context
    AVCodec *pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        LOGE("%s", "Decoder not found or video is encrypted\n");
        return;
    }
    // Open the decoder; this fails if, e.g., FFmpeg was compiled without this type of decoder
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGE("%s", "Decoder could not be opened\n");
        return;
    }

    // Print video information
    LOGI("File format for video:%s", pFormatCtx->iformat->name);
    LOGI("Video duration:%lld", (long long) (pFormatCtx->duration / (1000 * 1000)));
    LOGI("Width and height of video:%d,%d", pCodecCtx->width, pCodecCtx->height);
    LOGI("Name of decoder:%s", pCodec->name);

    // AVPacket holds compressed data (e.g. H.264), one packet at a time
    AVPacket *packet = (AVPacket *) av_malloc(sizeof(AVPacket));
    // AVFrame holds decoded pixel data (YUV)
    AVFrame *pFrame = av_frame_alloc();
    // Frame converted to YUV420P
    AVFrame *pFrameYUV = av_frame_alloc();
    // An AVFrame's pixel memory can only be allocated once its pixel format and size are known
    uint8_t *out_buffer = (uint8_t *) av_malloc(
            avpicture_get_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height));
    // Attach the buffer to pFrameYUV
    avpicture_fill((AVPicture *) pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width,
                   pCodecCtx->height);

    // Conversion (scaling) parameters: source size and format, destination size and format, etc.
    struct SwsContext *sws_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height,
                                                pCodecCtx->pix_fmt,
                                                pCodecCtx->width, pCodecCtx->height,
                                                AV_PIX_FMT_YUV420P,
                                                SWS_BICUBIC, NULL, NULL, NULL);
    int got_picture, ret;
    // Output file
    FILE *fp_yuv = fopen(output, "wb+");
    if (fp_yuv == NULL) {
        LOGE("%s", "Unable to open output file");
        return;
    }
    int frame_count = 0;

    // Read compressed data packet by packet
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        // Only handle packets that belong to the video stream
        if (packet->stream_index == v_stream_idx) {
            // Decode one packet of compressed video into pixel data
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                LOGE("%s", "Decoding error");
                av_free_packet(packet);
                break;
            }
            // got_picture is non-zero when a complete frame was decoded, 0 otherwise
            if (got_picture) {
                // Convert the frame to YUV420P at the same width and height.
                // Arguments 2 and 6: input and output plane data
                // Arguments 3 and 7: bytes per row of input and output (conversion is row by row)
                // Argument 4: first row of the input slice to convert (0)
                // Argument 5: height of the input slice
                sws_scale(sws_ctx, (const uint8_t *const *) pFrame->data, pFrame->linesize, 0,
                          pCodecCtx->height, pFrameYUV->data, pFrameYUV->linesize);

                // Write the YUV420P frame to the file.
                // Y is luma (brightness), U and V are chroma. People are more sensitive to
                // brightness, so U and V each carry 1/4 as many samples as Y.
                int y_size = pCodecCtx->width * pCodecCtx->height;
                fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);
                fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv);
                fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv);
                frame_count++;
                LOGI("Decode No.%d frame", frame_count);
            }
        }
        // Release the packet's data
        av_free_packet(packet);
    }

    // Release resources
    fclose(fp_yuv);
    av_free(packet);
    av_free(out_buffer);
    sws_freeContext(sws_ctx);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    (*env)->ReleaseStringUTFChars(env, input_, input);
    (*env)->ReleaseStringUTFChars(env, output_, output);

    // Notify the Java layer that decoding is complete
    (*env)->CallStaticVoidMethod(env, type, mid);
}
```
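The plane sizes written by `videoDecode` follow from YUV420P's 2×2 chroma subsampling. Below is a small standalone sketch of the per-frame byte counts. The type and function names are hypothetical. Like the `y_size / 4` writes, it assumes tightly packed rows and an even width and height.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: byte sizes of the Y, U and V planes of one YUV420P
 * frame, assuming packed rows (linesize == width) and even dimensions. */
typedef struct {
    size_t y, u, v;
} Yuv420pSizes;

static Yuv420pSizes yuv420p_plane_sizes(int width, int height) {
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    Yuv420pSizes s;
    s.y = (size_t) width * height; /* one luma sample per pixel */
    s.u = s.y / 4;                 /* chroma halved horizontally and vertically */
    s.v = s.y / 4;
    return s;
}
```

For a 1280×720 video, each frame in the output file is 921600 + 2 × 230400 = 1382400 bytes, i.e. width × height × 3 / 2. For odd dimensions, FFmpeg rounds the chroma width and height up, so `y_size / 4` would undercount.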

```c
// These two window headers require linking the NDK "android" library in the CMake script
#include <android/native_window_jni.h>
#include <android/native_window.h>
#include "include/yuv/libyuv.h"

JNIEXPORT void JNICALL
Java_com_haohao_ffmpeg_AVUtils_videoRender(JNIEnv *env, jclass type, jstring input_,
                                           jobject surface) {
    // Input video file to decode
    const char *input = (*env)->GetStringUTFChars(env, input_, 0);

    // Register all components
    av_register_all();
    //avcodec_register_all();

    // Format context: the global structure holding information about the container format
    AVFormatContext *pFormatCtx = avformat_alloc_context();

    // Open the input video file
    if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {
        LOGE("%s", "Unable to open input video file");
        return;
    }

    // Read stream information, such as the width and height of the video.
    // The second argument can pass per-stream codec options; NULL uses the defaults.
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("%s", "Unable to get video file information");
        return;
    }

    // Find the index of the video stream by iterating over all streams
    // (audio, video, subtitle, ...)
    int v_stream_idx = -1;
    int i = 0;
    // nb_streams: number of streams
    for (; i < pFormatCtx->nb_streams; i++) {
        // Check the stream type
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            v_stream_idx = i;
            break;
        }
    }
    if (v_stream_idx == -1) {
        LOGE("%s", "No video stream found\n");
        return;
    }

    // Codec context of the video stream
    AVCodecContext *pCodecCtx = pFormatCtx->streams[v_stream_idx]->codec;
    // Find the decoder matching the codec id in the codec context
    AVCodec *pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        LOGE("%s", "Decoder not found or video is encrypted\n");
        return;
    }
    // Open the decoder; this fails if, e.g., FFmpeg was compiled without this type of decoder
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGE("%s", "Decoder could not be opened\n");
        return;
    }

    // AVPacket holds compressed data (e.g. H.264), one packet at a time
    AVPacket *packet = (AVPacket *) av_malloc(sizeof(AVPacket));
    // AVFrames for the decoded YUV pixels and for the RGBA pixels to draw
    AVFrame *yuv_frame = av_frame_alloc();
    AVFrame *rgb_frame = av_frame_alloc();
    int got_picture, ret;
    int frame_count = 0;

    // Native window backing the Java Surface
    ANativeWindow *pWindow = ANativeWindow_fromSurface(env, surface);
    // Window buffer used while drawing
    ANativeWindow_Buffer out_buffer;

    // Read compressed data packet by packet
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        // Only handle packets that belong to the video stream
        if (packet->stream_index == v_stream_idx) {
            // Decode one packet of compressed video into pixel data
            ret = avcodec_decode_video2(pCodecCtx, yuv_frame, &got_picture, packet);
            if (ret < 0) {
                LOGE("%s", "Decoding error");
                av_free_packet(packet);
                break;
            }
            // got_picture is non-zero when a complete frame was decoded, 0 otherwise
            if (got_picture) {
                // Set the buffer geometry: width, height and pixel format
                // (the format must match the one used on the Java side)
                ANativeWindow_setBuffersGeometry(pWindow, pCodecCtx->width, pCodecCtx->height,
                                                 WINDOW_FORMAT_RGBA_8888);
                // Lock the window to obtain its drawing buffer
                if (ANativeWindow_lock(pWindow, &out_buffer, NULL) == 0) {
                    // rgb_frame points at the window's buffer, which is drawn when unlocked
                    avpicture_fill((AVPicture *) rgb_frame, out_buffer.bits, AV_PIX_FMT_RGBA,
                                   pCodecCtx->width, pCodecCtx->height);
                    // A window row can be wider than the video: stride is in pixels, 4 bytes each
                    rgb_frame->linesize[0] = out_buffer.stride * 4;
                    // Convert YUV to RGBA_8888 with libyuv (FFmpeg's own conversion caused problems).
                    // libyuv's "ABGR" is R,G,B,A in memory, matching WINDOW_FORMAT_RGBA_8888.
                    I420ToABGR(yuv_frame->data[0], yuv_frame->linesize[0],
                               yuv_frame->data[1], yuv_frame->linesize[1],
                               yuv_frame->data[2], yuv_frame->linesize[2],
                               rgb_frame->data[0], rgb_frame->linesize[0],
                               pCodecCtx->width, pCodecCtx->height);
                    // Unlock the window and post the frame for display
                    ANativeWindow_unlockAndPost(pWindow);
                }
                frame_count++;
                LOGI("Decoded and drew frame %d", frame_count);
            }
        }
        // Release the packet's data
        av_free_packet(packet);
    }

    // Release resources
    ANativeWindow_release(pWindow);
    av_free(packet);
    av_frame_free(&rgb_frame);
    av_frame_free(&yuv_frame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    (*env)->ReleaseStringUTFChars(env, input_, input);
}
```

```c
#include "libswresample/swresample.h"

// Size in bytes of the PCM output buffer
#define MAX_AUDIO_FRME_SIZE (48000 * 4)

// Audio decoding (with resampling)
JNIEXPORT void JNICALL
Java_com_haohao_ffmpeg_AVUtils_audioDecode(JNIEnv *env, jclass type, jstring input_,
                                           jstring output_) {
    // Look up the static Java callback
    jmethodID mid = (*env)->GetStaticMethodID(env, type, "onNativeCallback", "()V");
    const char *input = (*env)->GetStringUTFChars(env, input_, 0);
    const char *output = (*env)->GetStringUTFChars(env, output_, 0);

    // Register components
    av_register_all();
    AVFormatContext *pFormatCtx = avformat_alloc_context();
    // Open the audio file
    if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {
        LOGE("%s", "Unable to open audio file");
        return;
    }
    // Read input file information
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("%s", "Unable to get input file information");
        return;
    }

    // Find the index of the audio stream
    int i = 0, audio_stream_idx = -1;
    for (; i < pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_AUDIO) {
            audio_stream_idx = i;
            break;
        }
    }
    if (audio_stream_idx == -1) {
        LOGE("%s", "No audio stream found");
        return;
    }

    // Find and open the decoder
    AVCodecContext *codecCtx = pFormatCtx->streams[audio_stream_idx]->codec;
    AVCodec *codec = avcodec_find_decoder(codecCtx->codec_id);
    if (codec == NULL) {
        LOGE("%s", "Unable to get decoder");
        return;
    }
    if (avcodec_open2(codecCtx, codec, NULL) < 0) {
        LOGE("%s", "Unable to open decoder");
        return;
    }

    // Compressed data
    AVPacket *packet = (AVPacket *) av_malloc(sizeof(AVPacket));
    // Decoded data
    AVFrame *frame = av_frame_alloc();

    // Resampler: convert every frame to a uniform 16-bit, 44100 Hz PCM format
    SwrContext *swrCtx = swr_alloc();
    // Input sample format
    enum AVSampleFormat in_sample_fmt = codecCtx->sample_fmt;
    // Output sample format: 16-bit PCM
    enum AVSampleFormat out_sample_fmt = AV_SAMPLE_FMT_S16;
    // Input sample rate
    int in_sample_rate = codecCtx->sample_rate;
    // Output sample rate
    int out_sample_rate = 44100;
    // Input channel layout; if the decoder left it unset, derive the default
    // layout from the channel count (2 channels -> stereo)
    uint64_t in_ch_layout = codecCtx->channel_layout;
    if (in_ch_layout == 0) {
        in_ch_layout = av_get_default_channel_layout(codecCtx->channels);
    }
    // Output channel layout: stereo
    uint64_t out_ch_layout = AV_CH_LAYOUT_STEREO;
    swr_alloc_set_opts(swrCtx,
                       out_ch_layout, out_sample_fmt, out_sample_rate,
                       in_ch_layout, in_sample_fmt, in_sample_rate,
                       0, NULL);
    swr_init(swrCtx);

    // Number of output channels
    int out_channel_nb = av_get_channel_layout_nb_channels(out_ch_layout);
    // Buffer for 16-bit, 44100 Hz PCM; swr_convert() takes its capacity in samples per channel
    uint8_t *out_buffer = (uint8_t *) av_malloc(MAX_AUDIO_FRME_SIZE);
    int max_out_samples = MAX_AUDIO_FRME_SIZE /
                          (out_channel_nb * av_get_bytes_per_sample(out_sample_fmt));

    FILE *fp_pcm = fopen(output, "wb");
    if (fp_pcm == NULL) {
        LOGE("%s", "Unable to open output file");
        return;
    }
    int got_frame = 0, index = 0, ret;
    // Keep reading compressed data
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        // Only decode packets that belong to the audio stream
        if (packet->stream_index == audio_stream_idx) {
            ret = avcodec_decode_audio4(codecCtx, frame, &got_frame, packet);
            if (ret < 0) {
                LOGE("%s", "Decoding error");
            } else if (got_frame > 0) {
                // A frame was decoded successfully
                LOGI("Decode:%d", index++);
                // Resample; returns the number of samples per channel actually output
                int out_samples = swr_convert(swrCtx, &out_buffer, max_out_samples,
                                              (const uint8_t **) frame->data, frame->nb_samples);
                if (out_samples > 0) {
                    // Size in bytes of the converted samples
                    int out_buffer_size = av_samples_get_buffer_size(NULL, out_channel_nb,
                                                                     out_samples, out_sample_fmt, 1);
                    fwrite(out_buffer, 1, out_buffer_size, fp_pcm);
                }
            }
        }
        av_free_packet(packet);
    }

    fclose(fp_pcm);
    av_free(packet);
    av_frame_free(&frame);
    av_free(out_buffer);
    swr_free(&swrCtx);
    avcodec_close(codecCtx);
    avformat_close_input(&pFormatCtx);
    (*env)->ReleaseStringUTFChars(env, input_, input);
    (*env)->ReleaseStringUTFChars(env, output_, output);

    // Notify the Java layer that decoding is complete
    (*env)->CallStaticVoidMethod(env, type, mid);
}
```
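The audio functions mix two units. `swr_convert()` measures its output capacity (`out_count`) in samples per channel, while `MAX_AUDIO_FRME_SIZE` is a size in bytes. Passing the byte count directly as `out_count` therefore overstates the room in the buffer. The hypothetical helpers below sketch the conversion for packed (interleaved) formats such as `AV_SAMPLE_FMT_S16`. For those formats, the byte count matches what `av_samples_get_buffer_size(NULL, channels, nb_samples, fmt, 1)` returns.

```c
#include <assert.h>

/* Hypothetical helper: bytes occupied by nb_samples samples per channel
 * of packed PCM. */
static int pcm_bytes(int nb_samples, int channels, int bytes_per_sample) {
    assert(nb_samples >= 0 && channels > 0 && bytes_per_sample > 0);
    return nb_samples * channels * bytes_per_sample;
}

/* Hypothetical helper: how many samples per channel fit in a buffer of
 * buffer_bytes, i.e. the value to pass to swr_convert() as out_count. */
static int pcm_capacity_samples(int buffer_bytes, int channels, int bytes_per_sample) {
    assert(buffer_bytes >= 0 && channels > 0 && bytes_per_sample > 0);
    return buffer_bytes / (channels * bytes_per_sample);
}
```

With 16-bit stereo output, the 48000 * 4 = 192000-byte buffer holds 48000 samples per channel. A 1024-sample frame (the usual AAC frame size) needs 4096 bytes.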

```c
JNIEXPORT void JNICALL
Java_com_haohao_ffmpeg_AVUtils_audioPlay(JNIEnv *env, jclass type, jstring input_) {
    const char *input = (*env)->GetStringUTFChars(env, input_, 0);
    LOGI("%s", "Start playing audio");

    // Register components
    av_register_all();
    AVFormatContext *pFormatCtx = avformat_alloc_context();
    // Open the audio file
    if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {
        LOGE("%s", "Unable to open audio file");
        return;
    }
    // Read input file information
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("%s", "Unable to get input file information");
        return;
    }

    // Find the index of the audio stream
    int i = 0, audio_stream_idx = -1;
    for (; i < pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_AUDIO) {
            audio_stream_idx = i;
            break;
        }
    }
    if (audio_stream_idx == -1) {
        LOGE("%s", "No audio stream found");
        return;
    }

    // Find and open the decoder
    AVCodecContext *codecCtx = pFormatCtx->streams[audio_stream_idx]->codec;
    AVCodec *codec = avcodec_find_decoder(codecCtx->codec_id);
    if (codec == NULL) {
        LOGE("%s", "Unable to get decoder");
        return;
    }
    if (avcodec_open2(codecCtx, codec, NULL) < 0) {
        LOGE("%s", "Unable to open decoder");
        return;
    }

    // Compressed data
    AVPacket *packet = (AVPacket *) av_malloc(sizeof(AVPacket));
    // Decoded data
    AVFrame *frame = av_frame_alloc();

    // Resampler: convert every frame to 16-bit stereo PCM at the input sample rate
    SwrContext *swrCtx = swr_alloc();
    // Input sample format
    enum AVSampleFormat in_sample_fmt = codecCtx->sample_fmt;
    // Output sample format: 16-bit PCM
    enum AVSampleFormat out_sample_fmt = AV_SAMPLE_FMT_S16;
    // Input sample rate
    int in_sample_rate = codecCtx->sample_rate;
    // Output sample rate: same as the input
    int out_sample_rate = in_sample_rate;
    // Input channel layout; if the decoder left it unset, derive the default
    // layout from the channel count (2 channels -> stereo)
    uint64_t in_ch_layout = codecCtx->channel_layout;
    if (in_ch_layout == 0) {
        in_ch_layout = av_get_default_channel_layout(codecCtx->channels);
    }
    // Output channel layout: stereo
    uint64_t out_ch_layout = AV_CH_LAYOUT_STEREO;
    swr_alloc_set_opts(swrCtx,
                       out_ch_layout, out_sample_fmt, out_sample_rate,
                       in_ch_layout, in_sample_fmt, in_sample_rate,
                       0, NULL);
    swr_init(swrCtx);

    // Number of output channels
    int out_channel_nb = av_get_channel_layout_nb_channels(out_ch_layout);

    // Create the AudioTrack through the static Java method createAudioTrack(int, int)
    jmethodID create_audio_track_mid = (*env)->GetStaticMethodID(env, type, "createAudioTrack",
                                                                 "(II)Landroid/media/AudioTrack;");
    jobject audio_track = (*env)->CallStaticObjectMethod(env, type, create_audio_track_mid,
                                                         out_sample_rate, out_channel_nb);
    // Call AudioTrack.play()
    jclass audio_track_class = (*env)->GetObjectClass(env, audio_track);
    jmethodID audio_track_play_mid = (*env)->GetMethodID(env, audio_track_class, "play", "()V");
    jmethodID audio_track_stop_mid = (*env)->GetMethodID(env, audio_track_class, "stop", "()V");
    (*env)->CallVoidMethod(env, audio_track, audio_track_play_mid);
    // AudioTrack.write(byte[], int, int)
    jmethodID audio_track_write_mid = (*env)->GetMethodID(env, audio_track_class, "write",
                                                          "([BII)I");

    // Buffer for 16-bit PCM; swr_convert() takes its capacity in samples per channel
    uint8_t *out_buffer = (uint8_t *) av_malloc(MAX_AUDIO_FRME_SIZE);
    int max_out_samples = MAX_AUDIO_FRME_SIZE /
                          (out_channel_nb * av_get_bytes_per_sample(out_sample_fmt));
    int got_frame = 0, index = 0, ret;
    // Keep reading compressed data
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        // Only decode packets that belong to the audio stream
        if (packet->stream_index == audio_stream_idx) {
            ret = avcodec_decode_audio4(codecCtx, frame, &got_frame, packet);
            if (ret < 0) {
                LOGE("%s", "Decoding error");
            } else if (got_frame > 0) {
                // A frame was decoded successfully
                LOGI("Decode:%d", index++);
                // Resample; returns the number of samples per channel actually output
                int out_samples = swr_convert(swrCtx, &out_buffer, max_out_samples,
                                              (const uint8_t **) frame->data, frame->nb_samples);
                if (out_samples > 0) {
                    // Size in bytes of the converted samples
                    int out_buffer_size = av_samples_get_buffer_size(NULL, out_channel_nb,
                                                                     out_samples, out_sample_fmt, 1);
                    // Copy out_buffer into a Java byte array
                    jbyteArray audio_sample_array = (*env)->NewByteArray(env, out_buffer_size);
                    jbyte *sample_bytep = (*env)->GetByteArrayElements(env, audio_sample_array, NULL);
                    memcpy(sample_bytep, out_buffer, out_buffer_size);
                    // Commit the copy back to the Java array and release the elements
                    (*env)->ReleaseByteArrayElements(env, audio_sample_array, sample_bytep, 0);
                    // Write the PCM data with AudioTrack.write
                    (*env)->CallIntMethod(env, audio_track, audio_track_write_mid,
                                          audio_sample_array, 0, out_buffer_size);
                    // Release the local reference so references do not pile up inside the loop
                    (*env)->DeleteLocalRef(env, audio_sample_array);
                }
            }
        }
        av_free_packet(packet);
    }

    (*env)->CallVoidMethod(env, audio_track, audio_track_stop_mid);
    av_free(packet);
    av_frame_free(&frame);
    av_free(out_buffer);
    swr_free(&swrCtx);
    avcodec_close(codecCtx);
    avformat_close_input(&pFormatCtx);
    (*env)->ReleaseStringUTFChars(env, input_, input);
}
```

CMakeLists.txt

```cmake
cmake_minimum_required(VERSION 3.4.1)

include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/include)

set(jnilibs "${CMAKE_SOURCE_DIR}/src/main/jniLibs")
set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${jnilibs}/${ANDROID_ABI})

add_library( # Sets the name of the library.
             native-lib

             # Sets the library as a shared library.
             SHARED

             # Provides a relative path to your source file(s).
             src/main/cpp/native-lib.c)

# Add the eight FFmpeg libraries and the libyuv library
add_library(avutil-54 SHARED IMPORTED)
set_target_properties(avutil-54 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libavutil-54.so")

add_library(swresample-1 SHARED IMPORTED)
set_target_properties(swresample-1 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libswresample-1.so")

add_library(avcodec-56 SHARED IMPORTED)
set_target_properties(avcodec-56 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libavcodec-56.so")

add_library(avformat-56 SHARED IMPORTED)
set_target_properties(avformat-56 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libavformat-56.so")

add_library(swscale-3 SHARED IMPORTED)
set_target_properties(swscale-3 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libswscale-3.so")

add_library(postproc-53 SHARED IMPORTED)
set_target_properties(postproc-53 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libpostproc-53.so")

add_library(avfilter-5 SHARED IMPORTED)
set_target_properties(avfilter-5 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libavfilter-5.so")

add_library(avdevice-56 SHARED IMPORTED)
set_target_properties(avdevice-56 PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libavdevice-56.so")

add_library(yuv SHARED IMPORTED)
set_target_properties(yuv PROPERTIES IMPORTED_LOCATION "${jnilibs}/${ANDROID_ABI}/libyuv.so")

find_library( # Sets the name of the path variable.
              log-lib

              # Specifies the name of the NDK library that
              # you want CMake to locate.
              log)

# Find the Android library needed for window drawing
find_library(android-lib android)

target_link_libraries(native-lib
                      ${log-lib}
                      ${android-lib}
                      avutil-54
                      swresample-1
                      avcodec-56
                      avformat-56
                      swscale-3
                      postproc-53
                      avfilter-5
                      avdevice-56
                      yuv)
```

PS: Remember to add the file read and write permissions.

# 2. Principle and Implementation of Audio-Video Synchronization

## 2.1. Principles

Suppose audio is played purely according to its sample rate and video purely according to its frame rate. The two will be hard to keep in sync because of factors such as device speed and decoding efficiency, and the offset between them grows linearly over time. There are three ways to synchronize audio and video:

1. Synchronize both audio and video to an external clock. This was my first idea, but it does not work well. People are more sensitive to changes in sound than to changes in images. Frequently adjusting audio playback therefore sounds harsh or noisy, which hurts the user experience. (PS: a bit of popular-science biology thrown in along the way; I'm rather pleased with myself ^_^.)
2. Synchronize the audio to the video's clock. This does not work either, for the same reason.
3. Synchronize the video to the audio's clock. This is the approach used here.

So the principle is to use the audio clock to decide whether the video is running fast or slow, and to adjust the video's speed accordingly. In effect, it is a dynamic process of chasing and waiting.

## 2.2. Some concepts

Both audio and video have a DTS and a PTS. The DTS (Decoding Time Stamp) tells the decoder the order in which to decode packets. The PTS (Presentation Time Stamp) gives the order in which the data decoded from packets is displayed. For audio the two are the same. In video, however, B-frames (bi-directionally predicted frames) make the decoding order differ from the display order, so a video frame's DTS and PTS are not necessarily equal.

Time base: see the FFmpeg source code:

```c
/**
 * This is the fundamental unit of time (in seconds) in terms
 * of which frame timestamps are represented. For fixed-fps content,
 * timebase should be 1/framerate and timestamp increments should be
 * identically 1.
 * This often, but not always is the inverse of the frame rate or field rate
 * for video.
 * - encoding: MUST be set by user.
 * - decoding: the use of this field for decoding is deprecated.
 *             Use framerate instead.
 */
AVRational time_base;

/**
 * rational number numerator/denominator
 */
typedef struct AVRational{
    int num; ///< numerator
    int den; ///< denominator
} AVRational;
```

My understanding is that FFmpeg expresses its unit of time as a fraction, where `num` is the numerator and `den` is the denominator. FFmpeg also provides a function that converts it to a double:

```c
/**
 * Convert rational to double.
 * @param a rational to convert
 * @return (double) a
 */
static inline double av_q2d(AVRational a){
    return a.num / (double) a.den;
}
```

So the display time of a video frame, in seconds, is calculated as:

```c
time = pts * av_q2d(time_base);
```
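The sketch below makes the formula concrete without linking FFmpeg. It declares a local stand-in for `AVRational` and repeats `av_q2d`'s computation; the names `Rational`, `q2d` and `pts_to_seconds` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical local stand-in for FFmpeg's AVRational. */
typedef struct {
    int num; /* numerator */
    int den; /* denominator */
} Rational;

/* Same computation as av_q2d(): the fraction as a double. */
static double q2d(Rational a) {
    assert(a.den != 0);
    return a.num / (double) a.den;
}

/* time (seconds) = pts * time_base */
static double pts_to_seconds(int64_t pts, Rational time_base) {
    return (double) pts * q2d(time_base);
}
```

With the 90 kHz time base used by MPEG-TS (`{1, 90000}`), a PTS of 180000 is 2 seconds. With a `{1, 25}` time base, a PTS of 50 is also 2 seconds, up to floating-point rounding.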

## 2.3. Synchronization code

**1. Audio part**

`clock` is how long the audio has been playing, from the start to the current moment. Whenever a packet carries a valid PTS, the clock is set from it:

```cpp
if (packet->pts != AV_NOPTS_VALUE) {
    audio->clock = av_q2d(audio->time_base) * packet->pts;
}
```

Then add the playback time of the data in this packet:

```cpp
double time = datalen / ((double) 44100 * 2 * 2);
audio->clock = audio->clock + time;
```
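Below is a minimal, self-contained sketch of this audio-clock bookkeeping. It assumes the same 44100 Hz, 16-bit, stereo PCM output. The struct `AudioClock` and its functions are hypothetical names. `NO_PTS` stands in for FFmpeg's `AV_NOPTS_VALUE` (which equals `INT64_MIN`), and the time base is passed as a plain double, i.e. what `av_q2d(time_base)` would return.

```c
#include <assert.h>
#include <stdint.h>

#define SAMPLE_RATE      44100
#define BYTES_PER_SAMPLE 2         /* 16-bit samples */
#define CHANNELS         2         /* stereo */
#define NO_PTS           INT64_MIN /* stand-in for AV_NOPTS_VALUE */

/* Hypothetical audio clock state. */
typedef struct {
    double clock;     /* seconds of audio played so far */
    double time_base; /* seconds per PTS tick, i.e. av_q2d(time_base) */
} AudioClock;

/* Playback time of datalen bytes of PCM: bytes / bytes per second. */
static double pcm_duration(int datalen) {
    assert(datalen >= 0);
    return datalen / ((double) SAMPLE_RATE * BYTES_PER_SAMPLE * CHANNELS);
}

/* Per packet: reset the clock from the PTS when there is one,
 * then add the playback time of the PCM data it produced. */
static void audio_clock_update(AudioClock *a, int64_t pts, int datalen) {
    if (pts != NO_PTS) {
        a->clock = a->time_base * (double) pts;
    }
    a->clock += pcm_duration(datalen);
}
```

One second of this format is 44100 × 2 × 2 = 176400 bytes, so `pcm_duration(176400)` is exactly 1.0.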

`datalen` is the length of the data in bytes. The sample rate is 44100 Hz, each sample is 16 bits (2 bytes) and there are 2 channels, so the playback time is the data length divided by the number of bytes per second. PS: this duration calculation is not perfect and has quite a few problems; I will come back to them later.

**2. Video part**

First define a few values:

```cpp
double last_play,      // play time of the previous frame
       play,           // play time of the current frame
       last_delay,     // interval between the previous two video frames
       delay,          // interval between two video frames
       audio_clock,    // actual playback time of the audio track
       diff,           // time difference between the video and audio frames
       sync_threshold, // acceptable range
       start_time,     // absolute time since the first frame
       pts,
       actual_delay;   // delay that is actually needed

// Once, before the first frame is shown
start_time = av_gettime() / 1000000.0;

// For each decoded frame:
// Get the pts
if ((pts = av_frame_get_best_effort_timestamp(frame)) == AV_NOPTS_VALUE) {
    pts = 0;
}
play = pts * av_q2d(vedio->time_base);
// Correct the play time
play = vedio->synchronize(frame, play);
delay = play - last_play;
if (delay <= 0 || delay > 1) {
    delay = last_delay;
}
audio_clock = vedio->audio->clock;
last_delay = delay;
last_play = play;
// Time difference between video and audio
diff = vedio->clock - audio_clock;
// Outside the acceptable range: show the frame at once (video behind)
// or wait twice as long (video ahead)
sync_threshold = (delay > 0.01 ? 0.01 : delay);
if (fabs(diff) < 10) {
    if (diff <= -sync_threshold) {
        delay = 0;
    } else if (diff >= sync_threshold) {
        delay = 2 * delay;
    }
}
start_time += delay;
actual_delay = start_time - av_gettime() / 1000000.0;
if (actual_delay < 0.01) {
    actual_delay = 0.01;
}
// Sleep. FFmpeg recommends writing it this way; the reason still needs investigating
av_usleep(actual_delay * 1000000.0 + 6000);
```
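The heart of this chase-and-wait logic is the adjustment of `delay`, which can be pulled out into a pure function and checked in isolation. Below is a sketch that mirrors the snippet's rules: the threshold is capped at 0.01 s, and no correction is made when the difference is 10 s or more. The function name `compute_video_delay` is hypothetical.

```c
#include <assert.h>

/* Hypothetical helper: adjust the nominal frame delay (seconds) given
 * diff = video clock - audio clock (seconds), as in the snippet above. */
static double compute_video_delay(double delay, double diff) {
    assert(delay >= 0.0);
    /* Acceptable range: 10 ms, or the frame delay itself if smaller */
    double sync_threshold = delay > 0.01 ? 0.01 : delay;
    double abs_diff = diff < 0 ? -diff : diff;
    if (abs_diff < 10) {               /* ignore absurdly large differences */
        if (diff <= -sync_threshold) {
            delay = 0;                 /* video behind audio: show next frame at once */
        } else if (diff >= sync_threshold) {
            delay = 2 * delay;         /* video ahead of audio: wait twice as long */
        }
    }
    return delay;
}
```

For a 25 fps stream (delay 0.04 s), video that is 50 ms behind the audio is shown immediately, while video that is 50 ms ahead waits 0.08 s. Differences inside the threshold leave the delay unchanged.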

The method that corrects `play` (the play time) is below. The formula `repeat_pict / (2 * fps)` is the one given in FFmpeg's own documentation:

```cpp
double synchronize(AVFrame *frame, double play) {
    // clock is the current playback position
    if (play != 0)
        clock = play;
    else // pts is 0: use the previous frame's time
        play = clock;
    // pts may be 0, so advance clock ourselves.
    // Extra delay required:
    double repeat_pict = frame->repeat_pict;
    // Uses the AVCodecContext time base instead of the stream's
    double frame_delay = av_q2d(codec->time_base);
    // fps
    double fps = 1 / frame_delay;
    // pts plus this delay is the display time
    double extra_delay = repeat_pict / (2 * fps);
    double delay = extra_delay + frame_delay;
    clock += delay;
    return play;
}
```
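The sketch below isolates the delay part of `synchronize` to show what `repeat_pict / (2 * fps)` contributes. It assumes, as the method does, that the time base (given here as `num/den`, i.e. what `av_q2d(codec->time_base)` returns) equals the duration of one frame. The function name is hypothetical.

```c
#include <assert.h>

/* Hypothetical helper: display duration of one frame in seconds, including
 * the extra delay signalled by repeat_pict: extra_delay = repeat_pict / (2 * fps). */
static double frame_display_duration(int tb_num, int tb_den, int repeat_pict) {
    assert(tb_num > 0 && tb_den > 0 && repeat_pict >= 0);
    double frame_delay = tb_num / (double) tb_den; /* like av_q2d(codec->time_base) */
    double fps = 1 / frame_delay;
    double extra_delay = repeat_pict / (2 * fps);
    return frame_delay + extra_delay;
}
```

With a 1/25 time base, a normal frame lasts 0.04 s. A frame with `repeat_pict = 1` is held for an extra half frame (one field), giving 0.06 s.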

Reference resources

https://www.jianshu.com/p/3578e794f6b5

https://www.jianshu.com/p/de3c07fc6f81

Ali P7 Mobile Internet Architect Advanced Video (Daily Update) Free Learning Click:[https://space.bilibili.com/474380680]( )

### Other key points of knowledge

Here are a few Android Industry gangsters sort out some advanced data corresponding to the technical points above.

**[CodeChina Open Source Project: Android Summary of Learning Notes+Mobile Architecture Video+True subject for factory interview+Project Actual Source](https://codechina.csdn.net/m0_60958482/android_p7)**

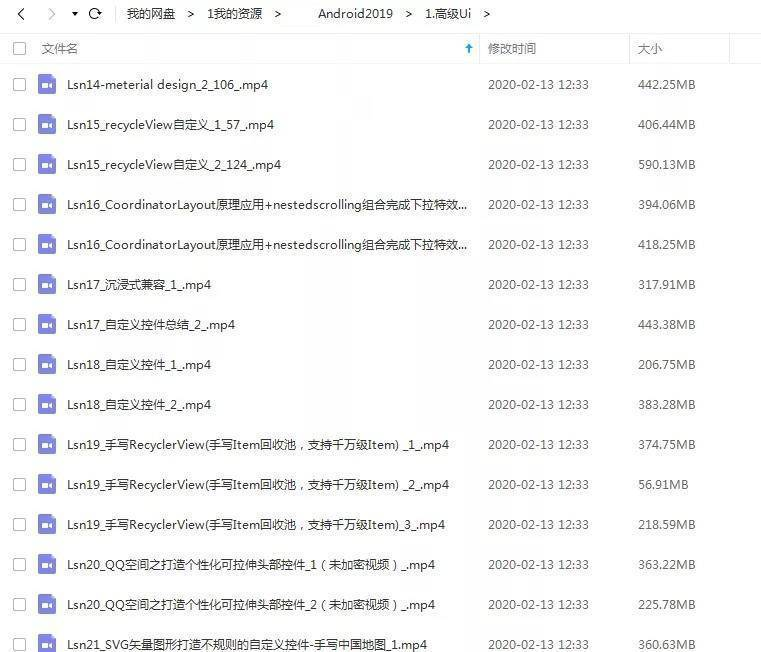

**Advanced Advanced Chapter - Advanced UI,custom View(Partial Display)**

UI This knowledge is used by most people today.It was a hit that year Android Get started training, learn this small piece of knowledge and you'll be able to find a good job.Obviously, however, it's not enough now to refuse endless CV,Go to the project in person, read the source code, study the principle!

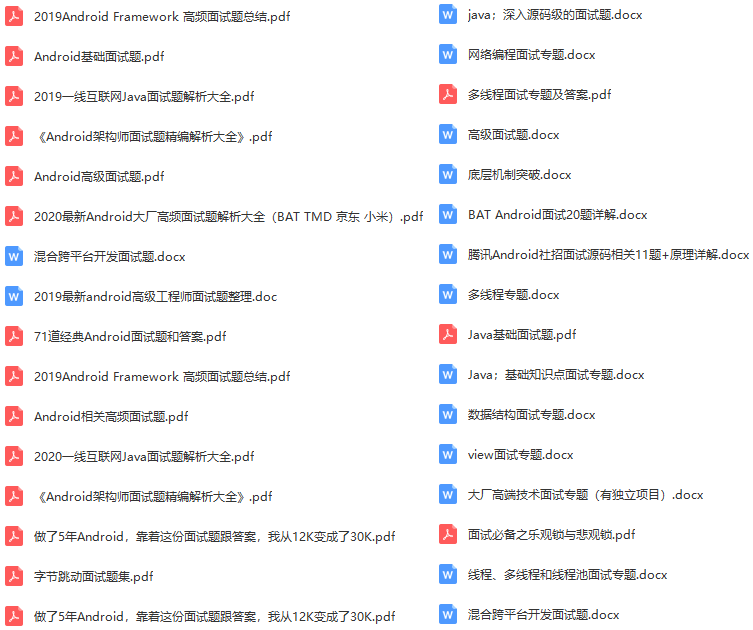

* **Part Set of Interview Questions**

delay = av_q2d(codec->time_base);

//fps

double fps = 1 / frame_delay;

//pts plus this delay is the display time

double extra_delay = repeat_pict / (2 * fps);

double delay = extra_delay + frame_delay;

clock += delay;

return play;

}

Reference resources

https://www.jianshu.com/p/3578e794f6b5

https://www.jianshu.com/p/de3c07fc6f81

Ali P7 Mobile Internet Architect Advanced Video (Updated Daily) Free Learning Click: https://space.bilibili.com/474380680

Other key points of knowledge

Here are some advanced data compiled by Android industry geeks for the technical points above.

[img-xE1y7xck-1630514813921)]

Advanced Advanced - Advanced UI, Custom View (partial display)

UI is the most used knowledge today.That year's hot Android introductory training, learn this small piece of knowledge and you can easily find a good job.Obviously, it's not enough now. Reject endless CV s, go to the project in person, read the source code, and study the principles.

[img-BVttBFJS-1630514813924)]

- Part Set of Interview Questions

[img-v4zuqT7N-1630514813925)]