1. Distributed installation and deployment

1. Cluster planning

Deploy Zookeeper on the three nodes hadoop102, hadoop103 and hadoop104.

2. Extraction and installation

(1) Extract the Zookeeper installation package to the /opt/module/ directory

[zs@hadoop102 software]$ tar -zxvf zookeeper-3.4.10.tar.gz -C /opt/module/

(2) Synchronize the /opt/module/zookeeper-3.4.10 directory to hadoop103 and hadoop104

[zs@hadoop102 module]$ xsync zookeeper-3.4.10/

3. Configure the server number

(1) Create a zkData directory under /opt/module/zookeeper-3.4.10/

[zs@hadoop102 zookeeper-3.4.10]$ mkdir -p zkData

(2) Create a myid file in the /opt/module/zookeeper-3.4.10/zkData directory

[zs@hadoop102 zkData]$ touch myid

Be sure to create the myid file on Linux. If it is created or edited in Notepad++, its contents may end up garbled.

(3) Edit the myid file

[zs@hadoop102 zkData]$ vi myid

Enter the number that corresponds to this server:

2

(4) Distribute the myid file to the other machines

[zs@hadoop102 zkData]$ xsync myid

Then change the contents of the myid file to 3 on hadoop103 and to 4 on hadoop104. Do not skip this step.
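The following Python sketch reproduces locally what steps (2) to (4) leave on each machine: one myid file per host that holds only that host's number. It is a minimal illustration. It assumes the host-to-number mapping used in this guide and writes into a temporary directory, not into the real /opt/module/zookeeper-3.4.10/zkData on each node. The helper name `write_myid` is hypothetical. Writing raw ASCII bytes from a script avoids any editor-introduced encoding or line-ending changes.

```python
import tempfile
from pathlib import Path

# Host -> server number, as used throughout this guide (an assumption of this sketch).
SERVER_IDS = {"hadoop102": 2, "hadoop103": 3, "hadoop104": 4}


def write_myid(data_dir: Path, server_id: int) -> Path:
    """Hypothetical helper: write a myid file that holds only the server number."""
    data_dir.mkdir(parents=True, exist_ok=True)
    myid = data_dir / "myid"
    # Raw ASCII bytes with a Unix newline: no BOM, no Windows line endings.
    myid.write_bytes(f"{server_id}\n".encode("ascii"))
    return myid


# Simulate each host's zkData directory under one local temporary folder.
root = Path(tempfile.mkdtemp())
written = {host: write_myid(root / host / "zkData", sid) for host, sid in SERVER_IDS.items()}

for host, path in written.items():
    print(host, path.read_bytes().decode("ascii").strip())
```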

4. Configure the zoo.cfg file

(1) In the /opt/module/zookeeper-3.4.10/conf directory, rename zoo_sample.cfg to zoo.cfg

[zs@hadoop102 conf]$ mv zoo_sample.cfg zoo.cfg

(2) Open the zoo.cfg file

[zs@hadoop102 conf]$ vim zoo.cfg

Change the data storage path:

dataDir=/opt/module/zookeeper-3.4.10/zkData

Add the following configuration:

#######################cluster##########################
server.2=hadoop102:2888:3888
server.3=hadoop103:2888:3888
server.4=hadoop104:2888:3888
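The cluster block follows a fixed pattern: one line per server, where the number after `server.` must equal that host's myid. The Python sketch below derives the block from the same host-to-number mapping. The mapping and the function name `cluster_lines` are assumptions made for illustration. Ports 2888 and 3888 are the values used in the configuration above.

```python
# Host -> server number; each N in server.N must equal that host's myid.
SERVER_IDS = {"hadoop102": 2, "hadoop103": 3, "hadoop104": 4}
QUORUM_PORT = 2888    # port for exchanging information with the Leader
ELECTION_PORT = 3888  # port used during leader election


def cluster_lines(server_ids: dict[str, int]) -> list[str]:
    """Hypothetical helper: build the server.N=host:2888:3888 lines, ordered by N."""
    return [
        f"server.{sid}={host}:{QUORUM_PORT}:{ELECTION_PORT}"
        for host, sid in sorted(server_ids.items(), key=lambda item: item[1])
    ]


print("\n".join(cluster_lines(SERVER_IDS)))
```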

(3) Synchronize the zoo.cfg configuration file

[zs@hadoop102 conf]$ xsync zoo.cfg

(4) Interpretation of configuration parameters

server.A=B:C:D

- A is a number identifying the server. In cluster mode, a file named myid is placed in the dataDir directory, and it contains the value of A. When Zookeeper starts, it reads this file and compares the value with the configuration in zoo.cfg to determine which server it is.
- B is the IP address (or hostname) of the server.
- C is the port this server uses to exchange information with the cluster's Leader.
- D is the election port. If the cluster's Leader goes down, the servers communicate with each other through this port to elect a new Leader.
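To make the server.A=B:C:D rule and the myid lookup concrete, here is a simplified Python model. It is not Zookeeper's real configuration parser. It only handles the plain four-part form used in this guide and ignores every other line. The function names `parse_servers` and `whoami` are hypothetical.

```python
import re

# server.A=B:C:D -> A (server number), B (host), C (Leader port), D (election port)
SERVER_LINE = re.compile(r"^server\.(\d+)=([^:]+):(\d+):(\d+)$")


def parse_servers(cfg_text: str) -> dict[int, tuple[str, int, int]]:
    """Map A -> (B, C, D) for every server.A=B:C:D line; other lines are ignored."""
    servers = {}
    for line in cfg_text.splitlines():
        match = SERVER_LINE.match(line.strip())
        if match:
            a, b, c, d = match.groups()
            servers[int(a)] = (b, int(c), int(d))
    return servers


def whoami(cfg_text: str, myid_text: str) -> tuple[str, int, int]:
    """Mimic the startup check: the number in myid selects this server's entry."""
    my_id = int(myid_text.strip())
    servers = parse_servers(cfg_text)
    if my_id not in servers:
        raise ValueError(f"myid is {my_id} but zoo.cfg has no server.{my_id} line")
    return servers[my_id]


cfg = """dataDir=/opt/module/zookeeper-3.4.10/zkData
#######################cluster##########################
server.2=hadoop102:2888:3888
server.3=hadoop103:2888:3888
server.4=hadoop104:2888:3888
"""
print(whoami(cfg, "3\n"))   # ('hadoop103', 2888, 3888)
```

A myid value with no matching server line is a common cause of startup failures. That is why the sketch raises an error instead of guessing.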

5. Cluster operation

(1) Start Zookeeper on each node

[zs@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh start
[zs@hadoop103 zookeeper-3.4.10]$ bin/zkServer.sh start
[zs@hadoop104 zookeeper-3.4.10]$ bin/zkServer.sh start

(2) View status

[zs@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh status
JMX enabled by default
Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg
Mode: follower

[zs@hadoop103 zookeeper-3.4.10]$ bin/zkServer.sh status
JMX enabled by default
Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg
Mode: leader

[zs@hadoop104 zookeeper-3.4.10]$ bin/zkServer.sh status
JMX enabled by default
Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg
Mode: follower
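With more nodes, checking every status by eye gets tedious. The Python sketch below extracts the role from the `Mode:` line and checks that the three-node cluster above reports exactly one leader. It works on copies of the output shown here rather than running `zkServer.sh` itself. The function name `parse_mode` is hypothetical.

```python
import re

CONFIG = "Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg"


def parse_mode(status_output: str) -> str:
    """Return the role printed on the 'Mode:' line of `zkServer.sh status`."""
    match = re.search(r"^Mode:\s*(\w+)", status_output, re.MULTILINE)
    if match is None:
        raise ValueError("no Mode line found; the server may not be running")
    return match.group(1)


# Status output copied from the three nodes above.
outputs = {
    "hadoop102": "JMX enabled by default\n" + CONFIG + "\nMode: follower\n",
    "hadoop103": "JMX enabled by default\n" + CONFIG + "\nMode: leader\n",
    "hadoop104": "JMX enabled by default\n" + CONFIG + "\nMode: follower\n",
}

modes = {host: parse_mode(text) for host, text in outputs.items()}
leaders = [host for host, mode in modes.items() if mode == "leader"]
# In this three-node cluster, one node should lead and the other two follow.
assert len(leaders) == 1, f"expected one leader, found {leaders}"
print(modes)
```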

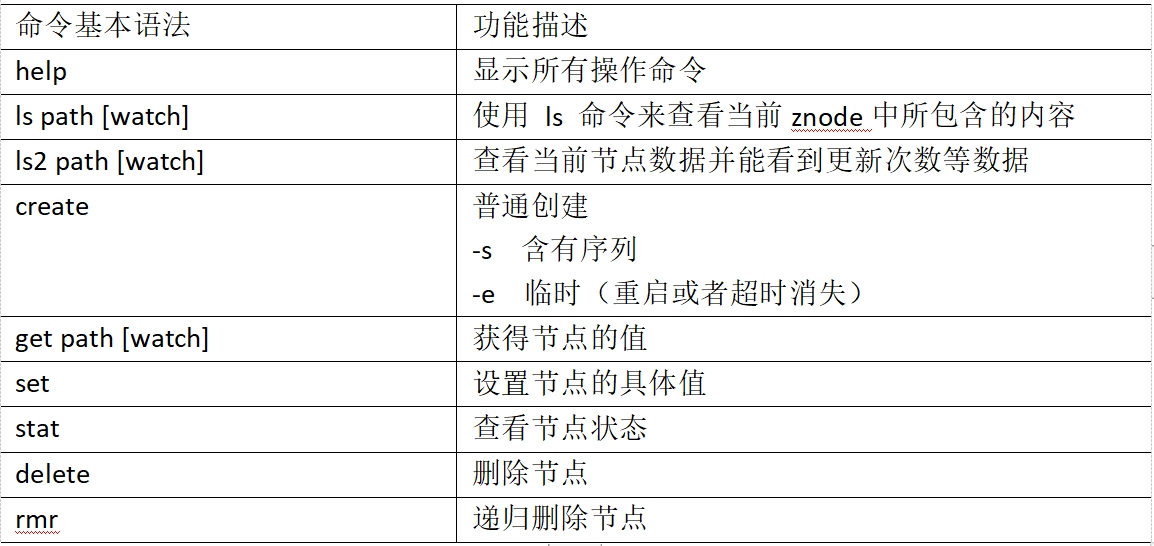

2. Client command line operation

1. Start the client

[zs@hadoop103 zookeeper-3.4.10]$ bin/zkCli.sh

2. Display all available commands

[zk: localhost:2181(CONNECTED) 1] help

3. List the child nodes of the current znode

[zk: localhost:2181(CONNECTED) 0] ls /
[zookeeper]

4. View the child nodes and detailed status of the current node

[zk: localhost:2181(CONNECTED) 1] ls2 /
[zookeeper]
cZxid = 0x0
ctime = Thu Jan 01 08:00:00 CST 1970
mZxid = 0x0
mtime = Thu Jan 01 08:00:00 CST 1970
pZxid = 0x0
cversion = -1
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 1
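Each `name = value` line above is one field of the node's status (stat) structure. For example, `ephemeralOwner = 0x0` marks a persistent node, and `numChildren` counts direct children. The Python sketch below turns such output into a dictionary so that individual fields can be checked. It assumes the `name = value` layout shown here. The function name `parse_stat` is hypothetical.

```python
def parse_stat(output: str) -> dict[str, str]:
    """Collect the 'name = value' lines of ls2/get/stat output into a dict."""
    stat: dict[str, str] = {}
    for line in output.splitlines():
        name, sep, value = line.partition(" = ")
        if sep:  # skip lines without ' = ', such as the child list [zookeeper]
            stat[name.strip()] = value.strip()
    return stat


ls2_output = """[zookeeper]
cZxid = 0x0
ctime = Thu Jan 01 08:00:00 CST 1970
mZxid = 0x0
mtime = Thu Jan 01 08:00:00 CST 1970
pZxid = 0x0
cversion = -1
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 1
"""

stat = parse_stat(ls2_output)
print(stat["numChildren"], stat["ephemeralOwner"])   # 1 0x0
```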

5. Create two ordinary (persistent) nodes

[zk: localhost:2181(CONNECTED) 3] create /sanguo "jinlian"
Created /sanguo
[zk: localhost:2181(CONNECTED) 4] create /sanguo/shuguo "liubei"
Created /sanguo/shuguo
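The order of the two commands matters: a znode can only be created under a parent that already exists, so /sanguo has to come before /sanguo/shuguo. The toy in-memory model below captures that rule. It is an illustration only, not the Zookeeper client API, and the class name `ZNodeTree` is hypothetical.

```python
class ZNodeTree:
    """Toy in-memory model of the znode namespace; not the Zookeeper client API."""

    def __init__(self) -> None:
        self.data: dict[str, bytes] = {"/": b""}

    @staticmethod
    def parent_of(path: str) -> str:
        return path.rsplit("/", 1)[0] or "/"

    def create(self, path: str, value: bytes) -> str:
        # A node can only be created once, and only under an existing parent.
        if path in self.data:
            raise ValueError(f"Node already exists: {path}")
        if self.parent_of(path) not in self.data:
            raise ValueError(f"Node does not exist: {self.parent_of(path)}")
        self.data[path] = value
        return path

    def children(self, path: str) -> list[str]:
        return sorted(p.rsplit("/", 1)[1] for p in self.data
                      if p != "/" and self.parent_of(p) == path)


tree = ZNodeTree()
tree.create("/sanguo", b"jinlian")
tree.create("/sanguo/shuguo", b"liubei")
print(tree.children("/sanguo"))   # ['shuguo']

try:
    tree.create("/jin/simayi", b"x")   # parent /jin was never created
except ValueError as err:
    print(err)                        # Node does not exist: /jin
```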

6. Get the value of the node

[zk: localhost:2181(CONNECTED) 5] get /sanguo
jinlian
cZxid = 0x100000003
ctime = Wed Aug 29 00:03:23 CST 2018
mZxid = 0x100000003
mtime = Wed Aug 29 00:03:23 CST 2018
pZxid = 0x100000004
cversion = 1
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 7
numChildren = 1
[zk: localhost:2181(CONNECTED) 6] get /sanguo/shuguo
liubei
cZxid = 0x100000004
ctime = Wed Aug 29 00:04:35 CST 2018
mZxid = 0x100000004
mtime = Wed Aug 29 00:04:35 CST 2018
pZxid = 0x100000004
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 6
numChildren = 0
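`dataLength` is the size of the stored data in bytes, not in characters. That is why "jinlian" reports 7 and "liubei" reports 6. The short Python check below compares those values with the byte length of each string. It assumes the client encodes strings as UTF-8. For ASCII values like these, the encoding makes no difference, but for multi-byte characters the two counts diverge.

```python
# (value, dataLength) pairs copied from the two `get` results above.
observed = {
    "/sanguo": ("jinlian", 7),
    "/sanguo/shuguo": ("liubei", 6),
}

for path, (value, data_length) in observed.items():
    # Assumption: the client encodes the string as UTF-8 before storing it.
    encoded = value.encode("utf-8")
    assert len(encoded) == data_length, path
    print(f"{path}: {value!r} -> {len(encoded)} bytes")

# With a multi-byte character the counts differ (UTF-8 assumed): prints "1 3".
print(len("蜀"), len("蜀".encode("utf-8")))
```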

7. Create an ephemeral node

[zk: localhost:2181(CONNECTED) 7] create -e /sanguo/wuguo "zhouyu"
Created /sanguo/wuguo

(1) The node is visible from the current client

[zk: localhost:2181(CONNECTED) 3] ls /sanguo
[wuguo, shuguo]

(2) Exit the current client and start it again

[zk: localhost:2181(CONNECTED) 12] quit
[zs@hadoop104 zookeeper-3.4.10]$ bin/zkCli.sh

(3) Check again: the ephemeral node under /sanguo has been deleted

[zk: localhost:2181(CONNECTED) 0] ls /sanguo
[shuguo]
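An ephemeral node belongs to the client session that created it. The server removes the node when that session ends, either explicitly as with `quit` above, or by expiring after the session timeout if the client disappears. Persistent nodes report `ephemeralOwner = 0x0`. The toy model below mirrors that convention with an owner of 0. It is a simplified illustration, not the Zookeeper API, and the class name `SessionTree` is hypothetical.

```python
class SessionTree:
    """Toy model: each znode records an owner session; 0 marks a persistent node."""

    def __init__(self) -> None:
        self.owner: dict[str, int] = {}   # path -> owning session (like ephemeralOwner)
        self._next_session = 1

    def open_session(self) -> int:
        session = self._next_session
        self._next_session += 1
        return session

    def create(self, path: str, session: int, ephemeral: bool = False) -> None:
        self.owner[path] = session if ephemeral else 0

    def close_session(self, session: int) -> None:
        # Ending a session removes every ephemeral node that session owned.
        self.owner = {p: s for p, s in self.owner.items() if s != session}

    def ls(self, parent: str) -> list[str]:
        prefix = parent.rstrip("/") + "/"
        return sorted(p[len(prefix):] for p in self.owner
                      if p.startswith(prefix) and "/" not in p[len(prefix):])


tree = SessionTree()
first = tree.open_session()
tree.create("/sanguo", first)
tree.create("/sanguo/shuguo", first)
tree.create("/sanguo/wuguo", first, ephemeral=True)
print(tree.ls("/sanguo"))    # ['shuguo', 'wuguo']

tree.close_session(first)    # corresponds to `quit`
second = tree.open_session() # corresponds to restarting zkCli.sh
print(tree.ls("/sanguo"))    # ['shuguo']
```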

8. Create sequential nodes

(1) First create an ordinary (persistent) node /sanguo/weiguo

[zk: localhost:2181(CONNECTED) 1] create /sanguo/weiguo "caocao"
Created /sanguo/weiguo

(2) Create sequential nodes

[zk: localhost:2181(CONNECTED) 2] create -s /sanguo/weiguo/xiaoqiao "jinlian"
Created /sanguo/weiguo/xiaoqiao0000000000
[zk: localhost:2181(CONNECTED) 3] create -s /sanguo/weiguo/daqiao "jinlian"
Created /sanguo/weiguo/daqiao0000000001
[zk: localhost:2181(CONNECTED) 4] create -s /sanguo/weiguo/diaocan "jinlian"
Created /sanguo/weiguo/diaocan0000000002

The sequence number is a counter kept by the parent node, zero-padded to 10 digits. If the parent has no child nodes yet, numbering starts from 0. If it already has 2 child nodes, numbering starts from 2, and so on.
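The Python toy model below reproduces the numbering rule just described. The parent keeps a counter, and a sequential child gets that counter appended as a 10-digit, zero-padded suffix. In this toy the counter advances on every child creation, sequential or not, which matches the "starts from 2" case above. The real server keeps this counter in the parent's metadata, so treat the sketch as an approximation. The class name `SequentialParent` and the children "a", "b" and "c" are hypothetical.

```python
class SequentialParent:
    """Toy model of a parent znode that numbers its sequential children."""

    def __init__(self) -> None:
        self.children: list[str] = []
        self.counter = 0   # advanced on every child creation, sequential or not

    def create(self, name: str, sequential: bool = False) -> str:
        if sequential:
            # Append a 10-digit, zero-padded counter, e.g. xiaoqiao0000000000.
            name = f"{name}{self.counter:010d}"
        self.children.append(name)
        self.counter += 1
        return name


weiguo = SequentialParent()   # empty parent: numbering starts at 0
for child in ("xiaoqiao", "daqiao", "diaocan"):
    print(weiguo.create(child, sequential=True))

other = SequentialParent()    # parent that already has two children
other.create("a")
other.create("b")
print(other.create("c", sequential=True))   # c0000000002
```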

9. Modify a node's data value

[zk: localhost:2181(CONNECTED) 6] set /sanguo/weiguo "simayi"
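Each successful `set` replaces the data and increments the node's `dataVersion`. Zookeeper's setData call also accepts an expected version, where -1 means "any version", so a write can be rejected if the node changed in the meantime. The Python toy below models both behaviours. The class name `VersionedNode` and its error message are hypothetical. The numbers line up with `stat /sanguo` at the end of this section, which shows `dataVersion = 1` and `dataLength = 4` after /sanguo is set to "xisi".

```python
class VersionedNode:
    """Toy model of a znode's data plus its dataVersion counter."""

    ANY_VERSION = -1   # -1 means "do not check the version"

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.data_version = 0   # a newly created node reports dataVersion = 0

    def set(self, data: bytes, expected_version: int = ANY_VERSION) -> int:
        # Conditional write: reject it if the node has changed since it was read.
        if expected_version != self.ANY_VERSION and expected_version != self.data_version:
            raise ValueError(f"version mismatch: node is at {self.data_version}, "
                             f"caller expected {expected_version}")
        self.data = data
        self.data_version += 1
        return self.data_version


sanguo = VersionedNode(b"jinlian")
print(sanguo.set(b"xisi"))   # 1
print(len(sanguo.data))      # 4

try:
    sanguo.set(b"simayi", expected_version=0)   # stale version: rejected
except ValueError as err:
    print(err)
```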

10. Watching node data changes

(1) On the hadoop104 host, register a watch for data changes on the /sanguo node

[zk: localhost:2181(CONNECTED) 8] get /sanguo watch

(2) On the hadoop103 host, modify the data of the /sanguo node

[zk: localhost:2181(CONNECTED) 1] set /sanguo "xisi"

(3) Observe the data-change notification received on the hadoop104 host

WATCHER:: WatchedEvent state:SyncConnected type:NodeDataChanged path:/sanguo
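In this Zookeeper version, a watch is a one-time trigger. It fires on the first change after it is registered and is then discarded, so a second `set` produces no further notification unless the client registers the watch again. The Python toy below models that behaviour. The class name `WatchedTree` and the callback signature are hypothetical, not the real client API.

```python
from collections import defaultdict
from typing import Callable, Optional

Watcher = Callable[[str, str], None]   # (event type, path) -> None


class WatchedTree:
    """Toy model of one-shot data watches, as registered by `get /path watch`."""

    def __init__(self) -> None:
        self.data: dict[str, bytes] = {}
        self.data_watches: defaultdict[str, list[Watcher]] = defaultdict(list)

    def get(self, path: str, watcher: Optional[Watcher] = None) -> bytes:
        if watcher is not None:
            self.data_watches[path].append(watcher)
        return self.data[path]

    def set(self, path: str, value: bytes) -> None:
        self.data[path] = value
        # Each watch fires once and is then discarded; re-register to keep watching.
        for watcher in self.data_watches.pop(path, []):
            watcher("NodeDataChanged", path)


events: list[tuple[str, str]] = []
tree = WatchedTree()
tree.data["/sanguo"] = b"jinlian"

tree.get("/sanguo", lambda kind, path: events.append((kind, path)))  # on hadoop104
tree.set("/sanguo", b"xisi")     # on hadoop103: the watch fires
tree.set("/sanguo", b"again")    # watch already consumed: nothing fires
print(events)                    # [('NodeDataChanged', '/sanguo')]
```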

11. Watching child node changes (path changes)

(1) On the hadoop104 host, register a watch for changes to the child nodes of /sanguo

[zk: localhost:2181(CONNECTED) 1] ls /sanguo watch
[aa0000000001, server101]

(2) On the hadoop103 host, create a child node under /sanguo

[zk: localhost:2181(CONNECTED) 2] create /sanguo/jin "simayi"
Created /sanguo/jin

(3) Observe the child-change notification received on the hadoop104 host

WATCHER:: WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/sanguo
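A child watch set by `ls /path watch` fires when a child is created or deleted. Changing a child's data does not trigger it, because the parent's list of children stays the same. Like data watches, it fires only once. The Python toy below illustrates these rules. The class name `ChildWatchTree` and the value "simazhao" are hypothetical, and the model is not the real client API.

```python
from typing import Callable, Optional

Watcher = Callable[[str, str], None]   # (event type, path) -> None


class ChildWatchTree:
    """Toy model of one-shot child watches, as registered by `ls /path watch`."""

    def __init__(self) -> None:
        self.data: dict[str, bytes] = {"/sanguo": b"xisi"}
        self.child_watches: dict[str, list[Watcher]] = {}

    @staticmethod
    def parent_of(path: str) -> str:
        return path.rsplit("/", 1)[0] or "/"

    def ls(self, path: str, watcher: Optional[Watcher] = None) -> list[str]:
        if watcher is not None:
            self.child_watches.setdefault(path, []).append(watcher)
        return sorted(p.rsplit("/", 1)[1] for p in self.data if self.parent_of(p) == path)

    def _children_changed(self, parent: str) -> None:
        # One-shot: the parent's child watches fire once and are removed.
        for watcher in self.child_watches.pop(parent, []):
            watcher("NodeChildrenChanged", parent)

    def create(self, path: str, value: bytes) -> None:
        self.data[path] = value
        self._children_changed(self.parent_of(path))

    def delete(self, path: str) -> None:
        del self.data[path]
        self._children_changed(self.parent_of(path))

    def set(self, path: str, value: bytes) -> None:
        # Changing a child's data leaves the parent's child list untouched.
        self.data[path] = value


events: list[tuple[str, str]] = []


def record(kind: str, path: str) -> None:
    events.append((kind, path))


tree = ChildWatchTree()
tree.ls("/sanguo", record)                 # on hadoop104
tree.create("/sanguo/jin", b"simayi")      # on hadoop103: fires the watch
tree.ls("/sanguo", record)                 # re-register (watches are one-shot)
tree.set("/sanguo/jin", b"simazhao")       # child data change: no child event
tree.delete("/sanguo/jin")                 # child removed: fires again
print(events)
```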

12. Delete node

[zk: localhost:2181(CONNECTED) 4] delete /sanguo/jin

13. Delete nodes recursively

[zk: localhost:2181(CONNECTED) 15] rmr /sanguo/shuguo
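`delete` only removes a node that has no children. `rmr` exists for whole subtrees, which it clears from the bottom up so that every individual delete hits a leaf. The Python sketch below models that on a plain set of paths. The functions `delete`, `rmr` and `is_descendant` are toy stand-ins, not the CLI's implementation, and their error messages are invented. The grandchild /sanguo/shuguo/guanyu is hypothetical and is added only so that /sanguo/shuguo has a child.

```python
def is_descendant(path: str, ancestor: str) -> bool:
    return path.startswith(ancestor.rstrip("/") + "/")


def delete(nodes: set[str], path: str) -> None:
    """Toy `delete`: refuses to remove a node that still has children."""
    if path not in nodes:
        raise ValueError(f"Node does not exist: {path}")
    if any(is_descendant(p, path) for p in nodes):
        raise ValueError(f"Node not empty: {path}")
    nodes.remove(path)


def rmr(nodes: set[str], path: str) -> list[str]:
    """Toy `rmr`: delete the subtree deepest-first so every delete hits a leaf."""
    subtree = [p for p in nodes if p == path or is_descendant(p, path)]
    order = sorted(subtree, key=lambda p: p.count("/"), reverse=True)
    for p in order:
        delete(nodes, p)
    return order


# /sanguo/shuguo/guanyu is a hypothetical grandchild added for illustration.
nodes = {"/sanguo", "/sanguo/shuguo", "/sanguo/shuguo/guanyu", "/sanguo/weiguo"}
try:
    delete(nodes, "/sanguo/shuguo")
except ValueError as err:
    print(err)                          # Node not empty: /sanguo/shuguo
print(rmr(nodes, "/sanguo/shuguo"))     # ['/sanguo/shuguo/guanyu', '/sanguo/shuguo']
print(sorted(nodes))                    # ['/sanguo', '/sanguo/weiguo']
```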

14. View node status

[zk: localhost:2181(CONNECTED) 17] stat /sanguo
cZxid = 0x100000003
ctime = Wed Aug 29 00:03:23 CST 2018
mZxid = 0x100000011
mtime = Wed Aug 29 00:21:23 CST 2018
pZxid = 0x100000014
cversion = 9
dataVersion = 1
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 4
numChildren = 1
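The three zxid fields are 64-bit transaction IDs. The high 32 bits hold the leader epoch and the low 32 bits a counter within that epoch. cZxid records the transaction that created the node, mZxid the last data change, and pZxid the last change to its children. The small Python helper below splits the values above. The function name `split_zxid` is hypothetical. The output shows that all three transactions happened in epoch 1, and that the node was last modified by counter 17 (0x11), after it was created by counter 3.

```python
def split_zxid(zxid: str) -> tuple[int, int]:
    """Split a hex zxid into (epoch, counter): the high and low 32 bits."""
    value = int(zxid, 16)          # int() accepts the 0x prefix when base is 16
    return value >> 32, value & 0xFFFFFFFF


# Values copied from `stat /sanguo` above.
for field, zxid in [("cZxid", "0x100000003"), ("mZxid", "0x100000011"), ("pZxid", "0x100000014")]:
    epoch, counter = split_zxid(zxid)
    print(f"{field}: epoch={epoch} counter={counter}")
```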
