
1 What is rate limiting

Rate limiting caps the rate of incoming traffic so that a system does not collapse under overload. Istio rate limiting falls into two categories: local and global. Local rate limiting uses the token bucket that Envoy provides internally; global rate limiting calls an external rate limit service. Rate limits can also be classified in other ways: by where they take effect (virtual host level or route level), by protocol (HTTP or TCP), by service location (inside the mesh or outbound requests to external services), and by cluster topology (single cluster or multi cluster).
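To make the token bucket idea concrete, here is a minimal Python sketch. It is not Envoy's implementation; the class name and the assumption that the bucket starts full are illustrative only, and the field names simply mirror the token_bucket settings described in the configuration section.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Simplified token bucket (illustrative, not Envoy's code)."""
    max_tokens: int          # capacity of the bucket
    tokens_per_fill: int     # tokens added on each fill
    fill_interval: float     # seconds between fills
    tokens: int = field(init=False)
    last_fill: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        # Assumption: the bucket starts full.
        self.tokens = self.max_tokens

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (seconds) may pass."""
        # Add tokens for every whole fill interval that has elapsed.
        fills = int((now - self.last_fill) // self.fill_interval)
        if fills > 0:
            self.tokens = min(self.max_tokens,
                              self.tokens + fills * self.tokens_per_fill)
            self.last_fill += fills * self.fill_interval
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False  # a proxy would answer with HTTP 429 here


bucket = TokenBucket(max_tokens=10, tokens_per_fill=10, fill_interval=60.0)
first_minute = [bucket.allow(now=1.0) for _ in range(15)]
print(first_minute.count(True))  # 10 requests pass, the other 5 are rejected
print(bucket.allow(now=61.0))    # True: the bucket was refilled after 60 s
```

With these values the sustained rate is tokens_per_fill / fill_interval, that is 10 requests per minute, while max_tokens bounds the size of a burst.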

2 Configuration

extensions.filters.http.ratelimit.v3.RateLimit:

{
"domain": "...",  # domain name; must match the domain in the ratelimit service configuration file
"stage": "...",  # stage number; must match the stage set in the route's rate limit configuration, default 0
"request_type": "...",  # internal, external or both; default both
"timeout": "{...}",  # timeout of the request to the ratelimit service, default 20 ms
"failure_mode_deny": "...",  # if true, requests are denied when the call to the ratelimit service fails
"rate_limited_as_resource_exhausted": "...",  # return the RESOURCE_EXHAUSTED gRPC code instead of UNAVAILABLE
"rate_limit_service": "{...}",  # the external rate limit service
"enable_x_ratelimit_headers": "...",  # version of the X-RateLimit headers to emit
"disable_x_envoy_ratelimited_header": "..."  # disable the x-envoy-ratelimited response header
}

rate_limit_service:

{
"grpc_service": "{...}",  # gRPC service that hosts the ratelimit service
"transport_api_version": "..."  # API version of the rate limit transport protocol
}

grpc_service:

{
"envoy_grpc": "{...}",  # Envoy gRPC client
"google_grpc": "{...}",  # Google gRPC client
"timeout": "{...}",  # gRPC request timeout
"initial_metadata": []  # additional metadata sent with the request
}

envoy_grpc:

{
"cluster_name": "...",  # cluster name
"authority": "..."  # value of the :authority header; defaults to cluster_name
}

google_grpc:

{
"target_uri": "...",  # target URI
"channel_credentials": "{...}",  # channel credentials
"call_credentials": [],  # call credentials
"stat_prefix": "...",  # stat prefix
"credentials_factory_name": "...",  # credentials factory name
"config": "{...}",  # additional configuration
"per_stream_buffer_limit_bytes": "{...}",  # buffer limit of each stream, default 1 MiB
"channel_args": "{...}"  # channel arguments
}

transport_api_version:

xDS API and non-xDS services version. This is used to describe both resource and transport protocol versions (in distinct configuration fields).

- AUTO: (DEFAULT) When not specified, we assume v2, to ease migration to Envoy's stable API versioning. If a client does not support v2 (e.g. due to deprecation), this is an invalid value.
- V2: Use xDS v2 API.
- V3: Use xDS v3 API.

enable_x_ratelimit_headers:

Defines the version of the standard to use for X-RateLimit headers.

- OFF: (DEFAULT) X-RateLimit headers disabled.
- DRAFT_VERSION_03: Use draft RFC Version 03.

extensions.filters.network.local_ratelimit.v3.LocalRateLimit

{
"stat_prefix": "...",  # stat prefix
"token_bucket": "{...}",  # token bucket settings
"runtime_enabled": "{...}"  # runtime flag that enables or disables the filter
}

token_bucket:

{
"max_tokens": "...",  # maximum number of tokens
"tokens_per_fill": "{...}",  # number of tokens added on each fill
"fill_interval": "{...}"  # interval between fills
}

runtime_enabled:

{
"default_value": "{...}",  # default value (true or false) used when the runtime key is not set
"runtime_key": "..."  # runtime key
}

config.route.v3.RateLimit

{
"stage": "{...}",  # stage number; must match the stage of the ratelimit filter
"disable_key": "...",  # runtime key that disables this rate limit configuration
"actions": [],  # actions that build the descriptor
"limit": "{...}"  # optional override of the limit
}

actions:

{
"source_cluster": "{...}",  # source cluster action
"destination_cluster": "{...}",  # destination cluster action
"request_headers": "{...}",  # request headers action
"remote_address": "{...}",  # remote address action
"generic_key": "{...}",  # generic key action
"header_value_match": "{...}",  # header value match action
"dynamic_metadata": "{...}",  # dynamic metadata action
"metadata": "{...}",  # metadata action
"extension": "{...}"  # extension action
}

limit:

{
"dynamic_metadata": "{...}"  # take the limit override from dynamic metadata
}

extensions.filters.network.ratelimit.v3.RateLimit

{

"stat_prefix": "...",

"domain": "...",

"descriptors": [],

"timeout": "{...}",

"failure_mode_deny": "...",

"rate_limit_service": "{...}"

}

3 Hands-on practice

3.1.1 http

3.1.1.1 single cluster

3.1.1.1.1 rate limiting for services inside the cluster

3.1.1.1.1.1 local rate limiting

cat <<EOF > envoyfilter-local-rate-limit.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-local-ratelimit-svc
spec:
  workloadSelector:
    labels:
      app: productpage
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.local_ratelimit
          typed_config:
            "@type": type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
              token_bucket:
                max_tokens: 10
                tokens_per_fill: 10
                fill_interval: 60s
              filter_enabled:
                runtime_key: local_rate_limit_enabled
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              filter_enforced:
                runtime_key: local_rate_limit_enforced
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              response_headers_to_add:
                - append: false
                  header:
                    key: x-local-rate-limit
                    value: 'true'
EOF

kubectl apply -f envoyfilter-local-rate-limit.yaml -n istio

Note: local rate limiting is configured through an EnvoyFilter. It does not call any external service; Envoy supports it natively with a token bucket algorithm. The HTTP filter name must be envoy.filters.http.local_ratelimit, and the @type and type_url values are fixed. stat_prefix can be any string; it is the prefix of the generated stats. token_bucket configures the token bucket: max_tokens is the maximum number of tokens, tokens_per_fill is the number of tokens added on each fill, and fill_interval is the interval between fills. filter_enabled is the percentage of requests for which the filter checks the limit without necessarily rejecting anything, and filter_enforced is the percentage of those requests for which the limit is actually enforced. response_headers_to_add changes the response headers: append: false overwrites an existing header and append: true adds another value. runtime_key names an Envoy runtime setting that, when present, overrides default_value, so the percentage can be changed without editing the filter configuration.
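The difference between filter_enabled and filter_enforced is easier to see in code. The following Python sketch is a simplified model under stated assumptions, not Envoy's implementation: the function name decide and the two roll parameters (integers in the range 0 to 99 that a proxy would draw at random) are hypothetical.

```python
def decide(has_token: bool, enabled_pct: int, enforced_pct: int,
           enabled_roll: int, enforced_roll: int) -> str:
    """Model the outcome for one request.

    enabled_pct / enforced_pct correspond to the numerators of
    filter_enabled / filter_enforced (denominator HUNDRED).
    The rolls are passed in explicitly so the example is deterministic.
    """
    if enabled_roll >= enabled_pct:
        return "pass: filter not enabled for this request"
    if has_token:
        return "pass: token consumed"
    if enforced_roll >= enforced_pct:
        return "pass: over the limit, counted but not enforced"
    return "429: over the limit and enforced"


# With both percentages at 100, every request over the limit is rejected.
print(decide(has_token=False, enabled_pct=100, enforced_pct=100,
             enabled_roll=42, enforced_roll=42))
# With filter_enforced at 0, the limit is only observed, never enforced.
print(decide(has_token=False, enabled_pct=100, enforced_pct=0,
             enabled_roll=42, enforced_roll=42))
```

Setting filter_enabled to 100 and filter_enforced to 0 is therefore a way to observe how often a limit would trigger before switching on enforcement.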

Run a stress test:

[root@node01 45]# go-stress-testing -c 10 -n 10000 -u http://192.168.229.134:30945/productpage

Start
Concurrent number: 10   Number of requests: 10000
Request parameters: request: form:http url:http://192.168.229.134:30945/productpage method:GET headers:map[] data: verify:statusCode timeout:30s debug:false

Elapsed │ Concurrency │ Successes │ Failures │    QPS │ Max latency │ Min latency │ Avg latency │ Bytes downloaded │ Bytes/sec │ Status codes
────────┼─────────────┼───────────┼──────────┼────────┼─────────────┼─────────────┼─────────────┼──────────────────┼───────────┼───────────────
     1s │           7 │         2 │      761 │   2.94 │      124.68 │        1.98 │     3406.97 │           21,476 │    21,470 │ 200:2;429:761
     2s │          10 │         5 │     1636 │   2.55 │     1788.46 │        1.98 │     3928.11 │           52,771 │    26,383 │ 200:5;429:1636
     3s │          10 │         5 │     2962 │   1.70 │     1788.46 │        1.04 │     5871.68 │           76,639 │    25,545 │ 200:5;429:2962
     4s │          10 │         5 │     4459 │   1.28 │     1788.46 │        1.04 │     7810.78 │          103,585 │    25,896 │ 200:5;429:4459

Requests beyond the limit are answered with 429 Too Many Requests, the status code a server uses to tell clients that they are sending requests too fast.

Clean up:

kubectl delete envoyfilter filter-local-ratelimit-svc -n istio

3.1.1.1.1.2 global rate limiting

Deploy ratelimit

1. Create the ConfigMap

cat << EOF > ratelimit-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    domain: productpage-ratelimit
    descriptors:
      - key: PATH
        value: "/productpage"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      - key: PATH
        rate_limit:
          unit: minute
          requests_per_unit: 100
EOF

kubectl apply -f ratelimit-config.yaml -n istio

Note: this ConfigMap is the configuration file of the rate limit service, written in the format used with the Envoy v3 rate limit API. domain is the domain name that the EnvoyFilter refers to. Each entry under descriptors has the key PATH, which stands for the request path; an entry with a value matches only that path, and an entry without a value matches every other path. rate_limit sets the quota. Here /productpage is limited to 1 request per minute and all other URLs to 100 requests per minute.
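The lookup rule can be sketched in a few lines of Python. This is only a model of how the descriptors in this ConfigMap are selected (an exact key and value match wins, a key-only entry is the fallback); the table LIMITS and the function requests_per_minute are hypothetical names, not part of the ratelimit service.

```python
from typing import Optional

# Mirrors the two descriptors in config.yaml:
# (key, value or None) -> requests allowed per minute.
LIMITS = {
    ("PATH", "/productpage"): 1,
    ("PATH", None): 100,
}


def requests_per_minute(key: str, value: str) -> Optional[int]:
    """Return the quota for a descriptor entry, or None if nothing matches."""
    exact = LIMITS.get((key, value))
    if exact is not None:
        return exact                  # most specific match wins
    return LIMITS.get((key, None))    # fall back to the key-only entry


print(requests_per_minute("PATH", "/productpage"))  # 1
print(requests_per_minute("PATH", "/reviews"))      # 100
print(requests_per_minute("HOST", "example"))       # None: no limit configured
```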

2. Create the rate limit service deployment

cat << EOF > ratelimit-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    app: redis
spec:
  ports:
  - name: redis
    port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - image: redis:alpine
        imagePullPolicy: Always
        name: redis
        ports:
        - name: redis
          containerPort: 6379
      restartPolicy: Always
      serviceAccountName: ""
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  labels:
    app: ratelimit
spec:
  ports:
  - name: http-port
    port: 8080
    targetPort: 8080
    protocol: TCP
  - name: grpc-port
    port: 8081
    targetPort: 8081
    protocol: TCP
  - name: http-debug
    port: 6070
    targetPort: 6070
    protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
    spec:
      containers:
      - image: envoyproxy/ratelimit:6f5de117 # 2021/01/08
        imagePullPolicy: Always
        name: ratelimit
        command: ["/bin/ratelimit"]
        env:
        - name: LOG_LEVEL
          value: debug
        - name: REDIS_SOCKET_TYPE
          value: tcp
        - name: REDIS_URL
          value: redis:6379
        - name: USE_STATSD
          value: "false"
        - name: RUNTIME_ROOT
          value: /data
        - name: RUNTIME_SUBDIRECTORY
          value: ratelimit
        ports:
        - containerPort: 8080
        - containerPort: 8081
        - containerPort: 6070
        volumeMounts:
        - name: config-volume
          mountPath: /data/ratelimit/config/config.yaml
          subPath: config.yaml
      volumes:
      - name: config-volume
        configMap:
          name: ratelimit-config
EOF

kubectl apply -f ratelimit-deploy.yaml -n istio

This creates Redis and the official ratelimit service.

3. Create the EnvoyFilter

cat << EOF > envoyfilter-filter.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit
  namespace: istio-system
spec:
  workloadSelector:
    # select by label in the same namespace
    labels:
      istio: ingressgateway
  configPatches:
    # The Envoy config you want to modify
    - applyTo: HTTP_FILTER
      match:
        context: GATEWAY
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
              subFilter:
                name: "envoy.filters.http.router"
      patch:
        operation: INSERT_BEFORE
        # Adds the Envoy Rate Limit Filter in HTTP filter chain.
        value:
          name: envoy.filters.http.ratelimit
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.ratelimit.v3.RateLimit
            # domain can be anything! Match it to the ratelimter service config
            domain: productpage-ratelimit
            failure_mode_deny: true
            rate_limit_service:
              grpc_service:
                envoy_grpc:
                  cluster_name: rate_limit_cluster
                timeout: 10s
              transport_api_version: V3
    - applyTo: CLUSTER
      match:
        cluster:
          service: ratelimit.istio.svc.cluster.local
      patch:
        operation: ADD
        # Adds the rate limit service cluster for rate limit service defined in step 1.
        value:
          name: rate_limit_cluster
          type: STRICT_DNS
          connect_timeout: 10s
          lb_policy: ROUND_ROBIN
          http2_protocol_options: {}
          load_assignment:
            cluster_name: rate_limit_cluster
            endpoints:
            - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: ratelimit.istio.svc.cluster.local
                      port_value: 8081
EOF

kubectl apply -f envoyfilter-filter.yaml -n istio-system

This EnvoyFilter applies to the gateway and configures an HTTP filter, envoy.filters.http.ratelimit, plus a cluster. The cluster_name in the HTTP filter refers to the cluster added by the second patch, whose address is our ratelimit service. domain must be the same as the domain in the ConfigMap above. failure_mode_deny: true means that requests are denied when the call to the ratelimit service fails; requests over the limit are rejected regardless of this setting. rate_limit_service configures the address (cluster) of the ratelimit service. It is a gRPC service, and either the envoy_grpc or the google_grpc client can be used.
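The effect of failure_mode_deny can be modelled as follows. This Python sketch is a simplification under stated assumptions: http_status, check_over_limit and RateLimitServiceUnavailable are hypothetical names, 200 stands for "the request is forwarded upstream", and the 500 returned on failure reflects my understanding of the Envoy documentation rather than anything shown in this article.

```python
from typing import Callable


class RateLimitServiceUnavailable(Exception):
    """Raised by the hypothetical check when the service cannot be reached."""


def http_status(check_over_limit: Callable[[], bool],
                failure_mode_deny: bool) -> int:
    """Return the status a client would see for one request."""
    try:
        over_limit = check_over_limit()
    except RateLimitServiceUnavailable:
        # No decision could be obtained: deny or let the request through.
        return 500 if failure_mode_deny else 200
    # A decision was obtained: failure_mode_deny plays no role here.
    return 429 if over_limit else 200


def unreachable() -> bool:
    raise RateLimitServiceUnavailable()


print(http_status(unreachable, failure_mode_deny=True))    # 500
print(http_status(unreachable, failure_mode_deny=False))   # 200
print(http_status(lambda: True, failure_mode_deny=False))  # 429
```

Choosing true trades availability for protection: if the ratelimit service or its Redis backend goes down, all traffic through the gateway is rejected.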

4. Create the action EnvoyFilter

cat << EOF > envoyfilter-action.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit-svc
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      istio: ingressgateway
  configPatches:
    - applyTo: VIRTUAL_HOST
      match:
        context: GATEWAY
        routeConfiguration:
          vhost:
            name: "*:80"
            route:
              action: ANY
      patch:
        operation: MERGE
        # Applies the rate limit rules.
        value:
          rate_limits:
            - actions: # any actions in here
              - request_headers:
                  header_name: ":path"
                  descriptor_key: "PATH"
EOF

kubectl apply -f envoyfilter-action.yaml -n istio-system

This EnvoyFilter applies to the ingress gateway and configures a rate_limits action for the port 80 virtual host. descriptor_key selects the key configured in the ConfigMap, and the value of the :path header becomes the descriptor value.
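As a rough illustration of what the request_headers action does, the following Python sketch builds the descriptor that would be sent to the ratelimit service. It is a model only; build_descriptor and the dictionary layout are hypothetical, and it assumes that a missing header means no descriptor is produced for that rate limit.

```python
from typing import Dict, List, Optional, Tuple


def build_descriptor(headers: Dict[str, str],
                     actions: List[Dict[str, str]]
                     ) -> Optional[List[Tuple[str, str]]]:
    """Turn request headers into (descriptor_key, value) entries."""
    entries = []
    for action in actions:
        value = headers.get(action["header_name"])
        if value is None:
            return None  # header absent: nothing is sent for this rate limit
        entries.append((action["descriptor_key"], value))
    return entries


actions = [{"header_name": ":path", "descriptor_key": "PATH"}]
print(build_descriptor({":path": "/productpage"}, actions))
# [('PATH', '/productpage')]
```

The resulting entry ("PATH", "/productpage") is what the ratelimit service compares against the descriptors in its config.yaml.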

Stress test:

[root@node01 ~]# go-stress-testing -c 10 -n 10000 -u http://192.168.229.134:30945/productpage

Start
Concurrent number: 10   Number of requests: 10000
Request parameters: request: form:http url:http://192.168.229.134:30945/productpage method:GET headers:map[] data: verify:statusCode timeout:30s debug:false

Elapsed │ Concurrency │ Successes │ Failures │    QPS │ Max latency │ Min latency │ Avg latency │ Bytes downloaded │ Bytes/sec │ Status codes
────────┼─────────────┼───────────┼──────────┼────────┼─────────────┼─────────────┼─────────────┼──────────────────┼───────────┼───────────────
     1s │          10 │         1 │     1051 │   1.01 │       55.51 │        3.70 │     9914.38 │            4,183 │     4,176 │ 200:1;429:1051
     2s │          10 │         1 │     1629 │   0.50 │       55.51 │        3.70 │    19807.86 │            4,183 │     2,090 │ 200:1;429:1629
     3s │          10 │         1 │     2154 │   0.34 │       55.51 │        3.70 │    29829.63 │            4,183 │     1,393 │ 200:1;429:2154
     4s │          10 │         1 │     2662 │   0.25 │       55.51 │        3.70 │    39823.69 │            4,183 │     1,045 │ 200:1;429:2662
     5s │          10 │         1 │     3166 │   0.20 │       58.63 │        3.70 │    49865.16 │            4,183 │       836 │ 200:1;429:3166

Clean up:

kubectl delete cm ratelimit-config -n istio
kubectl delete -f ratelimit-deploy.yaml -n istio
kubectl delete envoyfilter filter-ratelimit -n istio-system
kubectl delete envoyfilter filter-ratelimit-svc -n istio-system

3.1.1.1.2 rate limiting for services outside the cluster

3.1.1.1.2.1 local rate limiting

cat << EOF > se-baidu.yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: baidu
spec:
  hosts:
  - www.baidu.com
  ports:
  - number: 80
    name: http-port
    protocol: HTTP
  resolution: DNS
EOF

kubectl apply -f se-baidu.yaml -n istio

Note: this creates a ServiceEntry for accessing Baidu.

cat <<EOF > envoyfilter-local-rate-limit-http-outside.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-local-ratelimit-svc
spec:
  workloadSelector:
    labels:
      app: ratings
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_OUTBOUND
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
              subFilter:
                name: "envoy.filters.http.router"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.local_ratelimit
          typed_config:
            "@type": type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
              token_bucket:
                max_tokens: 1
                tokens_per_fill: 1
                fill_interval: 60s
              filter_enabled:
                runtime_key: local_rate_limit_enabled
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              filter_enforced:
                runtime_key: local_rate_limit_enforced
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              response_headers_to_add:
                - append: false
                  header:
                    key: x-local-rate-limit
                    value: 'true'
EOF

kubectl apply -f envoyfilter-local-rate-limit-http-outside.yaml -n istio

Note: SIDECAR_OUTBOUND means the filter applies to outbound requests leaving the sidecar. As before, local rate limiting is configured through an EnvoyFilter, calls no external service, and relies on Envoy's built-in token bucket. The HTTP filter name must be envoy.filters.http.local_ratelimit, and the @type and type_url values are fixed. stat_prefix can be any string; it is the prefix of the generated stats. In token_bucket, max_tokens is the maximum number of tokens, tokens_per_fill is the number of tokens added on each fill, and fill_interval is the interval between fills. filter_enabled is the percentage of requests for which the filter checks the limit, and filter_enforced is the percentage for which the limit is actually enforced. response_headers_to_add changes the response headers: append: false overwrites an existing header and append: true adds another value. runtime_key names an Envoy runtime setting that, when present, overrides default_value.

kubectl exec -it -n istio ratings-v2-mysql-vm-66dc56449d-lk6gv /bin/bash

local_rate_limitednode@ratings-v2-mysql-vm-66dc56449d-lk6gv:/opt/microservices$ curl www.baidu.com -I
HTTP/1.1 429 Too Many Requests
x-local-rate-limit: true
content-length: 18
content-type: text/plain
date: Fri, 17 Sep 2021 23:20:13 GMT
server: envoy

Enter the ratings container and send a request to Baidu. The 429 response shows that the rate limit is in effect.

Clean up:

kubectl delete se baidu -n istio
kubectl delete envoyfilter filter-local-ratelimit-svc -n istio

3.1.1.1.2.2 global rate limiting

Deploy ratelimit

1. Create the ConfigMap

cat << EOF > ratelimit-config-outside-http.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    domain: productpage-ratelimit
    descriptors:
      - key: PATH
        value: "/"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      - key: PATH
        value: "/aa"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      - key: PATH
        rate_limit:
          unit: minute
          requests_per_unit: 100
EOF

kubectl apply -f ratelimit-config-outside-http.yaml -n istio

Note: this ConfigMap is the configuration file of the rate limit service, written in the format used with the Envoy v3 rate limit API. domain is the domain name that the EnvoyFilter refers to. The key PATH stands for the request path, and several values can be listed for it. rate_limit sets the quota. Here / is limited to 1 request per minute, /aa to 1 request per minute, and all other URLs to 100 requests per minute.

2. Create the rate limit service deployment

The manifest is identical to ratelimit-deploy.yaml from section 3.1.1.1.1.2 (Redis plus the official ratelimit service), so the same file is applied again:

kubectl apply -f ratelimit-deploy.yaml -n istio

cat << EOF > se-baidu.yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: baidu
spec:
  hosts:
  - www.baidu.com
  ports:
  - number: 80
    name: http-port
    protocol: HTTP
  resolution: DNS
EOF

kubectl apply -f se-baidu.yaml -n istio

This creates a ServiceEntry for accessing Baidu.

3. Create the EnvoyFilter

cat << EOF > envoyfilter-filter-outside-http.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit
  namespace: istio
spec:
  workloadSelector:
    # select by label in the same namespace
    labels:
      app: ratings
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_OUTBOUND
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
              subFilter:
                name: "envoy.filters.http.router"
      patch:
        operation: INSERT_BEFORE
        # Adds the Envoy Rate Limit Filter in HTTP filter chain.
        value:
          name: envoy.filters.http.ratelimit
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.ratelimit.v3.RateLimit
            # domain can be anything! Match it to the ratelimter service config
            domain: productpage-ratelimit
            failure_mode_deny: true
            rate_limit_service:
              grpc_service:
                envoy_grpc:
                  cluster_name: rate_limit_cluster
                timeout: 10s
              transport_api_version: V3
    - applyTo: CLUSTER
      match:
        cluster:
          service: ratelimit.istio.svc.cluster.local
      patch:
        operation: ADD
        # Adds the rate limit service cluster for rate limit service defined in step 1.
        value:
          name: rate_limit_cluster
          type: STRICT_DNS
          connect_timeout: 10s
          lb_policy: ROUND_ROBIN
          http2_protocol_options: {}
          load_assignment:
            cluster_name: rate_limit_cluster
            endpoints:
            - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: ratelimit.istio.svc.cluster.local
                      port_value: 8081
EOF

kubectl apply -f envoyfilter-filter-outside-http.yaml -n istio

This EnvoyFilter applies to ratings. SIDECAR_OUTBOUND means it affects traffic leaving the sidecar for external destinations. It configures an HTTP filter, envoy.filters.http.ratelimit, plus a cluster. The cluster_name in the HTTP filter refers to the cluster added by the second patch, whose address is our ratelimit service. domain must be the same as the domain in the ConfigMap above. failure_mode_deny: true means that requests are denied when the call to the ratelimit service fails; requests over the limit are rejected regardless of this setting. rate_limit_service configures the address (cluster) of the ratelimit service. It is a gRPC service, and either the envoy_grpc or the google_grpc client can be used.

4. Create the action EnvoyFilter

cat << EOF > envoyfilter-action-outside-http.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit-svc
  namespace: istio
spec:
  workloadSelector:
    labels:
      app: ratings
  configPatches:
    - applyTo: VIRTUAL_HOST
      match:
        context: SIDECAR_OUTBOUND
        routeConfiguration:
          vhost:
            name: "www.baidu.com:80"
            route:
              action: ANY
      patch:
        operation: MERGE
        # Applies the rate limit rules.
        value:
          rate_limits:
            - actions: # any actions in here
              - request_headers:
                  header_name: ":path"
                  descriptor_key: "PATH"
EOF

kubectl apply -f envoyfilter-action-outside-http.yaml -n istio

The vhost name is the Baidu address we configured (www.baidu.com:80). This EnvoyFilter applies to ratings and configures a rate_limits action for that port 80 virtual host. descriptor_key selects the key configured in the ConfigMap.

kubectl exec -it -n istio ratings-v2-mysql-vm-66dc56449d-lk6gv /bin/bash

node@ratings-v2-mysql-vm-66dc56449d-lk6gv:/opt/microservices$ curl www.baidu.com/ -I
HTTP/1.1 429 Too Many Requests
x-envoy-ratelimited: true
date: Fri, 17 Sep 2021 23:51:33 GMT
server: envoy
transfer-encoding: chunked

Enter the ratings container and send a request to Baidu. The 429 response shows that the rate limit is in effect.

Clean up:

kubectl delete cm ratelimit-config -n istio
kubectl delete -f ratelimit-deploy.yaml -n istio
kubectl delete envoyfilter filter-ratelimit -n istio
kubectl delete envoyfilter filter-ratelimit-svc -n istio

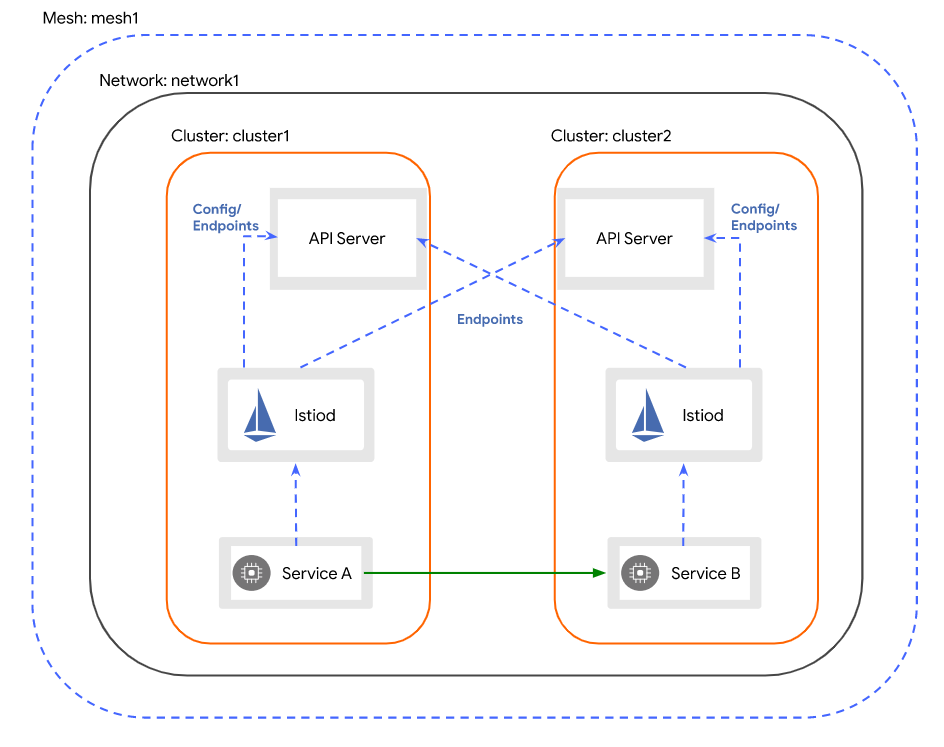

3.1.1.2 multi cluster

3.1.1.2.1 cluster preparation

The multi-cluster installation itself is not explained here. If you are not familiar with it, see my previous articles.

Cluster 1: 128, 129, 130
Cluster 2: 131, 132, 133

Connect the two networks.

On 128, 129, 130:

route add -net 172.21.1.0 netmask 255.255.255.0 gw 192.168.229.131
route add -net 172.21.2.0 netmask 255.255.255.0 gw 192.168.229.133
route add -net 172.21.0.0 netmask 255.255.255.0 gw 192.168.229.132
route add -net 10.69.0.0 netmask 255.255.0.0 gw 192.168.229.131

On 131, 132, 133:

route add -net 172.20.0.0 netmask 255.255.255.0 gw 192.168.229.128
route add -net 172.20.1.0 netmask 255.255.255.0 gw 192.168.229.129
route add -net 172.20.2.0 netmask 255.255.255.0 gw 192.168.229.130
route add -net 10.68.0.0 netmask 255.255.0.0 gw 192.168.229.128

cluster1:

Generate the Istio install operator file:

cat <<EOF > cluster1.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: demo
  values:
    global:
      meshID: mesh1
      multiCluster:
        clusterName: cluster1
      network: network1
  meshConfig:
    accessLogFile: /dev/stdout
    enableTracing: true
  components:
    egressGateways:
    - name: istio-egressgateway
      enabled: true
EOF

Generate the Istio install operator file for cluster2:

cat <<EOF > cluster2.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: demo
  values:
    global:
      meshID: mesh1
      multiCluster:
        clusterName: cluster2
      network: network1
  meshConfig:
    accessLogFile: /dev/stdout
    enableTracing: true
  components:
    egressGateways:
    - name: istio-egressgateway
      enabled: true
EOF

Transfer the deployment file to cluster2:

scp cluster2.yaml root@192.168.229.131:/root

cluster1:

Generate the secret for accessing the API server:

istioctl x create-remote-secret --name=cluster1 --server=https://192.168.229.128:6443 > remote-secret-cluster1.yaml

Transfer the secret to cluster2:

scp remote-secret-cluster1.yaml root@192.168.229.131:/root

cluster2:

Generate the secret for accessing the API server:

istioctl x create-remote-secret --name=cluster2 --server=https://192.168.229.131:6443 > remote-secret-cluster2.yaml

Transfer the secret to cluster1:

scp remote-secret-cluster2.yaml root@192.168.229.128:/root

cluster1:

Apply the secret:

kubectl apply -f remote-secret-cluster2.yaml

Deploy the cluster:

istioctl install -f cluster1.yaml

cluster2:

Apply the secret:

kubectl apply -f remote-secret-cluster1.yaml

Deploy the cluster:

istioctl install -f cluster2.yaml

cluster1:

Restart the pods:

kubectl rollout restart deploy -n istio
kubectl rollout restart deploy -n istio-system

cluster2:

Restart the pods:

kubectl rollout restart deploy -n istio
kubectl rollout restart deploy -n istio-system

Clean up:

cluster1:

kubectl delete secret istio-remote-secret-cluster2 -n istio-system
istioctl x uninstall -f cluster1.yaml
reboot

cluster2:

kubectl delete secret istio-remote-secret-cluster1 -n istio-system
istioctl x uninstall -f cluster2.yaml
reboot

3.1.1.2.2 local rate limiting in the cluster

For multi cluster, only local rate limiting inside the cluster is demonstrated; the other cases work the same way.

cat <<EOF > envoyfilter-local-rate-limit-multi-http.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-local-ratelimit-svc
spec:
  workloadSelector:
    labels:
      app: productpage
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.local_ratelimit
          typed_config:
            "@type": type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
              token_bucket:
                max_tokens: 10
                tokens_per_fill: 10
                fill_interval: 60s
              filter_enabled:
                runtime_key: local_rate_limit_enabled
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              filter_enforced:
                runtime_key: local_rate_limit_enforced
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              response_headers_to_add:
                - append: false
                  header:
                    key: x-local-rate-limit
                    value: 'true'
EOF

kubectl apply -f envoyfilter-local-rate-limit-multi-http.yaml -n istio

Note: the configuration is the same as in the single-cluster case. Local rate limiting is configured through an EnvoyFilter, calls no external service, and relies on Envoy's built-in token bucket. The HTTP filter name must be envoy.filters.http.local_ratelimit, and the @type and type_url values are fixed. stat_prefix is the prefix of the generated stats. In token_bucket, max_tokens is the maximum number of tokens, tokens_per_fill is the number of tokens added on each fill, and fill_interval is the interval between fills. filter_enabled is the percentage of requests for which the filter checks the limit, and filter_enforced is the percentage for which the limit is actually enforced. response_headers_to_add changes the response headers: append: false overwrites an existing header and append: true adds another value. runtime_key names an Envoy runtime setting that, when present, overrides default_value.

Run the stress test:

[root@node01 45]# go-stress-testing -c 10 -n 10000 -u http://192.168.229.128:30363/productpage

Start
Concurrent number: 10   Number of requests: 10000
Request parameters: request: form:http url:http://192.168.229.128:30363/productpage method:GET headers:map[] data: verify:statusCode timeout:30s debug:false

Elapsed │ Concurrency │ Successes │ Failures │    QPS │ Max latency │ Min latency │ Avg latency │ Bytes downloaded │ Bytes/sec │ Status codes
────────┼─────────────┼───────────┼──────────┼────────┼─────────────┼─────────────┼─────────────┼──────────────────┼───────────┼───────────────
     1s │           0 │         0 │        0 │   0.00 │        0.00 │        0.00 │        0.00 │                  │           │
     2s │           7 │        16 │        6 │  15.25 │     1453.38 │        4.56 │      655.73 │           71,950 │    35,974 │ 200:16;429:6
     3s │           7 │        17 │        6 │  14.30 │     1453.38 │        4.56 │      699.44 │           76,133 │    25,376 │ 200:17;429:6
     4s │          10 │        34 │       24 │  14.30 │     3207.96 │        2.71 │      699.46 │          154,262 │    38,559 │ 200:34;429:24
     5s │          10 │        78 │       68 │  16.03 │     3207.96 │        2.71 │      623.67 │          370,054 │    74,009 │ 200:78;429:68
     6s │          10 │       160 │      150 │  26.98 │     3207.96 │        2.71 │      370.66 │          770,420 │   128,402 │ 200:160;429:150
     7s │          10 │       238 │      228 │  34.53 │     3207.96 │        2.71 │      289.60 │        1,148,994 │   164,131 │ 200:238;429:228

The result shows that the multi-cluster configuration is identical to the single-cluster one and that it takes effect.

3.2.2 tcp

3.2.2.1 single cluster

3.2.2.1.1 rate limiting for services inside the cluster

3.2.2.1.1.1 local rate limiting

Deploy MySQL

cat << EOF > mysql.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-credentials
type: Opaque
data:
  rootpasswd: cGFzc3dvcmQ=
---
apiVersion: v1
kind: Service
metadata:
  name: mysqldb
  labels:
    app: mysqldb
    service: mysqldb
spec:
  ports:
  - port: 3306
    name: tcp
  selector:
    app: mysqldb
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysqldb-v1
  labels:
    app: mysqldb
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysqldb
      version: v1
  template:
    metadata:
      labels:
        app: mysqldb
        version: v1
    spec:
      containers:
      - name: mysqldb
        image: docker.io/istio/examples-bookinfo-mysqldb:1.16.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3306
        env:
          - name: MYSQL_ROOT_PASSWORD
            valueFrom:
              secretKeyRef:
                name: mysql-credentials
                key: rootpasswd
        args: ["--default-authentication-plugin","mysql_native_password"]
        volumeMounts:
        - name: var-lib-mysql
          mountPath: /var/lib/mysql
      volumes:
      - name: var-lib-mysql
        emptyDir: {}
EOF

kubectl apply -f mysql.yaml -n istio

Note: mysql service is deployed, which will be requested by ratings when obtaining data

Deploy mysql ratings

cat << EOF > ratings-mysql.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratings-v2-mysql
  labels:
    app: ratings
    version: v2-mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratings
      version: v2-mysql
  template:
    metadata:
      labels:
        app: ratings
        version: v2-mysql
    spec:
      containers:
      - name: ratings
        image: docker.io/istio/examples-bookinfo-ratings-v2:1.16.2
        imagePullPolicy: IfNotPresent
        env:
          # ratings-v2 will use mongodb as the default db backend.
          # if you would like to use mysqldb then you can use this file
          # which sets DB_TYPE = 'mysql' and the rest of the parameters shown
          # here and also create the # mysqldb service using bookinfo-mysql.yaml
          # NOTE: This file is mutually exclusive to bookinfo-ratings-v2.yaml
          - name: DB_TYPE
            value: "mysql"
          - name: MYSQL_DB_HOST
            value: mysqldb
          - name: MYSQL_DB_PORT
            value: "3306"
          - name: MYSQL_DB_USER
            value: root
          - name: MYSQL_DB_PASSWORD
            value: password
        ports:
        - containerPort: 9080
        securityContext:
          runAsUser: 1000
EOF

kubectl apply -f ratings-mysql.yaml -n istio

This deploys the mysql version of ratings. The database connection is configured through the env variables.

cat <<EOF > envoyfilter-local-rate-limit-mysql-inside.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-local-ratelimit-svc
spec:
  workloadSelector:
    labels:
      app: mysqldb
      version: v1
  configPatches:
    - applyTo: NETWORK_FILTER
      match:
        listener:
          portNumber: 3306
          filterChain:
            filter:
              name: "envoy.filters.network.tcp_proxy"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.network.local_ratelimit
          typed_config:
            "@type": type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.network.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: tcp_local_rate_limiter
              token_bucket:
                max_tokens: 1
                tokens_per_fill: 1
                fill_interval: 60s
              runtime_enabled:
                runtime_key: tcp_rate_limit_enabled
                default_value: true
EOF

kubectl apply -f envoyfilter-local-rate-limit-mysql-inside.yaml -n istio

Note that applyTo here is NETWORK_FILTER, because mysql is a TCP service, not an http service. The name of the filter is envoy.filters.network.local_ratelimit, and the type_url is also fixed, so take care to write both exactly. token_bucket configures the number of tokens available for rate limiting and how fast they are refilled. The new filter has to sit in front of envoy.filters.network.tcp_proxy, so the operation is INSERT_BEFORE.
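To get a feel for what max_tokens: 1, tokens_per_fill: 1 and fill_interval: 60s mean for new connections, here is a small simulation of a token bucket. It is only a sketch of the general technique, not Envoy's implementation: the function simulate_bucket is hypothetical, time is counted in whole seconds from the first fill, and each connection attempt costs one token.

```shell
#!/usr/bin/env bash
# simulate_bucket MAX_TOKENS TOKENS_PER_FILL FILL_INTERVAL_SECONDS t1 t2 ...
# Hypothetical helper: replays connection attempts at the given times (seconds,
# ascending) against a token bucket and prints allow/deny for each attempt.
simulate_bucket() {
  local max=$1 per_fill=$2 interval=$3
  shift 3
  local tokens=$max last_fill=0 t fills
  for t in "$@"; do
    # Add tokens for every full interval that has passed since the last fill.
    fills=$(( (t - last_fill) / interval ))
    if (( fills > 0 )); then
      tokens=$(( tokens + fills * per_fill ))
      if (( tokens > max )); then
        tokens=$max
      fi
      last_fill=$(( last_fill + fills * interval ))
    fi
    # Each new connection consumes one token; without a token it is rejected.
    if (( tokens > 0 )); then
      tokens=$(( tokens - 1 ))
      echo "t=${t}s allow"
    else
      echo "t=${t}s deny"
    fi
  done
}

# Same numbers as the EnvoyFilter above: 1 token, refilled once per 60s.
simulate_bucket 1 1 60 0 5 30 61 62
```

With these values only the attempts at 0s and 61s are allowed; everything else inside the same minute is denied.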

Clean up:

kubectl delete -f mysql.yaml -n istio
kubectl delete -f ratings-mysql.yaml -n istio
kubectl delete envoyfilter filter-local-ratelimit-svc -n istio

3.2.2.1.1.2 global current limiting

Deploy mysql

wechat/envoyfilter/ratelimit/tcp/inner/global/mysql.yaml

cat << EOF > mysql.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-credentials
type: Opaque
data:
  rootpasswd: cGFzc3dvcmQ=
---
apiVersion: v1
kind: Service
metadata:
  name: mysqldb
  labels:
    app: mysqldb
    service: mysqldb
spec:
  ports:
  - port: 3306
    name: tcp
  selector:
    app: mysqldb
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysqldb-v1
  labels:
    app: mysqldb
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysqldb
      version: v1
  template:
    metadata:
      labels:
        app: mysqldb
        version: v1
    spec:
      containers:
      - name: mysqldb
        image: docker.io/istio/examples-bookinfo-mysqldb:1.16.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3306
        env:
          - name: MYSQL_ROOT_PASSWORD
            valueFrom:
              secretKeyRef:
                name: mysql-credentials
                key: rootpasswd
        args: ["--default-authentication-plugin","mysql_native_password"]
        volumeMounts:
        - name: var-lib-mysql
          mountPath: /var/lib/mysql
      volumes:
      - name: var-lib-mysql
        emptyDir: {}
EOF

kubectl apply -f mysql.yaml -n istio

Deploy mysql ratings

cat << EOF > ratings-mysql.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratings-v2-mysql
  labels:
    app: ratings
    version: v2-mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratings
      version: v2-mysql
  template:
    metadata:
      labels:
        app: ratings
        version: v2-mysql
    spec:
      containers:
      - name: ratings
        image: docker.io/istio/examples-bookinfo-ratings-v2:1.16.2
        imagePullPolicy: IfNotPresent
        env:
          # ratings-v2 will use mongodb as the default db backend.
          # if you would like to use mysqldb then you can use this file
          # which sets DB_TYPE = 'mysql' and the rest of the parameters shown
          # here and also create the # mysqldb service using bookinfo-mysql.yaml
          # NOTE: This file is mutually exclusive to bookinfo-ratings-v2.yaml
          - name: DB_TYPE
            value: "mysql"
          - name: MYSQL_DB_HOST
            value: mysqldb
          - name: MYSQL_DB_PORT
            value: "3306"
          - name: MYSQL_DB_USER
            value: root
          - name: MYSQL_DB_PASSWORD
            value: password
        ports:
        - containerPort: 9080
        securityContext:
          runAsUser: 1000
EOF

kubectl apply -f ratings-mysql.yaml -n istio

Deploy ratelimit

1. Create the ConfigMap

cat << EOF > ratelimit-config-mysql-inside.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    domain: mysql-ratelimit
    descriptors:
      - key: test
        value: "test"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      - key: test
        rate_limit:
          unit: minute
          requests_per_unit: 10
EOF

kubectl apply -f ratelimit-config-mysql-inside.yaml -n istio

Note: this ConfigMap is the configuration file used by the rate limit service. domain is the domain name, which is referenced in the EnvoyFilter. Each entry under descriptors is matched by its key and, optionally, its value, and rate_limit configures the quota for that entry. Here, the descriptor test=test is limited to 1 request per minute, and any other value of the key test is limited to 10 requests per minute.
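The matching rule in this ConfigMap can be written out as a tiny lookup. This is only an illustration of how the two entries relate to each other (exact key and value first, then the key-only entry as the fallback for that key); limit_for is a hypothetical helper with the values hard-coded from the ConfigMap, not code from the rate limit service.

```shell
#!/usr/bin/env bash
# limit_for KEY VALUE
# Hypothetical helper mirroring the descriptors in ratelimit-config:
# prints the requests-per-minute quota, or "none" when no entry matches.
limit_for() {
  local key=$1 value=$2
  if [ "$key" != "test" ]; then
    echo "none"          # no descriptor with this key in the domain
    return 0
  fi
  case "$value" in
    test) echo 1 ;;      # key: test, value: "test" -> 1 per minute
    *)    echo 10 ;;     # key: test without a value -> 10 per minute
  esac
}

limit_for test test     # prints: 1
limit_for test other    # prints: 10
limit_for path /foo     # prints: none
```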

2. Create speed limit service deployment

cat << EOF > ratelimit-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    app: redis
spec:
  ports:
  - name: redis
    port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - image: redis:alpine
        imagePullPolicy: Always
        name: redis
        ports:
        - name: redis
          containerPort: 6379
      restartPolicy: Always
      serviceAccountName: ""
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  labels:
    app: ratelimit
spec:
  ports:
  - name: http-port
    port: 8080
    targetPort: 8080
    protocol: TCP
  - name: grpc-port
    port: 8081
    targetPort: 8081
    protocol: TCP
  - name: http-debug
    port: 6070
    targetPort: 6070
    protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
    spec:
      containers:
      - image: envoyproxy/ratelimit:6f5de117 # 2021/01/08
        imagePullPolicy: Always
        name: ratelimit
        command: ["/bin/ratelimit"]
        env:
        - name: LOG_LEVEL
          value: debug
        - name: REDIS_SOCKET_TYPE
          value: tcp
        - name: REDIS_URL
          value: redis:6379
        - name: USE_STATSD
          value: "false"
        - name: RUNTIME_ROOT
          value: /data
        - name: RUNTIME_SUBDIRECTORY
          value: ratelimit
        ports:
        - containerPort: 8080
        - containerPort: 8081
        - containerPort: 6070
        volumeMounts:
        - name: config-volume
          mountPath: /data/ratelimit/config/config.yaml
          subPath: config.yaml
      volumes:
      - name: config-volume
        configMap:
          name: ratelimit-config
EOF

kubectl apply -f ratelimit-deploy.yaml -n istio

This creates redis and the official ratelimit service.

3. Create the EnvoyFilter

cat << EOF > envoyfilter-filter-mysql-inside.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit
  namespace: istio
spec:
  workloadSelector:
    labels:
      app: mysqldb
      version: v1
  configPatches:
    - applyTo: NETWORK_FILTER
      match:
        listener:
          portNumber: 3306
          filterChain:
            filter:
              name: "envoy.filters.network.tcp_proxy"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.network.ratelimit
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.network.ratelimit.v3.RateLimit
            domain: mysql-ratelimit
            failure_mode_deny: true
            stat_prefix: mysql_ratelimit
            descriptors:
            - entries:
              - key: test
                value: test
              limit:
                requests_per_unit: 2
                unit: MINUTE
            rate_limit_service:
              grpc_service:
                envoy_grpc:
                  cluster_name: rate_limit_cluster
                timeout: 10s
              transport_api_version: V3
    - applyTo: CLUSTER
      match:
        cluster:
          service: ratelimit.istio.svc.cluster.local
      patch:
        operation: ADD
        value:
          name: rate_limit_cluster
          type: STRICT_DNS
          connect_timeout: 10s
          lb_policy: ROUND_ROBIN
          http2_protocol_options: {}
          load_assignment:
            cluster_name: rate_limit_cluster
            endpoints:
            - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: ratelimit.istio.svc.cluster.local
                      port_value: 8081
EOF

kubectl apply -f envoyfilter-filter-mysql-inside.yaml -n istio

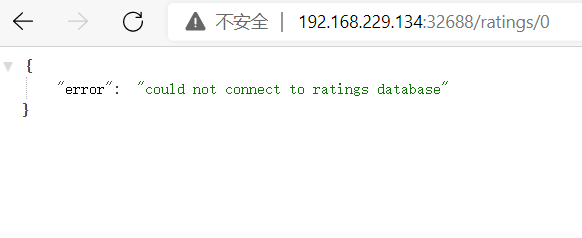

node@ratings-v2-mysql-565f8fd887-8hp9s:/opt/microservices$ curl ratings.istio.svc:9080/ratings/0
{"id":0,"ratings":{"Reviewer1":5,"Reviewer2":4}}
node@ratings-v2-mysql-565f8fd887-8hp9s:/opt/microservices$ curl ratings.istio.svc:9080/ratings/0
{"error":"could not connect to ratings database"}
node@ratings-v2-mysql-565f8fd887-8hp9s:/opt/microservices$ curl ratings.istio.svc:9080/ratings/0
{"error":"could not connect to ratings database"}

Clean up:

kubectl delete -f mysql.yaml -n istio
kubectl delete -f ratings-mysql.yaml -n istio
kubectl delete -f envoyfilter-filter-mysql-inside.yaml -n istio
kubectl delete -f ratelimit-deploy.yaml -n istio
kubectl delete -f ratelimit-config-mysql-inside.yaml -n istio

3.2.2.1.2 service flow restriction outside the cluster

3.2.2.1.2.1 local current limiting

Deploying rating-v2

cat << EOF > bookinfo-ratings-v2-mysql-vm.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratings-v2-mysql-vm
  labels:
    app: ratings
    version: v2-mysql-vm
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratings
      version: v2-mysql-vm
  template:
    metadata:
      labels:
        app: ratings
        version: v2-mysql-vm
    spec:
      containers:
      - name: ratings
        image: docker.io/istio/examples-bookinfo-ratings-v2:1.16.2
        imagePullPolicy: IfNotPresent
        env:
          # This assumes you registered your mysql vm as
          # istioctl register -n vm mysqldb 1.2.3.4 3306
          - name: DB_TYPE
            value: "mysql"
          - name: MYSQL_DB_HOST
            value: mysql.vm.demo
          - name: MYSQL_DB_PORT
            value: "3306"
          - name: MYSQL_DB_USER
            value: root
          - name: MYSQL_DB_PASSWORD
            value: root
        ports:
        - containerPort: 9080
        securityContext:
          runAsUser: 1000
EOF

kubectl apply -f bookinfo-ratings-v2-mysql-vm.yaml -n istio

Deploy mysql on the vm. The steps are omitted here because they are a little complicated; readers who need the documentation can add me on wechat.

Create serviceentry

cat << EOF > se-mysql.yaml
apiVersion: networking.istio.io/v1beta1
kind: ServiceEntry
metadata:
  name: mysql-se
spec:
  hosts:
  - mysql.vm.demo
  addresses:
  - 192.168.229.12
  location: MESH_INTERNAL
  ports:
  - number: 3306
    name: mysql
    protocol: TCP
    targetPort: 3306
  resolution: STATIC
  workloadSelector:
    labels:
      app: mysql
      type: vm
EOF

kubectl apply -f se-mysql.yaml -n vm

This ServiceEntry makes the mysql service deployed on the virtual machine reachable from the mesh.

Add a resolution record to the coredns configuration

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
           lameduck 5s
        }
        hosts {
           192.168.229.11 httpd.vm.demo
           192.168.229.12 mysql.vm.demo
           fallthrough
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
           max_concurrent 1000
        }
        cache 30
        reload
        loadbalance
    }
kind: ConfigMap

The line to add is 192.168.229.12 mysql.vm.demo, inside the hosts block.
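Before restarting coredns it can be worth checking that the record really is in the Corefile. A minimal sketch, assuming the Corefile text has been saved to a local file; lookup_host is a hypothetical helper that simply reads hosts-style "IP name" lines with awk.

```shell
#!/usr/bin/env bash
# lookup_host FILE NAME
# Hypothetical helper: prints the IP mapped to NAME in hosts-style lines of FILE
# (first field is the IP, second field is the name), or nothing if not found.
lookup_host() {
  awk -v name="$2" '$2 == name { print $1; exit }' "$1"
}

# Example with the hosts block from the Corefile above, written to a temp file.
corefile=$(mktemp)
cat > "$corefile" << 'EOF'
hosts {
   192.168.229.11 httpd.vm.demo
   192.168.229.12 mysql.vm.demo
   fallthrough
}
EOF

lookup_host "$corefile" mysql.vm.demo   # prints: 192.168.229.12
rm -f "$corefile"
```

On a live cluster the Corefile can be dumped first with `kubectl -n kube-system get configmap coredns -o jsonpath='{.data.Corefile}'` (assuming the ConfigMap is named coredns, as in a default installation) and passed to the same function.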

Restart coredns

kubectl rollout restart -n kube-system deployment coredns

Perform pressure test

go-stress-testing -c 1 -n 10000 -u http://192.168.229.134:32688/productpage

[root@node01 ~]# go-stress-testing -c 1 -n 10000 -u http://192.168.229.134:32688/productpage

Start
Concurrent number:1 Number of requests:10000
Request parameters:
request:
 form:http
 url:http://192.168.229.134:32688/productpage
 method:GET
 headers:map[]
 data:
 verify:statusCode
 timeout:30s
 debug:false

─────┬───────┬───────┬───────┬────────┬────────┬────────┬────────┬────────┬────────┬────────
Elapsed│ Concurrency│ Successes│ Failures│ QPS│ Max time│ Min time│ Avg time│ Download bytes│ Bytes per second│ Status codes
─────┼───────┼───────┼───────┼────────┼────────┼────────┼────────┼────────┼────────┼────────
1s│ 1│ 18│ 0│ 18.20│ 91.36│ 17.66│ 54.94│ 87,270│ 87,264│200:18
2s│ 1│ 37│ 0│ 19.11│ 91.40│ 13.13│ 52.34│ 178,723│ 89,351│200:37
3s│ 1│ 54│ 0│ 18.42│ 97.80│ 13.13│ 54.30│ 260,814│ 86,928│200:54
4s│ 1│ 72│ 0│ 18.22│ 97.80│ 13.13│ 54.88│ 349,080│ 87,258│200:72
5s│ 1│ 90│ 0│ 18.17│ 103.04│ 12.98│ 55.04│ 436,350│ 87,264│200:90
6s│ 1│ 111│ 0│ 18.59│ 103.04│ 12.98│ 53.80│ 538,165│ 89,686│200:111
7s│ 1│ 132│ 0│ 18.91│ 103.04│ 12.98│ 52.89│ 638,984│ 91,279│200:132
8s│ 1│ 150│ 0│ 18.88│ 103.04│ 12.30│ 52.95│ 727,250│ 90,905│200:150

Before the limit is applied, every request in the stress test returns 200.

cat <<EOF > envoyfilter-local-rate-limit-mysql-vm-outside.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-local-ratelimit-svc
spec:
  workloadSelector:
    labels:
      app: ratings
  configPatches:
    - applyTo: NETWORK_FILTER
      match:
        context: SIDECAR_OUTBOUND
        listener:
          portNumber: 3306
          filterChain:
            filter:
              name: "envoy.filters.network.tcp_proxy"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.network.local_ratelimit
          typed_config:
            "@type": type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.network.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: tcp_local_rate_limiter
              token_bucket:
                max_tokens: 10
                tokens_per_fill: 10
                fill_interval: 60s
              runtime_enabled:
                runtime_key: tcp_rate_limit_enabled
                default_value: true
EOF

kubectl apply -f envoyfilter-local-rate-limit-mysql-vm-outside.yaml -n istio

Deploying this EnvoyFilter makes the limit take effect. It is applied to the ratings workload and matches its outbound traffic (SIDECAR_OUTBOUND). The network filter name must be envoy.filters.network.local_ratelimit, and the type_url is also fixed. token_bucket configures the token bucket.

ratings now reports that it cannot connect to the database, which means the limit is in effect: connections from ratings to the mysql service on the vm are being rejected.

Clean up:

kubectl delete envoyfilter filter-local-ratelimit-svc -n istio
kubectl delete se mysql-se -n vm

3.2.2.1.2.2 global current limiting

Deploying rating-v2

cat << EOF > bookinfo-ratings-v2-mysql-vm.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratings-v2-mysql-vm
  labels:
    app: ratings
    version: v2-mysql-vm
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratings
      version: v2-mysql-vm
  template:
    metadata:
      labels:
        app: ratings
        version: v2-mysql-vm
    spec:
      containers:
      - name: ratings
        image: docker.io/istio/examples-bookinfo-ratings-v2:1.16.2
        imagePullPolicy: IfNotPresent
        env:
          # This assumes you registered your mysql vm as
          # istioctl register -n vm mysqldb 1.2.3.4 3306
          - name: DB_TYPE
            value: "mysql"
          - name: MYSQL_DB_HOST
            value: mysql.vm.demo
          - name: MYSQL_DB_PORT
            value: "3306"
          - name: MYSQL_DB_USER
            value: root
          - name: MYSQL_DB_PASSWORD
            value: root
        ports:
        - containerPort: 9080
        securityContext:
          runAsUser: 1000
EOF

kubectl apply -f bookinfo-ratings-v2-mysql-vm.yaml -n istio

Deploy mysql on the vm. The steps are omitted here because they are a little complicated; readers who need the documentation can add me on wechat.

Create serviceentry

cat << EOF > se-mysql.yaml
apiVersion: networking.istio.io/v1beta1
kind: ServiceEntry
metadata:
  name: mysql-se
spec:
  hosts:
  - mysql.vm.demo
  addresses:
  - 192.168.229.12
  location: MESH_INTERNAL
  ports:
  - number: 3306
    name: mysql
    protocol: TCP
    targetPort: 3306
  resolution: STATIC
  workloadSelector:
    labels:
      app: mysql
      type: vm
EOF

kubectl apply -f se-mysql.yaml -n vm

This ServiceEntry makes the mysql service deployed on the virtual machine reachable from the mesh.

Add a resolution record to the coredns configuration

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
           lameduck 5s
        }
        hosts {
           192.168.229.11 httpd.vm.demo
           192.168.229.12 mysql.vm.demo
           fallthrough
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
           max_concurrent 1000
        }
        cache 30
        reload
        loadbalance
    }
kind: ConfigMap

The line to add is 192.168.229.12 mysql.vm.demo, inside the hosts block.

Restart coredns

kubectl rollout restart -n kube-system deployment coredns

Perform pressure test

go-stress-testing -c 1 -n 10000 -u http://192.168.229.134:32688/productpage

[root@node01 ~]# go-stress-testing -c 1 -n 10000 -u http://192.168.229.134:32688/productpage

Start
Concurrent number:1 Number of requests:10000
Request parameters:
request:
 form:http
 url:http://192.168.229.134:32688/productpage
 method:GET
 headers:map[]
 data:
 verify:statusCode
 timeout:30s
 debug:false

─────┬───────┬───────┬───────┬────────┬────────┬────────┬────────┬────────┬────────┬────────
Elapsed│ Concurrency│ Successes│ Failures│ QPS│ Max time│ Min time│ Avg time│ Download bytes│ Bytes per second│ Status codes
─────┼───────┼───────┼───────┼────────┼────────┼────────┼────────┼────────┼────────┼────────
1s│ 1│ 18│ 0│ 18.20│ 91.36│ 17.66│ 54.94│ 87,270│ 87,264│200:18
2s│ 1│ 37│ 0│ 19.11│ 91.40│ 13.13│ 52.34│ 178,723│ 89,351│200:37
3s│ 1│ 54│ 0│ 18.42│ 97.80│ 13.13│ 54.30│ 260,814│ 86,928│200:54
4s│ 1│ 72│ 0│ 18.22│ 97.80│ 13.13│ 54.88│ 349,080│ 87,258│200:72
5s│ 1│ 90│ 0│ 18.17│ 103.04│ 12.98│ 55.04│ 436,350│ 87,264│200:90
6s│ 1│ 111│ 0│ 18.59│ 103.04│ 12.98│ 53.80│ 538,165│ 89,686│200:111
7s│ 1│ 132│ 0│ 18.91│ 103.04│ 12.98│ 52.89│ 638,984│ 91,279│200:132
8s│ 1│ 150│ 0│ 18.88│ 103.04│ 12.30│ 52.95│ 727,250│ 90,905│200:150

Before the limit is applied, every request in the stress test returns 200.

cat << EOF > envoyfilter-filter-mysql-outside.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit
  namespace: istio
spec:
  workloadSelector:
    labels:
      app: ratings
  configPatches:
    - applyTo: NETWORK_FILTER
      match:
        context: SIDECAR_OUTBOUND
        listener:
          portNumber: 3306
          filterChain:
            filter:
              name: "envoy.filters.network.tcp_proxy"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.network.ratelimit
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.network.ratelimit.v3.RateLimit
            domain: mysql-ratelimit
            failure_mode_deny: true
            stat_prefix: mysql_ratelimit
            descriptors:
            - entries:
              - key: test
                value: test
              limit:
                requests_per_unit: 2
                unit: MINUTE
            rate_limit_service:
              grpc_service:
                envoy_grpc:
                  cluster_name: rate_limit_cluster
                timeout: 10s
              transport_api_version: V3
    - applyTo: CLUSTER
      match:
        cluster:
          service: ratelimit.istio.svc.cluster.local
      patch:
        operation: ADD
        value:
          name: rate_limit_cluster
          type: STRICT_DNS
          connect_timeout: 10s
          lb_policy: ROUND_ROBIN
          http2_protocol_options: {}
          load_assignment:
            cluster_name: rate_limit_cluster
            endpoints:
            - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: ratelimit.istio.svc.cluster.local
                      port_value: 8081
EOF

kubectl apply -f envoyfilter-filter-mysql-outside.yaml -n istio

Clean up:

kubectl delete envoyfilter filter-ratelimit -n istio
kubectl delete se mysql-se -n vm

3.2.2.2 multi cluster

3.2.2.2.1 cluster preparation

Same as above.

3.2.2.2.2 local current limiting in the cluster

Deploy mysql on cluster1 and cluster2

cat << EOF > mysql.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-credentials
type: Opaque
data:
  rootpasswd: cGFzc3dvcmQ=
---
apiVersion: v1
kind: Service
metadata:
  name: mysqldb
  labels:
    app: mysqldb
    service: mysqldb
spec:
  ports:
  - port: 3306
    name: tcp
  selector:
    app: mysqldb
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysqldb-v1
  labels:
    app: mysqldb
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysqldb
      version: v1
  template:
    metadata:
      labels:
        app: mysqldb
        version: v1
    spec:
      containers:
      - name: mysqldb
        image: docker.io/istio/examples-bookinfo-mysqldb:1.16.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3306
        env:
          - name: MYSQL_ROOT_PASSWORD
            valueFrom:
              secretKeyRef:
                name: mysql-credentials
                key: rootpasswd
        args: ["--default-authentication-plugin","mysql_native_password"]
        volumeMounts:
        - name: var-lib-mysql
          mountPath: /var/lib/mysql
      volumes:
      - name: var-lib-mysql
        emptyDir: {}
EOF

kubectl apply -f mysql.yaml -n istio

Note: this deploys the mysql service, which ratings queries when it fetches its data.

Deploy mysql ratings for cluster1 and cluster2

cat << EOF > ratings-mysql.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratings-v2-mysql
  labels:
    app: ratings
    version: v2-mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratings
      version: v2-mysql
  template:
    metadata:
      labels:
        app: ratings
        version: v2-mysql
    spec:
      containers:
      - name: ratings
        image: docker.io/istio/examples-bookinfo-ratings-v2:1.16.2
        imagePullPolicy: IfNotPresent
        env:
          # ratings-v2 will use mongodb as the default db backend.
          # if you would like to use mysqldb then you can use this file
          # which sets DB_TYPE = 'mysql' and the rest of the parameters shown
          # here and also create the # mysqldb service using bookinfo-mysql.yaml
          # NOTE: This file is mutually exclusive to bookinfo-ratings-v2.yaml
          - name: DB_TYPE
            value: "mysql"
          - name: MYSQL_DB_HOST
            value: mysqldb
          - name: MYSQL_DB_PORT
            value: "3306"
          - name: MYSQL_DB_USER
            value: root
          - name: MYSQL_DB_PASSWORD
            value: password
        ports:
        - containerPort: 9080
        securityContext:
          runAsUser: 1000
EOF

kubectl apply -f ratings-mysql.yaml -n istio

This deploys the mysql version of ratings. The database connection is configured through the env variables.

cat <<EOF > envoyfilter-local-rate-limit-mysql-inside.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-local-ratelimit-svc
spec:
  workloadSelector:
    labels:
      app: mysqldb
      version: v1
  configPatches:
    - applyTo: NETWORK_FILTER
      match:
        listener:
          portNumber: 3306
          filterChain:
            filter:
              name: "envoy.filters.network.tcp_proxy"
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.network.local_ratelimit
          typed_config:
            "@type": type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.network.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: tcp_local_rate_limiter
              token_bucket:
                max_tokens: 1
                tokens_per_fill: 1
                fill_interval: 60s
              runtime_enabled:
                runtime_key: tcp_rate_limit_enabled
                default_value: true
EOF

Run on cluster1 and cluster2:

kubectl apply -f envoyfilter-local-rate-limit-mysql-inside.yaml -n istio

Note that applyTo here is NETWORK_FILTER, because mysql is a TCP service, not an http service. The name of the filter is envoy.filters.network.local_ratelimit, and the type_url is also fixed, so take care to write both exactly. token_bucket configures the number of tokens available for rate limiting and how fast they are refilled. The new filter has to sit in front of envoy.filters.network.tcp_proxy, so the operation is INSERT_BEFORE.

For local current limiting in multiple clusters, the ratelimit configuration has to be added through every istiod, that is, the EnvoyFilter must be applied in each cluster.
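Applying the same manifest to every cluster is easy to script. A minimal sketch, assuming each cluster is reachable through a kubeconfig context and that the contexts are named cluster1 and cluster2 (an assumption; use whatever `kubectl config get-contexts` lists). The hypothetical helper apply_to_clusters only prints the kubectl commands so they can be reviewed before being run.

```shell
#!/usr/bin/env bash
# apply_to_clusters MANIFEST CONTEXT...
# Hypothetical helper: prints one "kubectl apply" command per kube context.
# Nothing is executed; pipe the output to sh once it looks right.
apply_to_clusters() {
  local manifest=$1
  shift
  local ctx
  for ctx in "$@"; do
    echo "kubectl --context $ctx apply -f $manifest -n istio"
  done
}

apply_to_clusters envoyfilter-local-rate-limit-mysql-inside.yaml cluster1 cluster2
```

Appending `| sh` to the last line runs the printed commands.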

Clean up:

kubectl delete envoyfilter filter-local-ratelimit-svc -n istio

3.3 different current limiting actions

3.3.1 source_cluster

Deploy ratelimit

1. Create the ConfigMap

cat << EOF > ratelimit-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    domain: productpage-ratelimit
    descriptors:
      - key: source_cluster
        value: "outbound|80||istio-ingressgateway.istio-system.svc.cluster.local"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      - key: source_cluster
        rate_limit:
          unit: minute
          requests_per_unit: 10
EOF

kubectl apply -f ratelimit-config.yaml -n istio

2. Create speed limit service deployment

cat << EOF > ratelimit-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    app: redis
spec:
  ports:
  - name: redis
    port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - image: redis:alpine
        imagePullPolicy: Always
        name: redis
        ports:
        - name: redis
          containerPort: 6379
      restartPolicy: Always
      serviceAccountName: ""
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  labels:
    app: ratelimit
spec:
  ports:
  - name: http-port
    port: 8080
    targetPort: 8080
    protocol: TCP
  - name: grpc-port
    port: 8081
    targetPort: 8081
    protocol: TCP
  - name: http-debug
    port: 6070
    targetPort: 6070
    protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
    spec:
      containers:
      - image: envoyproxy/ratelimit:6f5de117 # 2021/01/08
        imagePullPolicy: Always
        name: ratelimit
        command: ["/bin/ratelimit"]
        env:
        - name: LOG_LEVEL
          value: debug
        - name: REDIS_SOCKET_TYPE
          value: tcp
        - name: REDIS_URL
          value: redis:6379
        - name: USE_STATSD
          value: "false"
        - name: RUNTIME_ROOT
          value: /data
        - name: RUNTIME_SUBDIRECTORY
          value: ratelimit
        ports:
        - containerPort: 8080
        - containerPort: 8081
        - containerPort: 6070
        volumeMounts:
        - name: config-volume
          mountPath: /data/ratelimit/config/config.yaml
          subPath: config.yaml
      volumes:
      - name: config-volume
        configMap:
          name: ratelimit-config
EOF

kubectl apply -f ratelimit-deploy.yaml -n istio

3. Create the EnvoyFilter

cat << EOF > envoyfilter-filter.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit
  namespace: istio
spec:
  workloadSelector:
    # select by label in the same namespace
    labels:
      app: productpage
  configPatches:
    # The Envoy config you want to modify
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
              subFilter:
                name: "envoy.filters.http.router"
      patch:
        operation: INSERT_BEFORE
        # Adds the Envoy Rate Limit Filter in HTTP filter chain.
        value:
          name: envoy.filters.http.ratelimit
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.ratelimit.v3.RateLimit
            # domain can be anything! Match it to the ratelimter service config
            domain: productpage-ratelimit
            failure_mode_deny: true
            rate_limit_service:
              grpc_service:
                envoy_grpc:
                  cluster_name: rate_limit_cluster
                timeout: 10s
              transport_api_version: V3
    - applyTo: CLUSTER
      match:
        cluster:
          service: ratelimit.istio.svc.cluster.local
      patch:
        operation: ADD
        # Adds the rate limit service cluster for rate limit service defined in step 1.
        value:
          name: rate_limit_cluster
          type: STRICT_DNS
          connect_timeout: 10s
          lb_policy: ROUND_ROBIN
          http2_protocol_options: {}
          load_assignment:
            cluster_name: rate_limit_cluster
            endpoints:
            - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: ratelimit.istio.svc.cluster.local
                      port_value: 8081
EOF

kubectl apply -f envoyfilter-filter.yaml -n istio

4. Create action envoyfilter

cat << EOF > envoyfilter-action.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit-svc
  namespace: istio
spec:
  workloadSelector:
    labels:
      app: productpage
  configPatches:
    - applyTo: VIRTUAL_HOST
      match:
        context: SIDECAR_INBOUND
        routeConfiguration:
          vhost:
            name: "inbound|http|9080"
            route:
              action: ANY
      patch:
        operation: MERGE
        # Applies the rate limit rules.
        value:
          rate_limits:
            - actions:
              - source_cluster: {}
EOF

kubectl apply -f envoyfilter-action.yaml -n istio

[root@node01 ~]# go-stress-testing -n 1000000 -c 10 -u http://192.168.229.135:32688/productpage

Start
Concurrent number:10 Number of requests:1000000
Request parameters:
request:
 form:http
 url:http://192.168.229.135:32688/productpage
 method:GET
 headers:map[]
 data:
 verify:statusCode
 timeout:30s
 debug:false

─────┬───────┬───────┬───────┬────────┬────────┬────────┬────────┬────────┬────────┬────────
Elapsed│ Concurrency│ Successes│ Failures│ QPS│ Max time│ Min time│ Avg time│ Download bytes│ Bytes per second│ Status codes
─────┼───────┼───────┼───────┼────────┼────────┼────────┼────────┼────────┼────────┼────────
1s│ 10│ 10│ 741│ 10.06│ 164.41│ 5.07│ 994.21│ 48,814│ 48,793│200:10;429:739;500:2
2s│ 10│ 10│ 1644│ 5.02│ 164.41│ 5.07│ 1992.68│ 48,814│ 24,403│200:10;429:1642;500:2
3s│ 10│ 10│ 2486│ 3.34│ 164.41│ 4.99│ 2989.70│ 48,814│ 16,268│200:10;429:2484;500:2
4s│ 10│ 10│ 3332│ 2.51│ 164.41│ 4.94│ 3989.95│ 48,814│ 12,203│200:10;429:3330;500:2
5s│ 10│ 10│ 4213│ 2.00│ 164.41│ 4.61│ 4988.90│ 48,814│ 9,762│200:10;429:4207;500:6

3.3.2 destination_cluster
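The last column of the go-stress-testing output packs the status codes into one string such as 200:10;429:739;500:2. A small sketch for pulling a single count out of that string; count_status is a hypothetical helper and not part of go-stress-testing.

```shell
#!/usr/bin/env bash
# count_status SUMMARY CODE
# Hypothetical helper: SUMMARY looks like "200:10;429:739;500:2".
# Prints the count recorded for CODE, or 0 if the code does not appear.
count_status() {
  local summary=$1 want=$2 pair
  local IFS=';'
  for pair in $summary; do
    if [ "${pair%%:*}" = "$want" ]; then
      echo "${pair#*:}"
      return 0
    fi
  done
  echo 0
}

count_status '200:10;429:739;500:2' 200   # prints: 10
count_status '200:10;429:739;500:2' 429   # prints: 739
```

In the run above the number of 200 responses stays at 10 from the first second onwards, which would be consistent with the 10 requests per minute quota of the key-only source_cluster descriptor being the one that applied.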

Deploy ratelimit

1. Create the ConfigMap

cat << EOF > ratelimit-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    domain: productpage-ratelimit
    descriptors:
      - key: destination_cluster
        value: "inbound|9080||"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      - key: destination_cluster
        rate_limit:
          unit: minute
          requests_per_unit: 10
EOF

kubectl apply -f ratelimit-config.yaml -n istio

2. Create speed limit service deployment

cat << EOF > ratelimit-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    app: redis
spec:
  ports:
  - name: redis
    port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - image: redis:alpine
        imagePullPolicy: Always
        name: redis
        ports:
        - name: redis
          containerPort: 6379
      restartPolicy: Always
      serviceAccountName: ""
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  labels:
    app: ratelimit
spec:
  ports:
  - name: http-port
    port: 8080
    targetPort: 8080
    protocol: TCP
  - name: grpc-port
    port: 8081
    targetPort: 8081
    protocol: TCP
  - name: http-debug
    port: 6070
    targetPort: 6070
    protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
    spec:
      containers:
      - image: envoyproxy/ratelimit:6f5de117 # 2021/01/08
        imagePullPolicy: Always
        name: ratelimit
        command: ["/bin/ratelimit"]
        env:
        - name: LOG_LEVEL
          value: debug
        - name: REDIS_SOCKET_TYPE
          value: tcp
        - name: REDIS_URL
          value: redis:6379
        - name: USE_STATSD
          value: "false"
        - name: RUNTIME_ROOT
          value: /data
        - name: RUNTIME_SUBDIRECTORY
          value: ratelimit
        ports:
        - containerPort: 8080
        - containerPort: 8081
        - containerPort: 6070
        volumeMounts:
        - name: config-volume
          mountPath: /data/ratelimit/config/config.yaml
          subPath: config.yaml
      volumes:
      - name: config-volume
        configMap:
          name: ratelimit-config
EOF

kubectl apply -f ratelimit-deploy.yaml -n istio

3. Create the EnvoyFilter

cat << EOF > envoyfilter-filter.yaml

apiVersion: networking.istio.io/v1alpha3

kind: EnvoyFilter

metadata:

name: filter-ratelimit

namespace: istio

spec:

workloadSelector:

# select by label in the same namespace

labels:

app: productpage

configPatches:

# The Envoy config you want to modify

- applyTo: HTTP_FILTER

match:

context: SIDECAR_INBOUND

listener:

filterChain:

filter:

name: "envoy.filters.network.http_connection_manager"

subFilter:

name: "envoy.filters.http.router"

patch:

operation: INSERT_BEFORE

# Adds the Envoy Rate Limit Filter in HTTP filter chain.

value:

name: envoy.filters.http.ratelimit

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.ratelimit.v3.RateLimit

# domain can be anything! Match it to the ratelimter service config

domain: productpage-ratelimit

failure_mode_deny: true

rate_limit_service:

grpc_service:

envoy_grpc:

cluster_name: rate_limit_cluster

timeout: 10s

transport_api_version: V3

- applyTo: CLUSTER

match:

cluster:

service: ratelimit.istio.svc.cluster.local

patch:

operation: ADD

# Adds the rate limit service cluster for rate limit service defined in step 1.

value:

name: rate_limit_cluster

type: STRICT_DNS

connect_timeout: 10s

lb_policy: ROUND_ROBIN

http2_protocol_options: {}

load_assignment:

cluster_name: rate_limit_cluster

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: ratelimit.istio.svc.cluster.local

port_value: 8081

EOF

kubectl apply -f envoyfilter-filter.yaml -n istio
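After applying the EnvoyFilter, one way to confirm that the patch landed is to dump the sidecar's listeners and look for the filter name. This is a minimal sketch, not a required step: has_ratelimit_filter is a hypothetical helper name of my own, and it only checks that the quoted filter name appears somewhere in the JSON it reads.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of istioctl.
# Succeeds if the listener dump read from stdin contains the global rate limit HTTP filter.
# The surrounding quotes keep it from matching envoy.filters.http.local_ratelimit.
has_ratelimit_filter() {
  grep -qF '"envoy.filters.http.ratelimit"'
}
```

A possible way to use it, assuming productpage runs in the istio namespace as in the manifests above:

  POD=$(kubectl get pod -n istio -l app=productpage -o jsonpath='{.items[0].metadata.name}')
  istioctl proxy-config listener "$POD" -n istio -o json | has_ratelimit_filter && echo "filter present"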

4. Create the action EnvoyFilter

cat << EOF > envoyfilter-action.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit-svc
  namespace: istio
spec:
  workloadSelector:
    labels:
      app: productpage
  configPatches:
    - applyTo: VIRTUAL_HOST
      match:
        context: SIDECAR_INBOUND
        routeConfiguration:
          vhost:
            name: "inbound|http|9080"
            route:
              action: ANY
      patch:
        operation: MERGE
        # Applies the rate limit rules.
        value:
          rate_limits:
            - actions:
              - destination_cluster: {}
EOF

kubectl apply -f envoyfilter-action.yaml -n istio

[root@node01 ~]# go-stress-testing -n 1000000 -c 10 -u http://192.168.229.135:32688/productpage

Start  Concurrency: 10  Number of requests: 1000000  Request parameters:
request:
 form: http
 url: http://192.168.229.135:32688/productpage
 method: GET
 headers: map[]
 data:
 verify: statusCode
 timeout: 30s
 debug: false

Elapsed│Concurrency│Successes│Failures│  QPS│Max time│Min time│Avg time│Bytes downloaded│Bytes/s│Status codes
───────┼───────────┼─────────┼────────┼─────┼────────┼────────┼────────┼────────────────┼───────┼────────────────────
     1s│         10│        1│     799│ 1.01│   38.49│    5.84│ 9904.46│           4,183│  4,182│200:1;429:799
     2s│         10│        1│    1703│ 0.50│   38.49│    5.31│19918.49│           4,183│  2,090│200:1;429:1703
     3s│         10│        1│    2553│ 0.33│   38.49│    4.88│29894.78│           4,183│  1,394│200:1;429:2553
     4s│         10│        1│    3452│ 0.25│   38.49│    4.88│39907.66│           4,183│  1,045│200:1;429:3452
     5s│         10│        1│    4339│ 0.20│   38.49│    4.26│49863.27│           4,183│    836│200:1;429:4339
     6s│         10│        1│    5130│ 0.17│   38.49│    4.26│59819.79│           4,183│    697│200:1;429:5130
     7s│         10│        1│    5945│ 0.14│   38.49│    4.26│69888.47│           4,183│    597│200:1;429:5945
     8s│         10│        1│    6773│ 0.13│   38.49│    4.26│79848.79│           4,183│    522│200:1;429:6772;500:1
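If go-stress-testing is not at hand, the same behavior can be spot-checked with plain curl. The sketch below is only an illustration: probe and tally_codes are hypothetical helper names of my own, and the summary format simply imitates the status-code column in the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of go-stress-testing.

# Send one GET request to the URL in $1 and print only the HTTP status code.
probe() {
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 5 "$1"
}

# Read one status code per line from stdin and print a summary
# in the form "200:1;429:4" (code:count pairs, sorted by code).
tally_codes() {
  sort | uniq -c | awk '{ printf "%s%s:%s", sep, $2, $1; sep = ";" } END { print "" }'
}
```

A possible way to use it against the URL from the test above:

  for i in $(seq 1 5); do probe http://192.168.229.135:32688/productpage; done | tally_codes

Once the limit is exhausted, most of the requests should be counted under 429.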

3.3.3 request_headers

Omitted.

3.3.4 remote_address

Deploy ratelimit

1. Create the ConfigMap

cat << EOF > ratelimit-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    domain: productpage-ratelimit
    descriptors:
      - key: remote_address
        value: "172.20.1.0"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      - key: remote_address
        rate_limit:
          unit: minute
          requests_per_unit: 10
EOF

kubectl apply -f ratelimit-config.yaml -n istio
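The two remote_address entries in this ConfigMap differ only in whether a value is given. As I understand the ratelimit service, an entry whose value matches the descriptor wins, and the key-only entry acts as the default for every other address. The function below is a hypothetical illustration of that assumed precedence with the numbers from the ConfigMap hard-coded; it is not how the service is implemented.

```shell
#!/usr/bin/env bash
# Hypothetical illustration only: print the requests-per-minute limit that the
# sample ConfigMap is assumed to assign to the client address given in $1.
limit_for_remote_address() {
  case "$1" in
    172.20.1.0) echo 1 ;;   # entry with an explicit value: 1 request per minute
    *)          echo 10 ;;  # key-only entry: default of 10 requests per minute
  esac
}
```

For example, limit_for_remote_address 172.20.1.0 prints 1, while any other address prints 10.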

2. Create the rate limit service deployment

cat << EOF > ratelimit-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    app: redis
spec:
  ports:
  - name: redis
    port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - image: redis:alpine
        imagePullPolicy: Always
        name: redis
        ports:
        - name: redis
          containerPort: 6379
      restartPolicy: Always
      serviceAccountName: ""
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  labels:
    app: ratelimit
spec:
  ports:
  - name: http-port
    port: 8080
    targetPort: 8080
    protocol: TCP
  - name: grpc-port
    port: 8081
    targetPort: 8081
    protocol: TCP
  - name: http-debug
    port: 6070
    targetPort: 6070
    protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
    spec:
      containers:
      - image: envoyproxy/ratelimit:6f5de117 # 2021/01/08
        imagePullPolicy: Always
        name: ratelimit
        command: ["/bin/ratelimit"]
        env:
        - name: LOG_LEVEL
          value: debug
        - name: REDIS_SOCKET_TYPE
          value: tcp
        - name: REDIS_URL
          value: redis:6379
        - name: USE_STATSD
          value: "false"
        - name: RUNTIME_ROOT
          value: /data
        - name: RUNTIME_SUBDIRECTORY
          value: ratelimit
        ports:
        - containerPort: 8080
        - containerPort: 8081
        - containerPort: 6070
        volumeMounts:
        - name: config-volume
          mountPath: /data/ratelimit/config/config.yaml
          subPath: config.yaml
      volumes:
      - name: config-volume
        configMap:
          name: ratelimit-config
EOF

kubectl apply -f ratelimit-deploy.yaml -n istio

3. Create the EnvoyFilter

cat << EOF > envoyfilter-filter.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit
  namespace: istio
spec:
  workloadSelector:
    # select by label in the same namespace
    labels:
      app: productpage
  configPatches:
    # The Envoy config you want to modify
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
              subFilter:
                name: "envoy.filters.http.router"
      patch:
        operation: INSERT_BEFORE
        # Adds the Envoy Rate Limit Filter in HTTP filter chain.
        value:
          name: envoy.filters.http.ratelimit
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.ratelimit.v3.RateLimit
            # domain can be anything! Match it to the ratelimiter service config
            domain: productpage-ratelimit
            failure_mode_deny: true
            rate_limit_service:
              grpc_service:
                envoy_grpc:
                  cluster_name: rate_limit_cluster
                timeout: 10s
              transport_api_version: V3
    - applyTo: CLUSTER
      match:
        cluster:
          service: ratelimit.istio.svc.cluster.local
      patch:
        operation: ADD
        # Adds the rate limit service cluster for the rate limit service defined in step 2.
        value:
          name: rate_limit_cluster
          type: STRICT_DNS
          connect_timeout: 10s
          lb_policy: ROUND_ROBIN
          http2_protocol_options: {}
          load_assignment:
            cluster_name: rate_limit_cluster
            endpoints:
            - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: ratelimit.istio.svc.cluster.local
                      port_value: 8081
EOF

kubectl apply -f envoyfilter-filter.yaml -n istio

4. Create the action EnvoyFilter

cat << EOF > envoyfilter-action.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit-svc
  namespace: istio
spec:
  workloadSelector:
    labels:
      app: productpage
  configPatches:
    - applyTo: VIRTUAL_HOST
      match:
        context: SIDECAR_INBOUND
        routeConfiguration:
          vhost:
            name: "inbound|http|9080"
            route:
              action: ANY
      patch:
        operation: MERGE
        # Applies the rate limit rules.
        value:
          rate_limits:
            - actions:
              - remote_address: {}
EOF

kubectl apply -f envoyfilter-action.yaml -n istio

[root@node01 ~]# go-stress-testing -n 1000000 -c 10 -u http://192.168.229.135:32688/productpage

Start  Concurrency: 10  Number of requests: 1000000  Request parameters:
request:
 form: http
 url: http://192.168.229.135:32688/productpage
 method: GET
 headers: map[]
 data:
 verify: statusCode
 timeout: 30s
 debug: false

Elapsed│Concurrency│Successes│Failures│  QPS│Max time│Min time│Avg time│Bytes downloaded│Bytes/s│Status codes
───────┼───────────┼─────────┼────────┼─────┼────────┼────────┼────────┼────────────────┼───────┼────────────────────
     1s│         10│        1│     825│ 1.01│   58.24│    4.89│ 9914.57│           4,183│  4,181│200:1;429:825
     2s│         10│        1│    1652│ 0.50│   58.24│    4.89│19930.21│           4,183│  2,091│200:1;429:1652
     3s│         10│        1│    2565│ 0.33│   58.24│    4.89│29894.37│           4,183│  1,394│200:1;429:2565
     4s│         10│        1│    3433│ 0.25│   58.24│    4.76│39857.25│           4,183│  1,045│200:1;429:3433
     5s│         10│        1│    4176│ 0.20│   58.24│    4.76│49888.21│           4,183│    836│200:1;429:4176

3.3.5 generic_key

{
  "descriptor_value": "...",
  "descriptor_key": "..."
}

Deploy ratelimit

1. Create the ConfigMap

cat << EOF > ratelimit-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    domain: productpage-ratelimit
    descriptors:
      - key: test
        value: "test"
        rate_limit:
          unit: minute
          requests_per_unit: 2
      - key: test
        rate_limit:
          unit: minute
          requests_per_unit: 10
EOF

kubectl apply -f ratelimit-config.yaml -n istio

2. Create the rate limit service deployment

cat << EOF > ratelimit-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    app: redis
spec:
  ports:
  - name: redis
    port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - image: redis:alpine
        imagePullPolicy: Always
        name: redis
        ports:
        - name: redis
          containerPort: 6379
      restartPolicy: Always
      serviceAccountName: ""
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  labels:
    app: ratelimit
spec:
  ports:
  - name: http-port
    port: 8080
    targetPort: 8080
    protocol: TCP
  - name: grpc-port
    port: 8081
    targetPort: 8081
    protocol: TCP
  - name: http-debug
    port: 6070
    targetPort: 6070
    protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
    spec:
      containers:
      - image: envoyproxy/ratelimit:6f5de117 # 2021/01/08
        imagePullPolicy: Always
        name: ratelimit
        command: ["/bin/ratelimit"]
        env:
        - name: LOG_LEVEL
          value: debug
        - name: REDIS_SOCKET_TYPE
          value: tcp
        - name: REDIS_URL
          value: redis:6379
        - name: USE_STATSD
          value: "false"
        - name: RUNTIME_ROOT
          value: /data
        - name: RUNTIME_SUBDIRECTORY
          value: ratelimit
        ports:
        - containerPort: 8080
        - containerPort: 8081
        - containerPort: 6070
        volumeMounts:
        - name: config-volume
          mountPath: /data/ratelimit/config/config.yaml
          subPath: config.yaml
      volumes:
      - name: config-volume
        configMap:
          name: ratelimit-config
EOF

kubectl apply -f ratelimit-deploy.yaml -n istio

3. Create the EnvoyFilter

cat << EOF > envoyfilter-filter.yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: filter-ratelimit
  namespace: istio
spec:
  workloadSelector:
    # select by label in the same namespace
    labels:
      app: productpage
  configPatches:
    # The Envoy config you want to modify
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND
        listener:
          filterChain:
            filter:
              name: "envoy.filters.network.http_connection_manager"
              subFilter:
                name: "envoy.filters.http.router"
      patch:
        operation: INSERT_BEFORE
        # Adds the Envoy Rate Limit Filter in HTTP filter chain.
        value:
          name: envoy.filters.http.ratelimit
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.ratelimit.v3.RateLimit
            # domain can be anything! Match it to the ratelimiter service config
            domain: productpage-ratelimit
            failure_mode_deny: true
            rate_limit_service:
              grpc_service:
                envoy_grpc:
                  cluster_name: rate_limit_cluster
                timeout: 10s
              transport_api_version: V3
    - applyTo: CLUSTER
      match: