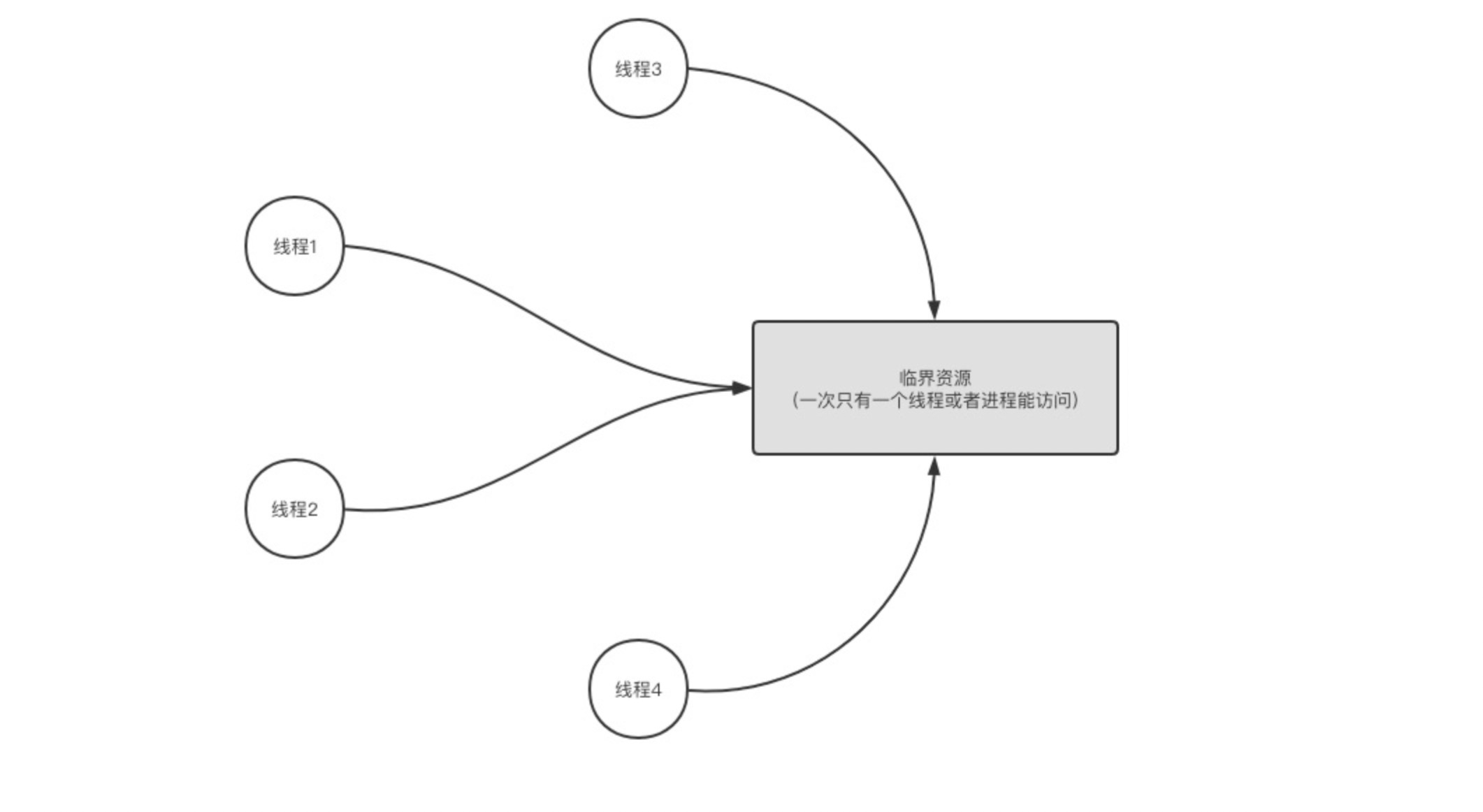

Why locks are needed:

Locks exist because of critical resources. A critical resource is one that only one process or thread may access at any given time, such as a printer: if several print jobs ran simultaneously, their output would be interleaved. Locking is how we guarantee that only one thread accesses such a resource at a time.

For example, in the following code the size variable is the critical resource. The program should finish with size equal to 10000, but the actual result is usually less than 10000. The reason is that size++ is not atomic: it reads the value, increments it and writes it back, so two threads can read the same value and one of the updates is lost. This is the thread-safety problem caused by not locking.

    import java.util.ArrayList;
    import java.util.List;
    import java.util.concurrent.CountDownLatch;

    public class ThreadUnSafeDemo {

        static int size;

        public static void main(String[] args) throws InterruptedException {
            // The CountDownLatch makes the threads start their work at roughly the same time
            CountDownLatch countDownLatch = new CountDownLatch(1);
            final List<Thread> list = new ArrayList<>(10);
            for (int i = 0; i < 10; i++) {
                Thread thread =
                    new Thread(
                        () -> {
                            try {
                                countDownLatch.await();
                            } catch (InterruptedException e) {
                                e.printStackTrace();
                            }
                            for (int j = 0; j < 1000; j++) {
                                size++;
                            }
                        });
                thread.start();
                list.add(thread);
            }
            countDownLatch.countDown();
            // Wait for all worker threads to finish before the main thread continues
            for (Thread thread : list) {
                thread.join();
            }
            // The expected result is 10000
            System.out.println(size);
        }
    }
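For comparison, here is a minimal sketch of the same counter with the increment guarded by a lock. The class name ThreadSafeDemo, the LOCK field and the run() helper are illustrative names that do not appear in the original example.

```java
import java.util.ArrayList;
import java.util.List;

class ThreadSafeDemo {

    static int size;
    // Hypothetical dedicated lock object guarding every access to size
    static final Object LOCK = new Object();

    // Starts 10 threads that each increment size 1000 times, then returns the final value
    static int run() throws InterruptedException {
        synchronized (LOCK) {
            size = 0;
        }
        List<Thread> threads = new ArrayList<>(10);
        for (int i = 0; i < 10; i++) {
            Thread thread = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    // Only one thread at a time can perform the read-modify-write
                    synchronized (LOCK) {
                        size++;
                    }
                }
            });
            thread.start();
            threads.add(thread);
        }
        for (Thread thread : threads) {
            thread.join();
        }
        synchronized (LOCK) {
            return size;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // prints 10000
    }
}
```

Because every increment happens while holding the same lock, no update can be lost and the result is always 10000.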

Usage:

synchronized can be used in three ways: on a static method, on a non-static (instance) method, and on a code block.

For a static method, the lock object is the Class object of the current class. For a non-static method, the lock object is the object the method is called on, that is, this. For a synchronized code block, the lock object is whatever object you specify.
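The three forms can be sketched as follows. The class SyncForms and its method names are made up for illustration. Thread.holdsLock is used only to confirm which object each form locks.

```java
class SyncForms {

    // Hypothetical private lock used only by the synchronized block
    private final Object lock = new Object();

    // Static method: the lock object is SyncForms.class
    static synchronized boolean staticHoldsClassLock() {
        return Thread.holdsLock(SyncForms.class);
    }

    // Instance method: the lock object is this
    synchronized boolean instanceHoldsThis() {
        return Thread.holdsLock(this);
    }

    // Code block: the lock object is the one named in the parentheses
    boolean blockHoldsOnlyLock() {
        synchronized (lock) {
            return Thread.holdsLock(lock) && !Thread.holdsLock(this);
        }
    }

    public static void main(String[] args) {
        SyncForms forms = new SyncForms();
        System.out.println(staticHoldsClassLock());     // prints true
        System.out.println(forms.instanceHoldsThis());  // prints true
        System.out.println(forms.blockHoldsOnlyLock()); // prints true
    }
}
```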

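When using the synchronized keyword on static methods or instance methods, pay special attention to the fact that all such methods share a single lock object (the Class object or this). They block one another even when they touch unrelated data, which can cause serious performance problems.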

An example of a performance bottleneck:

While test2 is executing, test1 cannot execute: test2 holds the lock on the user object, so test1 has to wait until that lock is released.

    public class Test {

        public static void main(String[] args) throws InterruptedException {
            User user = new User();
            new Thread(
                    () -> {
                        for (; ; ) {
                            user.test1();
                        }
                    })
                .start();
            new Thread(
                    () -> {
                        try {
                            user.test2();
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                    })
                .start();
        }
    }

    class User {

        public synchronized void test1() {
            System.out.println("start");
        }

        public synchronized void test2() throws InterruptedException {
            Thread.sleep(5000);
        }
    }
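One possible way to relieve this kind of bottleneck is to give independent operations their own lock objects. This is only safe when the methods do not share state. The sketch below is an assumption about how that might look, not part of the original example: the names FineGrainedUser, fastLock and slowLock are hypothetical, and a CountDownLatch stands in for the five-second sleep so that the demo finishes quickly.

```java
import java.util.concurrent.CountDownLatch;

class FineGrainedUser {

    // Hypothetical separate locks, so the two methods no longer contend
    private final Object fastLock = new Object();
    private final Object slowLock = new Object();
    private int calls;

    int fast() {
        synchronized (fastLock) {
            return ++calls;
        }
    }

    // Holds slowLock until release is counted down (stands in for the long sleep)
    void slow(CountDownLatch entered, CountDownLatch release) throws InterruptedException {
        synchronized (slowLock) {
            entered.countDown();
            release.await();
        }
    }
}

class FineGrainedDemo {

    static int callFastWhileSlowRuns() throws InterruptedException {
        FineGrainedUser user = new FineGrainedUser();
        CountDownLatch entered = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread slowThread = new Thread(() -> {
            try {
                user.slow(entered, release);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        slowThread.start();
        entered.await();          // slow() now holds slowLock
        int result = user.fast(); // does not block: fast() uses a different lock
        release.countDown();      // let slow() finish
        slowThread.join();
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(callFastWhileSlowRuns()); // prints 1
    }
}
```

If both methods were declared synchronized on the same object, the call to fast() would block until slow() returned, which in this demo would never happen.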

Lock reentry:

The same lock, whether it is synchronized or ReentrantLock, can be acquired multiple times by the thread that already holds it. That is, once a thread holds the lock, it can still enter any other code guarded by that same lock.

Why is it designed this way? In everyday code, methods constantly call other methods. If a thread that acquired the lock in one method had to wait for that lock to be released before entering the next method, it would be waiting for a lock that it holds itself, which is a deadlock. This is why synchronized and ReentrantLock are both "reentrant locks".

    public class ReentrantDemo {

        final Object lock = new Object();

        private void method1() {
            synchronized (lock) {
                // do something
                method2();
            }
        }

        private void method2() {
            // If the lock were not reentrant, it could never be acquired here, and a deadlock would occur.
            synchronized (lock) {
                // do something
            }
        }
    }
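The same behaviour can be observed with ReentrantLock, whose getHoldCount() method reports how many times the current thread holds the lock. The following is a small sketch. The class HoldCountDemo and its method names are illustrative only.

```java
import java.util.concurrent.locks.ReentrantLock;

class HoldCountDemo {

    private final ReentrantLock lock = new ReentrantLock();

    // Acquires the lock, then calls a method that acquires it again
    int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    private int inner() {
        lock.lock(); // re-entered by the thread that already owns the lock
        try {
            return lock.getHoldCount();
        } finally {
            lock.unlock();
        }
    }

    int holdCountAfter() {
        return lock.getHoldCount();
    }

    public static void main(String[] args) {
        HoldCountDemo demo = new HoldCountDemo();
        System.out.println(demo.outer());          // prints 2
        System.out.println(demo.holdCountAfter()); // prints 0
    }
}
```

Each lock() must be paired with an unlock(). The lock is only released for other threads once the hold count drops back to 0.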

Synchronized Optimization:

Lock escalation (irreversible):

Before JDK 1.6, synchronized performed worse than Lock implementations such as ReentrantLock, because a synchronized lock was always a heavyweight lock. It relied on an operating-system mutex, so blocking and waking threads required interaction with the OS, which is not very efficient. Since JDK 1.6, a lock starts out as a biased lock and is upgraded to a lightweight lock and then to a heavyweight lock as contention for it grows. A biased lock suits the case where there is basically no contention. A lightweight lock suits the case where there is very little contention and the lock is held only briefly, so a waiting thread does not need to give up the CPU. A heavyweight lock handles the case where contention is fierce. (Biased locking was later deprecated and disabled by default in JDK 15.)

Before JDK 1.6, synchronized was slower than ReentrantLock. With the lock escalation optimization introduced in JDK 1.6, its performance is comparable to that of ReentrantLock, but ReentrantLock offers more features.

The current lock level is recorded in the object header (the mark word).
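As one illustration of the extra features ReentrantLock offers, tryLock with a timeout lets a thread give up instead of blocking indefinitely, which synchronized cannot do. This is a minimal sketch. TryLockDemo and its run() helper are hypothetical names, and the latches only make the ordering deterministic.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {

    // Returns {result of tryLock while another thread holds the lock, result after release}
    static boolean[] run() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        CountDownLatch held = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread holder = new Thread(() -> {
            lock.lock();
            try {
                held.countDown();
                release.await(); // keep the lock until the main thread says so
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                lock.unlock();
            }
        });
        holder.start();
        held.await();

        // Give up after 50 ms instead of blocking indefinitely
        boolean whileHeld = lock.tryLock(50, TimeUnit.MILLISECONDS);
        if (whileHeld) {
            lock.unlock();
        }

        release.countDown();
        holder.join();

        boolean afterRelease = lock.tryLock();
        if (afterRelease) {
            lock.unlock();
        }
        return new boolean[] {whileHeld, afterRelease};
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] result = run();
        System.out.println(result[0]); // prints false
        System.out.println(result[1]); // prints true
    }
}
```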

Lock coarsening:

Acquiring and releasing a lock has a cost. If the code performs a series of consecutive lock and unlock operations on the same lock object, that overhead is unnecessary. In this case the JVM may merge the adjacent synchronized regions into a single larger one to avoid the repeated locking and unlocking.

    // Before optimization
    class User {

        private final Object lock = new Object();

        public void test() {
            synchronized (lock) {
                System.out.println("print 1");
            }
            synchronized (lock) {
                System.out.println("print 2");
            }
            synchronized (lock) {
                System.out.println("print 3");
            }
        }
    }

    // After optimization
    class User {

        private final Object lock = new Object();

        public void test() {
            synchronized (lock) {
                System.out.println("print 1");
                System.out.println("print 2");
                System.out.println("print 3");
            }
        }
    }

Lock elimination:

During JIT (just-in-time) compilation, in which the Java virtual machine compiles frequently executed code to native code at run time, the compiler examines the surrounding code and uses escape analysis to find locks that can never be contended, because the locked object is not visible to any other thread, and removes them. Eliminating these unnecessary locks saves the time that would otherwise be spent acquiring and releasing them.

    class User {

        public void test() {
            final Object lock = new Object();
            // This lock is meaningless and will be eliminated
            synchronized (lock) {
                System.out.println("print 1");
                System.out.println("print 2");
                System.out.println("print 3");
            }
        }
    }
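A case that comes up more often in practice is a local StringBuffer: its append method is synchronized, but a buffer that never leaves the method cannot be seen by another thread. The sketch below shows such a candidate. The class and method names are illustrative, and whether the locks are actually removed depends on the JVM and its settings.

```java
class LockEliminationCandidate {

    // StringBuffer.append is synchronized, but buffer never escapes this method,
    // so no other thread can ever contend for its lock
    static String join(String a, String b, String c) {
        StringBuffer buffer = new StringBuffer();
        buffer.append(a);
        buffer.append(b);
        buffer.append(c);
        return buffer.toString();
    }

    public static void main(String[] args) {
        System.out.println(join("print 1", ", print 2", ", print 3"));
        // prints print 1, print 2, print 3
    }
}
```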

Visibility:

synchronized also guarantees visibility between threads. Conceptually, when a thread acquires the lock it reloads the shared variables from main memory into its own working memory, and when it releases the lock it writes its changes back to main memory. While one thread holds the lock, other threads that want the same lock are blocked, so when they acquire it they see the latest values of the shared variables.
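A small sketch of this guarantee: a stop flag that is deliberately not volatile, but is read and written only inside synchronized methods. The class VisibilityDemo and its method names are hypothetical.

```java
class VisibilityDemo {

    private boolean stopped; // deliberately not volatile

    // Writer and reader synchronize on the same object (this)
    synchronized void stop() {
        stopped = true;
    }

    synchronized boolean isStopped() {
        return stopped;
    }

    // Returns true if the worker observed the flag and terminated
    static boolean workerStops() throws InterruptedException {
        VisibilityDemo flag = new VisibilityDemo();
        Thread worker = new Thread(() -> {
            while (!flag.isStopped()) {
                Thread.yield();
            }
        });
        worker.setDaemon(true);
        worker.start();
        flag.stop();       // releasing the lock publishes the write
        worker.join(5000); // wait up to 5 seconds for the worker to finish
        return !worker.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(workerStops()); // prints true
    }
}
```

If the flag were read without synchronization (and without volatile), the worker would not be guaranteed to ever see the update.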