Objectives:

The goal is to implement the matrix decomposition used in collaborative filtering with TensorFlow2. Most of the implementations found online are copied from the same template and are based on TensorFlow1, so I wrote my own version in TensorFlow2.

- I wonder why TensorFlow2 does not ship a ready-made implementation. In Spark, you can simply call spark.mllib.recommendation.ALS().

Content:

Collaborative filtering is a common algorithm in recommendation systems. Its central idea is "birds of a feather flock together": either recommend to you the items, movies, or songs that people with the same taste like, or recommend items similar to the ones you already like.

Overall process:

1. Collect users' ratings and purchase records for items

2. Construct the collaborative (user-item) matrix M

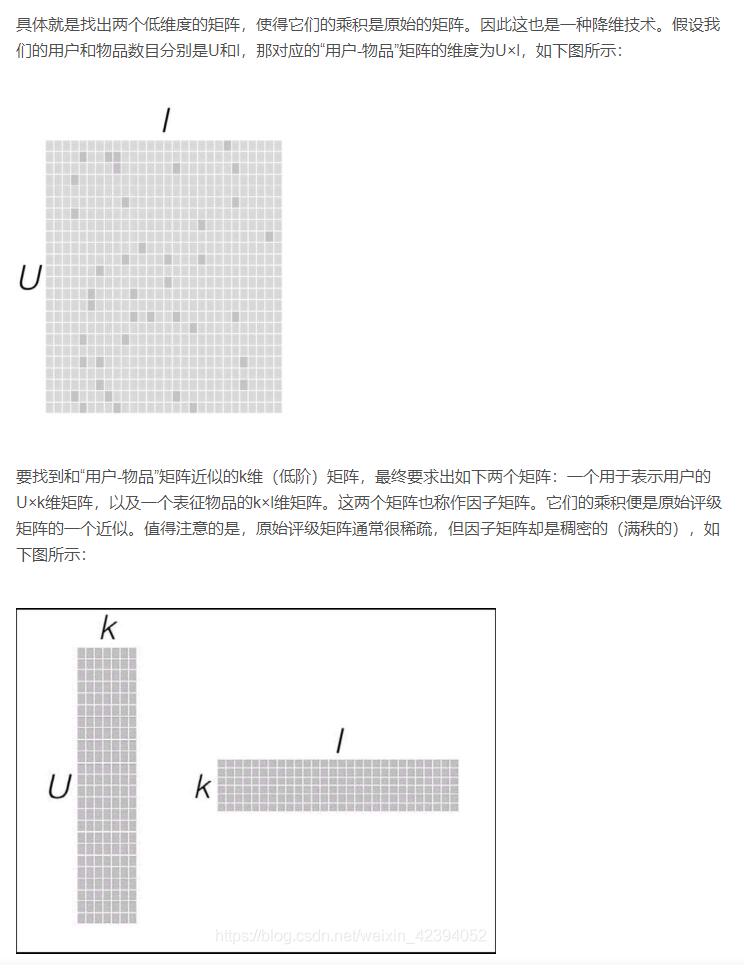

3. Decompose the matrix as M = U*V

4. For the item or user you want recommendations for, compute its similarity against U or V
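Steps 1 and 2 above can be sketched as follows. This is only an illustration: the `records` list, the user and item names, and the `build_rating_matrix` helper are made up for this example, and filling missing ratings with 0 is an assumption rather than something the process prescribes.

```python
import numpy as np

# Hypothetical (user, item, score) records; names and values are made up.
records = [
    ("alice", "book", 5.0),
    ("alice", "pen", 3.0),
    ("bob", "book", 4.0),
    ("carol", "lamp", 2.0),
]


def build_rating_matrix(records):
    """Return (M, users, items) where M[i, j] is the score user i gave item j."""
    users = sorted({user for user, _, _ in records})
    items = sorted({item for _, item, _ in records})
    user_index = {user: k for k, user in enumerate(users)}
    item_index = {item: k for k, item in enumerate(items)}
    # Assumption: 0 stands for "no record" for this user-item pair.
    M = np.zeros((len(users), len(items)), dtype=np.float32)
    for user, item, score in records:
        M[user_index[user], item_index[item]] = score
    return M, users, items


M, users, items = build_rating_matrix(records)
print(users)  # row labels
print(items)  # column labels
print(M)
```

Each row of M is one user and each column is one item, which is the shape the decomposition in step 3 expects.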

Code:

TensorFlow2 computes the gradients and updates the parameters for you automatically, which is very convenient. All you have to do is construct the loss function.
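To see what "automatic" means here, the following minimal sketch minimizes a toy loss, (w - 3)^2, with `tf.GradientTape`. The variable `w`, the toy loss, and the choice of plain SGD are assumptions made for illustration; they are not part of the decomposition itself.

```python
import tensorflow as tf

# Toy problem: find w that minimizes (w - 3)^2. The minimum is at w = 3.
w = tf.Variable(0.0)
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

for step in range(100):
    with tf.GradientTape() as tape:
        loss = (w - 3.0) ** 2  # only the loss is written by hand
    grads = tape.gradient(loss, [w])  # gradient is derived automatically
    optimizer.apply_gradients(zip(grads, [w]))  # parameter update

print(w.numpy())  # close to 3.0
```

The matrix decomposition follows the same pattern, with U and V in place of `w`.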

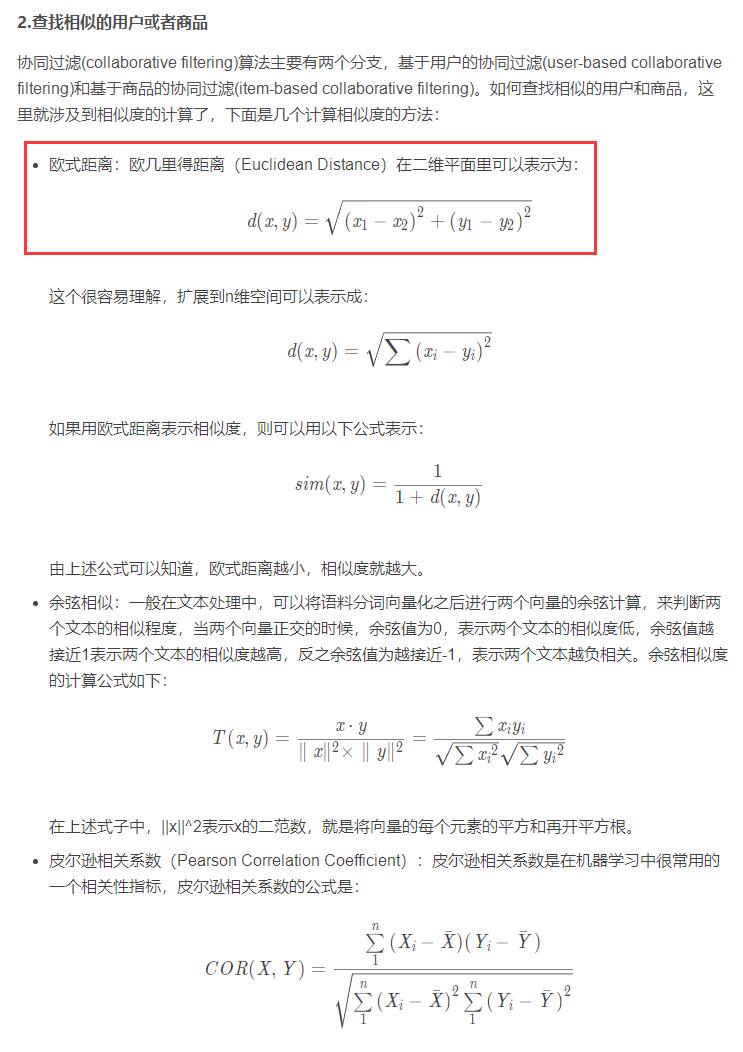

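The loss function can be understood as follows: multiplying the two factors gives the predicted matrix M_pre = U*V, and the loss is the Euclidean distance between M and M_pre, plus a regularization term on U and V.

The formula, as implemented in the code below, is:

$$\text{loss} = \lVert M - UV \rVert_F + \text{reg}\,\bigl(\lVert U \rVert_F + \lVert V \rVert_F\bigr)$$

In short, the idea is to decompose one large matrix into two small matrices.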
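The loss described above can be checked by hand on a tiny example. This NumPy sketch assumes the same form the TensorFlow code uses (unsquared Frobenius norms, with `reg` weighting the penalty); the function name `factorization_loss` and the 2x2 matrix are made up for illustration.

```python
import numpy as np


def factorization_loss(M, U, V, reg=0.5):
    """Distance between M and U @ V, plus a norm penalty on U and V."""
    M_pre = U @ V
    fit = np.linalg.norm(M - M_pre)  # Frobenius norm for 2-D input
    penalty = reg * (np.linalg.norm(U) + np.linalg.norm(V))
    return fit + penalty


# A rank-1 matrix that U @ V reproduces exactly.
M = np.array([[1.0, 2.0], [2.0, 4.0]])
U = np.array([[1.0], [2.0]])
V = np.array([[1.0, 2.0]])

loss_exact = factorization_loss(M, U, V, reg=0.0)  # no penalty, perfect fit
loss_reg = factorization_loss(M, U, V, reg=0.5)    # penalty only
print(loss_exact, loss_reg)
```

With `reg=0.0` the loss is zero because the factorization is exact; with `reg=0.5` the whole loss comes from the penalty, which is why a large `reg` pulls the factors away from a perfect reconstruction.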

The specific code is:

'''=================================================
@Function -> Matrix decomposition for collaborative filtering with TensorFlow2
@Author : luoji
@Date : 2021-10-19
=================================================='''
import numpy as np
import tensorflow as tf


def matrixDecomposition(alike_matrix, rank=10, num_epoch=5000, learning_rate=0.001, reg=0.5):
    row, column = len(alike_matrix), len(alike_matrix[0])
    y_true = tf.constant(alike_matrix, dtype=tf.float32)  # Target matrix M
    # U: user weight matrix (row x rank), randomly initialized in [0, 1)
    U = tf.Variable(np.random.random(size=(row, rank)), dtype=tf.float32)
    # V: item weight matrix (rank x column), randomly initialized in [0, 1)
    V = tf.Variable(np.random.random(size=(rank, column)), dtype=tf.float32)
    variables = [U, V]
    optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)
    for epoch in range(num_epoch):
        with tf.GradientTape() as tape:
            y_pre = tf.matmul(U, V)
            # Reconstruction error plus the regularization term
            loss = (tf.norm(y_true - y_pre, ord='euclidean')
                    + reg * (tf.norm(U, ord='euclidean') + tf.norm(V, ord='euclidean')))
        print("epoch %d : loss %f" % (epoch, loss.numpy()))
        grads = tape.gradient(loss, variables)
        optimizer.apply_gradients(grads_and_vars=zip(grads, variables))
    return U, V, tf.matmul(U, V)


if __name__ == "__main__":
    # The matrix is decomposed into M = U*V; the rank of U and V is specified by the user
    alike_matrix = [[1.0, 2.0, 3.0],
                    [4.5, 5.0, 3.1],
                    [1.0, 2.0, 3.0],
                    [4.5, 5.0, 5.1],
                    [1.0, 2.0, 3.0]]
    # If reg is decreased, num_epoch needs to be increased
    U, V, preMatrix = matrixDecomposition(alike_matrix, rank=2, reg=0.5, num_epoch=2000)
    print(U)
    print(V)
    print(alike_matrix)
    print(preMatrix)
    print("the difference between alike_matrix and preMatrix is:")
    diff = tf.constant(alike_matrix, dtype=tf.float32) - preMatrix
    print(diff)
    print('loss is :', tf.reduce_sum(tf.abs(diff)).numpy())

Matrix to be decomposed:

[[1.0, 2.0, 3.0],
 [4.5, 5.0, 3.1],
 [1.0, 2.0, 3.0],
 [4.5, 5.0, 5.1],
 [1.0, 2.0, 3.0]]

Product of the two matrices after decomposition:

[[1.0647349 1.929376  2.9957888]
 [4.6015587 4.7999315 3.1697667]
 [1.0643657 1.9290545 2.9957101]
 [4.287443  5.211667  4.996485 ]
 [1.0647217 1.9293401 2.9957187]]

The two matrices are very close, which shows that the TensorFlow2 matrix decomposition works as intended.

Note that the regularization coefficient reg and num_epoch need to be tuned together. The larger the reg, the faster the convergence, but the result is not necessarily the best.
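Once U has been learned, step 4 of the overall process (computing similarity) could look like the sketch below. Cosine similarity is one common choice, not something the code above prescribes, and the small `U` here is a made-up stand-in for the learned user matrix, with rows 0 and 2 identical to mimic the repeated rows of the example matrix.

```python
import numpy as np


def cosine_similarity_matrix(X):
    """Pairwise cosine similarity between the rows of X."""
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    X_unit = X / np.clip(norms, 1e-12, None)  # guard against zero rows
    return X_unit @ X_unit.T


# Hypothetical learned user factors (5 users would work the same way).
U = np.array([[0.2, 1.5],
              [1.8, 0.9],
              [0.2, 1.5]])

sim = cosine_similarity_matrix(U)
np.fill_diagonal(sim, -np.inf)  # ignore each user's similarity to itself
most_similar = sim.argmax(axis=1)
print(most_similar)  # most similar other user, per row
```

Users 0 and 2 pick each other, since their factor rows are the same. The same function applied to the transpose of V would give item-to-item similarities.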

Outcomes:

A TensorFlow2 implementation of the matrix decomposition used in collaborative filtering. The module can be reused directly.

1. It deepened my understanding of TensorFlow2. As long as the loss function is defined, the model can be trained. Amazing!

2. One CSDN technical blog post. I could not find another TensorFlow2-based implementation anywhere online.