Contents

Introduction to Python concurrent programming

1. Why introduce concurrent programming?

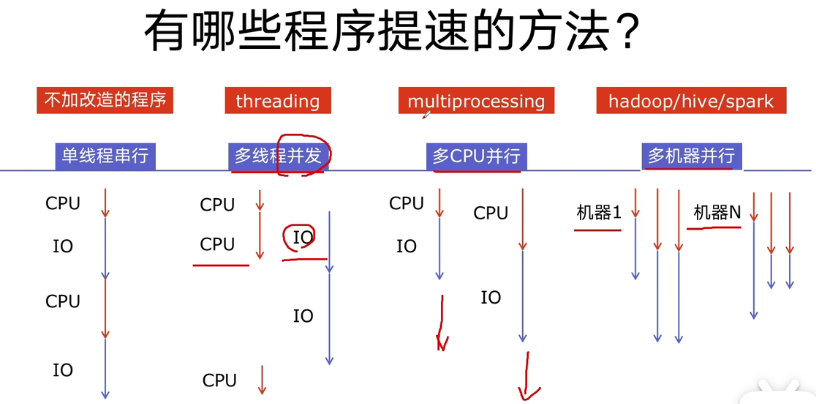

2. What are the methods to speed up the program?

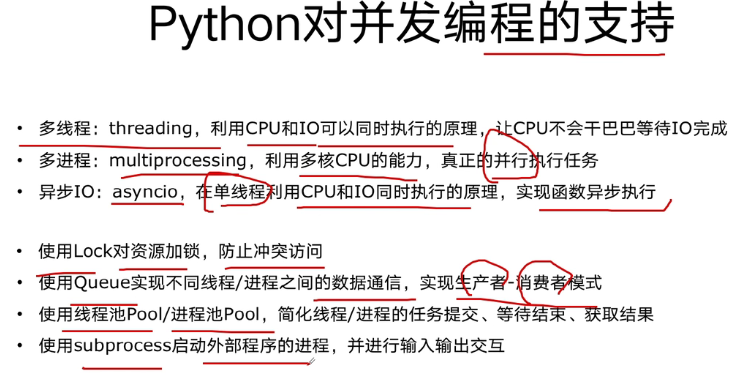

3. Python's support for concurrent programming

How to choose between multithreading (Thread), multiprocessing (Process) and coroutines (Coroutine)

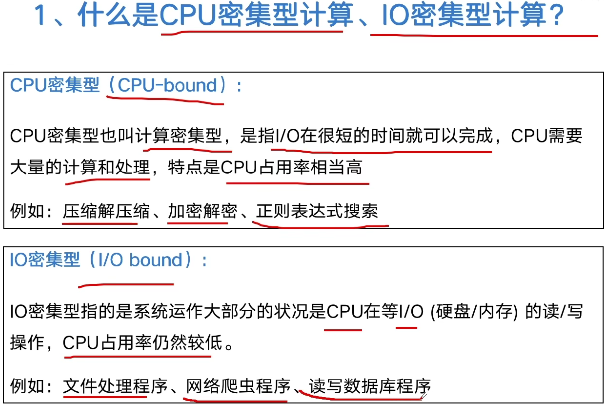

1. What are CPU intensive computing and IO intensive computing?

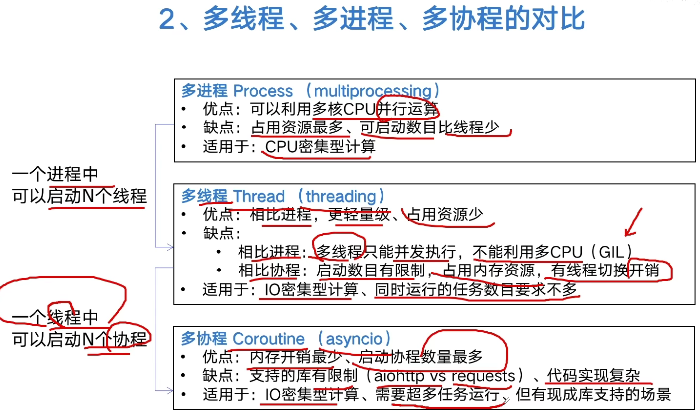

2. Comparison of multithreading, multiprocessing and coroutines

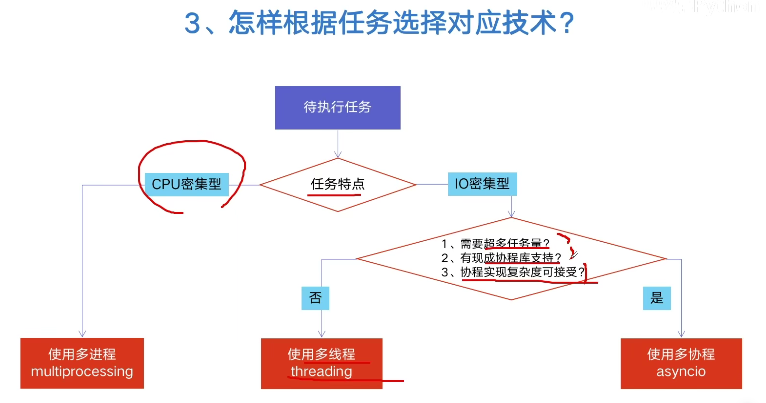

3. How to select the corresponding technology according to the task?

The culprit of Python's slow speed is the global interpreter lock GIL

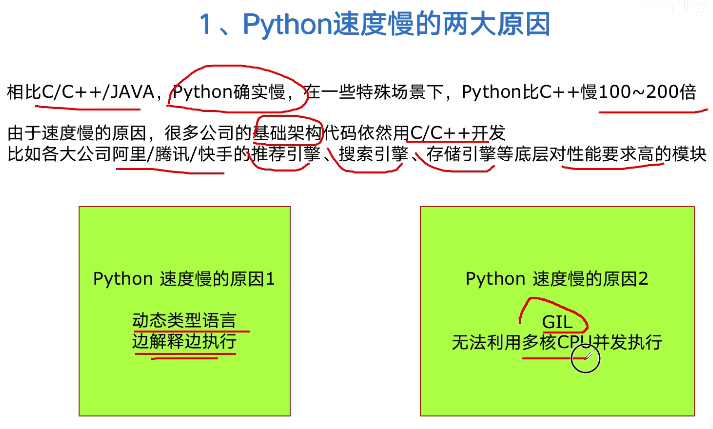

1. Two reasons why Python is slow

4. How to avoid the restrictions brought by GIL?

With multithreading, the Python crawler is accelerated 10 times

1. How to create threads in Python

2. Rewrite the crawler program as a multithreaded crawler

3. Speed comparison: single thread crawler VS multi thread crawler

Python implements producer consumer mode multithreaded crawler!

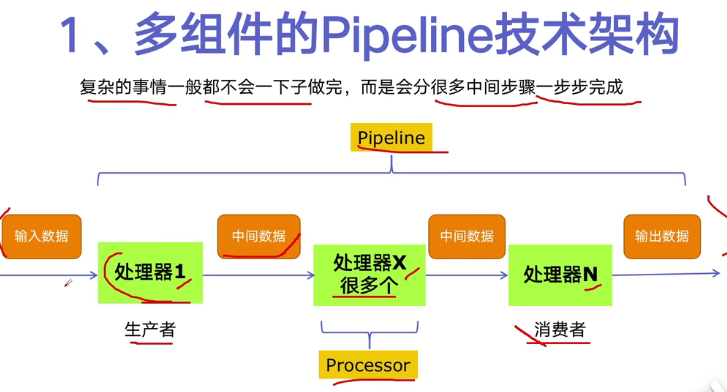

1. Multi component Pipeline technology architecture

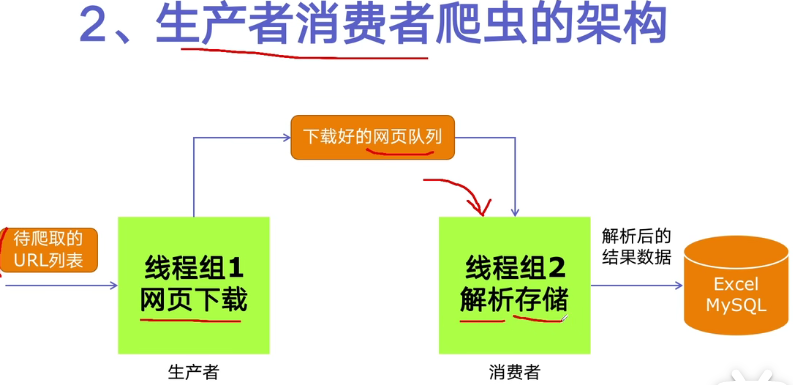

2. Framework of producer consumer crawler

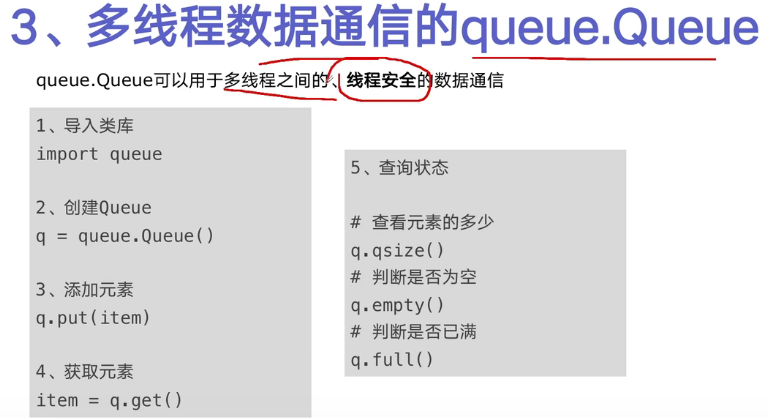

3. queue.Queue for data communication between threads

4. Writing the code for the producer-consumer crawler

Python thread safety problems and Solutions

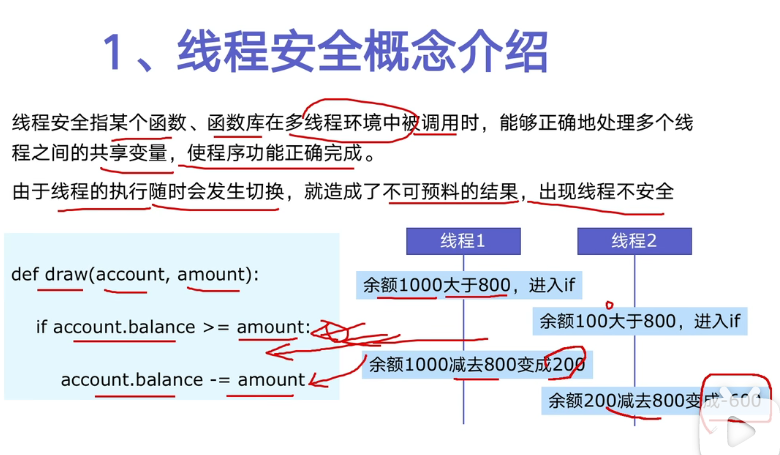

1. Introduction to thread safety concept

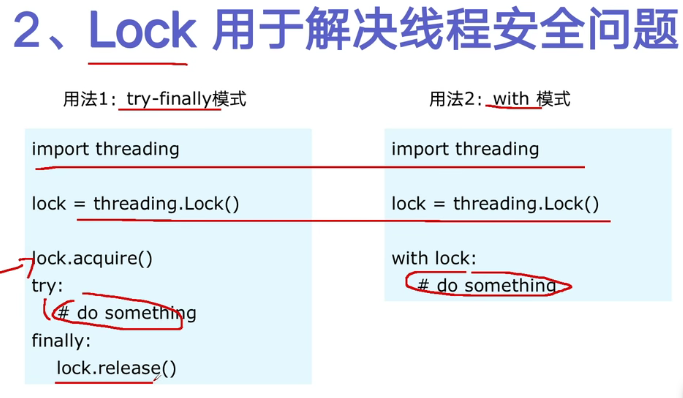

2. Using Lock to solve thread safety problems

3. Example code to demonstrate problems and Solutions

Easy to use thread pool ThreadPoolExecutor

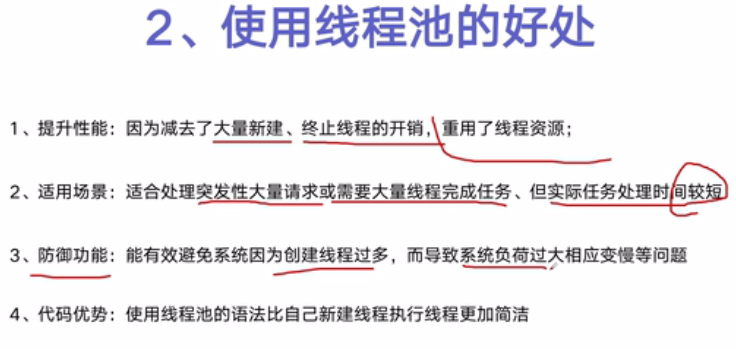

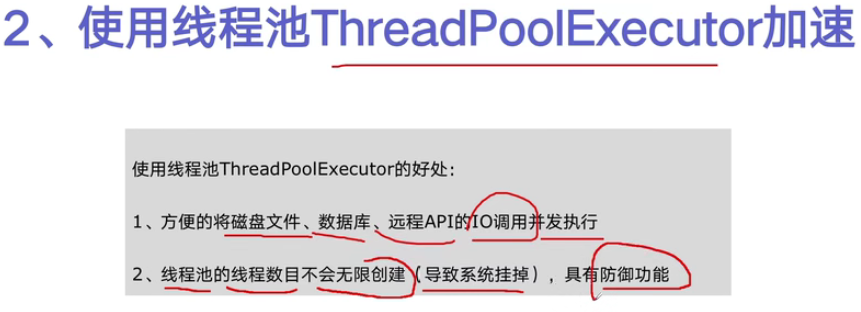

2. Benefits of using thread pool

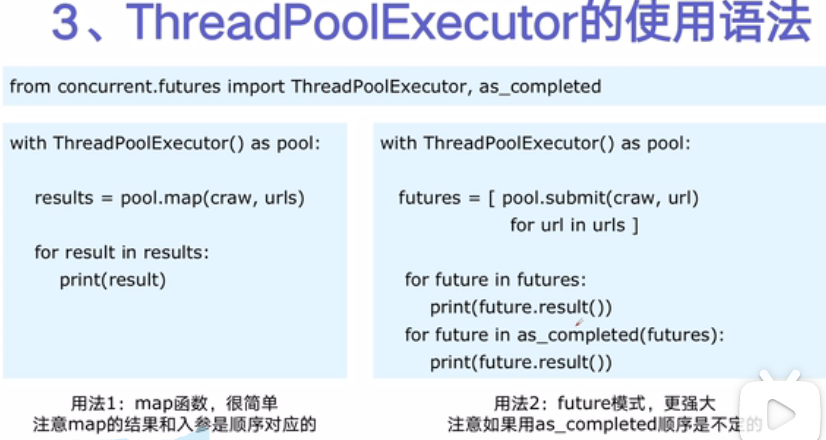

3. Usage syntax of ThreadPoolExecutor

4. Use thread pool to transform crawler program

Using thread pool acceleration in web server

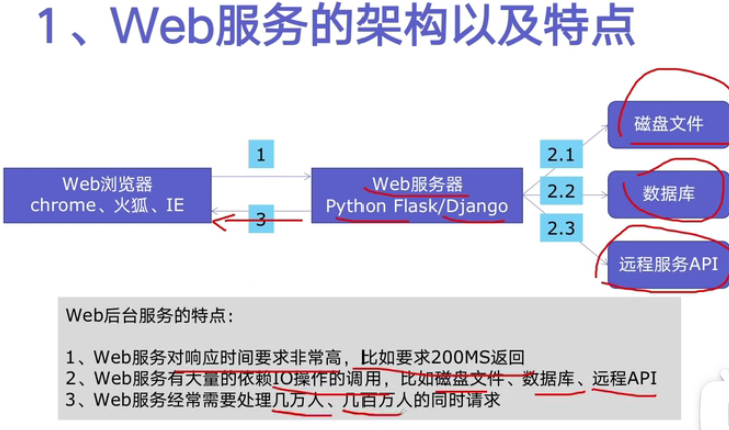

1. Architecture and characteristics of Web Services

2. Use thread pool ThreadPoolExecutor to accelerate

3. Flask code that implements the web service and accelerates it

Use multiprocessing to speed up the running of programs

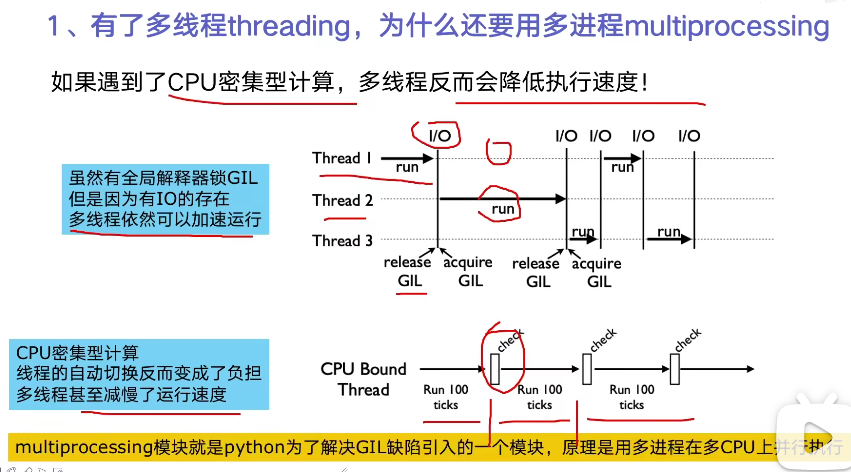

1. With multithreading available, why use multiprocessing?

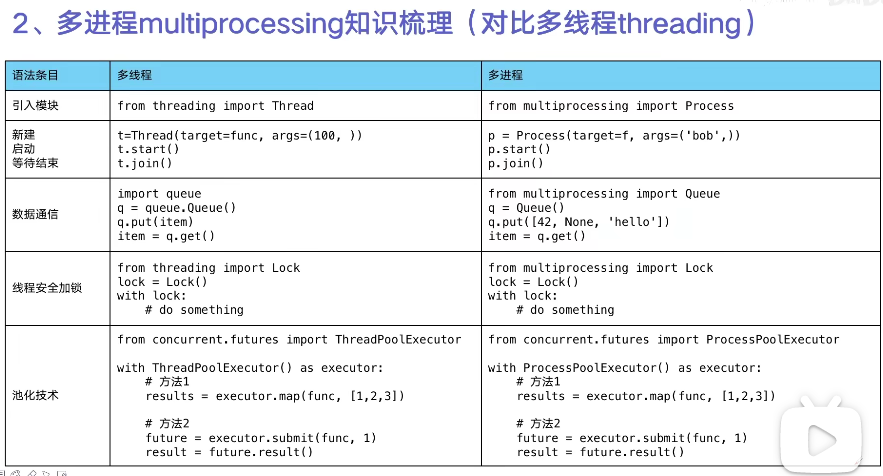

2. Multi process knowledge sorting

Using process pool acceleration in Flask service

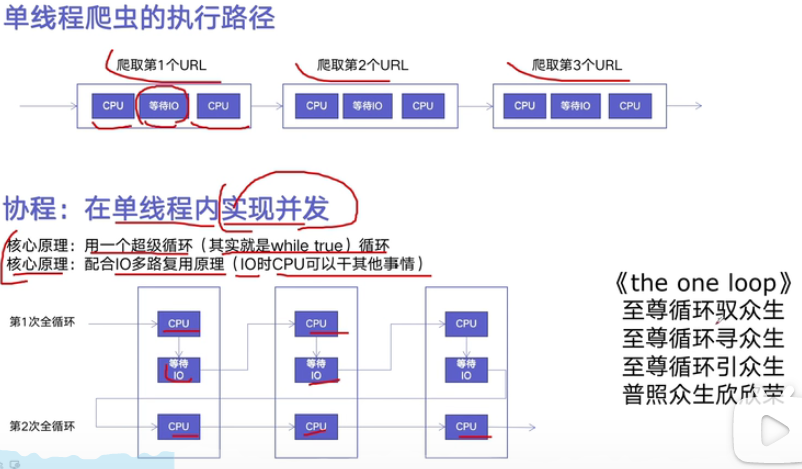

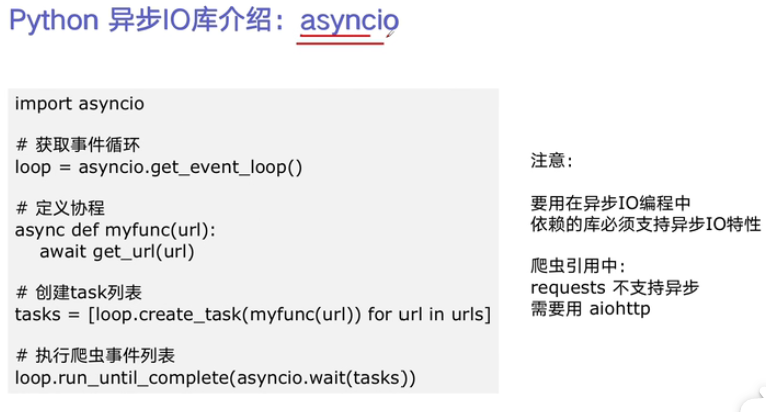

Implementing a concurrent crawler with Python asynchronous IO

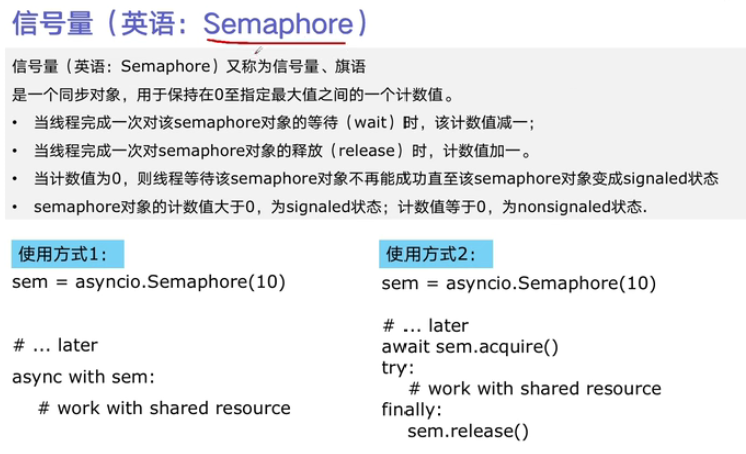

Using semaphores to control crawler concurrency in asynchronous IO

Introduction to Python concurrent programming

1. Why introduce concurrent programming?

Scenario 1: a web crawler that needs 1 hour to crawl its pages sequentially can be cut to 20 minutes with concurrent downloads.

Scenario 2: an app takes 3 seconds to open each page before optimization; with asynchronous concurrency this drops to 200 milliseconds per page.

2. What are the methods to speed up the program?

3. Python's support for concurrent programming

How to choose between multithreading (Thread), multiprocessing (Process) and coroutines (Coroutine)

1. What are CPU intensive computing and IO intensive computing?

2. Comparison of multithreading, multiprocessing and coroutines

3. How to select the corresponding technology according to the task?

The culprit of Python's slow speed is the global interpreter lock GIL

1. Two reasons why Python is slow

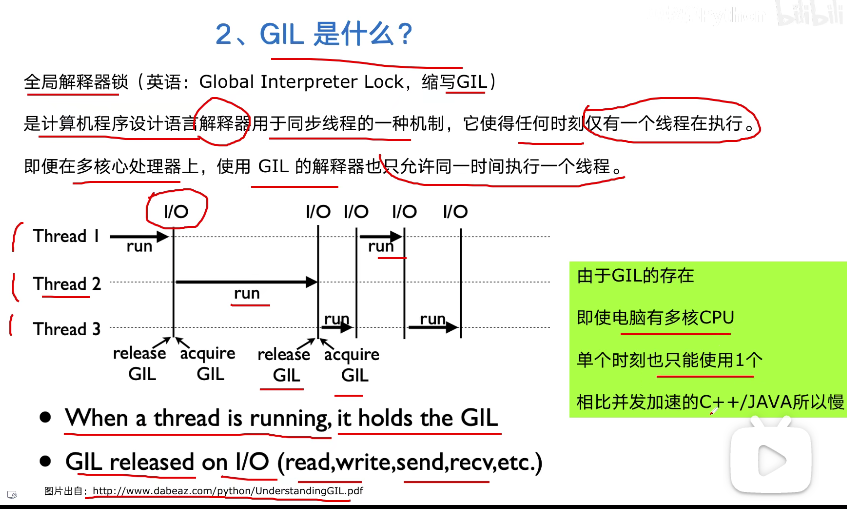

2. What is the GIL?

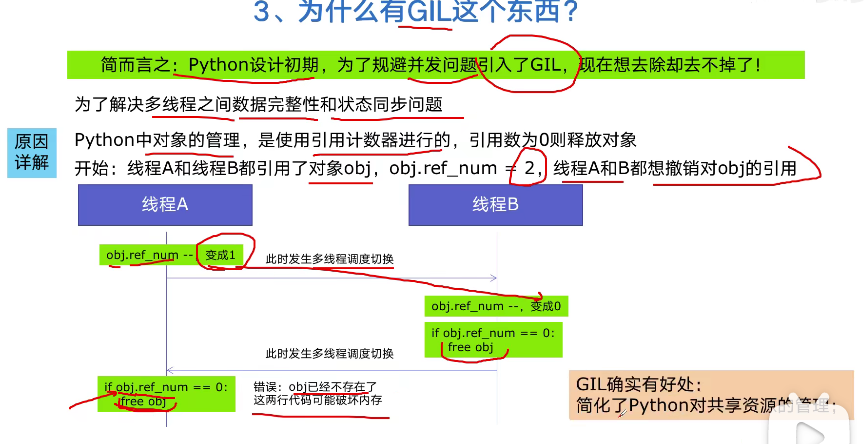

3. Why GIL?

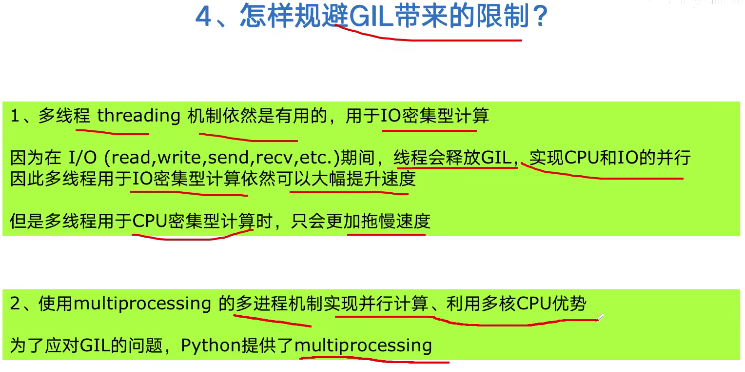

4. How to avoid the restrictions brought by GIL?

With multithreading, the Python crawler is accelerated 10 times

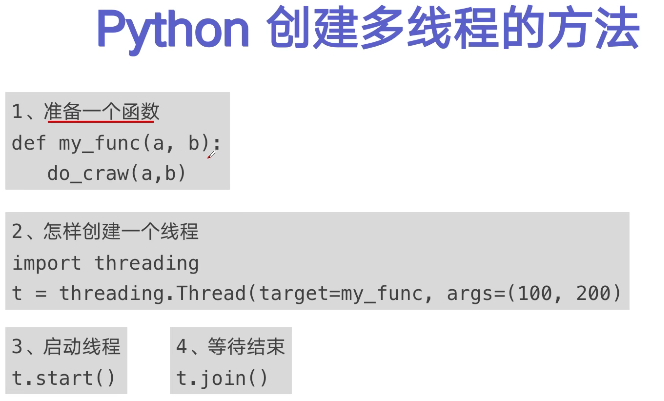

1. How to create threads in Python
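The usual steps can be sketched as follows: define the function to run, create `threading.Thread` objects, start them, and wait for them with `join`. The `work` function and its arguments are hypothetical placeholders for illustration, not part of the crawler in this article.

```python
import threading

results = []


def work(name, count):
    # Hypothetical task: record which thread handled which input
    results.append((name, count * 2))


# 1. Create the thread objects; args must be a tuple
threads = [
    threading.Thread(target=work, args=(f"t{i}", i))
    for i in range(3)
]

# 2. Start all threads
for t in threads:
    t.start()

# 3. Wait until every thread has finished
for t in threads:
    t.join()

print(sorted(results))
```

Starting all threads before joining any of them matters: calling `join` right after each `start` would run the tasks one at a time.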

2. Rewrite the crawler program as a multithreaded crawler

blog_spider.py

    import requests

    # URLs of the 50 list pages to crawl
    urls = [
        f"https://www.cnblogs.com/#p{page}"
        for page in range(1, 50 + 1)
    ]


    def craw(url):
        # Download one page and print its URL and length
        r = requests.get(url)
        print(url, len(r.text))


    if __name__ == "__main__":
        # Quick manual test; guarded so that importing the module does not run it
        craw(urls[0])

multi_thread_craw.py

    import threading
    import time

    import blog_spider


    def single_thread():
        # Crawl the pages one after another
        print("single_thread begin")
        for url in blog_spider.urls:
            blog_spider.craw(url)
        print("single_thread end")


    def multi_thread():
        # Crawl every page in its own thread
        print("multi_thread begin")
        threads = []
        for url in blog_spider.urls:
            threads.append(
                threading.Thread(target=blog_spider.craw, args=(url,))
            )

        for thread in threads:
            thread.start()

        for thread in threads:
            thread.join()

        print("multi_thread end")


    if __name__ == "__main__":
        start = time.time()
        single_thread()
        end = time.time()
        print("single thread cost:", end - start)

        start = time.time()
        multi_thread()
        end = time.time()
        print("multi thread cost:", end - start)

3. Speed comparison: single thread crawler VS multi thread crawler

Python implements producer consumer mode multithreaded crawler!

1. Multi component Pipeline technology architecture

2. Framework of producer consumer crawler

3. queue.Queue for data communication between threads
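As a minimal sketch of the `queue.Queue` calls that threads typically use to pass data to each other (the values `"a"` and `"b"` are placeholders):

```python
import queue

# Bounded FIFO queue; maxsize=0 (the default) would mean unbounded
q = queue.Queue(maxsize=2)

q.put("a")   # put blocks while the queue is full
q.put("b")

print(q.qsize(), q.full(), q.empty())

first = q.get()    # get blocks while the queue is empty
second = q.get()
print(first, second)
```

Because `put` and `get` block and are thread-safe, a producer thread and a consumer thread can share one queue without any extra locking.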

4. Writing the code for the producer-consumer crawler
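The following is only a sketch of how such a crawler might be wired together, not the article's original code. To keep it runnable offline, `fake_download` stands in for a real `requests.get` call and "parsing" is reduced to measuring the HTML length; the function names, the `None` sentinel, and the thread counts are all assumptions made for illustration.

```python
import queue
import threading


def fake_download(url):
    # Stand-in for requests.get(url).text so the sketch runs offline
    return f"<html>{url}</html>"


def producer(url_queue, html_queue):
    # Producer: take a URL, "download" it, pass the HTML on
    while True:
        url = url_queue.get()
        if url is None:          # sentinel: no more work
            break
        html_queue.put((url, fake_download(url)))


def consumer(html_queue, parsed):
    # Consumer: take HTML, "parse" it, store the result
    while True:
        item = html_queue.get()
        if item is None:         # sentinel: no more work
            break
        url, html = item
        parsed.append((url, len(html)))


def run_pipeline(urls, n_producers=3, n_consumers=2):
    url_queue, html_queue, parsed = queue.Queue(), queue.Queue(), []
    for url in urls:
        url_queue.put(url)
    for _ in range(n_producers):
        url_queue.put(None)      # one sentinel per producer

    producers = [
        threading.Thread(target=producer, args=(url_queue, html_queue))
        for _ in range(n_producers)
    ]
    consumers = [
        threading.Thread(target=consumer, args=(html_queue, parsed))
        for _ in range(n_consumers)
    ]
    for t in producers + consumers:
        t.start()
    for t in producers:
        t.join()
    # All producers are done, so the consumers can now be told to stop
    for _ in range(n_consumers):
        html_queue.put(None)
    for t in consumers:
        t.join()
    return parsed
```

Calling `run_pipeline([...])` with a list of URLs returns one `(url, length)` pair per URL, in whatever order the consumers finished.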

Python thread safety problems and Solutions

1. Introduction to thread safety concept

2. Using Lock to solve thread safety problems

3. Example code to demonstrate problems and Solutions
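One common way to illustrate the problem is a bank account from which two threads try to withdraw at the same time. The sketch below is an assumed example, not the article's original code: the `Account` class, `draw`, and the amounts are hypothetical. Without the lock, both threads could pass the balance check before either one subtracts; with the lock, the check and the update form a single critical section.

```python
import threading


class Account:
    def __init__(self, balance):
        self.balance = balance
        self.lock = threading.Lock()


def draw(account, amount):
    # The check and the update must not be interleaved with another thread
    with account.lock:
        if account.balance >= amount:
            account.balance -= amount
            return True
        return False


def run_draws(balance=1000, amount=800, n_threads=2):
    account = Account(balance)
    outcomes = []
    threads = [
        threading.Thread(target=lambda: outcomes.append(draw(account, amount)))
        for _ in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return account.balance, outcomes
```

With a starting balance of 1000 and two withdrawals of 800, exactly one withdrawal succeeds and the balance never goes negative.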

Easy to use thread pool ThreadPoolExecutor

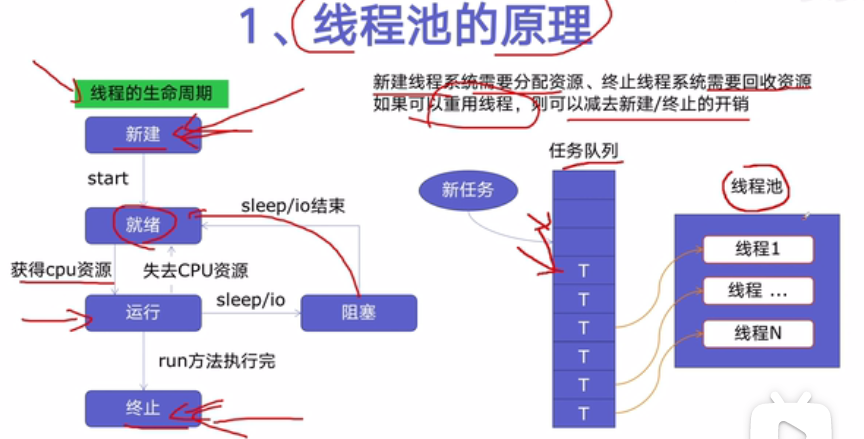

1. Principle of thread pool

2. Benefits of using thread pool

3. Usage syntax of ThreadPoolExecutor
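The two usual calling styles can be sketched as follows. The `square` function is a hypothetical stand-in for a real task such as `craw(url)`, and `max_workers=2` is an arbitrary choice.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def square(n):
    # Hypothetical task standing in for craw(url)
    return n * n


numbers = [1, 2, 3, 4]

# Style 1: map returns results in the same order as the inputs
with ThreadPoolExecutor(max_workers=2) as pool:
    mapped = list(pool.map(square, numbers))

# Style 2: submit returns a Future for each task;
# as_completed yields futures as they finish, in no fixed order
with ThreadPoolExecutor(max_workers=2) as pool:
    futures = {pool.submit(square, n): n for n in numbers}
    completed = {futures[f]: f.result() for f in as_completed(futures)}

print(mapped)
print(completed)
```

`map` is the simpler choice when all inputs are known up front; `submit` with `as_completed` is more flexible when results should be handled as soon as each one is ready.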

4. Use thread pool to transform crawler program

Using thread pool acceleration in web server

1. Architecture and characteristics of Web Services

2. Use thread pool ThreadPoolExecutor to accelerate

3. Flask code that implements the web service and accelerates it

    import json
    import time
    from concurrent.futures import ThreadPoolExecutor

    import flask

    app = flask.Flask(__name__)
    pool = ThreadPoolExecutor()


    def read_file():
        # Simulate a file read that takes 0.1 s
        time.sleep(0.1)
        return "file result"


    def read_db():
        # Simulate a database query that takes 0.2 s
        time.sleep(0.2)
        return "db result"


    def read_api():
        # Simulate a call to an external API that takes 0.3 s
        time.sleep(0.3)
        return "api result"


    @app.route("/")
    def index():
        # Submit the three IO tasks to the pool so they run concurrently
        result_file = pool.submit(read_file)
        result_db = pool.submit(read_db)
        result_api = pool.submit(read_api)

        # result() blocks until the corresponding task has finished
        return json.dumps({
            "result_file": result_file.result(),
            "result_db": result_db.result(),
            "result_api": result_api.result(),
        })


    if __name__ == "__main__":
        app.run()

Use multiprocessing to speed up the running of programs

1. With multithreading available, why use multiprocessing?

2. Multi process knowledge sorting

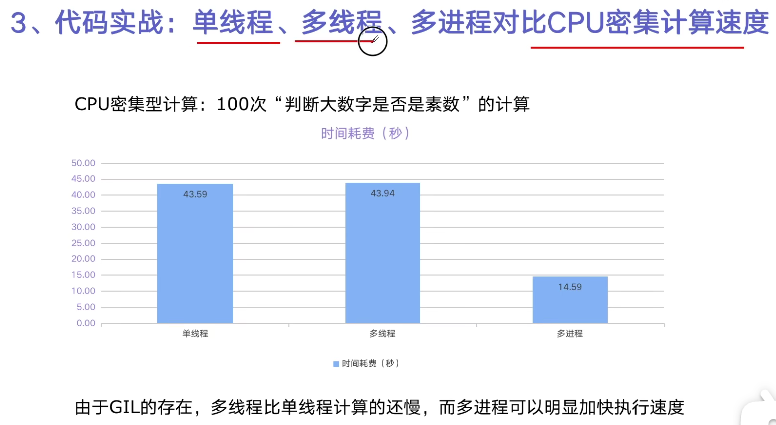

3. Code practice: single thread, multi thread and multi process compare CPU intensive computing speed

    import math
    import time
    from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor

    # The same large prime 100 times, so every check costs the same CPU time
    PRIMES = [112272535095293] * 100


    def is_prime(n):
        # Trial division by odd numbers up to sqrt(n)
        if n < 2:
            return False
        if n == 2:
            return True
        if n % 2 == 0:
            return False
        sqrt_n = int(math.floor(math.sqrt(n)))
        for i in range(3, sqrt_n + 1, 2):
            if n % i == 0:
                return False
        return True


    def single_thread():
        for number in PRIMES:
            is_prime(number)


    def multi_thread():
        with ThreadPoolExecutor() as pool:
            pool.map(is_prime, PRIMES)


    def multi_process():
        with ProcessPoolExecutor() as pool:
            pool.map(is_prime, PRIMES)


    if __name__ == "__main__":
        start = time.time()
        single_thread()
        end = time.time()
        print("single_thread,cost:", end - start, "seconds")

        start = time.time()
        multi_thread()
        end = time.time()
        print("multi_thread,cost:", end - start, "seconds")

        start = time.time()
        multi_process()
        end = time.time()
        print("multi_process,cost:", end - start, "seconds")

Using process pool acceleration in Flask service

    import json
    import math
    from concurrent.futures import ProcessPoolExecutor

    import flask

    # Flask requires the import name of the application module
    app = flask.Flask(__name__)


    def is_prime(n):
        # Trial division by odd numbers up to sqrt(n)
        if n < 2:
            return False
        if n == 2:
            return True
        if n % 2 == 0:
            return False
        sqrt_n = int(math.floor(math.sqrt(n)))
        for i in range(3, sqrt_n + 1, 2):
            if n % i == 0:
                return False
        return True


    @app.route("/is_prime/<numbers>")
    def api_is_prime(numbers):
        # numbers is a comma-separated list, e.g. /is_prime/1,2,3
        number_list = [int(x) for x in numbers.split(",")]
        results = process_pool.map(is_prime, number_list)
        return json.dumps(dict(zip(number_list, results)))


    if __name__ == "__main__":
        # Create the process pool here, after every function it uses is defined
        process_pool = ProcessPoolExecutor()
        app.run()

Implementing a concurrent crawler with Python asynchronous IO
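As a rough sketch of the idea, the crawl function becomes a coroutine and all coroutines are scheduled on a single event loop. A real crawler needs an asynchronous HTTP client, which is not shown here; `fake_fetch` is a hypothetical stand-in that only sleeps, and the function names are assumptions for illustration.

```python
import asyncio


async def fake_fetch(url):
    # Stand-in for an asynchronous HTTP request
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def async_craw(url):
    # While this coroutine waits, the event loop runs the others
    html = await fake_fetch(url)
    return url, len(html)


async def crawl_all(urls):
    # gather runs all coroutines concurrently and keeps the input order
    return await asyncio.gather(*(async_craw(u) for u in urls))


urls = [f"https://www.cnblogs.com/#p{page}" for page in range(1, 6)]
results = asyncio.run(crawl_all(urls))
for url, size in results:
    print(url, size)
```

All of this runs in one thread: concurrency comes from switching between coroutines at each `await`, not from extra threads or processes.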

Using semaphores to control crawler concurrency in asynchronous IO


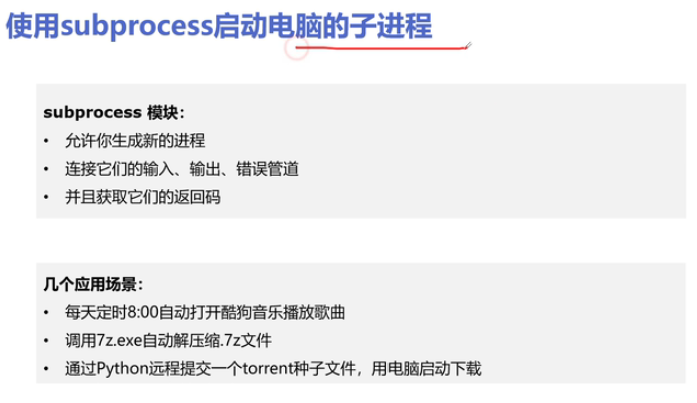

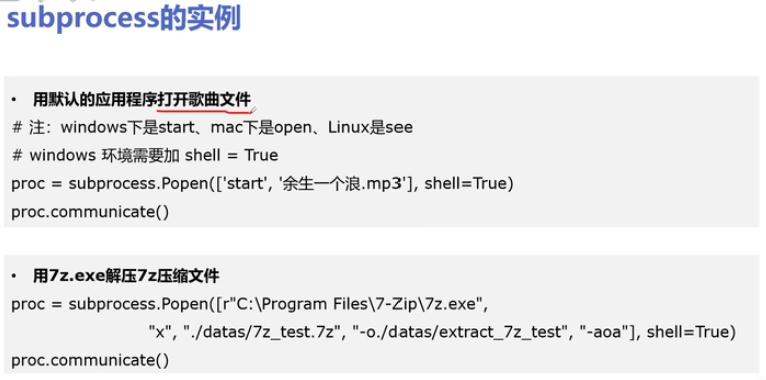
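A minimal sketch of the pattern, assuming a limit of 3 concurrent requests: each coroutine enters `async with semaphore` before doing its work, so only that many can be inside at once. The `state` dictionary exists only to record the peak concurrency for this demonstration, and `asyncio.sleep` stands in for the real request.

```python
import asyncio


async def limited_fetch(url, semaphore, state):
    async with semaphore:                  # at most `limit` coroutines inside
        state["active"] += 1
        state["peak"] = max(state["peak"], state["active"])
        await asyncio.sleep(0.01)          # stand-in for the real request
        state["active"] -= 1
    return url


async def crawl_limited(urls, limit=3):
    semaphore = asyncio.Semaphore(limit)   # created inside the running loop
    state = {"active": 0, "peak": 0}
    done = await asyncio.gather(
        *(limited_fetch(u, semaphore, state) for u in urls)
    )
    return done, state["peak"]


urls = [f"https://www.cnblogs.com/#p{page}" for page in range(1, 11)]
done, peak = asyncio.run(crawl_limited(urls, limit=3))
print(len(done), "pages fetched, peak concurrency:", peak)
```

Limiting concurrency this way keeps the crawler from overwhelming the target site while still overlapping the waiting time of several requests.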

Use subprocess to start any computer program, listen to music, decompress, download automatically, etc
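A small sketch of launching another program with `subprocess.run` and reading its output. The current Python interpreter (`sys.executable`) is used as the child program only because it is guaranteed to exist on the machine running the example; a music player, archive tool, or downloader would be started the same way by passing its own command and arguments.

```python
import subprocess
import sys

# Run another program and capture what it prints.
# sys.executable is a placeholder command; any program could take its place.
completed = subprocess.run(
    [sys.executable, "-c", "print('hello from child')"],
    capture_output=True,   # collect stdout and stderr instead of printing them
    text=True,             # decode the output to str
    check=True,            # raise CalledProcessError on a non-zero exit code
)
print(completed.returncode, completed.stdout.strip())
```

Passing the command as a list avoids shell quoting problems; `subprocess.Popen` is the lower-level alternative when the parent should not wait for the child to finish.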
