Source code for this series: https://github.com/JoJoTec/spring-cloud-parent

In the previous section we implemented Retry for FeignClient with resilience4j. Careful readers may ask why the circuit breaker and thread isolation were not added in the same place:

    @Bean
    public FeignDecorators.Builder defaultBuilder(
            Environment environment,
            RetryRegistry retryRegistry
    ) {
        // Get the microservice name
        String name = environment.getProperty("feign.client.name");
        Retry retry = null;
        try {
            retry = retryRegistry.retry(name, name);
        } catch (ConfigurationNotFoundException e) {
            retry = retryRegistry.retry(name);
        }
        // Override the exception predicate and retry only on feign.RetryableException.
        // Every exception that should be retried is wrapped as a RetryableException
        // in DefaultErrorDecoder and Resilience4jFeignClient
        retry = Retry.of(name, RetryConfig.from(retry.getRetryConfig()).retryOnException(throwable -> {
            return throwable instanceof feign.RetryableException;
        }).build());
        return FeignDecorators.builder().withRetry(
                retry
        );
    }

The main reason is that a circuit breaker and thread isolation added here would work at microservice granularity, which has these disadvantages:

- If a single instance of the microservice keeps failing, the circuit breaker opens for the whole microservice

- If a single method of the microservice keeps failing, the circuit breaker opens for the whole microservice

- If one instance is slow while the others are normal, round-robin load balancing still fills the thread pool with requests to that instance, so the one slow instance slows down every request to the microservice

Review: the microservice retry, circuit breaking, and thread isolation we want to implement

Request retry

Let's look at a few scenarios:

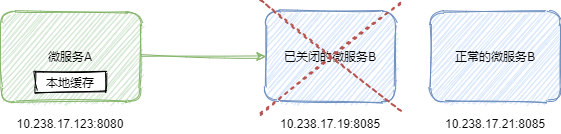

1. When a service is released, or an instance goes offline because of a problem, the old instance has already been removed from the registry and shut down, but other microservices may still hold it in their local instance cache or be in the middle of calling it. A java.io.IOException is then thrown because the TCP connection cannot be established. Different frameworks use different subclasses of this exception, but the message generally contains connect time out or no route to host. If the retry goes to a healthy instance rather than the same one, the call succeeds. As shown in the figure below:

2. When calling a microservice, a non-2XX response code is returned:

a) 4XX: when an interface update is released, both the caller and the callee may need to be released. If the new interface changes its parameters in a way that is incompatible with old calls, an exception occurs, generally a parameter error, that is, a 4XX response code. For example, a new caller calls an old callee. A retry can solve the problem in this case, because it may reach an instance that has already been updated. To be safe, however, for requests that have already been sent we retry only GET methods (i.e. query methods) and non-GET methods that are explicitly marked as retryable; other non-GET requests are not retried. As shown in the figure below:

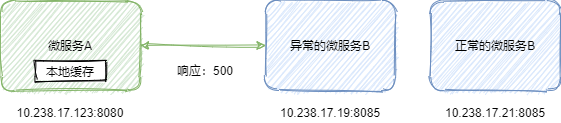

b) 5XX: when something goes wrong on an instance, such as a database failure or a JVM stop-the-world pause, a 5XX response is returned. A retry can also solve the problem in this case. Again, to be safe, for requests that have already been sent we retry only GET methods (i.e. query methods) and non-GET methods that are explicitly marked as retryable; other non-GET requests are not retried. As shown in the figure below:

3. Circuit breaker open exception: as we will see later, our circuit breaker works at the method level of a single microservice instance. If a circuit-breaker-open exception is thrown, the request was never sent, so we can retry directly.

4. Flow limiting exception: as we will see later, each microservice instance gets its own isolated thread pool. If the thread pool is full and the request is rejected, a flow limiting exception is thrown. This exception should also be retried directly.

These scenarios are very common during online releases and when a sudden burst of traffic causes problems in some instances. Without retry, users will often see an error page, which hurts the user experience. Retrying in these scenarios is therefore necessary. We use resilience4j as the core of our framework to implement the retry mechanism.
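
The four scenarios above boil down to a small decision rule. The following is only a minimal sketch of that rule in plain Java, not the code used in this series: the class `RetryDecisionSketch`, its two stand-in exception types, and the message check for connect timeouts are all assumptions made for illustration.

```java
import java.io.IOException;
import java.net.ConnectException;
import java.net.NoRouteToHostException;
import java.util.Locale;

/** Hypothetical helper for illustration only; it is not part of the series' code base. */
final class RetryDecisionSketch {

    /** Stand-in for the "circuit breaker is open" exception (scenario 3). */
    static final class CircuitOpenException extends RuntimeException {}

    /** Stand-in for the "thread pool is full" exception (scenario 4). */
    static final class PoolFullException extends RuntimeException {}

    /** Scenarios 1, 3 and 4: the request never reached the instance, so any HTTP method may be retried. */
    static boolean shouldRetryOnException(Throwable failure) {
        if (failure instanceof CircuitOpenException || failure instanceof PoolFullException) {
            return true;
        }
        if (failure instanceof ConnectException || failure instanceof NoRouteToHostException) {
            return true;
        }
        // Assumption: a connect timeout is recognised by its message; a read timeout is not retried here.
        String message = failure.getMessage();
        return failure instanceof IOException && message != null
                && message.toLowerCase(Locale.ROOT).contains("connect timed out");
    }

    /** Scenario 2: the request was sent, so only GET or explicitly marked methods are retried on 4XX/5XX. */
    static boolean shouldRetryOnStatus(String httpMethod, boolean markedRetryable, int status) {
        boolean errorStatus = status >= 400 && status < 600;
        return errorStatus && ("GET".equalsIgnoreCase(httpMethod) || markedRetryable);
    }

    public static void main(String[] args) {
        System.out.println(shouldRetryOnException(new ConnectException("Connection refused")));
        System.out.println(shouldRetryOnStatus("GET", false, 503));
        System.out.println(shouldRetryOnStatus("POST", false, 503));
    }
}
```

The split into two methods mirrors the distinction made above: failures where the request was never sent are always safe to retry, while failures after the request was sent depend on the HTTP method.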

Microservice instance level thread isolation

Take another look at the following scenario:

Microservice A calls all instances of microservice B through the same thread pool. If one instance has a problem and blocks requests or responds very slowly, the thread pool will over time fill up with requests sent to the abnormal instance, even though microservice B still has working instances.

To prevent this, and to limit the concurrency of calls to each microservice instance (i.e. flow limiting), we use a separate thread pool for each instance of each microservice. This is also implemented with resilience4j.
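
To make the idea concrete, here is a minimal sketch of per-instance thread pools using only the JDK. It is not the resilience4j implementation and not the code of this series; the class `InstanceThreadPoolsSketch`, the key format `serviceName:instanceId`, and the pool sizes are assumptions for illustration.

```java
import java.util.Map;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Future;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch: one bounded thread pool per microservice instance. */
final class InstanceThreadPoolsSketch {
    private final Map<String, ThreadPoolExecutor> pools = new ConcurrentHashMap<>();
    private final int threads;
    private final int queueCapacity;

    InstanceThreadPoolsSketch(int threads, int queueCapacity) {
        this.threads = threads;
        this.queueCapacity = queueCapacity;
    }

    /** Returns the pool for one instance, creating it on first use. */
    ThreadPoolExecutor poolFor(String serviceName, String instanceId) {
        return pools.computeIfAbsent(serviceName + ":" + instanceId,
                key -> new ThreadPoolExecutor(threads, threads, 20, TimeUnit.MILLISECONDS,
                        new ArrayBlockingQueue<>(queueCapacity), new ThreadPoolExecutor.AbortPolicy()));
    }

    /** Runs the call on the instance's own pool; throws RejectedExecutionException when that pool is full. */
    <T> Future<T> submit(String serviceName, String instanceId, Callable<T> call) {
        return poolFor(serviceName, instanceId).submit(call);
    }

    /** Lets already accepted tasks finish, then stops all pools. */
    void shutdown() {
        pools.values().forEach(ThreadPoolExecutor::shutdown);
    }

    public static void main(String[] args) throws Exception {
        InstanceThreadPoolsSketch pools = new InstanceThreadPoolsSketch(1, 1);
        CountDownLatch stuck = new CountDownLatch(1);
        // The first call blocks the only thread of this instance, the second fills its queue.
        pools.submit("service-b", "10.0.0.1:8080", () -> { stuck.await(); return "slow"; });
        pools.submit("service-b", "10.0.0.1:8080", () -> "queued");
        try {
            pools.submit("service-b", "10.0.0.1:8080", () -> "third");
        } catch (RejectedExecutionException e) {
            System.out.println("pool of the slow instance is full");
        }
        // A different instance of the same microservice is unaffected.
        System.out.println(pools.submit("service-b", "10.0.0.2:8080", () -> "ok").get());
        stuck.countDown();
        pools.shutdown();
    }
}
```

Because each instance has its own bounded queue, a slow instance can only exhaust its own pool. The rejection it produces is the flow limiting exception that the retry section treats as directly retryable.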

Microservice instance method granularity circuit breaker

If an instance is under heavy load for a while and its requests become slow, or the instance is shutting down, or most of its responses are 500 because of a problem on the instance, then even with a retry mechanism it is very inefficient for many requests to go through the cycle request -> failure -> retry on another instance. This is where a circuit breaker is needed.

In practice we find that in most failure cases only some interfaces on some instances of a microservice are abnormal, while other interfaces on those same instances are often still available. So our circuit breaker must not open for a whole instance, let alone a whole microservice. What we implement with resilience4j is therefore a circuit breaker at microservice instance method level: each method of each instance of each microservice has its own circuit breaker.

Using resilience4j for circuit breaking and thread isolation

Let's look at the circuit breaker configuration options to understand how the resilience4j circuit breaker works:

    // Decides whether an exception is recorded as a circuit breaker failure. By default every exception is a failure; this acts as a blacklist
    private Predicate<Throwable> recordExceptionPredicate = throwable -> true;
    // Decides whether a returned object is recorded as a circuit breaker failure. By default a normally returned object is never a failure
    private transient Predicate<Object> recordResultPredicate = (Object object) -> false;
    // Decides whether an exception should not count as a circuit breaker failure. By default every exception is a failure; this acts as a whitelist
    private Predicate<Throwable> ignoreExceptionPredicate = throwable -> false;
    // Function that returns the current time
    private Function<Clock, Long> currentTimestampFunction = clock -> System.nanoTime();
    // Unit of the current time
    private TimeUnit timestampUnit = TimeUnit.NANOSECONDS;
    // Exception list. Exceptions in this list, and their subclasses, are recorded as failures when thrown during a call.
    // Other exceptions are not considered failures, and exceptions configured in ignoreExceptions are not considered failures.
    // By default all exceptions are considered failures.
    private Class<? extends Throwable>[] recordExceptions = new Class[0];
    // Exception whitelist. Exceptions in this list, and their subclasses, are never considered failures,
    // even if they are also configured in recordExceptions. The default whitelist is empty.
    private Class<? extends Throwable>[] ignoreExceptions = new Class[0];
    // Failure rate threshold in percent. If it is exceeded, the CircuitBreaker becomes OPEN. The default is 50%
    private float failureRateThreshold = 50;
    // Number of requests allowed through while the CircuitBreaker is in the HALF_OPEN state
    private int permittedNumberOfCallsInHalfOpenState = 10;
    // Sliding window size. With COUNT_BASED, the default of 100 means the last 100 requests.
    // With TIME_BASED, the default of 100 means the requests of the last 100s.
    private int slidingWindowSize = 100;
    // Sliding window type. COUNT_BASED is a sliding window based on the number of calls, TIME_BASED is a sliding window based on time
    private SlidingWindowType slidingWindowType = SlidingWindowType.COUNT_BASED;
    // Minimum number of requests. The CircuitBreaker only evaluates whether to open once this many requests are in the sliding window.
    private int minimumNumberOfCalls = 100;
    // Corresponds to the writableStackTrace property of RuntimeException, i.e. whether the stack trace is captured when an exception is created
    // Circuit breaker exceptions all extend RuntimeException, and their writableStackTrace is set uniformly here
    // When set to false the exceptions carry no stack trace, which improves performance
    private boolean writableStackTraceEnabled = true;
    // When set to true, the state changes from OPEN to HALF_OPEN automatically, even if no request arrives.
    private boolean automaticTransitionFromOpenToHalfOpenEnabled = false;
    // Wait time function for the OPEN state, a fixed 60s by default. After this wait the circuit breaker leaves the OPEN state
    private IntervalFunction waitIntervalFunctionInOpenState = IntervalFunction.of(Duration.ofSeconds(60));
    // Switches directly to another state when certain objects or exceptions are returned. By default no such transition is configured
    private Function<Either<Object, Throwable>, TransitionCheckResult> transitionOnResult = any -> TransitionCheckResult.noTransition();
    // When the share of slow calls reaches this percentage, the CircuitBreaker becomes OPEN
    // By default slow calls never open the CircuitBreaker, because the default is 100%
    private float slowCallRateThreshold = 100;
    // Slow call duration. A call that takes longer than this is recorded as a slow call
    private Duration slowCallDurationThreshold = Duration.ofSeconds(60);
    // How long the CircuitBreaker stays in HALF_OPEN. The default of 0 means it stays HALF_OPEN until minimumNumberOfCalls calls have succeeded or failed.
    private Duration maxWaitDurationInHalfOpenState = Duration.ofSeconds(0);
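
To show how slidingWindowSize, minimumNumberOfCalls and failureRateThreshold interact, the following is a deliberately simplified count-based window in plain Java. It is an illustration only, not resilience4j's actual implementation: the class `CountBasedWindowSketch` is hypothetical, it models only the CLOSED to OPEN transition, and it assumes that reaching the threshold is enough to open.

```java
/** Hypothetical, simplified model of a COUNT_BASED sliding window; not the resilience4j implementation. */
final class CountBasedWindowSketch {
    private final boolean[] failures;          // ring buffer holding the outcome of the last N calls
    private final int minimumNumberOfCalls;
    private final float failureRateThreshold;  // in percent
    private int recorded;                      // number of filled slots, at most failures.length
    private int next;                          // slot that the next outcome overwrites
    private int failureCount;
    private boolean open;

    CountBasedWindowSketch(int slidingWindowSize, int minimumNumberOfCalls, float failureRateThreshold) {
        this.failures = new boolean[slidingWindowSize];
        this.minimumNumberOfCalls = minimumNumberOfCalls;
        this.failureRateThreshold = failureRateThreshold;
    }

    /** Records one call outcome and opens the breaker if the failure rate is high enough. */
    void record(boolean failed) {
        if (recorded == failures.length) {
            if (failures[next]) {
                failureCount--;                // the oldest outcome falls out of the window
            }
        } else {
            recorded++;
        }
        failures[next] = failed;
        if (failed) {
            failureCount++;
        }
        next = (next + 1) % failures.length;
        // Assumption of this sketch: the rate is only evaluated once enough calls were seen,
        // and reaching the threshold opens the breaker.
        if (recorded >= minimumNumberOfCalls && failureRate() >= failureRateThreshold) {
            open = true;
        }
    }

    /** Failure rate of the calls currently in the window, in percent. */
    float failureRate() {
        return recorded == 0 ? 0f : 100f * failureCount / recorded;
    }

    boolean isOpen() {
        return open;
    }

    public static void main(String[] args) {
        CountBasedWindowSketch window = new CountBasedWindowSketch(10, 5, 50f);
        for (int i = 0; i < 4; i++) {
            window.record(true);
        }
        System.out.println("open after 4 failures: " + window.isOpen());
        window.record(false);
        System.out.println("open after 5 calls: " + window.isOpen());
    }
}
```

The point of minimumNumberOfCalls is visible in `main`: four consecutive failures do not open the breaker, because fewer than five calls have been recorded and the rate is not evaluated yet.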

Next, the configuration options for thread isolation:

    // The following five parameters correspond to the settings of a Java thread pool and are not explained again here
    private int maxThreadPoolSize = Runtime.getRuntime().availableProcessors();
    private int coreThreadPoolSize = Runtime.getRuntime().availableProcessors();
    private int queueCapacity = 100;
    private Duration keepAliveDuration = Duration.ofMillis(20);
    private RejectedExecutionHandler rejectedExecutionHandler = new ThreadPoolExecutor.AbortPolicy();
    // Corresponds to the writableStackTrace property of RuntimeException, i.e. whether the stack trace is captured when an exception is created
    // All thread limiter exceptions extend RuntimeException, and their writableStackTrace is set uniformly here
    // When set to false the exceptions carry no stack trace, which improves performance
    private boolean writableStackTraceEnabled = true;
    // Much context propagation in Java relies on ThreadLocal, but running on a thread pool means switching threads.
    // Tasks that need to keep their context can do so by implementing ContextPropagator and adding it here
    private List<ContextPropagator> contextPropagators = new ArrayList<>();

After adding the resilience4j-spring-cloud2 dependency mentioned in the previous section, we can configure the circuit breaker and thread isolation as follows:

    resilience4j.circuitbreaker:
      configs:
        default:
          registerHealthIndicator: true
          slidingWindowSize: 10
          minimumNumberOfCalls: 5
          slidingWindowType: TIME_BASED
          permittedNumberOfCallsInHalfOpenState: 3
          automaticTransitionFromOpenToHalfOpenEnabled: true
          waitDurationInOpenState: 2s
          failureRateThreshold: 30
          eventConsumerBufferSize: 10
          recordExceptions:
            - java.lang.Exception
    resilience4j.thread-pool-bulkhead:
      configs:
        default:
          maxThreadPoolSize: 50
          coreThreadPoolSize: 10
          queueCapacity: 1000

How to implement microservice instance method granularity circuit breaker

What we want is for each method of each instance of each microservice to have its own circuit breaker. To do that we need:

- Microservice name

- Instance ID, or a string that uniquely identifies an instance

- Method name: can be a URL path or a fully qualified method name.

We use the fully qualified method name rather than the URL path, because some FeignClients put parameters in the path, for example with @PathVariable. If such a parameter is something like a user ID, every user would get a separate circuit breaker, which is not what we want. Using the fully qualified method name avoids this problem.

So where can we get these? Reviewing the core flow of FeignClient, we find that the instance ID is only available during the actual call, once the load balancer call has completed, that is, after the org.springframework.cloud.openfeign.loadbalancer.FeignBlockingLoadBalancerClient call is completed. We therefore insert our circuit breaker code there.

In addition, the configuration can be set separately for each FeignClient; it does not need to go down to the method level. For example:
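
As an illustration, a circuit breaker key could be assembled from the three pieces listed above as follows. This is only a sketch: the class `ServiceInstanceMethodIdSketch`, the `UserClient` interface, the use of host and port as the instance identifier, and the separator characters are all assumptions, not the format used in this series.

```java
import java.lang.reflect.Method;
import java.util.Arrays;
import java.util.stream.Collectors;

/** Hypothetical sketch of building one circuit breaker key per microservice instance method. */
final class ServiceInstanceMethodIdSketch {

    /** Hypothetical FeignClient-style interface whose URL path would contain the user ID. */
    interface UserClient {
        String getUser(long userId);
    }

    /** Fully qualified method name including parameter types, so overloads get different keys. */
    static String methodSignature(Method method) {
        String params = Arrays.stream(method.getParameterTypes())
                .map(Class::getName)
                .collect(Collectors.joining(","));
        return method.getDeclaringClass().getName() + "#" + method.getName() + "(" + params + ")";
    }

    /** Assumption: host and port are enough to identify an instance uniquely. */
    static String serviceInstanceMethodId(String serviceName, String host, int port, Method method) {
        return serviceName + ":" + host + ":" + port + ":" + methodSignature(method);
    }

    public static void main(String[] args) throws NoSuchMethodException {
        Method getUser = UserClient.class.getMethod("getUser", long.class);
        System.out.println(serviceInstanceMethodId("user-service", "10.0.0.1", 8080, getUser));
        System.out.println(serviceInstanceMethodId("user-service", "10.0.0.2", 8080, getUser));
    }
}
```

The key depends only on the method, never on its arguments, so calls for different user IDs share one circuit breaker, while the two instances in `main` get separate ones.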

    resilience4j.circuitbreaker:
      configs:
        default:
          slidingWindowSize: 10
        feign-client-1:
          slidingWindowSize: 100

In the following code, contextId is feign-client-1, and each microservice instance method has its own serviceInstanceMethodId. If no configuration is found for the contextId, a ConfigurationNotFoundException is thrown, and we fall back to the default configuration.

    try {
        circuitBreaker = circuitBreakerRegistry.circuitBreaker(serviceInstanceMethodId, contextId);
    } catch (ConfigurationNotFoundException e) {
        circuitBreaker = circuitBreakerRegistry.circuitBreaker(serviceInstanceMethodId);
    }

How to implement the microservice instance thread limiter

For thread isolation we only need the microservice name and the instance ID, and these thread pools are used only for making calls. So, as with the circuit breaker, we can insert the thread isolation code after the org.springframework.cloud.openfeign.loadbalancer.FeignBlockingLoadBalancerClient call is completed.
