1, Speech emotion recognition based on a PNN (probabilistic neural network)

1 speech emotion recognition system

In this speech emotion recognition system, the recorded corpus contains five emotions (happiness, fear, sadness, anger and neutral) spoken by five actors. The corpus is divided into a training set and a test set; the code in part 2 uses 30 utterances per emotion for training and 20 for testing. The design of the system is shown in Figure 1. First, features are extracted from the speech signal, and an HMM is trained with the segmental k-means algorithm to obtain the HMM parameters of each word. Second, these parameters are used to find the optimal (Viterbi) state sequence of the speech signal. Third, the frames of cepstral vectors assigned to each HMM state are treated as a speech event with similar characteristics (such as a phoneme or syllable). Because these segments differ in length, the cepstral vectors belonging to the same state are time-normalized so that every utterance yields a feature vector of the same dimension. Finally, a PNN classifies the resulting feature vectors.

Figure 1 speech emotion recognition system
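The text does not say how the variable-length state segments are time-normalized. One simple possibility is to linearly resample the frames of each state to a fixed count and then concatenate the results. The sketch below works under that assumption. The state sequence `states`, the toy cepstral matrix `cep` and the target length `L` are hypothetical stand-ins; in the real system, the state sequence would come from the trained HMM.

```matlab
% Sketch: time-normalize the cepstral frames assigned to each HMM state.
% Assumptions (not from the source): 12 cepstral coefficients, 3 states,
% linear interpolation to L = 4 frames per state, every state owns >= 1 frame.
nCoef = 12;
states = [1 1 1 2 2 2 2 2 3 3];              % hypothetical state sequence, 10 frames
cep = repmat(1:numel(states), nCoef, 1);     % toy cepstra: frame t holds the value t
L = 4;                                       % frames kept per state after warping
nStates = max(states);

featureVec = zeros(nCoef * L * nStates, 1);
for s = 1:nStates
    seg = cep(:, states == s);               % nCoef x n frames belonging to state s
    n = size(seg, 2);
    if n == 1
        warped = repmat(seg, 1, L);          % a single frame is simply repeated
    else
        tq = linspace(1, n, L);              % L evenly spaced points on the time axis
        warped = interp1(1:n, seg', tq, 'linear')';   % nCoef x L
    end
    idx = (s - 1) * nCoef * L + (1:nCoef * L);
    featureVec(idx) = warped(:);             % append this state's block
end
% Every utterance now yields a vector of length nCoef * L * nStates.
```

Whatever the segment lengths, the output length depends only on `nCoef`, `L` and the number of states. This is the property that lets utterances of different durations be compared by the classifier.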

1.1 speech parameter feature extraction

The feature parameters commonly used in speech emotion recognition are drawn mainly from temporal structure, energy, pitch and formants. This system uses ten emotional feature parameters: average amplitude, maximum amplitude, speaking rate, utterance duration, average pitch frequency, maximum pitch frequency, pitch change rate, maximum first-formant frequency, mean first-formant frequency and rate of change of the first formant.
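To make part of this list concrete, the sketch below computes the amplitude, duration and pitch parameters for one signal. Everything specific in it is an assumption rather than the author's setting:

- the 8 kHz sampling rate;
- 30 ms frames with a 10 ms hop;
- a 60-400 Hz autocorrelation pitch search;
- treating every frame as voiced;
- defining pitch change rate as the mean absolute frame-to-frame change in F0.

Speaking rate and the formant parameters are left out because they need syllable detection and LPC analysis respectively.

```matlab
% Sketch: amplitude, duration and pitch parameters for one utterance.
% Assumptions: 8 kHz sampling, 30 ms frames / 10 ms hop, all frames voiced,
% F0 searched in 60-400 Hz by short-time autocorrelation.
fs = 8000;
t = (0:fs-1)' / fs;
x = 0.5 * sin(2*pi*200*t);              % 1 s, 200 Hz tone as a stand-in for speech

frameLen = round(0.030 * fs);           % 240 samples
hop = round(0.010 * fs);                % 80 samples
lagMin = floor(fs / 400);               % shortest pitch period considered
lagMax = ceil(fs / 60);                 % longest pitch period considered
nFrames = floor((numel(x) - frameLen) / hop) + 1;
f0 = zeros(nFrames, 1);
for k = 1:nFrames
    frame = x((k-1)*hop + (1:frameLen));
    frame = frame - mean(frame);        % remove DC before autocorrelation
    r = zeros(lagMax, 1);
    for lag = lagMin:lagMax             % short-time autocorrelation
        r(lag) = sum(frame(1:end-lag) .* frame(1+lag:end));
    end
    [~, best] = max(r(lagMin:lagMax));
    f0(k) = fs / (lagMin + best - 1);   % lag of the strongest peak -> F0 (Hz)
end

avgAmplitude = mean(abs(x));
maxAmplitude = max(abs(x));
durationSec  = numel(x) / fs;
avgPitch     = mean(f0);
maxPitch     = max(f0);
pitchChange  = mean(abs(diff(f0)));     % assumed definition of pitch change rate
features = [avgAmplitude maxAmplitude durationSec avgPitch maxPitch pitchChange];
```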

1.2 speech emotion recognition model based on PNN

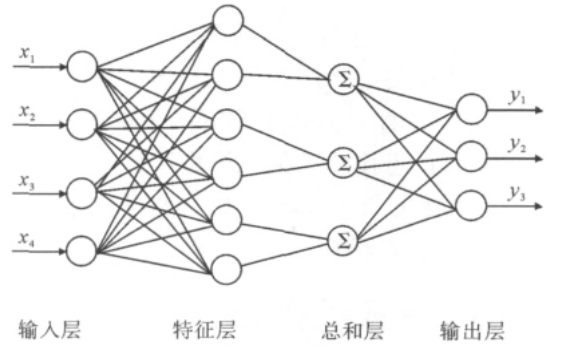

PNN is a neural network model built on statistical principles: Bayesian decision-making with Parzen-window estimates of the class probability densities. Its structure is shown in Figure 2. The network consists of an input layer, a feature (pattern) layer, a summation layer and an output layer. The input layer passes the feature vector on unchanged. The feature layer contains P neurons, one per training sample, so P grows as training samples are added. Each of these neurons applies a Gaussian kernel centered on its training sample and therefore responds only locally to the input. This divides the input space into small local regions, which is what makes classification and function approximation possible. The summation layer adds up the kernel outputs belonging to each class. The output layer contains k neurons, each corresponding to one speech primitive (class) to be recognized, and it outputs the class with the largest summed response (k = 10 in the experiment; the code in part 2 uses k = 5 emotion classes). Compared with a multilayer perceptron (MLP), a PNN adapts easily when external conditions change. New training data or a new class only requires adding pattern-layer neurons whose weights are the new samples, instead of redesigning and retraining the whole network.
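The layers can be written out for the common Gaussian-kernel form of the PNN. This assumes equal class priors and misclassification costs, which the text does not state. Here $\mathbf{x}_{ij}$ is the $j$-th of the $n_i$ training samples of class $i$, and $\sigma$ is the smoothing parameter:

$$
\begin{aligned}
\phi_{ij}(\mathbf{x}) &= \exp\!\left(-\frac{\lVert \mathbf{x}-\mathbf{x}_{ij}\rVert^{2}}{2\sigma^{2}}\right) && \text{feature (pattern) layer}\\
f_i(\mathbf{x}) &= \frac{1}{n_i}\sum_{j=1}^{n_i}\phi_{ij}(\mathbf{x}) && \text{summation layer}\\
\hat{c}(\mathbf{x}) &= \arg\max_{i \in \{1,\dots,k\}} f_i(\mathbf{x}) && \text{output layer}
\end{aligned}
$$

For $d$-dimensional inputs, the full Parzen estimate also carries the factor $1/\big((2\pi)^{d/2}\sigma^{d}\big)$. It is the same for every class, so it does not change the arg max and is dropped. Training amounts to storing the $\mathbf{x}_{ij}$, which is why no iterative learning is needed.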

The network also learns very quickly. Training involves no iterative process, because the connection weights are loaded directly from the training examples, so the training time is essentially zero. PNN also keeps good classification performance and fast training when the pattern vectors are high-dimensional.

Figure 2 PNN structure diagram
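The decision rule of a PNN can be sketched in base MATLAB without the Neural Network Toolbox; the toolbox itself provides `newpnn(P, T, spread)` for building such a network. This is a minimal illustration, not the author's `pnn` function, which the partial source code calls but does not show. The sketch assumes a single shared smoothing parameter `sigma` and equal class priors. It also uses toy 2-D data in place of the 140-dimensional emotion features.

```matlab
% Minimal PNN sketch in base MATLAB (no toolbox). Assumptions: Gaussian
% kernel with one shared sigma, equal class priors, toy 2-D data.
% "Training" is only storing the samples: they are the pattern-layer weights.
Ptrain = [0 0; 0.2 0.1; 0.1 0.3; 3 3; 3.2 2.9; 2.8 3.1]';   % d x N, one sample per column
Ttrain = [1 1 1 2 2 2];                                     % class labels 1..k
Ptest  = [0.1 0.1; 3 3.1; 1.2 1.0]';                        % d x M test vectors
sigma  = 0.5;                                               % smoothing parameter (assumed)

k = max(Ttrain);
M = size(Ptest, 2);
scores = zeros(k, M);
for m = 1:M
    % Pattern layer: one Gaussian unit per stored training sample
    diffs = bsxfun(@minus, Ptrain, Ptest(:, m));
    phi = exp(-sum(diffs.^2, 1) / (2 * sigma^2));
    % Summation layer: average the unit outputs of each class
    for i = 1:k
        scores(i, m) = mean(phi(Ttrain == i));
    end
end
% Output layer: choose the class with the largest estimated density
[~, predicted] = max(scores, [], 1);
```

Here "training" is just the two assignments that store `Ptrain` and `Ttrain`. The cost moves to classification instead, which compares every test vector with every stored sample. `sigma` controls smoothness: very small values approach nearest-neighbour behaviour, while large values blur the classes together.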

2, Partial source code

lc

close all

clear all

load A_fear fearVec;

load F_happiness hapVec;

load N_neutral neutralVec;

load T_sadness sadnessVec;

load W_anger angerVec;

trainsample(1:30,1:140)=angerVec(:,1:30)';

trainsample(31:60,1:140)=hapVec(:,1:30)';

trainsample(61:90,1:140)=neutralVec(:,1:30)';

trainsample(91:120,1:140)=sadnessVec(:,1:30)';

trainsample(121:150,1:140)=fearVec(:,1:30)';

trainsample(1:30,141)=1;

trainsample(31:60,141)=2;

trainsample(61:90,141)=3;

trainsample(91:120,141)=4;

trainsample(121:150,141)=5;

testsample(1:20,1:140)=angerVec(:,31:50)';

testsample(21:40,1:140)=hapVec(:,31:50)';

testsample(41:60,1:140)=neutralVec(:,31:50)';

testsample(61:80,1:140)=sadnessVec(:,31:50)';

testsample(81:100,1:140)=fearVec(:,31:50)';

testsample(1:20,141)=1;

testsample(21:40,141)=2;

testsample(41:60,141)=3;

testsample(61:80,141)=4;

testsample(81:100,141)=5;

class=trainsample(:,141);

sum=bpnn(trainsample,testsample,class);

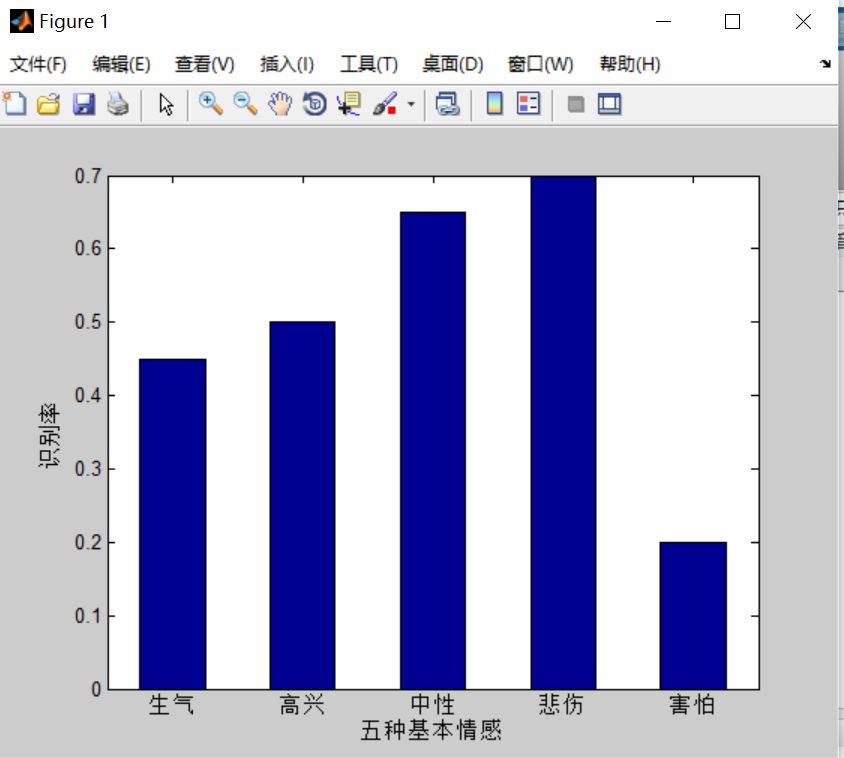

figure(1)

bar(sum,0.5);

set(gca,'XTickLabel',{'get angry','happy','neutral','Sadness','fear'});

ylabel('Recognition rate');

xlabel('Five basic emotions');

p_train=trainsample(:,1:140)';

t_train=trainsample(:,141)';

p_test=testsample(:,1:140)';

t_test=testsample(:,141)';

sumpnn=pnn(p_train,t_train,p_test,t_test);

figure(2)

bar(sumpnn,0.5);

set(gca,'XTickLabel',{'get angry','happy','neutral','Sadness','fear'});

ylabel('Recognition rate');

xlabel('Five basic emotions');

sumlvq=lvq(trainsample,testsample,class);

```matlab
function sumbp=bpnn(trainsample,testsample,class)
% BP (feedforward) neural network classifier.
% Inputs:  trainsample - training samples (features in columns 1-140, label in 141)
%          testsample  - test samples with the same layout, 20 per emotion
%          class       - class label of each row of trainsample
% Output:  sumbp       - recognition rate of each of the five basic emotions

feature = trainsample(:,1:140);

% Feature normalization to [-1, 1] (mapminmax replaces the obsolete premnmx/tramnmx)
[input, ps] = mapminmax(feature');

% Construct the one-hot target matrix
s = length(class);
output = zeros(s, 5);
for i = 1:s
    output(i, class(i)) = 1;
end

% Feedforward network: 10 logsig hidden neurons, 5 linear output neurons
net = newff(minmax(input), [10 5], {'logsig' 'purelin'}, 'traingdx');

% Training parameters
net.trainParam.show = 50;
net.trainParam.epochs = 150;
net.trainParam.goal = 0.1;
net.trainParam.lr = 0.05;

% Train
net = train(net, input, output');

% Read the test data and scale it with the training-set ranges
featuretest = testsample(:,1:140);
c = testsample(:,141);
testInput = mapminmax('apply', featuretest', ps);

% Simulate
Y = sim(net, testInput);

% Predicted class = output neuron with the largest response
[~, pred] = max(Y, [], 1);

% Recognition rate: fraction of each emotion's test samples classified correctly
sumbp = zeros(1, 5);
for k = 1:5
    sumbp(k) = mean(pred(c == k) == k);
end
end
```
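`bpnn` normalizes the features with statistics taken from the training set and reuses them for the test set. It also codes each class as a one-hot row of the target matrix. The sketch below shows both steps without toolbox functions, on made-up numbers. It assumes the same [-1, 1] target range that `premnmx` and `mapminmax` use by default. It also assumes that no feature is constant in the training set, since a zero range would divide by zero.

```matlab
% Sketch: training-set min-max scaling to [-1, 1] plus one-hot targets.
% Toy data; rows are samples and columns are features, as in trainsample.
Xtrain = [1 10; 2 20; 3 40];
Xtest  = [2 30; 4 0];
labels = [1; 3; 2];                      % class of each training row
nClass = 3;

xmin = min(Xtrain, [], 1);               % per-feature minimum (training set only)
xmax = max(Xtrain, [], 1);               % per-feature maximum (training set only)
scaleFn = @(X) 2 * bsxfun(@rdivide, bsxfun(@minus, X, xmin), xmax - xmin) - 1;
XtrainN = scaleFn(Xtrain);               % every entry lies in [-1, 1]
XtestN  = scaleFn(Xtest);                % test values may fall outside [-1, 1]

% One-hot targets: row i has a single 1 in column labels(i)
onehot = zeros(numel(labels), nClass);
onehot(sub2ind(size(onehot), (1:numel(labels))', labels)) = 1;
```

Fitting `xmin` and `xmax` on the training set only keeps the test data out of the preprocessing. That is why the stored training ranges are applied to `testsample` instead of rescaling it independently.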

```matlab
function sumlvq=lvq(trainsample,testsample,class)
% LVQ neural network classifier; returns the recognition rate of each emotion.
P = trainsample(:,1:140)';
C = class';
T = ind2vec(C);

% 20 competitive neurons, equal class proportions (0.2 each), learning rate 0.1
net = newlvq(minmax(P), 20, [0.2 0.2 0.2 0.2 0.2], 0.1);
net.trainParam.epochs = 100;
net = train(net, P, T);

y = sim(net, testsample(:,1:140)');
y3c = vec2ind(y);

% Recognition rate: fraction of each emotion's test samples classified correctly
c = testsample(:,141);
sumlvq = zeros(1, 5);
for k = 1:5
    sumlvq(k) = mean(y3c(c == k) == k);
end
end
```
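The per-emotion recognition rates computed in `bpnn` and `lvq` count only correct hits. A confusion matrix holds the same information and also shows which emotions are mistaken for which. The sketch below builds one with `accumarray`. The label vectors are made-up toy values, and the class order (1 anger to 5 fear) is assumed to follow the main script.

```matlab
% Sketch: confusion matrix and per-class recognition rate from label vectors.
% Toy labels (made up); classes 1..5 = anger, happiness, neutral, sadness, fear.
trueLabels = [1 1 1 2 2 2 3 3 4 4 5 5];
predLabels = [1 1 2 2 2 2 3 1 4 4 5 3];
nClass = 5;

% confusion(i, j) counts samples of true class i recognized as class j
confusion = accumarray([trueLabels(:) predLabels(:)], 1, [nClass nClass]);

% Recognition rate of class i = correct hits / number of class-i samples
% (a class with no test samples would give NaN)
rates = diag(confusion)' ./ sum(confusion, 2)';
```

The diagonal gives the correct hits counted by the loops above. The off-diagonal entries show, for example, that in this toy data one neutral sample was recognized as anger.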

3, Operation results

4, MATLAB version and references

1 MATLAB version

R2014a

2 references

[1] Han Jiqing, Zhang Lei, Zheng Tieran. Speech Signal Processing (3rd Edition) [M]. Tsinghua University Press, 2019.

[2] Liu Ruobian. Deep Learning: Speech Recognition Technology Practice [M]. Tsinghua University Press, 2019.

[3] Ye Bin. Research on speech emotion recognition based on HMM and PNN [J]. Journal of Qingdao University (Engineering Technology Edition), 2011, 26(04).