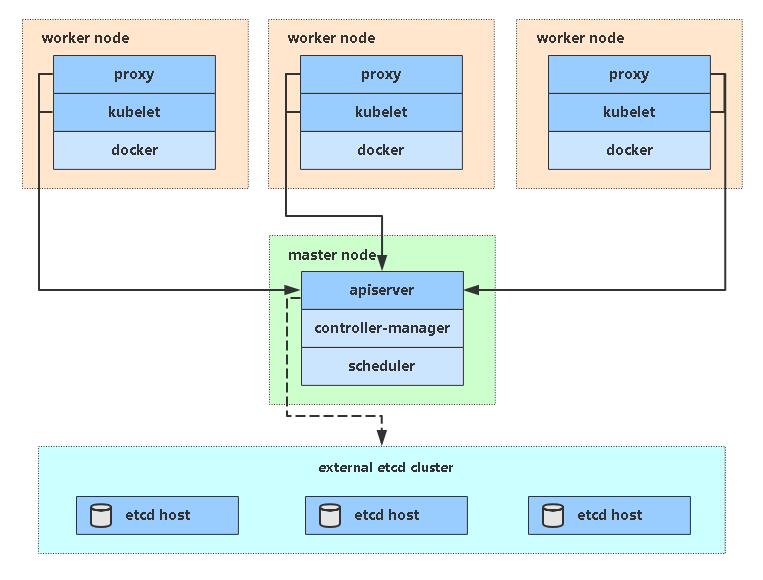

1. Two ways to deploy K8s in a production environment

- kubeadm

kubeadm is a tool that provides kubeadm init and kubeadm join for rapid deployment of Kubernetes clusters.

Deployment address: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

- Binary

Download the release binaries from the official repository and deploy each component manually to form a Kubernetes cluster.

Download address: https://github.com/kubernetes/kubernetes/releases

2. Recommended server hardware configuration

3. Quickly deploy a K8s cluster using kubeadm

# Create a Master node

kubeadm init

# Add a Node to the current cluster

kubeadm join <Master node IP and port>

3.1 installation requirements

Before you start, the machines for the Kubernetes cluster must meet the following conditions:

- One or more machines running CentOS 7.x x86_64 (7.5 to 7.8 recommended)

- Hardware: at least 2GB RAM, 2 CPUs and 30GB of disk; 2 CPUs with 4GB RAM (2C4G) is recommended

- Network connectivity between all machines in the cluster

- Access to the external network, which is needed to pull images. If a machine cannot reach the network, use a server that can and transfer the images with docker pull/save/load

- Swap disabled. With swap on, memory spills to disk, which slows workloads down; by default the kubelet in this version also refuses to start while swap is enabled
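
The hardware and swap conditions above can be checked with a few lines of shell before going further. This is a minimal sketch: the function names check_minimums and host_values are hypothetical, and the 1700 MB memory floor is an assumption chosen because a machine sold as "2GB" reports somewhat less than 2048 MB in /proc/meminfo.

```shell
# check_minimums <mem_kb> <cpus> <swap_kb>
# Prints one line per unmet requirement and returns non-zero if any is unmet.
check_minimums() (
  mem_kb=$1 cpus=$2 swap_kb=$3 rc=0
  # Assumed floor of 1700 MB for a "2GB" machine (see the note above).
  if [ "$mem_kb" -lt $((1700 * 1024)) ]; then
    echo "memory: ${mem_kb} kB is below the assumed 1700 MB floor"; rc=1
  fi
  if [ "$cpus" -lt 2 ]; then
    echo "cpu: ${cpus} CPU(s) found, at least 2 required"; rc=1
  fi
  if [ "$swap_kb" -ne 0 ]; then
    echo "swap: ${swap_kb} kB of swap is still enabled"; rc=1
  fi
  return "$rc"
)

# host_values prints "<mem_kb> <cpus> <swap_kb>" for the local Linux host.
host_values() {
  echo "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" \
       "$(nproc)" \
       "$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)"
}

# Usage on a node:
#   check_minimums $(host_values) && echo "host meets the minimums"
```

Keeping the thresholds in a function that takes plain numbers makes it easy to try the check with made-up values before running it on a real node.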

3.2 preparation environment

| Role | IP |
|---|---|
| k8s-master | 10.0.0.61 |
| k8s-node1 | 10.0.0.62 |
| k8s-node2 | 10.0.0.63 |

Turn off the firewall (run on all nodes):

[root@k8s-cka-master01 ~]# systemctl stop firewalld

[root@k8s-cka-master01 ~]# systemctl disable firewalld

Disable SELinux (run on all nodes):

[root@k8s-cka-master01 ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

[root@k8s-cka-master01 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# disabled - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of disabled.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@k8s-cka-master01 ~]# setenforce 0 # temporary

setenforce: SELinux is disabled # Shown here because SELinux was already disabled on this machine; otherwise the command returns silently
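
Note that the unanchored pattern s/enforcing/disabled/ also rewrites the word inside the comment lines, which is why the file above describes "disabled" twice. A hedged alternative, shown here on a scratch file rather than on /etc/selinux/config, anchors the match to the SELINUX= setting itself; the file contents are a shortened stand-in for the real file.

```shell
# Work on a temporary copy so nothing under /etc is touched.
demo=$(mktemp)
cat > "$demo" << 'EOF'
# enforcing - SELinux security policy is enforced.
SELINUX=enforcing
SELINUXTYPE=targeted
EOF

# Anchoring on ^SELINUX= leaves the comments and SELINUXTYPE untouched.
# -i.bak edits in place and keeps the original as "$demo.bak".
sed -i.bak 's/^SELINUX=enforcing$/SELINUX=disabled/' "$demo"
cat "$demo"
```

On a real node the same sed expression would be pointed at /etc/selinux/config.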

Disable swap (run on all nodes):

[root@k8s-cka-master01 ~]# swapoff -a # temporary

[root@k8s-cka-master01 ~]# vim /etc/fstab # permanent: comment out the swap line

[root@k8s-cka-master01 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Sun Jul 26 16:55:01 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos_mobanji01-root / xfs defaults 0 0

UUID=95be88d4-4459-4996-a8cf-6d2fa2aa6344 /boot xfs defaults 0 0

#/dev/mapper/centos_mobanji01-swap swap swap defaults 0 0
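
Editing /etc/fstab by hand on every node is easy to get wrong, so the same change can be scripted. The sketch below is an assumption about how one might do it, not the author's method: comment_out_swap is a hypothetical helper, and it is demonstrated on a scratch file that mimics the entries shown above.

```shell
# comment_out_swap <fstab-file>
# Prefixes every active swap entry with '#'. Lines that are already
# comments are skipped, so running it twice changes nothing more.
# A backup is kept as <fstab-file>.bak.
comment_out_swap() {
  sed -E -i.bak '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Demonstration on a scratch copy; on a node the argument would be /etc/fstab.
fstab_demo=$(mktemp)
cat > "$fstab_demo" << 'EOF'
/dev/mapper/centos_mobanji01-root / xfs defaults 0 0
/dev/mapper/centos_mobanji01-swap swap swap defaults 0 0
EOF
comment_out_swap "$fstab_demo"
cat "$fstab_demo"
```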

Set the host name (run on every node, using that node's own name):

[root@k8s-cka-master01 ~]# hostnamectl set-hostname k8s-master

[root@k8s-cka-master01 ~]# bash

bash

[root@k8s-master ~]#

Add the cluster hosts to /etc/hosts on the master (only the master node needs this):

[root@k8s-master ~]# cat >> /etc/hosts << EOF

10.0.0.61 k8s-master

10.0.0.62 k8s-node1

10.0.0.63 k8s-node2

EOF

[root@k8s-master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.61 k8s-master

10.0.0.62 k8s-node1

10.0.0.63 k8s-node2
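
Because cat >> appends unconditionally, running the command above a second time duplicates the entries. A small guard avoids that. The helper name add_host_entry is hypothetical, and the demonstration writes to a scratch file instead of /etc/hosts.

```shell
# add_host_entry <hosts-file> <ip> <hostname>
# Appends "<ip> <hostname>" only if that exact line is not already present.
add_host_entry() {
  # -x matches whole lines, -F treats the entry as a fixed string.
  grep -qxF "$2 $3" "$1" 2>/dev/null || echo "$2 $3" >> "$1"
}

# Demonstration: adding the same three hosts twice leaves three lines.
hosts_demo=$(mktemp)
for pass in 1 2; do
  add_host_entry "$hosts_demo" 10.0.0.61 k8s-master
  add_host_entry "$hosts_demo" 10.0.0.62 k8s-node1
  add_host_entry "$hosts_demo" 10.0.0.63 k8s-node2
done
cat "$hosts_demo"
```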

Pass bridged IPv4 traffic to the iptables chains (run on all nodes):

[root@k8s-master ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@k8s-master ~]# sysctl --system # take effect

* Applying /usr/lib/sysctl.d/00-system.conf ...

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

* Applying /etc/sysctl.conf ...
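
In the output above both keys first appear as 0 (from 00-system.conf) and are then overridden to 1 by k8s.conf, so only the last value matters. The check below is a sketch with a hypothetical function name, bridge_settings_enabled, that reads "key = value" lines and succeeds only if both settings end up as 1. The net.bridge.* keys exist only while the br_netfilter kernel module is loaded, so that module may need to be loaded first.

```shell
# bridge_settings_enabled reads "key = value" lines on stdin and returns 0
# only if both bridge settings are 1. The last occurrence of a key wins,
# mirroring how later sysctl.d files override earlier ones.
bridge_settings_enabled() {
  awk -F' = ' '
    $1 == "net.bridge.bridge-nf-call-iptables"  { v4 = $2 }
    $1 == "net.bridge.bridge-nf-call-ip6tables" { v6 = $2 }
    END { exit !(v4 == 1 && v6 == 1) }
  '
}

# Usage on a node:
#   modprobe br_netfilter
#   sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables \
#     | bridge_settings_enabled && echo "bridge settings are active"
```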

Time synchronization (run on all nodes):

[root@k8s-master ~]# yum install ntpdate -y

Loaded plugins: fastestmirror

Determining fastest mirrors

....

Installed:

ntpdate.x86_64 0:4.2.6p5-29.el7.centos.2

Complete!

[root@k8s-master ~]# ntpdate time.windows.com

21 Nov 18:13:28 ntpdate[3724]: adjust time server 52.231.114.183 offset 0.007273 sec

3.3 install Docker / kubeadm / kubelet [all nodes]

The default container runtime for this Kubernetes version (1.19) is Docker, so Docker is installed first.

3.3.1 installing Docker

[root@k8s-master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@k8s-master ~]# yum -y install docker-ce

[root@k8s-master ~]# systemctl enable docker && systemctl start docker

Configure a registry mirror to speed up image downloads:

[root@k8s-master ~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

[root@k8s-master ~]# systemctl restart docker

[root@k8s-master ~]# docker info

3.3.2 add the Alibaba Cloud YUM repository

[root@k8s-master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

3.3.3 install kubeadm, kubelet and kubectl

Releases are frequent, so the version is pinned explicitly here:

[root@k8s-master ~]# yum install -y kubelet-1.19.0 kubeadm-1.19.0 kubectl-1.19.0

[root@k8s-master ~]# systemctl enable kubelet

3.4 deployment of Kubernetes Master

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/#config-file

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/#initializing-your-control-plane-node

Execute at 10.0.0.61 (Master).

[root@k8s-master ~]# kubeadm init \

--apiserver-advertise-address=10.0.0.61 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.19.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--ignore-preflight-errors=all

- --apiserver-advertise-address: the address the API server advertises to the rest of the cluster, here the master IP

- --image-repository: the default registry k8s.gcr.io cannot be reached from China, so the Alibaba Cloud mirror is specified instead

- --kubernetes-version: the K8s version, which must match the packages installed above

- --service-cidr: the cluster-internal virtual network used for Service IPs, the unified entry point to Pods

- --pod-network-cidr: the Pod network, which must match the CNI manifest deployed below
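
The Service network, the Pod network and the node network (10.0.0.0/24 in this setup) should not overlap, otherwise routes become ambiguous. The following is a rough sketch for checking that with plain shell arithmetic before running kubeadm init. The function names ip_to_int, cidr_range and cidrs_overlap are hypothetical helpers, not part of kubeadm, and only IPv4 is handled.

```shell
# ip_to_int <a.b.c.d> prints the address as a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# cidr_range <a.b.c.d/n> prints "first last" as integers.
cidr_range() (
  base=$(ip_to_int "${1%/*}")
  bits=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
)

# cidrs_overlap <cidr1> <cidr2> succeeds when the ranges share any address.
cidrs_overlap() (
  # After this line: $1/$2 are first/last of cidr1, $3/$4 of cidr2.
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Usage with the values from this section:
#   cidrs_overlap 10.96.0.0/12 10.244.0.0/16 && echo "service and pod CIDRs overlap"
```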

Or bootstrap from a configuration file:

[root@k8s-master ~]# vi kubeadm.conf

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: v1.19.0

imageRepository: registry.aliyuncs.com/google_containers

networking:

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.96.0.0/12

[root@k8s-master ~]# kubeadm init --config kubeadm.conf --ignore-preflight-errors=all

[root@k8s-master ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.65/library/tomcat <none> b9479c1bc4c6 12 months ago 459MB

10.0.0.65/library/nginx <none> c345bbeb41b9 12 months ago 397MB

calico/node v3.16.5 c1fa37765208 12 months ago 163MB

calico/pod2daemon-flexvol v3.16.5 178cfd5d2400 12 months ago 21.9MB

calico/cni v3.16.5 9165569ec236 12 months ago 133MB

registry.aliyuncs.com/google_containers/kube-proxy v1.19.0 bc9c328f379c 15 months ago 118MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.19.0 09d665d529d0 15 months ago 111MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.19.0 1b74e93ece2f 15 months ago 119MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.19.0 cbdc8369d8b1 15 months ago 45.7MB

registry.aliyuncs.com/google_containers/etcd 3.4.9-1 d4ca8726196c 17 months ago 253MB

registry.aliyuncs.com/google_containers/coredns 1.7.0 bfe3a36ebd25 17 months ago 45.2MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 21 months ago 683kB

kubeadm init workflow

kubeadm deploys the control-plane components as containers (from images). The kubelet is not containerized; it runs as a binary daemon managed by systemctl.

kubeadm init works through the following steps:

1. Check the installation environment, for example whether swap is off and whether the machine meets the hardware requirements

2. Download the images (kubeadm config images pull)

3. Generate certificates and save them under /etc/kubernetes/pki (for K8s and etcd)

4. [kubeconfig] Generate the kubeconfig files that the other components use to connect to the apiserver

5. [kubelet-start] Generate the kubelet configuration file and start the kubelet

6. [control-plane] Start the master node components

7. Store some configuration in ConfigMaps, which other nodes pull when they join

8. [mark-control-plane] Taint the master node so that Pods are not scheduled on it

9. [bootstrap-token] Set up the bootstrap token so that certificates are issued to kubelets automatically

10. [addons] Install the CoreDNS and kube-proxy add-ons

Finally, copy the kubeconfig used by kubectl to the default path.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubeadm init also prints the command that other nodes use to join the master:

kubeadm join 10.0.0.61:6443 --token 3abvx6.5em4yxz9fwzsroyi \

--discovery-token-ca-cert-hash sha256:a2ce75ca1be016a9a679e2c5c4e9e4f8c97ed148dad6936edda6947a7e33aa97

Problems encountered

# Problem: Controller Manager and scheduler status are unhealthy

[root@k8s-master kubernetes]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

# Solution:

# Comment out --port=0 in kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests

[root@k8s-master kubernetes]# cd

[root@k8s-master ~]# cd /etc/kubernetes/manifests/

[root@k8s-master manifests]# ll

total 16

-rw------- 1 root root 2109 Nov 21 23:18 etcd.yaml

-rw------- 1 root root 3166 Nov 21 23:18 kube-apiserver.yaml

-rw------- 1 root root 2858 Nov 21 23:18 kube-controller-manager.yaml

-rw------- 1 root root 1413 Nov 21 23:18 kube-scheduler.yaml

[root@k8s-master manifests]# vim kube-controller-manager.yaml

[root@k8s-master ~]# cat /etc/kubernetes/manifests/kube-controller-manager.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

component: kube-controller-manager

tier: control-plane

name: kube-controller-manager

namespace: kube-system

spec:

containers:

- command:

- kube-controller-manager

- --allocate-node-cidrs=true

- --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf

- --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf

- --bind-address=127.0.0.1

- --client-ca-file=/etc/kubernetes/pki/ca.crt

- --cluster-cidr=10.244.0.0/16

- --cluster-name=kubernetes

- --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt

- --cluster-signing-key-file=/etc/kubernetes/pki/ca.key

- --controllers=*,bootstrapsigner,tokencleaner

- --kubeconfig=/etc/kubernetes/controller-manager.conf

- --leader-elect=true

- --node-cidr-mask-size=24

#- --port=0

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

- --root-ca-file=/etc/kubernetes/pki/ca.crt

- --service-account-private-key-file=/etc/kubernetes/pki/sa.key

- --service-cluster-ip-range=10.96.0.0/12

- --use-service-account-credentials=true

...

[root@k8s-master manifests]# vim kube-scheduler.yaml

[root@k8s-master ~]# cat /etc/kubernetes/manifests/kube-scheduler.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

component: kube-scheduler

tier: control-plane

name: kube-scheduler

namespace: kube-system

spec:

containers:

- command:

- kube-scheduler

- --authentication-kubeconfig=/etc/kubernetes/scheduler.conf

- --authorization-kubeconfig=/etc/kubernetes/scheduler.conf

- --bind-address=127.0.0.1

- --kubeconfig=/etc/kubernetes/scheduler.conf

- --leader-elect=true

#- --port=0

image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.19.0

imagePullPolicy: IfNotPresent

...

# Problem solved

[root@k8s-master manifests]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}
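
The manual vim edit above can also be scripted. The helper below is a sketch under assumptions: comment_out_port_flag is a hypothetical name, and it is demonstrated on a scratch file containing a fragment shaped like the manifests shown above. It writes the result back through a temporary file outside the manifests directory, so that no stray backup file is left in the directory the kubelet reads static Pod manifests from.

```shell
# comment_out_port_flag <manifest>
# Turns the line "- --port=0" into "# - --port=0", keeping its indentation.
comment_out_port_flag() (
  tmp=$(mktemp)
  sed -E 's/^([[:space:]]*)(- --port=0)[[:space:]]*$/\1# \2/' "$1" > "$tmp" \
    && cat "$tmp" > "$1"   # cat keeps the manifest's owner and mode
  rm -f "$tmp"
)

# Demonstration on a scratch fragment, not on a live manifest.
manifest_demo=$(mktemp)
cat > "$manifest_demo" << 'EOF'
    - --leader-elect=true
    - --port=0
    image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.19.0
EOF
comment_out_port_flag "$manifest_demo"
cat "$manifest_demo"
```

On the master the function would be applied to kube-controller-manager.yaml and kube-scheduler.yaml in /etc/kubernetes/manifests.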

3.5 join Kubernetes Node

Run on 10.0.0.62/63 (the Nodes).

To add a new node to the cluster, run the kubeadm join command printed by kubeadm init:

[root@k8s-node1 ~]# kubeadm join 10.0.0.61:6443 --token 3abvx6.5em4yxz9fwzsroyi \

--discovery-token-ca-cert-hash sha256:a2ce75ca1be016a9a679e2c5c4e9e4f8c97ed148dad6936edda6947a7e33aa97

Problems encountered:

# Problem: after running kubeadm join on a node, kubectl get nodes on that node fails as follows

[root@k8s-node2 ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# Solution: copy the kubeconfig (/etc/kubernetes/admin.conf on the master) to the node, either into ~/.kube/ as config or into the /etc/kubernetes directory

[root@k8s-master ~]# scp .kube/config root@10.0.0.62:~

The authenticity of host '10.0.0.62 (10.0.0.62)' can't be established.

ECDSA key fingerprint is SHA256:OWuZy2NmY2roM1RqIamUATXYA+wqXai6nqsA1LesvjU.

ECDSA key fingerprint is MD5:04:af:eb:98:a5:8d:e0:a4:b4:16:29:80:8e:f9:e6:fc.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.0.0.62' (ECDSA) to the list of known hosts.

root@10.0.0.62's password:

Permission denied, please try again.

root@10.0.0.62's password:

config 100% 5565 1.3MB/s 00:00

[root@k8s-node1 ~]# mv config .kube/

or

[root@k8s-node2 ~]# mv admin.conf /etc/kubernetes/

[root@k8s-node2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 11h v1.19.0

k8s-node1 Ready <none> 11h v1.19.0

k8s-node2 Ready <none> 11h v1.19.0

[root@k8s-node2 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

# Problem solved

The default token is valid for 24 hours. Once it expires it can no longer be used, and a new token has to be created:

$ kubeadm token create

$ kubeadm token list

$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924

$ kubeadm join 192.168.31.61:6443 --token nuja6n.o3jrhsffiqs9swnu --discovery-token-ca-cert-hash sha256:63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924

Or generate the complete join command in one step: kubeadm token create --print-join-command

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-join/
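
The openssl pipeline used for the CA certificate hash is easier to reuse when wrapped in a function. This is a sketch: ca_cert_hash is a hypothetical name, the pipeline is the one from the text with -noout added so that only the public key is passed on, and it assumes an RSA CA key because it goes through openssl rsa.

```shell
# ca_cert_hash <ca.crt>
# Prints the SHA-256 digest of the certificate's public key (DER-encoded),
# which is the value expected after "sha256:" in
# --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the master:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```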

3.6 deployment container network (CNI)

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/#pod-network

Note: only one of the following network components needs to be deployed. Calico is recommended.

Calico is a pure Layer 3 data center networking solution. It supports a wide range of platforms, including Kubernetes and OpenStack.

On each compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that handles data forwarding. Each vRouter advertises the routes of the workloads running on it to the rest of the Calico network over BGP.

Calico also implements Kubernetes network policy and provides ACL functionality.

https://docs.projectcalico.org/getting-started/kubernetes/quickstart

$ wget https://docs.projectcalico.org/manifests/calico.yaml

After downloading, change the Pod network defined in it (CALICO_IPV4POOL_CIDR) to match the one passed to kubeadm init (10.244.0.0/16).
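
The CIDR change can be made with sed instead of an editor. The sketch below rests on an assumption about the manifest layout: that CALICO_IPV4POOL_CIDR ships commented out with a default of 192.168.0.0/16, as in the two-line sample used here. If the downloaded calico.yaml looks different, the patterns need adjusting. set_calico_pool_cidr is a hypothetical helper name.

```shell
# set_calico_pool_cidr <calico.yaml> <pod-cidr>
# Uncomments the CALICO_IPV4POOL_CIDR entry and sets its value.
set_calico_pool_cidr() (
  tmp=$(mktemp)
  sed -E \
    -e 's,^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)[[:space:]]*$,\1\2,' \
    -e 's,^([[:space:]]*)#   (value: )"192\.168\.0\.0/16"[[:space:]]*$,\1  \2"'"$2"'",' \
    "$1" > "$tmp" && cat "$tmp" > "$1"
  rm -f "$tmp"
)

# Demonstration on a sample fragment (assumed layout, see above).
calico_demo=$(mktemp)
cat > "$calico_demo" << 'EOF'
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
EOF
set_calico_pool_cidr "$calico_demo" 10.244.0.0/16
cat "$calico_demo"
```

After running it against the real file, grep -A1 CALICO_IPV4POOL_CIDR calico.yaml is a quick way to confirm the result before applying the manifest.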

Apply the manifest after modifying it:

[root@k8s-master ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-z6npb 1/1 Running 0 8m18s

calico-node-4pwdc 1/1 Running 0 8m18s

calico-node-9r6zd 1/1 Running 0 8m18s

calico-node-vqzdj 1/1 Running 0 8m18s

coredns-6d56c8448f-gcgrh 1/1 Running 0 26m

coredns-6d56c8448f-tbsmv 1/1 Running 0 26m

etcd-k8s-master 1/1 Running 0 26m

kube-apiserver-k8s-master 1/1 Running 0 26m

kube-controller-manager-k8s-master 1/1 Running 0 26m

kube-proxy-5qpgc 1/1 Running 0 22m

kube-proxy-q2xfq 1/1 Running 0 22m

kube-proxy-tvzpd 1/1 Running 0 26m

kube-scheduler-k8s-master 1/1 Running 0 26m

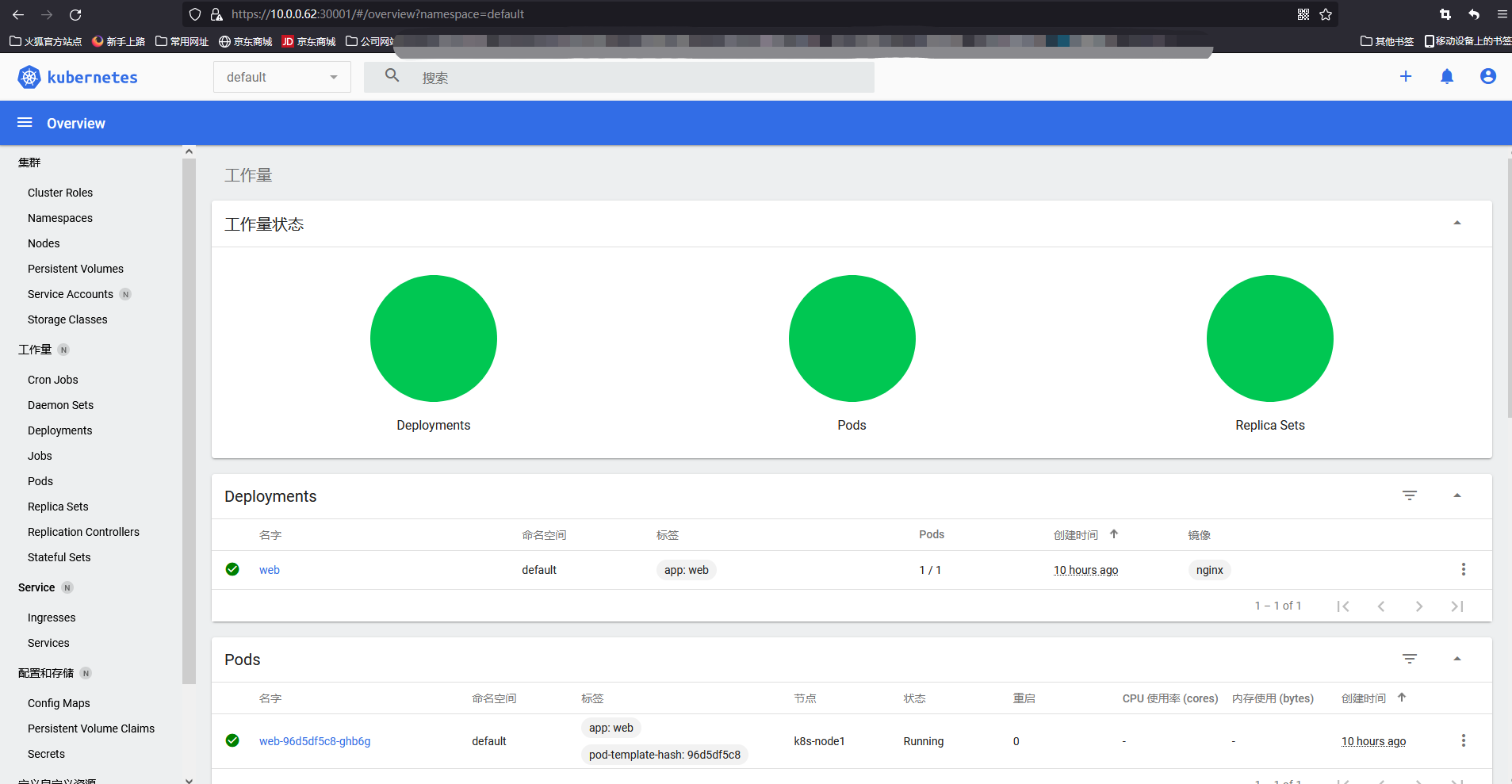

3.7 testing kubernetes clusters

- Verify Pod operation

- Verify Pod network communication

- Verify DNS resolution

Create a pod in the Kubernetes cluster and verify that it works normally:

$ kubectl create deployment nginx --image=nginx

$ kubectl expose deployment nginx --port=80 --type=NodePort

$ kubectl get pod,svc

[root@k8s-master ~]# kubectl create deployment web --image=nginx

deployment.apps/web created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-96d5df5c8-ghb6g 0/1 ContainerCreating 0 17s

[root@k8s-master ~]# kubectl expose deployment web --port=80 --target-port=80 --type=NodePort

service/web exposed

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-96d5df5c8-ghb6g 1/1 Running 0 2m38s

[root@k8s-master ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/web-96d5df5c8-ghb6g 1/1 Running 0 2m48s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 12h

service/web NodePort 10.96.132.243 <none> 80:31340/TCP 16s

# View pod log

[root@k8s-master ~]# kubectl logs web-96d5df5c8-ghb6g -f

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2021/11/22 03:30:28 [notice] 1#1: using the "epoll" event method

2021/11/22 03:30:28 [notice] 1#1: nginx/1.21.4

2021/11/22 03:30:28 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)

2021/11/22 03:30:28 [notice] 1#1: OS: Linux 3.10.0-1160.45.1.el7.x86_64

2021/11/22 03:30:28 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2021/11/22 03:30:28 [notice] 1#1: start worker processes

2021/11/22 03:30:28 [notice] 1#1: start worker process 31

2021/11/22 03:30:28 [notice] 1#1: start worker process 32

10.0.0.62 - - [22/Nov/2021:03:34:04 +0000] "GET / HTTP/1.1" 200 615 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

2021/11/22 03:34:04 [error] 31#31: *1 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 10.0.0.62, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "10.0.0.62:31340", referrer: "http://10.0.0.62:31340/"

10.0.0.62 - - [22/Nov/2021:03:34:04 +0000] "GET /favicon.ico HTTP/1.1" 404 153 "http://10.0.0.62:31340/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

10.0.0.62 - - [22/Nov/2021:03:38:29 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

10.0.0.62 - - [22/Nov/2021:03:38:30 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

10.244.169.128 - - [22/Nov/2021:03:40:10 +0000] "GET / HTTP/1.1" 200 615 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

10.244.169.128 - - [22/Nov/2021:03:40:10 +0000] "GET /favicon.ico HTTP/1.1" 404 153 "http://10.0.0.63:31340/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

2021/11/22 03:40:10 [error] 31#31: *3 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 10.244.169.128, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "10.0.0.63:31340", referrer: "http://10.0.0.63:31340/"

10.244.169.128 - - [22/Nov/2021:03:40:14 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

10.244.169.128 - - [22/Nov/2021:03:40:15 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:94.0) Gecko/20100101 Firefox/94.0" "-"

# Verify pod network communication

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-96d5df5c8-ghb6g 1/1 Running 0 11m 10.244.36.66 k8s-node1 <none> <none>

[root@k8s-node1 kubernetes]# ping 10.244.36.66

PING 10.244.36.66 (10.244.36.66) 56(84) bytes of data.

64 bytes from 10.244.36.66: icmp_seq=1 ttl=64 time=0.087 ms

64 bytes from 10.244.36.66: icmp_seq=2 ttl=64 time=0.063 ms

64 bytes from 10.244.36.66: icmp_seq=3 ttl=64 time=0.145 ms

^C

--- 10.244.36.66 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.063/0.098/0.145/0.035 ms

# Verify DNS resolution

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 12h

web NodePort 10.96.132.243 <none> 80:31340/TCP 11m

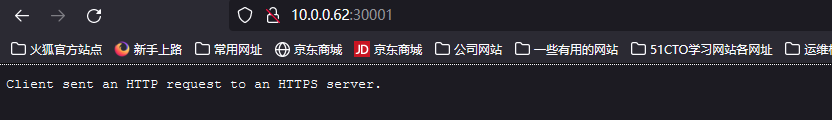

Access address: http://NodeIP:Port

3.8 deploying Dashboard

$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

By default the Dashboard can only be accessed from inside the cluster. Change its Service to type NodePort to expose it externally:

$ vi recommended.yaml

...

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

nodePort: 30001

selector:

k8s-app: kubernetes-dashboard

type: NodePort

...

$ kubectl apply -f recommended.yaml

$ kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6b4884c9d5-gl8nr 1/1 Running 0 13m

kubernetes-dashboard-7f99b75bf4-89cds 1/1 Running 0 13m

[root@k8s-master ~]# vim kubernertes-dashboard.yaml

[root@k8s-master ~]# kubectl apply -f kubernertes-dashboard.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b59f7d4df-jxb4b 1/1 Running 0 3m28s

kubernetes-dashboard-5dbf55bd9d-zpr7t 1/1 Running 0 3m28s

[root@k8s-master ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.108.245.81 <none> 8000/TCP 5h47m

kubernetes-dashboard NodePort 10.99.22.58 <none> 443:30001/TCP 5h47m

Access address: https://NodeIP:30001

The client must use HTTPS to log in.

Create a service account and bind it to the built-in cluster-admin cluster role:

# Create user

$ kubectl create serviceaccount dashboard-admin -n kube-system

# User authorization

$ kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

# Get user Token

$ kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

[root@k8s-master ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@k8s-master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@k8s-master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-wnt6h

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: f2277e2c-4c4d-401c-a0fa-f48110f6e259

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImVJVEZNbVB3Y0xXMWVWMU1xYk9RdUVPdFhvM1ByTUdZY2xjS0I0anhjMlkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4td250NmgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZjIyNzdlMmMtNGM0ZC00MDFjLWEwZmEtZjQ4MTEwZjZlMjU5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.hcxJhsSGcgwpoQnRqeWIZLFRZM4kp0itTPYJjFuSpUOLObiLfBcQqXq4zCNAAYI3axpilv0kQA5A-l_clqpTmEKQzSa2wwx5KA-V-KNHVJ9animI691INL_5Qe9O7qyF6QybnOeVXm6K-VaC2a-njAigF_0VcSweX3VdVg9Qr0ck_RsbyerP-Hhxuo-6uep_V0AfeD4ex3OE8DTlZtktAjvvalm0hNPMq-cWVdPENe-ml7Gk0NC8iyNGNbvkwk4-z3vYj2C_4Vx3JxXTAiPRqneg_NSQKsR6H7lvZ6bPvG1OW1CeZ52JiFVErdSowRh32G5sIoF7dzQFBLYiAk6mYw

Log in to the Dashboard using the output token.

View pod log

Some problems encountered during setup

1. calico-node Pod status is CrashLoopBackOff

- There is a problem with the configuration file

- The host network interface has an unconventional name; set the interface explicitly in calico.yaml:

- name: IP_AUTODETECTION_METHOD

value: "interface=eth0"

2. Image downloads are slow or time out (ImagePullBackOff)

Pull the images manually with docker pull on each node

3. kubeadm init or kubeadm join fails

kubeadm reset clears the state left by kubeadm on the current machine, after which the command can be retried

4. Troubleshooting a calico Pod that fails to start:

kubectl describe pod calico-node-b2lkr -n kube-system

kubectl logs calico-node-b2lkr -n kube-system

5. kubectl get cs health check fails

vi /etc/kubernetes/manifests/kube-scheduler.yaml

# comment out the --port=0 parameter

kubectl delete pod kube-scheduler-k8s-master -n kube-system

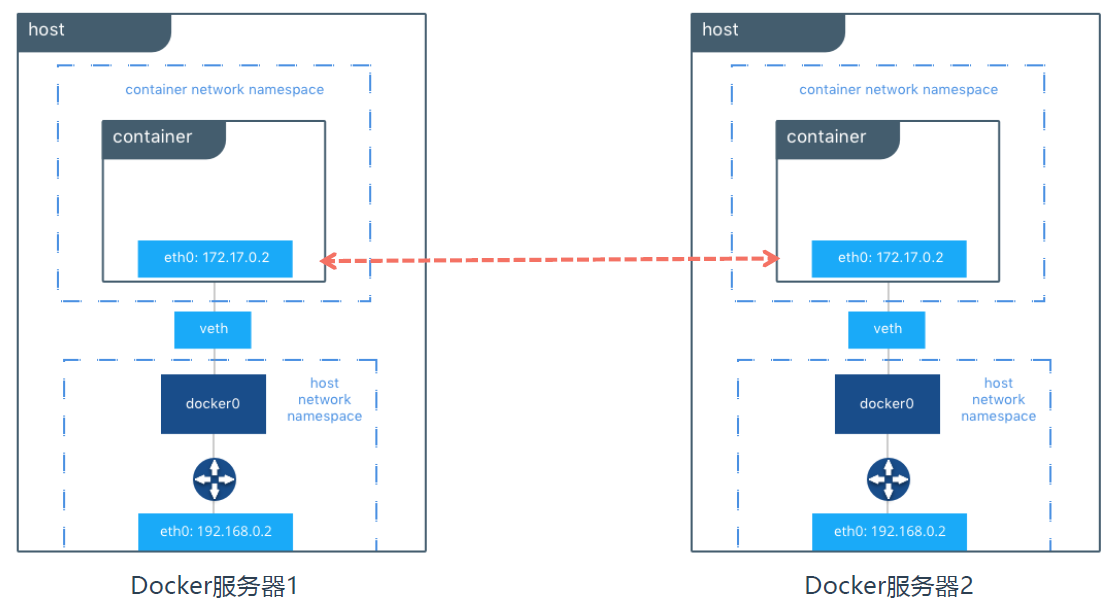

4. K8s CNI network model

How do containers on two Docker hosts reach each other?

Existing problems:

1. The networks of the two Docker hosts are independent of each other

2. Container IPs across multiple Docker hosts have no unified management

3. The container subnet on each Docker host is internal to that host

For container 1 to reach container 2, traffic has to go through the host network: container1 -> docker1 <-> docker2 -> container2

Technologies that implement this cross-host container communication include Flannel and Calico.

Criteria for choosing a CNI network component:

- Cluster scale (number of servers)

- Supported features

- Performance

Q:

1. How are the subnets of the K8s nodes managed centrally so that every container gets a different IP address?

2. How does a sender know which Docker host to forward a packet to?

3. How is the forwarding implemented (from a container on Docker host 1 to a container on another Docker host)?

A:

1. Assign a unique subnet to each Docker host

2. Keep a record of which subnet each Docker host is responsible for

3. Use iptables, or use the host as a router and configure its routing table
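
The first answer, one unique subnet per host, can be illustrated with simple arithmetic: a /16 Pod network holds 256 non-overlapping /24 subnets, one per node. The function below is only an illustration of that idea, with a hypothetical name node_subnet. In a real cluster the network component does the allocation itself and may use a different block size.

```shell
# node_subnet <pool-cidr> <node-index>
# Prints the /24 subnet for the given node index inside a /16 pool.
node_subnet() (
  cidr=$1 index=$2
  IFS=./
  set -- $cidr   # $1..$4 are the octets, $5 is the prefix length
  if [ "$5" != 16 ] || [ "$index" -lt 0 ] || [ "$index" -gt 255 ]; then
    echo "expects a /16 pool and a node index from 0 to 255" >&2
    exit 1
  fi
  echo "$1.$2.$index.0/24"
)

# One subnet per host in this guide's three-node cluster:
for i in 0 1 2; do
  node_subnet 10.244.0.0/16 "$i"
done
```

With such a plan, the record from the second answer is just a table of node IP to subnet, and the forwarding from the third answer becomes one route per remote subnet via the owning node.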

K8s uses a flat network.

That is, any network component deployed must meet the following requirements:

- One IP per Pod

- All Pods can communicate directly with any other Pod

- All nodes can communicate directly with all Pods

- The IP address a Pod sees for itself is the same address that other Pods and nodes use to reach it

Mainstream network components include Flannel and Calico.

Calico

Calico is a pure Layer 3 data center network solution. Calico supports a wide range of platforms, including Kubernetes, OpenStack, etc.

On each compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that is responsible for data forwarding. Each vRouter advertises the routing information of the workloads running on it to the whole Calico network over BGP.

In addition, the Calico project implements Kubernetes network policy and provides ACL functionality.

Calico deployment:

wget https://docs.projectcalico.org/manifests/calico.yaml

After downloading, you also need to modify the Pod network defined in it (CALICO_IPV4POOL_CIDR) so that it matches the Pod network specified with kubeadm init (--pod-network-cidr).

kubectl apply -f calico.yaml

kubectl get pods -n kube-system
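
The requirement that CALICO_IPV4POOL_CIDR match the Pod network given to kubeadm init can be checked before the manifest is applied. The following is a minimal sketch of such a check; the function name `check_pod_cidr` and all three CIDR values are assumptions chosen for illustration, so substitute the values from your own cluster.

```python
import ipaddress


def check_pod_cidr(kubeadm_pod_cidr, calico_pool_cidr, service_cidr):
    """Return a list of problems; an empty list means the values are consistent."""
    pod = ipaddress.ip_network(kubeadm_pod_cidr)
    pool = ipaddress.ip_network(calico_pool_cidr)
    service = ipaddress.ip_network(service_cidr)
    problems = []
    if pod != pool:
        problems.append(f"Calico pool {pool} differs from kubeadm Pod CIDR {pod}")
    if pool.overlaps(service):
        problems.append(f"Calico pool {pool} overlaps Service CIDR {service}")
    return problems


# Assumed example values: the pool was left at a different range than the
# Pod network that was passed to kubeadm init.
for problem in check_pod_cidr("10.244.0.0/16", "192.168.0.0/16", "10.96.0.0/12"):
    print(problem)
```

In this example the check reports one problem, because the Calico pool and the kubeadm Pod network differ.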

Flannel

Flannel is a network component originally developed by CoreOS. Flannel provides a globally unique IP for each Pod. Flannel uses etcd to store the mapping between Pod subnets and Node IPs. The flanneld daemon runs on each host and is responsible for maintaining the etcd information and routing packets.

Flannel deployment:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.11.0-amd64#g" kube-flannel.yml

5. kubectl command line management tool

kubectl uses a kubeconfig authentication file to connect to the K8s cluster. The kubeconfig file can be generated with the kubectl config command.

The kubeconfig file used to authenticate to K8s:

    apiVersion: v1
    kind: Config
    # Clusters
    clusters:
    - cluster:
        certificate-authority-data:
        server: https://192.168.31.61:6443
      name: kubernetes
    # Contexts
    contexts:
    - context:
        cluster: kubernetes
        user: kubernetes-admin
      name: kubernetes-admin@kubernetes
    # Current context
    current-context: kubernetes-admin@kubernetes
    # Client authentication
    users:
    - name: kubernetes-admin
      user:
        client-certificate-data:
        client-key-data:
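
To see how the sections of the kubeconfig file relate to each other, the sketch below follows current-context to its context and from there to the cluster and user entries. It is an illustration only, not kubectl's actual implementation: the file is written as a Python dict so that no YAML parser is needed, the certificate data is omitted, and the helper names `by_name` and `resolve_current_context` are hypothetical.

```python
# A kubeconfig with the same shape as the file above, written as a Python
# dict so the sketch needs no YAML parser. Certificate data is omitted.
KUBECONFIG = {
    "clusters": [
        {"name": "kubernetes",
         "cluster": {"server": "https://192.168.31.61:6443"}},
    ],
    "contexts": [
        {"name": "kubernetes-admin@kubernetes",
         "context": {"cluster": "kubernetes", "user": "kubernetes-admin"}},
    ],
    "current-context": "kubernetes-admin@kubernetes",
    "users": [{"name": "kubernetes-admin", "user": {}}],
}


def by_name(entries, name):
    """Return the entry of a kubeconfig list whose 'name' matches."""
    for entry in entries:
        if entry["name"] == name:
            return entry
    raise KeyError(name)


def resolve_current_context(config):
    """Follow current-context -> context -> (cluster server, user name)."""
    context = by_name(config["contexts"], config["current-context"])["context"]
    cluster = by_name(config["clusters"], context["cluster"])["cluster"]
    user = by_name(config["users"], context["user"])
    return cluster["server"], user["name"]


print(resolve_current_context(KUBECONFIG))
```

The context is only a pair of names: it selects which cluster entry supplies the API server address and which user entry supplies the client credentials.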

Official document reference address: https://kubernetes.io/zh/docs/reference/kubectl/overview/

| Type | Command | Description |
|---|---|---|
| Basic command | create | Create a resource from a file or standard input |
| Basic command | expose | Create a Service for a Deployment or Pod |
| Basic command | run | Run a specific image in the cluster |
| Basic command | set | Set specific features on an object |
| Basic command | explain | Show resource documentation |
| Basic command | get | Display one or more resources |
| Basic command | edit | Edit a resource using the system editor |
| Basic command | delete | Delete resources by file name, standard input, resource name, or label selector |
| Deployment command | rollout | Manage the rollout of Deployment and DaemonSet resources (status, rollout history, rollback, etc.) |
| Deployment command | rolling-update | |
| Deployment command | scale | Scale the number of Pods up or down for Deployment, ReplicaSet, RC, or Job resources |
| Deployment command | autoscale | Configure autoscaling rules for Deployment, RS and RC (depends on metrics-server and HPA) |
| Cluster management command | certificate | Modify certificate resources |
| Cluster management command | cluster-info | Display cluster information |
| Cluster management command | top | View resource utilization (depends on metrics-server) |
| Cluster management command | cordon | Mark a node as unschedulable |
| Cluster management command | uncordon | Mark a node as schedulable |
| Cluster management command | drain | Evict the applications on a node in preparation for offline maintenance |
| Cluster management command | taint | Modify the taints on a node |
| Troubleshooting and debugging command | describe | Show detailed information about a resource |
| Troubleshooting and debugging command | logs | View the logs of a container in a Pod. If the Pod has multiple containers, use the -c parameter to specify the container name |
| Troubleshooting and debugging command | attach | Attach to a container in a Pod |
| Troubleshooting and debugging command | exec | Execute a command inside a container |
| Troubleshooting and debugging command | port-forward | Create a local port mapping to a Pod |
| Troubleshooting and debugging command | proxy | Create a proxy to the Kubernetes API server |
| Troubleshooting and debugging command | cp | Copy files or directories to or from a container |
| Advanced command | apply | Create or update a resource from a file or standard input |
| Advanced command | patch | Update some fields of a resource using a patch |
| Advanced command | replace | Replace a resource from a file or standard input |
| Advanced command | convert | Convert object definitions between different API versions |
| Settings command | label | Set and update labels on resources |
| Settings command | annotate | Set and update annotations on resources |
| Settings command | completion | kubectl shell auto-completion: source <(kubectl completion bash) (depends on bash-completion) |
| Other command | api-resources | List all resource types |
| Other command | api-versions | Print supported API versions |
| Other command | config | Modify the kubeconfig file (used to access the API, for example to configure authentication information) |
| Other command | help | Help for any command |
| Other command | version | View the kubectl and K8s versions |

Resources that kubectl create can create:

Available Commands:

| Command | Description |
|---|---|
| clusterrole | Create a ClusterRole. |
| clusterrolebinding | Create a ClusterRoleBinding for a particular ClusterRole |
| configmap | Create a configmap from a local file, directory or literal value |
| cronjob | Create a cronjob with the specified name. |
| deployment | Create a deployment with the specified name. |
| job | Create a job with the specified name. |
| namespace | Create a namespace with the specified name |
| poddisruptionbudget | Create a pod disruption budget with the specified name. |
| priorityclass | Create a priorityclass with the specified name. |
| quota | Create a quota with the specified name. |
| role | Create a role with single rule. |
| rolebinding | Create a RoleBinding for a particular Role or ClusterRole |
| secret | Create a secret using specified subcommand |
| service | Create a service using specified subcommand. |
| serviceaccount | Create a service account with the specified name |

[root@k8s-master ~]# yum install bash-completion -y

[root@k8s-master ~]# source <(kubectl completion bash)

If completion does not work, run bash to start a new shell and then run the source command again. Completion should work after that.

[root@k8s-master ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.0", GitCommit:"e19964183377d0ec2052d1f1fa930c4d7575bd50", GitTreeState:"clean", BuildDate:"2020-08-26T14:30:33Z", GoVersion:"go1.15", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.0", GitCommit:"e19964183377d0ec2052d1f1fa930c4d7575bd50", GitTreeState:"clean", BuildDate:"2020-08-26T14:23:04Z", GoVersion:"go1.15", Compiler:"gc", Platform:"linux/amd64"}

Quick deployment of a website

Deploy an image using the Deployment controller:

kubectl create deployment web --image=lizhenliang/java-demo

kubectl get deploy,pods

Expose the Pod using Service:

kubectl expose deployment web --port=80 --type=NodePort --target-port=8080 --name=web

kubectl get service

Access application:

http://NodeIP:Port # The port is randomly assigned; look it up with kubectl get svc

    [root@k8s-master ~]# kubectl create deployment my-dep --image=lizhenliang/demo --replicas=3
    deployment.apps/my-dep created
    [root@k8s-master ~]# kubectl get pods
    NAME                     READY   STATUS              RESTARTS   AGE
    my-dep-99596b7c8-8tt6f   0/1     ContainerCreating   0          26s
    my-dep-99596b7c8-9xgn4   0/1     ImagePullBackOff    0          26s
    my-dep-99596b7c8-lpbzt   0/1     ContainerCreating   0          26s
    web-96d5df5c8-ghb6g      1/1     Running             0          2d18h
    [root@k8s-master ~]# kubectl get pods
    NAME                     READY   STATUS              RESTARTS   AGE
    my-dep-99596b7c8-8tt6f   0/1     ImagePullBackOff    0          64s
    my-dep-99596b7c8-9xgn4   0/1     ImagePullBackOff    0          64s
    my-dep-99596b7c8-lpbzt   0/1     ContainerCreating   0          64s
    web-96d5df5c8-ghb6g      1/1     Running             0          2d18h
    [root@k8s-master ~]# kubectl get deployments
    NAME     READY   UP-TO-DATE   AVAILABLE   AGE
    my-dep   0/3     3            0           96s
    web      1/1     1            1           2d18h
    [root@k8s-master ~]# kubectl delete deployments my-dep
    deployment.apps "my-dep" deleted
    [root@k8s-master ~]# kubectl get deployments
    NAME   READY   UP-TO-DATE   AVAILABLE   AGE
    web    1/1     1            1           2d18h
    [root@k8s-master ~]# kubectl get pods
    NAME                  READY   STATUS    RESTARTS   AGE
    web-96d5df5c8-ghb6g   1/1     Running   0          2d18h
    [root@k8s-master ~]# kubectl create deployment my-dep --image=lizhenliang/java-demo --replicas=3
    deployment.apps/my-dep created
    [root@k8s-master ~]# kubectl get pods
    NAME                      READY   STATUS              RESTARTS   AGE
    my-dep-5f8dfc8c78-dvxp8   0/1     ContainerCreating   0          7s
    my-dep-5f8dfc8c78-f4ln4   0/1     ContainerCreating   0          7s
    my-dep-5f8dfc8c78-j9fqp   0/1     ContainerCreating   0          7s
    web-96d5df5c8-ghb6g       1/1     Running             0          2d18h
    [root@k8s-master ~]# kubectl get pods
    NAME                      READY   STATUS              RESTARTS   AGE
    my-dep-5f8dfc8c78-dvxp8   0/1     ContainerCreating   0          48s
    my-dep-5f8dfc8c78-f4ln4   0/1     ContainerCreating   0          48s
    my-dep-5f8dfc8c78-j9fqp   0/1     ContainerCreating   0          48s
    web-96d5df5c8-ghb6g       1/1     Running             0          2d18h
    [root@k8s-master ~]# kubectl get pods
    NAME                      READY   STATUS    RESTARTS   AGE
    my-dep-5f8dfc8c78-dvxp8   1/1     Running   0          69s
    my-dep-5f8dfc8c78-f4ln4   1/1     Running   0          69s
    my-dep-5f8dfc8c78-j9fqp   1/1     Running   0          69s
    web-96d5df5c8-ghb6g       1/1     Running   0          2d18h
    [root@k8s-master ~]# kubectl expose deployment my-dep --port=80 --target-port=8080 --type=NodePort
    service/my-dep exposed
    [root@k8s-master ~]# kubectl get service
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        3d6h
    my-dep       NodePort    10.111.199.51   <none>        80:31734/TCP   18s
    web          NodePort    10.96.132.243   <none>        80:31340/TCP   2d18h
    [root@k8s-master ~]# kubectl get ep
    NAME         ENDPOINTS                                                 AGE
    kubernetes   10.0.0.61:6443                                            3d6h
    my-dep       10.244.169.134:8080,10.244.36.69:8080,10.244.36.70:8080   40s
    web          10.244.36.66:80                                           2d18h
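
The PORT(S) column of kubectl get service is where the randomly assigned port appears: in 80:31734/TCP, 80 is the Service port and 31734 is the NodePort. As a small sketch of how the access URL is put together, the hypothetical helper `node_port_url` below parses such a value; the node IP 10.0.0.62 is taken from the environment table, and any node IP of the cluster should work equally well.

```python
def node_port_url(ports_column, node_ip):
    """Build the access URL from a PORT(S) value such as '80:31734/TCP'."""
    mapping = ports_column.split("/")[0]         # drop the '/TCP' suffix
    _, separator, node_port = mapping.partition(":")
    if not separator:
        # A value such as '443/TCP' has no NodePort part.
        raise ValueError(f"{ports_column!r} has no NodePort")
    return f"http://{node_ip}:{int(node_port)}"


print(node_port_url("80:31734/TCP", "10.0.0.62"))
```

For the my-dep Service in the output above this yields http://10.0.0.62:31734. A ClusterIP Service such as kubernetes (443/TCP) has no NodePort, so the helper raises an error for it.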

Resource concepts

- Pod

  - Minimum deployment unit

  - A collection of containers

  - Containers in a Pod share a network namespace

  - Pods are short-lived

- Controllers: higher-level objects that deploy and manage Pods

  - Deployment: stateless application deployment

  - StatefulSet: stateful application deployment

  - DaemonSet: ensures that all nodes run the same Pod

  - Job: one-off task

  - CronJob: scheduled task

- Service

  - Prevents losing contact with Pods

  - Defines an access policy for a set of Pods

- Label: a tag attached to a resource, used to associate, query and filter objects

Label management

kubectl get pods --show-labels # view resource labels

kubectl get pods -l app=my-dep # list resources by label

    [root@k8s-master ~]# kubectl get pods --show-labels
    NAME                      READY   STATUS    RESTARTS   AGE     LABELS
    my-dep-5f8dfc8c78-dvxp8   1/1     Running   0          13m     app=my-dep,pod-template-hash=5f8dfc8c78
    my-dep-5f8dfc8c78-f4ln4   1/1     Running   0          13m     app=my-dep,pod-template-hash=5f8dfc8c78
    my-dep-5f8dfc8c78-j9fqp   1/1     Running   0          13m     app=my-dep,pod-template-hash=5f8dfc8c78
    web-96d5df5c8-ghb6g       1/1     Running   0          2d18h   app=web,pod-template-hash=96d5df5c8
    [root@k8s-master ~]# kubectl get pods -l app=my-dep
    NAME                      READY   STATUS    RESTARTS   AGE
    my-dep-5f8dfc8c78-dvxp8   1/1     Running   0          14m
    my-dep-5f8dfc8c78-f4ln4   1/1     Running   0          14m
    my-dep-5f8dfc8c78-j9fqp   1/1     Running   0          14m
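
The filtering done by -l can be pictured as a simple match on key=value pairs. The sketch below is a simplified model that handles equality-based selectors only (real label selectors also support other operators); the helper names `parse_selector` and `select` are hypothetical, and the Pod data is copied from the output above.

```python
def parse_selector(selector):
    """Turn 'app=my-dep,tier=web' into {'app': 'my-dep', 'tier': 'web'}."""
    pairs = (item.split("=", 1) for item in selector.split(",") if item)
    return {key.strip(): value.strip() for key, value in pairs}


def select(pods, selector):
    """Return the names of Pods whose labels contain every key=value pair."""
    wanted = parse_selector(selector)
    return [name for name, labels in pods.items()
            if all(labels.get(key) == value for key, value in wanted.items())]


# Pod names and labels as shown by kubectl get pods --show-labels.
PODS = {
    "my-dep-5f8dfc8c78-dvxp8": {"app": "my-dep", "pod-template-hash": "5f8dfc8c78"},
    "my-dep-5f8dfc8c78-f4ln4": {"app": "my-dep", "pod-template-hash": "5f8dfc8c78"},
    "my-dep-5f8dfc8c78-j9fqp": {"app": "my-dep", "pod-template-hash": "5f8dfc8c78"},
    "web-96d5df5c8-ghb6g": {"app": "web", "pod-template-hash": "96d5df5c8"},
}

print(select(PODS, "app=my-dep"))
```

A Service picks its backend Pods in the same way: its selector is a set of labels, and every Pod carrying all of them becomes an endpoint.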

- Namespace: logically isolates objects

Namespace

Namespaces were introduced for:

- Resource isolation

- Permission control at the namespace level

The -n parameter specifies the namespace.

    [root@k8s-master ~]# kubectl get namespaces
    NAME                   STATUS   AGE
    default                Active   3d7h
    kube-node-lease        Active   3d7h
    kube-public            Active   3d7h
    kube-system            Active   3d7h
    kubernetes-dashboard   Active   2d14h
    [root@k8s-master ~]# kubectl create namespace test
    namespace/test created
    [root@k8s-master ~]# kubectl get namespaces
    NAME                   STATUS   AGE
    default                Active   3d7h
    kube-node-lease        Active   3d7h
    kube-public            Active   3d7h
    kube-system            Active   3d7h
    kubernetes-dashboard   Active   2d15h
    test                   Active   8s
    [root@k8s-master ~]# kubectl create deployment my-dep --image=lizhenliang/java-demo --replicas=3 -n test
    deployment.apps/my-dep created
    [root@k8s-master ~]# kubectl get pods -n test
    NAME                      READY   STATUS              RESTARTS   AGE
    my-dep-5f8dfc8c78-58sdk   0/1     ContainerCreating   0          21s
    my-dep-5f8dfc8c78-77cld   0/1     ContainerCreating   0          21s
    my-dep-5f8dfc8c78-965w7   0/1     ContainerCreating   0          21s
    [root@k8s-master ~]# kubectl get pods -n default
    NAME                      READY   STATUS    RESTARTS   AGE
    my-dep-5f8dfc8c78-dvxp8   1/1     Running   0          62m
    my-dep-5f8dfc8c78-f4ln4   1/1     Running   0          62m
    my-dep-5f8dfc8c78-j9fqp   1/1     Running   0          62m
    web-96d5df5c8-ghb6g       1/1     Running   0          2d19h
    [root@k8s-master ~]# kubectl get pods,deployment -n test
    NAME                          READY   STATUS    RESTARTS   AGE
    pod/my-dep-5f8dfc8c78-58sdk   1/1     Running   0          69s
    pod/my-dep-5f8dfc8c78-77cld   1/1     Running   0          69s
    pod/my-dep-5f8dfc8c78-965w7   1/1     Running   0          69s
    NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/my-dep   3/3     3            3           69s

    [root@k8s-master ~]# kubectl edit svc my-dep
    # Please edit the object below. Lines beginning with a '#' will be ignored,
    # and an empty file will abort the edit. If an error occurs while saving this file will be
    # reopened with the relevant failures.
    #
    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: "2021-11-24T21:47:28Z"
      labels:
        app: my-dep
      name: my-dep
      namespace: default
      resourceVersion: "207081"
      selfLink: /api/v1/namespaces/default/services/my-dep
      uid: 34ae1f85-94a5-4c67-bfb4-9f73e2277f55
    spec:
      clusterIP: 10.111.199.51
      externalTrafficPolicy: Cluster
      ports:
      - nodePort: 31734
        port: 80
        protocol: TCP
        targetPort: 8080
      selector:
        app: my-dep
      sessionAffinity: None
      type: NodePort
    status:
      loadBalancer: {}

Homework:

1. Build a K8s cluster using kubeadm

2. Create a new namespace and create a Pod in that namespace

- Namespace name: cka

- Pod name: pod-01

- Image: nginx

3. Create a Deployment and expose it with a Service

- Name: liang-666

- Image: nginx

4. List the Pods with the specified label in the given namespace

- Namespace name: kube-system

- Label: k8s-app=kube-dns