Introduction to this article

This article prohibits others from reprinting. If there is infringement, take it seriously!

Doraemon: walking with me 2 is one of the works commemorating the 50th anniversary of Doraemon. It was released in mainland China on May 28, 2021.

Doraemon series is A story that accompanied me and even several generations of people. Over the past 50 years, Mr. Fujiko F. buerxiong has created countless novel props, such as bamboo dragonflies, arbitrary doors, time machines and so on, so that Da Xiong and his friends have experienced various adventures such as the space war and the dinosaur era, as well as many dramatic daily life.

Before and after children's day, I heard that Da Xiong and Jing Xiang were married. I wrote this article deliberately, and I taught you how to draw words and pictures.

Well, this article is based on the word cloud drawing of Douban film review. Through this article, you will receive:

- ① Crawling of Douban film data;

- ② Hand in hand to teach you how to draw word cloud map;

Steps of Douban reptile

Of course, there are many other data on Douban, which is worth climbing and analyzing. But in this article, we only crawl the comment information.

Website to be crawled: https://movie.douban.com/subject/34913671/comments?status=P

Since there is only one field, we directly use re regular expression to solve this problem.

Look at those reptiles, Xiaobai. This is another good opportunity for you to practice.

Let's talk about the steps of crawler directly:

# 1. Import related libraries and write down what libraries to use here

import requests

import chardet

import re

# 2. Construct the request header, which is an anti pickpocketing measure. Learn to summarize what websites are used in the early stage. If you summarize more, you can use it easily.

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36',

#If a Referer appears in the crawler, it's best to stick it, because sometimes the server will judge whether the request is sent by the browser or the crawler according to the Referer

'Referer':'https://www.douban.com/'

}

# 3. This cycle is similar to page turning operation

for i in range(0,1001,20):

url = f"https://movie.douban.com/subject/34913671/comments?start={i}&limit=20&status=P&sort=new_score"

# 4. Use the requests library to initiate requests

response = requests.get(url,headers=headers)#Initiate request received response

# 5. Sometimes the returned results may be garbled. Remember to call the encoding property to modify the encoding

response.encoding = "utf-8"

# 6. Get the returned result and call the text attribute. Be sure to distinguish between text and content attributes

text = response.text#Returns a decoded string

# 7. To parse data, regular parsing is directly used here

comments_list = [i for i in re.findall('<span class="short">(.*?)</span>',text,re.S)]

# 8. Data storage. For each piece of data in the list, we directly use the open() function to write it to the txt document

for comment in comments_list:

with open(r"Dora A Dream: accompany me 2.txt","a",encoding = "utf-8") as f :

f.write(comment + "\n")The final effect is as follows:

Production process of word cloud map

Many students can't make word cloud pictures. I'll take this opportunity to write a detailed process here. Just follow the cat and draw the tiger.

The detailed steps of drawing word cloud are as follows:

- ① Import related libraries;

- ② Read the text file and use jieba library to dynamically modify the dictionary;

- ③ Use the lcut() method in jieba library for word segmentation;

- ④ Read stop words, add additional stop words, and remove stop words;

- ⑤ Word frequency statistics;

- ⑥ Draw word cloud

① Import related libraries

Here, you can import whatever library you need.

import jieba

from wordcloud import WordCloud

import matplotlib.pyplot as plt

from imageio import imread

import warnings

warnings.filterwarnings("ignore")② Read the text file and use the jieba library to dynamically modify the dictionary

Here, with open() reads the text file, so I won't explain it. Here we explain the dynamic modification dictionary.

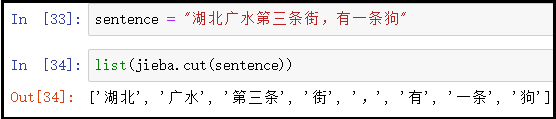

sentence = "There is a dog on the third street in Guangshui, Hubei Province" list(jieba.cut(sentence))

The results are as follows:

In view of the above segmentation results, what if we want to treat "Hubei Guangshui" and "the Third Street" as a complete word without cutting them apart? At this point, you need to use add_word() method to dynamically modify the dictionary.

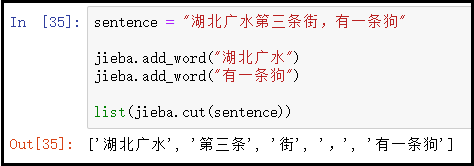

sentence = "There is a dog on the third street in Guangshui, Hubei Province"

jieba.add_word("Guangshui, Hubei")

jieba.add_word("There is a dog")

list(jieba.cut(sentence))The results are as follows:

Summary:

- jieba.add_word() method can only dynamically add a word one by one.

- If we need to add multiple words dynamically, we need to use jieba.load_userdict() method. That is to say: put all user-defined words into one text, and then use this method to dynamically modify the dictionary set at one time.

With the above foundation, we read the text directly and modify the dictionary dynamically.

with open(r"Dora A Dream: accompany me 2.txt",encoding="utf-8") as f:

txt = f.read()

txt = txt.split()

jieba.add_word("Dora A dream")

jieba.add_word("Da Xiong")③ Use the lcut() method in the jieba library for word segmentation

A short line of code, very simple.

data_cut = [jieba.lcut(x) for x in txt]

④ Read stop words, add additional stop words, and remove stop words

Read the stop words and use the split() function to get a list of stop words. Then, use the + sign to add the additional stop words to the list.

# Read stop words

with open(r"stoplist.txt",encoding="utf-8") as f:

stop = f.read()

stop = stop.split()

# Add an additional stop word. Here we only add a space

stop = [" "] + stop

# Remove stop words

s_data_cut = pd.Series(data_cut)

all_words_after = s_data_cut.apply(lambda x:[i for i in x if i not in stop])⑤ Word frequency statistics

Note the value in the series_ Use of counts().

all_words = []

for i in all_words_after:

all_words.extend(i)

word_count = pd.Series(all_words).value_counts()⑥ Draw word cloud

# 1. Read background picture

back_picture = imread(r"aixin.jpg")

# 2. Set word cloud parameters

wc = WordCloud(font_path="simhei.ttf",

background_color="white",

max_words=2000,

mask=back_picture,

max_font_size=200,

random_state=42

)

wc2 = wc.fit_words(word_count)

# 3. Draw word cloud

plt.figure(figsize=(16,8))

plt.imshow(wc2)

plt.axis("off")

plt.show()

wc.to_file("ciyun.png")The results are as follows:

It can be roughly seen from the word cloud picture: This is another tear film, this is a feeling film. The Da Xiong who grew up with us are married? What about us? In fact, when we were young, we looked forward to Da Xiong and Jingxiang being good friends. In this film, they got married. How should the film be staged? You can go to the cinema and find out.