1. Summary of prospects

When surfing the Internet, I saw that NASA had a popular science website with a popular science picture every day, which was very high-definition. I wanted to download it to make wallpaper.

So I'm going to write a Java crawler to crawl all the pictures. I can also add some notifications to check every night. When an update is detected, I get up the next morning and push it to the mobile phone. Of course, this function has not been realized.

2. Development

2.1 development environment

- Maven

- Java 17 (in order to follow the trend after Spring Framework 6, it is planned to use Zulu jdk17 for future development)

- WebMagic (crawler framework for Java)

2.2 Maven pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.yeahvin</groupId>

<artifactId>getStars</artifactId>

<version>1.0.1-SNAPSHOT</version>

<properties>

<maven.compiler.source>17</maven.compiler.source>

<maven.compiler.target>17</maven.compiler.target>

</properties>

<dependencies>

<dependency>

<groupId>us.codecraft</groupId>

<artifactId>webmagic-core</artifactId>

<version>0.7.5</version>

</dependency>

<dependency>

<groupId>us.codecraft</groupId>

<artifactId>webmagic-extension</artifactId>

<version>0.7.5</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>1.7.25</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.20</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

<version>1.2.3</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<mainClass>com.yeahvin.StartApplication</mainClass>

<layout>ZIP</layout>

</configuration>

<executions>

<execution>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

Scan VX for Java data, front-end, test, python and so on

2.3 use of Java crawler webmagic

This framework is also very easy to start and use. The official manual is here WebMagic in Action

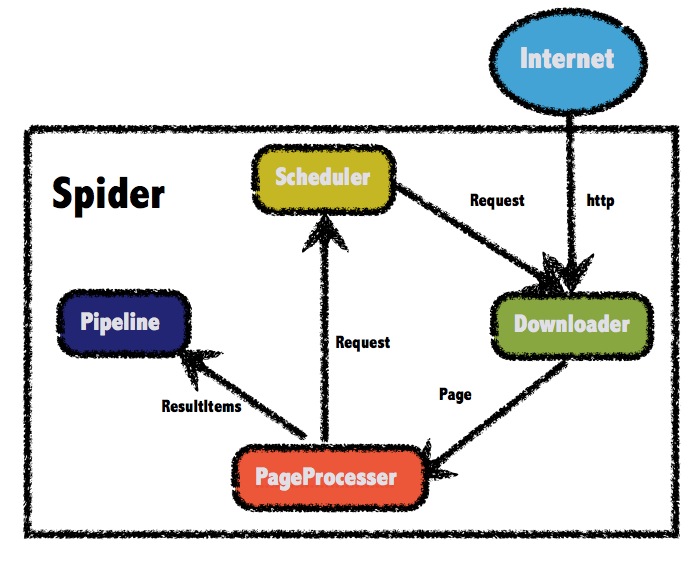

Here is a brief introduction to the use of this framework. As shown in the following figure, what our developers need to do in the whole process is:

- Write pageprocessor

- Write Pipeline

You can complete a very simple crawler. I recommend you take a look at this example first: First example

In this project, the analysis of the whole nasa website is actually very simple, and the process is probably

request Overview page -->Get all existing links, traverse -- > request details page -- > download pictures

Startup class

ok, let's develop and write StartApplication, which is the place to start the crawler.

package com.yeahvin;

import com.yeahvin.pageProcessors.NasaStarsProcessor;

import com.yeahvin.pipelines.NasaStarsPipeline;

import lombok.extern.slf4j.Slf4j;

import us.codecraft.webmagic.Spider;

/**

* @author Asher

* on 2021/11/6

*/

@Slf4j

public class StartApplication {

//Download picture storage path

public static final String DOWNLOAD_PATH = "/Users/asher/gitWorkspace/temp/";

public static final String INDEX_PATH = "https://apod.nasa.gov/apod/archivepix.html";

public static void main(String[] args) {

Spider.create(new NasaStarsProcessor())

.addUrl(INDEX_PATH)

.addPipeline(new NasaStarsPipeline())

.run();

}

}

In the above code, Spider.create(Processor) can build a crawler very quickly, and then addUrl configures the requested address, and addPipeline configures how to handle when the crawler grabs data. Finally, run starts

Write NasaStarsProcessor

The reason for writing this is to analyze and process the content on the web page after the request to the web page

package com.yeahvin.pageProcessors;

import com.yeahvin.StartApplication;

import com.yeahvin.utils.StringUtil;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.StringUtils;

import us.codecraft.webmagic.Page;

import us.codecraft.webmagic.Site;

import us.codecraft.webmagic.processor.PageProcessor;

import java.io.File;

import java.util.Arrays;

import java.util.List;

import java.util.Optional;

import java.util.regex.Pattern;

import java.util.stream.Collectors;

import static com.yeahvin.StartApplication.DOWNLOAD_PATH;

/**

* @author Asher

* on 2021/11/6

*/

@Slf4j

public class NasaStarsProcessor implements PageProcessor {

private Site site = Site.me().setRetryTimes(5).setSleepTime(1000).setTimeOut(10000);

@Override

public void process(Page page) {

if (page.getUrl().regex(StartApplication.INDEX_PATH).match()){

//Get the saved files in the folder

File saveDir = new File(DOWNLOAD_PATH);

List<String> fileNames = Arrays.stream(Optional.ofNullable(saveDir.listFiles()).orElse(new File[]{}))

.map(File::getName)

.collect(Collectors.toList());

log.info("Start homepage crawling");

List<String> starsInfos = page.getRawText()

.lines()

.map(line -> {

List<String> infoList = StringUtil.getRegexString(line, "^\\d{4}.*<br>$");

boolean flag = infoList.size() > 0;

return flag ? infoList.get(0) : null;

})

.filter(StringUtils::isNoneBlank)

.collect(Collectors.toList());

starsInfos.forEach(info -> {

String link = StringUtil.getRegexString(info, "ap.*html").get(0);

String name = StringUtil.getRegexString(info, ">.*</a>").get(0)

.replace("</a>", "")

.replace(">", "");

//Skip if the corresponding file already exists

if (fileNames.stream().filter(fileName -> fileName.contains(name)).count() > 0) {

log.info("file [{}] Already exists,Skip", name);

return;

}

page.addTargetRequest("https://apod.nasa.gov/apod/" + link);

});

log.info("Complete the home page capture, a total of{}Data bar", starsInfos.size());

}else {

page.getRawText()

.lines()

.filter(line -> line.contains("<title>")

|| Pattern.compile("<a href=\"image.*jpg.*\">").matcher(line).find()

|| Pattern.compile("<a href=\"image.*jpg.*\"").matcher(line).find()

)

.forEach(line -> {

if (line.contains("<title>")){

String date = StringUtil.getRegexString(line, "\\d{4}.*-").get(0)

.replace("-", "");

String name = StringUtil.getRegexString(line, "-.*").get(0)

.replace("-", "");

page.putField("date", date);

page.putField("name", name);

}else {

String imageLink = StringUtil.getRegexString(line, "image.*jpg").get(0);

page.putField("imageLink", imageLink);

}

page.putField("url", page.getUrl());

});

}

}

@Override

public Site getSite() {

return site;

}

}

The logic of the above code is:

-

Property to configure a Site as the configuration when the crawler requests a web page.

-

Implementation interface Pag

-

Processor , Override method required process

-

In method In process, first request the overview page and make a judgment through if. If the incoming request is the same as the overview page, skip to the details page for processing, that is, in else. Why can this be done, because in In the condition processing of if(true), the following code can be used directly in the current If the Processor requests the link put in again, there is no need to write the Processor repeatedly and save the amount of development.

page.addTargetRequest("https://apod.nasa.gov/apod/" + link); -

When the if condition = = true, the file names of all files in the specified save folder will be taken first. When the application stops halfway and starts here, there is no need to run the previous web page again. then getRawText() gets the text content of the web page, filters it through regular rules, and gets the link s of all detail pages

-

Therefore, in else is the code processing process of the details page, such as Astronomy Picture of the Day The processing process after the interface obtains the web page content. among getRawText() gets the text content of the web page and filters it through regular, page.putField("date", date); Put it into the page variable, and the value will be passed into the startup class Specified by StartApplication Method in NasaStarsPipeline.

Scan VX for Java data, front-end, test, python and so on

Write NasaStarsPipeline

This NasaStarsPipeline is written to process the contents of the Field after the above Processor is put into the Field.

The general logic is:

- Get the specific information of the details page, such as name, date and the download link of the picture, and then download the picture. It should be noted here that sometimes the opened details page is not necessarily a picture, but also a video. When it cannot be parsed in the form of a picture, the processing method here is to skip directly. Save the error log.

package com.yeahvin.pipelines;

import com.yeahvin.utils.X509TrustManager;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.StringUtils;

import us.codecraft.webmagic.ResultItems;

import us.codecraft.webmagic.Task;

import us.codecraft.webmagic.pipeline.Pipeline;

import java.io.File;

import static com.yeahvin.StartApplication.DOWNLOAD_PATH;

/**

* @author Asher

* on 2021/11/6

*/

@Slf4j

public class NasaStarsPipeline implements Pipeline {

@Override

public void process(ResultItems resultItems, Task task) {

if (resultItems.getAll()!=null && resultItems.getAll().size()>0){

String date = resultItems.get("date");

String name = resultItems.get("name");

String imageLink = resultItems.get("imageLink");

if (StringUtils.isBlank(imageLink)){

log.error("Failed to get the picture download link, name:{}, date:{}, url:{}", name, date, resultItems.get("url"));

return;

}

String fileName = date + name + ".jpg";

if (new File(DOWNLOAD_PATH + fileName).exists()) {

log.info("Download pictures{}Already exists, skip, name:{}, date:{}", imageLink, name, date);

return;

}

try {

X509TrustManager.downLoadFromUrlHttps("https://apod.nasa.gov/apod/" + imageLink, fileName, DOWNLOAD_PATH);

} catch (Exception e) {

log.error("Picture download failed:", e);

e.printStackTrace();

}

log.info("Download pictures{}Done, name:{}, date:{}", imageLink, name, date);

}

}

}

When you finish writing so much, the whole code is actually completed. You can expand it later.

For example, for multi-threaded downloading of pictures, I perform scheduled tasks every day. Here, I complete this scheduled task through shell script, so I don't intend to hang this application in memory often.

Scan VX for Java data, front-end, test, python and so on