In the previous article, we analyzed the common structures of the demuxing part. In this article, let's learn the common structures of the decoding part.

Contents

- Breakpoint analysis of the ffplay decoding process and key structures

- Common structures of the decoding part and their relationships

- References

- Takeaways

1. Breakpoint analysis of the ffplay decoding process and key structures

As before, we start the analysis from read_thread, which opens the audio and video streams:

```c
stream_component_open(is, st_index[AVMEDIA_TYPE_AUDIO]);
ret = stream_component_open(is, st_index[AVMEDIA_TYPE_VIDEO]);
```

stream_component_open opens the specified stream:

```c
static int stream_component_open(VideoState *is, int stream_index)
{
    AVFormatContext *ic = is->ic;
    AVCodecContext *avctx;
    const AVCodec *codec;
    /* ... */
}
```

The key calls are as follows:

```c
avctx = avcodec_alloc_context3(NULL);

// copy the stream's codec parameters into the codec context
avcodec_parameters_to_context(avctx, ic->streams[stream_index]->codecpar);

// find a decoder by codec ID, or by name if one was forced (-acodec / -vcodec)
codec = avcodec_find_decoder(avctx->codec_id);
if (forced_codec_name)
    codec = avcodec_find_decoder_by_name(forced_codec_name);

// open the codec
avcodec_open2(avctx, codec, &opts);

// audio decoding
decoder_init(&is->auddec, avctx, &is->audioq, is->continue_read_thread);
decoder_start(&is->auddec, audio_thread, "audio_decoder", is);

// video decoding
decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread);
decoder_start(&is->viddec, video_thread, "video_decoder", is);

// only on the error path (fail:); on success the Decoder keeps avctx
avcodec_free_context(&avctx);
```
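One detail in the excerpt is easy to miss: ffplay calls avcodec_free_context() only on its `fail:` path. Once the decoder is set up, the Decoder owns avctx and frees it when the decoder is destroyed. The sketch below models that ownership hand-off in plain C; ToyCodecContext, ToyDecoder and the negative return codes are hypothetical stand-ins, not FFmpeg types or AVERROR values.

```c
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical stand-ins for AVCodecContext and ffplay's Decoder. */
typedef struct {
    bool opened;
} ToyCodecContext;

typedef struct {
    ToyCodecContext *avctx;   /* owned by the decoder once set */
} ToyDecoder;

/* Allocate and "open" a context, then hand it to the decoder on success.
   open_fails simulates avcodec_open2() returning an error. */
static int toy_component_open(ToyDecoder *d, bool open_fails)
{
    int ret = 0;
    ToyCodecContext *avctx = calloc(1, sizeof(*avctx));
    if (!avctx)
        return -1;                 /* stand-in for an out-of-memory error */

    if (open_fails) {
        ret = -2;                  /* stand-in for the open error */
        goto fail;
    }
    avctx->opened = true;

    d->avctx = avctx;              /* ownership moves to the decoder */
    return 0;

fail:
    free(avctx);                   /* plays the role of avcodec_free_context() */
    return ret;
}

/* Teardown counterpart: the decoder frees the context it owns. */
static void toy_decoder_destroy(ToyDecoder *d)
{
    free(d->avctx);
    d->avctx = NULL;
}
```

The `goto fail` style keeps a single cleanup point, so every early exit after the allocation releases the context exactly once.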

Structures involved: AVFormatContext, AVCodecContext, AVCodec.

avcodec_parameters_to_context fills the codec context from the stream's codec parameters:

```c
int avcodec_parameters_to_context(AVCodecContext *codec,
                                  const AVCodecParameters *par);
```

Structures involved: AVCodecContext, AVCodecParameters.

avcodec_find_decoder looks up a decoder by codec ID:

```c
const AVCodec *avcodec_find_decoder(enum AVCodecID id);
```

Structure involved: AVCodec.
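ffplay first looks a decoder up by codec ID and, if a decoder name was forced, replaces the result with the by-name lookup; a NULL result makes stream_component_open fail. The sketch below models that selection over a small hypothetical registry (ToyCodec and its entries are made up). FFmpeg's real lookup walks its built-in codec list and prefers non-experimental codecs, which this toy ignores.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, heavily simplified stand-ins for AVCodecID and AVCodec. */
typedef enum { TOY_CODEC_ID_H264 = 1, TOY_CODEC_ID_AAC = 2 } ToyCodecID;

typedef struct {
    const char *name;   /* like AVCodec.name */
    ToyCodecID id;      /* like AVCodec.id   */
} ToyCodec;

/* Made-up registry; two decoders can share one codec ID. */
static const ToyCodec toy_registry[] = {
    { "h264",       TOY_CODEC_ID_H264 },
    { "h264_toyhw", TOY_CODEC_ID_H264 },
    { "aac",        TOY_CODEC_ID_AAC  },
};
static const size_t toy_registry_len = sizeof(toy_registry) / sizeof(toy_registry[0]);

/* Idea of avcodec_find_decoder(): return the first registered match for the ID. */
static const ToyCodec *toy_find_decoder(ToyCodecID id)
{
    for (size_t i = 0; i < toy_registry_len; i++)
        if (toy_registry[i].id == id)
            return &toy_registry[i];
    return NULL;
}

/* Idea of avcodec_find_decoder_by_name(): exact name match. */
static const ToyCodec *toy_find_decoder_by_name(const char *name)
{
    for (size_t i = 0; i < toy_registry_len; i++)
        if (strcmp(toy_registry[i].name, name) == 0)
            return &toy_registry[i];
    return NULL;
}

/* ffplay's pattern: look up by ID, then let a forced name override it. */
static const ToyCodec *toy_pick_decoder(ToyCodecID id, const char *forced_name)
{
    const ToyCodec *codec = toy_find_decoder(id);
    if (forced_name)
        codec = toy_find_decoder_by_name(forced_name);
    return codec;   /* NULL means "no decoder": the caller must fail */
}
```

Note that a forced name fully replaces the ID lookup: if the name is unknown, the result is NULL even though a decoder for the ID exists.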

avcodec_open2 initializes the codec context to use the given codec:

```c
int avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options);
```

Structures involved: AVCodecContext, AVCodec.

Decoding threads

```c
static int audio_thread(void *arg);
static int video_thread(void *arg);
```

In the decoding threads, AVFrame holds the decoded (decompressed) data. Structure involved: AVFrame.

The audio and video decoding threads are then started to do the actual decoding. So the main structures involved are AVCodecContext, AVCodecParameters, AVCodec and AVFrame.
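In recent ffplay versions the decoding threads do not call a codec's decode callback directly: decoder_decode_frame() feeds AVPackets with avcodec_send_packet() and drains AVFrames with avcodec_receive_frame(). A decoder may hold frames back (for example while reordering B-frames into presentation order, cf. has_b_frames in AVCodecContext), answering AVERROR(EAGAIN) until it gets more input, and AVERROR_EOF once it has been flushed with a NULL packet and fully drained. The toy below models only that contract; ToyReorderDecoder, the TOY_* codes and using a bare PTS as a "frame" are hypothetical simplifications, not FFmpeg's implementation.

```c
#include <stdbool.h>
#include <stddef.h>

#define TOY_EAGAIN (-11) /* arbitrary stand-in for AVERROR(EAGAIN) */
#define TOY_EOF    (-2)  /* arbitrary stand-in for AVERROR_EOF */
#define TOY_CAP    16

typedef struct {
    long pending[TOY_CAP]; /* PTS of packets not yet returned as frames */
    size_t count;
    size_t delay;          /* frames held back for reordering */
    bool draining;         /* set once a NULL packet has been sent */
} ToyReorderDecoder;

/* Like avcodec_send_packet(): a NULL packet starts draining (flush). */
static int toy_send_packet(ToyReorderDecoder *d, const long *pts)
{
    if (d->draining)
        return TOY_EOF;            /* no input accepted after the flush packet */
    if (!pts) {
        d->draining = true;
        return 0;
    }
    if (d->count == TOY_CAP)
        return TOY_EAGAIN;         /* caller must receive frames first */
    d->pending[d->count++] = *pts;
    return 0;
}

/* Like avcodec_receive_frame(): returns frames in presentation (PTS) order. */
static int toy_receive_frame(ToyReorderDecoder *d, long *out_pts)
{
    if (d->count == 0)
        return d->draining ? TOY_EOF : TOY_EAGAIN;
    if (!d->draining && d->count <= d->delay)
        return TOY_EAGAIN;         /* not enough input to know the next frame */

    size_t best = 0;               /* smallest pending PTS is shown next */
    for (size_t i = 1; i < d->count; i++)
        if (d->pending[i] < d->pending[best])
            best = i;
    *out_pts = d->pending[best];
    d->pending[best] = d->pending[--d->count];  /* remove it */
    return 0;
}

/* A decoding thread's loop: send one packet, receive until EAGAIN, and send a
   final NULL packet to drain the rest. Assumes delay < TOY_CAP, so the buffer
   never fills up. Returns the number of frames written to `frames`. */
static size_t toy_decode_all(ToyReorderDecoder *d, const long *pkts, size_t n,
                             long *frames)
{
    size_t got = 0;
    long pts;
    for (size_t i = 0; i <= n; i++) {
        toy_send_packet(d, i < n ? &pkts[i] : NULL);
        while (toy_receive_frame(d, &pts) == 0)
            frames[got++] = pts;
    }
    return got;
}
```

Feeding packets in decode order 0, 3, 1, 2 (an I P B B pattern) with a delay of 1 yields frames 0, 1, 2, 3: the decoder waits for the P frame before it can release anything, then emits frames in display order.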

The inputs to decoding, AVPacket (which stores compressed, encoded data and related information) and AVStream (each AVStream holds the data of one video/audio stream split out by the demuxer), were introduced in the previous article. Next, we mainly analyze the decoding-related structures AVCodecContext, AVCodec and AVFrame.

2. Common structures of the decoding part and their relationships

2.1 Common structures and their relationships (once again, Lei Xiaohua's summary is excellent)

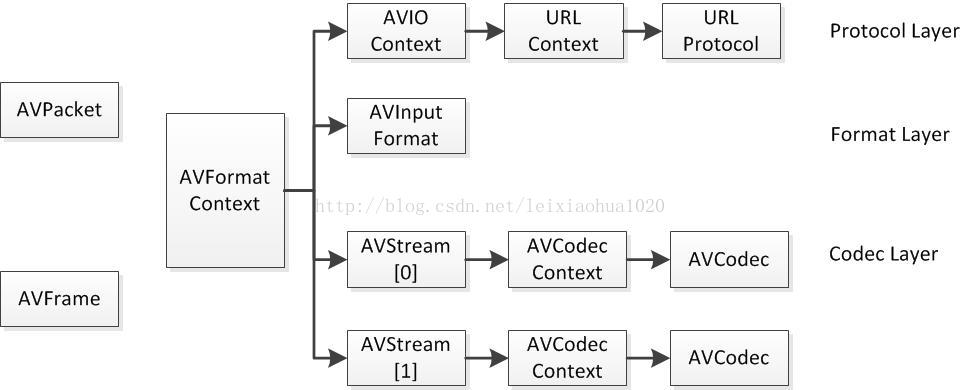

FFmpeg has many structures. The most critical ones fall into the following categories:

a) Protocol handling (http, rtsp, rtmp, mms): AVIOContext, URLProtocol and URLContext mainly store the type and state of the protocol used for the audio/video. URLProtocol describes a single protocol implementation, and each protocol corresponds to one URLProtocol structure. (Note: FFmpeg also treats a file as a protocol, "file".)

b) Demuxing (flv, avi, rmvb, mp4): AVFormatContext mainly stores the information contained in the container format; AVInputFormat describes the container format of the input. Each container format corresponds to one AVInputFormat structure.

c) Decoding (h264, mpeg2, aac, mp3): each AVStream stores the data of one video/audio stream; each AVStream corresponds to one AVCodecContext, which stores how that stream is decoded; each AVCodecContext points to one AVCodec, the decoder for that stream. Each decoder corresponds to one AVCodec structure. (In current FFmpeg, AVStream carries only an AVCodecParameters, codecpar, which is why ffplay allocates its own AVCodecContext in stream_component_open.)

d) Data storage: for video, each structure usually holds one frame; for audio, it may hold several. Data before decoding: AVPacket. Data after decoding: AVFrame.

Quoted from: https://blog.csdn.net/leixiaohua1020/article/details/11693997

Their relationship is as follows:

Figure: The relationship between the most critical structures in FFmpeg

2.2 AVCodecContext

AVCodecContext is a data structure describing the codec context; it contains many of the parameters the codec needs. The structure definition is located in libavcodec/avcodec.h. The main variables are as follows:

```c
enum AVMediaType codec_type;      // media type: audio, video, subtitle, ...; enum defined in libavutil/avutil.h
const struct AVCodec *codec;      // the codec in use; AVCodec is analyzed separately below
enum AVCodecID codec_id;          // codec ID; enum defined in libavcodec/codec_id.h, lists all codec IDs
void *priv_data;                  // codec-private data
struct AVCodecInternal *internal; // context for internal use
void *opaque;                     // free for the user's own data
int64_t bit_rate;                 // average bit rate
uint8_t *extradata;               // extra data needed by specific codecs (e.g. SPS/PPS for an H.264 decoder)
int extradata_size;               // size of extradata in bytes
AVRational time_base;             // time base; converts a PTS into real time (unit: s)
int width, height;                // video only
int gop_size;                     // keyframe interval (encoding)
int max_b_frames;                 // maximum number of consecutive B-frames (encoding)
int has_b_frames;                 // size of the decoder's frame reordering buffer, i.e. the delay caused by B-frames
int slice_count;                  // number of slices
AVRational sample_aspect_ratio;   // sample (pixel) aspect ratio

/* audio only */
int sample_rate;                  // samples per second
int channels;                     // number of audio channels (replaced by ch_layout in newer FFmpeg)
enum AVSampleFormat sample_fmt;   // sample format; defined in libavutil/samplefmt.h

enum AVColorSpace colorspace;     // color space; defined in libavutil/pixfmt.h
AVRational framerate;             // frame rate
enum AVPixelFormat pix_fmt;       // pixel format, e.g. AV_PIX_FMT_YUV420P; defined in libavutil/pixfmt.h.
                                  // If the format is wrong, the picture comes out garbled.
enum AVPixelFormat sw_pix_fmt;    // nominal software (unaccelerated) pixel format, used with hardware decoding
```

For more on slices, see [Audio and video development journey (56): H264/AVC basic structure](https://mp.weixin.qq.com/s?__biz=MzU5NjkxMjE5Mg==&mid=2247484355&idx=1&sn=538378561c16b640a4ea42bc1f354044&chksm=fe5a32ecc92dbbfa1d6a2e83f22aece727badb99966b6e621322ed8bf6b0cd8f0b2d1c262013&token=778944351&lang=zh_CN#rd).

This structure has many fields, and many of them are relevant only to encoding; comparatively few are needed for decoding.
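The time_base comment above boils down to seconds = pts × time_base, where the time base is a fraction num/den. A minimal sketch, using a local Rational struct that mirrors AVRational's num/den fields; the helper names are hypothetical, and FFmpeg itself provides av_q2d() and av_rescale_q() in libavutil for this. The arithmetic is the same whichever time base a timestamp is expressed in; ffplay, for instance, converts video frame PTS with the stream's time_base.

```c
#include <stdint.h>

/* Local mirror of FFmpeg's AVRational { int num; int den; }. */
typedef struct {
    int num;
    int den;
} Rational;

/* Same idea as av_q2d(): the fraction as a double. */
static double q2d(Rational q)
{
    return (double)q.num / (double)q.den;
}

/* Seconds = pts * time_base. */
static double pts_to_seconds(int64_t pts, Rational time_base)
{
    return (double)pts * q2d(time_base);
}

/* Rescale a timestamp from one time base to another with integer math.
   Truncates and may overflow for large values; av_rescale_q() rounds to
   nearest and guards against overflow. */
static int64_t rescale(int64_t ts, Rational from, Rational to)
{
    return ts * from.num * to.den / ((int64_t)from.den * to.num);
}
```

For example, a PTS of 3600 in the 90 kHz time base 1/90000 is 0.04 s, and rescaling it to the millisecond time base 1/1000 gives 40.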

2.3 AVCodec

AVCodec is a structure that stores codec information. The structure definition is located in libavcodec/codec.h. The main variables are as follows:

```c
const char *name;                       // codec name
enum AVMediaType type;                  // codec type, as described in AVCodecContext
enum AVCodecID id;                      // codec ID, as described in AVCodecContext
int capabilities;                       // codec capabilities, a bitmask of AV_CODEC_CAP_* flags
const AVRational *supported_framerates; // supported frame rates
const enum AVPixelFormat *pix_fmts;     // supported pixel formats
const int *supported_samplerates;       // supported audio sample rates
const enum AVSampleFormat *sample_fmts; // supported sample formats
```
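AVCodec's "supported" arrays are sentinel-terminated rather than carrying a length: supported_samplerates ends with 0 and pix_fmts ends with AV_PIX_FMT_NONE (-1), and either may be NULL when unknown. A minimal sketch of checking a value against such a list; list_contains is a hypothetical helper, and plain int arrays stand in for the enum arrays. How to treat a NULL (unknown) list is a policy choice; this sketch accepts any value.

```c
#include <stdbool.h>
#include <stddef.h>

/* Scan a sentinel-terminated list (e.g. 0 for sample rates, -1 for pixel
   formats) for `value`. A NULL list means "unknown"; treated as no restriction. */
static bool list_contains(const int *list, int terminator, int value)
{
    if (!list)
        return true;
    for (const int *p = list; *p != terminator; p++)
        if (*p == value)
            return true;
    return false;
}
```

For example, with a hypothetical rate list `{44100, 48000, 0}`, 48000 is reported as supported and 22050 is not.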

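There are also some function pointers (in recent FFmpeg versions these have moved out of the public AVCodec into an internal structure):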

```c
int (*init)(struct AVCodecContext *);                                        // initialize
int (*encode2)(struct AVCodecContext *avctx, struct AVPacket *avpkt,
               const struct AVFrame *frame, int *got_packet_ptr);            // encode
int (*decode)(struct AVCodecContext *avctx, void *outdata,
              int *got_frame_ptr, struct AVPacket *avpkt);                   // decode
int (*close)(struct AVCodecContext *);                                       // close and free resources
int (*receive_packet)(struct AVCodecContext *avctx, struct AVPacket *avpkt); // receive encoded packet data
int (*receive_frame)(struct AVCodecContext *avctx, struct AVFrame *frame);   // receive decoded frame data
void (*flush)(struct AVCodecContext *);                                      // flush internal buffers
```

2.4 AVFrame

AVFrame is generally used to store raw (uncompressed) data, such as YUV or RGB for video and PCM for audio. It also carries related information: during decoding it can hold data such as the macroblock type table, QP table and motion vector table, and related data is stored during encoding as well. The structure definition is located in libavutil/frame.h. The main variables are as follows:

```c
#define AV_NUM_DATA_POINTERS 8
uint8_t *data[AV_NUM_DATA_POINTERS]; // planar formats (e.g. YUV420P) are split across data[0], data[1], data[2]...
                                     // (for YUV420P: data[0] = Y, data[1] = U, data[2] = V)
int width, height;                   // video width and height
int nb_samples;                      // number of audio samples per channel
int format;                          // pixel format (video) or sample format (audio) of the frame
int key_frame;                       // 1 -> keyframe, 0 -> not
enum AVPictureType pict_type;        // frame type (I, P, B, ...); defined in libavutil/avutil.h
AVRational sample_aspect_ratio;      // sample (pixel) aspect ratio, a fraction stored as AVRational
int64_t pts;                         // presentation timestamp
int64_t pkt_dts;                     // DTS copied from the AVPacket that triggered returning this frame
int quality;
void *opaque;
int coded_picture_number;            // frame number in coded (bitstream) order
int display_picture_number;          // frame number in display order
int8_t *qscale_table;                // QP table: one QP value per macroblock, ordered left to right, row by row
uint8_t *mbskip_table;               // skipped-macroblock table
int16_t (*motion_val[2])[2];         // motion vector table
int8_t *ref_index[2];                // motion estimation reference index list
int interlaced_frame;                // whether the picture content is interlaced
int sample_rate;                     // audio sample rate
uint8_t motion_subsample_log2;       // log2 of the size (width or height, in pixels) of the block
                                     // represented by a single motion vector
```

Several of these fields (qscale_table, mbskip_table, motion_val, ref_index, motion_subsample_log2) come from older FFmpeg versions and are no longer part of AVFrame; newer versions export motion vectors as frame side data (AV_FRAME_DATA_MOTION_VECTORS) instead.
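Two parts of the field list are really index arithmetic: how a YUV420P picture splits across data[0..2], and how a per-macroblock table such as qscale_table is addressed in raster order. A minimal sketch assuming 16×16 macroblocks and tightly packed rows; all helper names are hypothetical. Real AVFrames may pad each row, so walk actual pixel data with the frame's linesize[] values rather than the width.

```c
#include <stddef.h>

#define MB_SIZE 16  /* a macroblock covers 16x16 luma pixels */

/* Sizes of the YUV420P planes (data[0] = Y, data[1] = U, data[2] = V),
   assuming rows are tightly packed (no linesize padding). */
static void yuv420p_plane_sizes(size_t width, size_t height, size_t sizes[3])
{
    size_t cw = (width + 1) / 2;   /* chroma is halved horizontally...  */
    size_t ch = (height + 1) / 2;  /* ...and vertically, rounding up odd sizes */
    sizes[0] = width * height;
    sizes[1] = cw * ch;
    sizes[2] = cw * ch;
}

/* Number of macroblock columns (or rows), rounding a partial macroblock up. */
static size_t mb_cols(size_t pixels)
{
    return (pixels + MB_SIZE - 1) / MB_SIZE;
}

/* Index into a raster-ordered per-macroblock table (left to right, row by row)
   for the macroblock containing pixel (x, y). stride = entries per macroblock
   row, at least mb_cols(width). */
static size_t mb_index(size_t x, size_t y, size_t stride)
{
    return (y / MB_SIZE) * stride + x / MB_SIZE;
}

/* With motion_subsample_log2 = s, one motion vector covers a (1 << s) x (1 << s)
   pixel block, so a 16x16 macroblock carries (16 >> s)^2 vectors. */
static size_t mvs_per_mb(unsigned subsample_log2)
{
    size_t per_side = (size_t)MB_SIZE >> subsample_log2;
    return per_side * per_side;
}
```

For a 1920×1080 frame this gives a 2,073,600-byte Y plane and two 518,400-byte chroma planes, with 120 macroblock columns and 68 macroblock rows (1080 is not a multiple of 16, so the last row is partial).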

3. References

- Android audio and video development - Chapter 8

- The relationship between the most critical structures in FFMPEG

- FFMPEG structure analysis: AVCodecContext

- FFMPEG structure analysis: AVCodec

- FFMPEG structure analysis: AVFrame

- FFMPEG: converting between YUV and RGB raw image data (swscale)

4. Takeaways

Through the study and practice in this article, the takeaways are:

- A deeper understanding of the decoding process through breakpoint analysis

- A review of the relationships between FFmpeg's important structures

- An understanding of the decoding-related structures AVCodecContext, AVCodec and AVFrame; much of the codec-standard knowledge behind them (e.g. x265) still needs systematic study

Thank you for reading. Next, we will cover the last piece of ffplay's key structures: the protocol-related structures. Follow the "audio and video development trip" official account to learn and grow together, and feel free to get in touch.