Slacking off at work (known online as "fishing") has been a hot topic lately, and NetEase Cloud Music has even put out a "reasonable fishing schedule". Today I'll show you how to "fish" with Python. Let's get to the code!

Introduction: Facial expressions are one of the most direct outward signs of human emotion and an important channel of social interaction. Giving machines the ability to perceive human emotional states is one of the major goals of human-computer interaction. With the rapid development of artificial intelligence over the past ten years, researchers have studied automatic facial expression recognition in depth. The topic has attracted wide attention in psychology, fatigued-driving detection, classroom teaching evaluation, intelligent healthcare, lie detection for public security, vehicle safety systems, and other fields.

A face recognition algorithm first has to determine whether a video frame or picture contains a face, and then locate it. There are currently two main families of face detection and localization methods: knowledge-based and statistics-based. Knowledge-based methods use the geometric relationships among facial features (eyes, nose, mouth) to decide whether an image contains a face. Statistics-based methods decide by checking whether the pixel distribution of the target picture resembles that of previously seen face pictures.

Today we will use the KNN algorithm to classify faces and build a monitor on top of the classifier. When an untrained face is detected, a preset command is executed automatically; here we set it to open a document. The result is a face-detection "fishing" gadget. You can also trigger the program yourself at any time by covering your chin. The effect looks like this:

Introduction to the KNN face classification algorithm

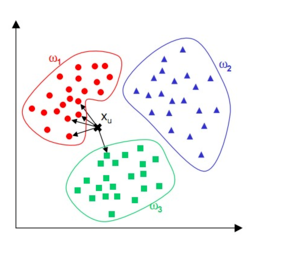

KNN is a lazy learning algorithm. Its idea is that the category of a sample to be classified is decided by a vote among its K nearest neighbors. It is widely used in data mining and data classification. In pattern recognition, the k-nearest neighbor algorithm is valued for its simplicity, its relative insensitivity to outliers, and its good generalization ability. Its core rule: if most of the K samples most similar to a given sample belong to a certain category, the sample is assigned to that category too. In other words, it predicts the category of a test sample from the known categories of the data around it, which eases the difficulty that high-dimensional, highly coupled data sets pose for feature analysis.
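The voting rule can be made concrete with a small sketch in plain Python. The function name `knn_predict` and the toy 2-D points are illustrative assumptions, not part of the original project; real face encodings are much longer vectors.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k):
    """Return the majority label among the k training points nearest to query."""
    # Sort training indices by Euclidean distance to the query point.
    order = sorted(range(len(train_points)),
                   key=lambda i: math.dist(train_points[i], query))
    # Vote among the labels of the k closest points.
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Toy 2-D data: two clusters labelled "a" and "b" (made-up values).
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
labels = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(points, labels, (0.15, 0.15), k=3))  # a
print(knn_predict(points, labels, (0.95, 1.0), k=3))   # b
```

Nothing is learned ahead of time here: every prediction scans the whole training set, which is what "lazy" means in practice.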

Its advantages: it tolerates data noise well, which makes it suitable for scenarios with complex and changeable features, such as the retail industry; and when a sample is classified, only its K nearest samples are considered, so each decision depends on a small local part of the data set.

Its disadvantages: the k-nearest neighbor algorithm is a lazy algorithm that builds no explicit model during training, so all the work is deferred to prediction time; and the choice of K affects the results.

The KNN-based face classification algorithm relies on the fact that an abnormal face sample is offset from the normal samples. It decides whether a face is abnormal by looking at the sum of squared distances from a sample to its k nearest neighbors in the training set, which differs between normal and abnormal samples. The algorithm consists of two parts: model establishment and fault detection.

1.1 Model establishment

The first part is model establishment. First, the k nearest neighbors of each sample are found in the training set; then the sum of the squared Euclidean distances from each sample to its k nearest neighbors is computed as a statistic.
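As a rough sketch of this model-building step, the snippet below computes the statistic for every training sample in plain Python. The helper names, the toy data, and the choice of the largest training statistic as the threshold are assumptions made for illustration; the article does not say how the threshold is chosen.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_sq_distance_sum(sample, others, k):
    """Sum of squared distances from sample to its k nearest points in others."""
    return sum(sorted(sq_dist(sample, o) for o in others)[:k])


def training_statistics(train, k):
    """Statistic for every training sample, excluding the sample itself."""
    stats = []
    for i, sample in enumerate(train):
        others = train[:i] + train[i + 1:]
        stats.append(knn_sq_distance_sum(sample, others, k))
    return stats


# Made-up 2-D "normal" samples standing in for face encodings.
train = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
stats = training_statistics(train, k=2)

# Assumption: take the largest training statistic as the threshold.
threshold = max(stats)
print(stats)      # [2.0, 2.0, 2.0, 2.0]
print(threshold)  # 2.0
```

A percentile of the training statistics would be a more forgiving threshold than the maximum when the training set contains a few odd samples.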

1.2 Fault detection

The second part is fault detection. First, the k nearest neighbors of the sample x to be tested are found in the training set; then the sum of the squared Euclidean distances between x and those k neighbors is computed. Finally, this sum is compared with the threshold: if it is greater than the threshold, x is an abnormal sample; otherwise it is a normal sample.
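The detection step might look like the following sketch in plain Python. The function `is_abnormal`, the toy training set, and the threshold value 2.0 are hypothetical and only serve to show the comparison.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def is_abnormal(x, train, k, threshold):
    """True if the sum of squared distances from x to its k nearest
    training samples exceeds the threshold."""
    nearest = sorted(sq_dist(x, t) for t in train)[:k]
    return sum(nearest) > threshold


# Made-up "normal" samples and a hypothetical threshold.
train = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
threshold = 2.0

print(is_abnormal((0.5, 0.5), train, k=2, threshold=threshold))  # False
print(is_abnormal((3.0, 3.0), train, k=2, threshold=threshold))  # True
```

The point in the middle of the training samples stays below the threshold, while the distant point is flagged.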

Face feature extraction

Face feature extraction here uses the face_recognition library. face_recognition mainly wraps dlib, a C++ toolkit, and exposes it through Python as a very simple face recognition API. It hides the algorithmic details and greatly lowers the difficulty of developing face recognition features. Recognition with face_recognition goes through several steps: face detection, to find all faces; facial landmark detection, where the landmarks are used to correct the pose and turn a profile view into a frontal one; and face encoding, where a feature vector for the face is computed from the landmarks.
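These steps map onto a handful of face_recognition calls. The sketch below assumes the library is installed; `encode_faces`, `encoding_distance`, and the file name in the usage comment are hypothetical names. Pose correction is not a separate call here: as far as I know, it happens inside the encoding step.

```python
import math


def encode_faces(image_path):
    """Return a list of (location, landmarks, encoding), one entry per face."""
    # Third-party import kept inside the function so the helper below
    # can be used even where face_recognition is not installed.
    import face_recognition

    image = face_recognition.load_image_file(image_path)
    # Step 1: find every face as a (top, right, bottom, left) box.
    locations = face_recognition.face_locations(image)
    # Step 2: facial feature points (chin, eyebrows, eyes, nose, lips).
    landmarks = face_recognition.face_landmarks(image, locations)
    # Step 3: one feature vector per face.
    encodings = face_recognition.face_encodings(image, known_face_locations=locations)
    return list(zip(locations, landmarks, encodings))


def encoding_distance(enc_a, enc_b):
    """Euclidean distance between two encodings; smaller means more similar."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(enc_a, enc_b)))


# Usage (hypothetical file name):
#   faces = encode_faces("me.jpg")
#   print(len(faces), "face(s) found")
print(encoding_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```

The distance between encodings is the quantity the KNN classifier works with, so two pictures of the same person should give a small value.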

Programming

The program is divided into the following steps: recording and building the data set, training the KNN face classifier, and executing a program when an anomaly is detected.

3.1 Face data production

Here we simply collect 1000 face samples: face_recognition locates the face in each frame, and the face region is cropped and saved. The code is as follows:

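    import os

    import cv2
    import face_recognition

    # Landmark groups returned by face_recognition.face_landmarks()
    facial_features = [
        'chin',
        'left_eyebrow',
        'right_eyebrow',
        'nose_bridge',
        'nose_tip',
        'left_eye',
        'right_eye',
        'top_lip',
        'bottom_lip'
    ]

    video_capture = cv2.VideoCapture(0)
    label = "flase"  # class label, also used as the output folder name
    num = 0
    # Create img/<label> (and img/ itself) if it does not exist yet
    os.makedirs(os.path.join("img", label), exist_ok=True)

    while num < 1000:
        ret, frame = video_capture.read()
        if not ret:
            break  # the camera frame could not be read
        # OpenCV delivers BGR frames; face_recognition expects RGB
        rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        face_locations = face_recognition.face_locations(rgb_frame)
        face_landmarks_list = face_recognition.face_landmarks(rgb_frame, face_locations)
        if len(face_landmarks_list) == 1:
            # Exactly one face: crop it and save it as the next sample
            top, right, bottom, left = face_locations[0]
            num += 1
            face_image = frame[top:bottom, left:right]
            cv2.imwrite(os.path.join("img", label, str(num) + ".jpg"), face_image)
            print("Saved face image " + str(num))
            cv2.imshow("test", face_image)
            cv2.waitKey(1)
        else:
            print("The face cannot be detected, or the number of faces is more than one. Please ensure that there is only one face")

    video_capture.release()
    cv2.destroyAllWindows()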
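face_recognition reports a face box in the order (top, right, bottom, left), which is easy to mix up when cropping. Here is a minimal sketch of the same slicing on a plain nested list; the `crop` helper and the toy frame are made up for illustration.

```python
def crop(frame, box):
    """Cut the region described by a (top, right, bottom, left) box
    out of a frame stored as a list of rows."""
    top, right, bottom, left = box
    # Rows are selected with top:bottom, columns with left:right.
    return [row[left:right] for row in frame[top:bottom]]


# A 4 x 6 toy "frame" whose pixels record their own (row, column) position.
frame = [[(r, c) for c in range(6)] for r in range(4)]
face = crop(frame, (1, 5, 3, 2))

print(len(face), len(face[0]))  # 2 3
print(face[0][0])               # (1, 2)
```

With a NumPy frame from OpenCV the equivalent expression is `frame[top:bottom, left:right]`, as used in the recording script.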

3.2 KNN face classification

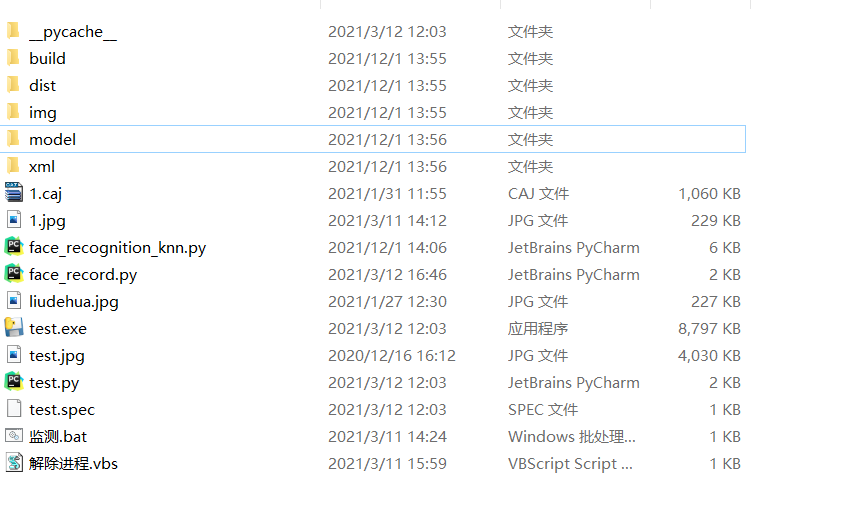

KNN classifies the faces. It first traverses every face sample in the training set, then judges whether a new face is a positive sample by computing the distance between face encodings. The overall program structure is shown in the figure: face_record records faces, face_recognition_knn trains the classifier and monitors faces, and test is the script executed when an anomaly is detected; here it has been packaged into an exe file. The code is as follows:

    import math
    import os
    import pickle

    import face_recognition
    from face_recognition.face_recognition_cli import image_files_in_folder
    from sklearn import neighbors


    def train(train_dir, model_save_path=None, n_neighbors=None, knn_algo='ball_tree', verbose=False):
        """Train a KNN classifier on train_dir/<class name>/<image files>."""
        X = []
        y = []
        # Each sub-directory of train_dir is one class
        for class_dir in os.listdir(train_dir):
            if not os.path.isdir(os.path.join(train_dir, class_dir)):
                continue
            for img_path in image_files_in_folder(os.path.join(train_dir, class_dir)):
                image = face_recognition.load_image_file(img_path)
                face_bounding_boxes = face_recognition.face_locations(image)
                if len(face_bounding_boxes) != 1:
                    # Skip images with no face or with several faces
                    if verbose:
                        print("Image {} not suitable for training: {}".format(
                            img_path,
                            "Didn't find a face" if len(face_bounding_boxes) < 1 else "Found more than one face"))
                else:
                    # Add the encoding of the single face to the training set
                    X.append(face_recognition.face_encodings(image, known_face_locations=face_bounding_boxes)[0])
                    y.append(class_dir)
        # Default K: rounded square root of the number of training samples
        if n_neighbors is None:
            n_neighbors = int(round(math.sqrt(len(X))))
            if verbose:
                print("Chose n_neighbors automatically:", n_neighbors)
        knn_clf = neighbors.KNeighborsClassifier(n_neighbors=n_neighbors, algorithm=knn_algo, weights='distance')
        knn_clf.fit(X, y)
        if model_save_path is not None:
            with open(model_save_path, 'wb') as f:
                pickle.dump(knn_clf, f)
        return knn_clf


    def predict(X_img_path, knn_clf=None, model_path=None, distance_threshold=0.5):
        """Return a (name, box) pair per face; name is "unknown" when no training face is close enough."""
        if knn_clf is None and model_path is None:
            raise Exception("Must supply knn classifier either through knn_clf or model_path")
        if knn_clf is None:
            with open(model_path, 'rb') as f:
                knn_clf = pickle.load(f)
        # Despite its name, X_img_path is used directly as the image array here
        X_img = X_img_path
        X_face_locations = face_recognition.face_locations(X_img)
        if len(X_face_locations) == 0:
            return []
        faces_encodings = face_recognition.face_encodings(X_img, known_face_locations=X_face_locations)
        # Distance from each detected face to its single closest training face
        closest_distances = knn_clf.kneighbors(faces_encodings, n_neighbors=1)
        are_matches = [closest_distances[0][i][0] <= distance_threshold for i in range(len(X_face_locations))]
        return [(pred, loc) if rec else ("unknown", loc) for pred, loc, rec in
                zip(knn_clf.predict(faces_encodings), X_face_locations, are_matches)]
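Three small decisions in this code are worth spelling out: the default K, the distance-weighted vote requested with weights='distance', and the distance threshold that turns a prediction into "unknown". The sketch below imitates them in plain Python. All function names and the sample values are hypothetical, and the weighting is a simplified inverse-distance vote, not scikit-learn's actual implementation.

```python
import math
from collections import defaultdict


def default_k(n_samples):
    """K used when n_neighbors is None: rounded square root of the sample count."""
    return int(round(math.sqrt(n_samples)))


def weighted_vote(neighbors):
    """Pick a label from (distance, label) pairs, weighting each vote by 1/distance."""
    scores = defaultdict(float)
    for distance, label in neighbors:
        # Simplification: clamp tiny distances to avoid division by zero.
        scores[label] += 1.0 / max(distance, 1e-12)
    return max(scores, key=scores.get)


def label_or_unknown(predicted_label, nearest_distance, distance_threshold=0.5):
    """Keep the predicted label only if the closest training face is near enough."""
    return predicted_label if nearest_distance <= distance_threshold else "unknown"


print(default_k(1000))  # 32
# One very close "a" neighbor outweighs two distant "b" neighbors.
print(weighted_vote([(0.1, "a"), (0.8, "b"), (0.9, "b")]))  # a
print(label_or_unknown("alice", 0.35))  # alice
print(label_or_unknown("alice", 0.72))  # unknown
```

The threshold is what makes the monitor useful: a plain KNN vote always returns one of the trained names, so without it a stranger would simply be given the nearest known label.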

3.3 Executing the abnormal-face action

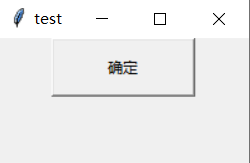

To keep the computer from freezing because the abnormal-face action is triggered over and over, we add a GUI button that ensures the action runs only once; pressing the OK button resumes monitoring. The code below includes the definition of the GUI, which has a single button. Clicking it returns the program from the abnormal state to normal without affecting the face detection program; that is, this program and the face classifier do not interfere with each other. The code is as follows:

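    # GUI script (test): a single OK button that clears the flag file ok.txt
    import os
    from tkinter import Tk, Button, messagebox

    window = Tk()
    tk_width, tk_height = 200, 60  # assumed size; the original values are not shown

    def get_window_positon(width, height):
        # Top-left corner that centres a width x height window on the screen
        window_x_position = (window.winfo_screenwidth() - width) // 2
        window_y_position = (window.winfo_screenheight() - height) // 2
        return window_x_position, window_y_position

    pos = get_window_positon(tk_width, tk_height)
    window.geometry(f'+{pos[0]}+{pos[1]}')

    def closewindow():
        # Refuse to close via the window's X button; the user must press OK
        messagebox.showinfo(title="warning", message="Please click OK")

    def t():
        # Remove the flag file so the monitor may trigger again, then close
        try:
            os.remove("ok.txt")
        except OSError:
            pass
        window.destroy()

    window.protocol("WM_DELETE_WINDOW", closewindow)
    bnt = Button(window, text="OK", width=15, height=2, command=t)
    bnt.pack()
    window.mainloop()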

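    # Excerpt from the monitoring loop. temp, num, predictions, draw, myfont,
    # pil_image, img_path and np come from the surrounding code, not shown here.
    import os
    import threading

    def test2():
        os.system('1.caj')     # open the document with its default application

    def test1():
        os.system('test.exe')  # show the OK-button window

    if temp > num:
        if os.path.exists("ok.txt"):
            pass  # the action already ran and has not been acknowledged yet
        else:
            t2 = threading.Thread(target=test2)
            t2.start()
            os.system("1.jpg")
            f = open("ok.txt", "w")  # create the flag file
            f.close()
            t1 = threading.Thread(target=test1)
            t1.start()
        # Draw a box and a name label for every detected face
        for name, (top, right, bottom, left) in predictions:
            draw.rectangle(((left, top), (right, bottom)), outline=(0, 0, 255))
            # Note: textsize() was removed in Pillow 10; newer versions need textbbox()
            text_width, text_height = draw.textsize(name)
            draw.rectangle(((left, bottom - text_height - 10), (right, bottom)), fill=(0, 0, 255), outline=(0, 0, 255))
            draw.text((int((left + right) / 2), bottom - text_height - 10), name, font=myfont, fill=(0, 0, 0))
        del draw
        pil_image = np.array(pil_image)
        temp = num
    else:
        pil_image = img_path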
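The ok.txt file acts as a simple "alarm pending" flag shared by the monitor and the GUI script. A minimal sketch of that pattern, independent of the face code, is shown below; `trigger_once`, `acknowledge`, and the temporary flag path are hypothetical names chosen for the example.

```python
import os
import tempfile


def trigger_once(flag_path, action):
    """Run action only if the flag file is absent, then create the flag.

    Returns True if the action ran, False if an earlier alarm is still pending.
    """
    if os.path.exists(flag_path):
        return False
    action()
    with open(flag_path, "w"):
        pass  # an empty file is enough to mark the alarm as pending
    return True


def acknowledge(flag_path):
    """Delete the flag (the job of the OK button) so the next anomaly can trigger."""
    try:
        os.remove(flag_path)
    except FileNotFoundError:
        pass


calls = []
flag = os.path.join(tempfile.mkdtemp(), "ok.txt")

print(trigger_once(flag, lambda: calls.append("open document")))  # True
print(trigger_once(flag, lambda: calls.append("open document")))  # False
acknowledge(flag)
print(trigger_once(flag, lambda: calls.append("open document")))  # True
print(len(calls))  # 2
```

Because the flag lives on disk, the monitor and the separately packaged GUI program can coordinate without sharing any memory.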

Full code:

https://codechina.csdn.net/qq_42279468/face-monitor/-/tree/master