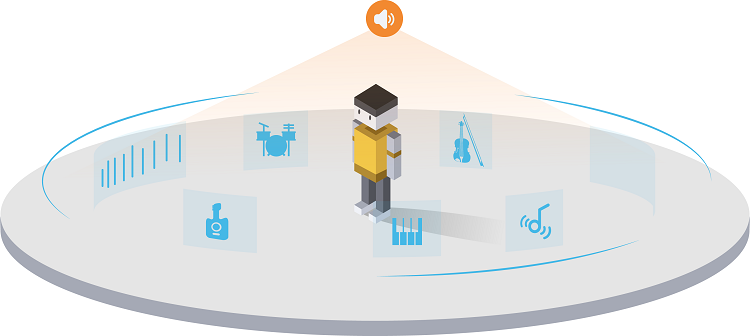

In music creation, audio and video editing, and gaming, delivering an immersive audio experience to users is increasingly important. How can developers create 3D surround sound effects in their apps? Version 6.2.0 of HMS Core Audio Editor Kit (the Huawei audio editing service) adds spatial dynamic rendering. It places audio elements such as vocals and musical instruments at a specified position in 3D space and supports both static and dynamic rendering modes, further improving the sound experience in apps. The demo shows the integration effect and is a good starting point for trying out the feature.

Development practice

1. Development preparation

Prepare your music material in advance; MP3 is the preferred format. For other audio formats, convert them as described in step 2.4. For video files, extract the audio as described in step 2.5.
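The routing rule above can be sketched as a small helper that picks the preparation step from the file extension. This is a minimal sketch, not part of the SDK: the class name `MaterialRouter` and the extension lists are hypothetical assumptions, so adjust them to the formats your app actually handles.

```java
import java.util.Locale;
import java.util.Set;

// Hypothetical helper (not part of the Audio Editor SDK): decides which
// preparation step a material file needs, based only on its file extension.
final class MaterialRouter {
    enum Step { USE_AS_IS, CONVERT_AUDIO, EXTRACT_AUDIO, UNSUPPORTED }

    // Assumed extension lists; extend them as needed.
    private static final Set<String> OTHER_AUDIO = Set.of("wav", "aac", "flac", "m4a", "ogg");
    private static final Set<String> VIDEO = Set.of("mp4", "mkv", "mov", "3gp");

    static Step stepFor(String path) {
        // Look only at the file name, so dots in folder names are ignored.
        String name = path.substring(path.lastIndexOf('/') + 1);
        int dot = name.lastIndexOf('.');
        if (dot <= 0 || dot == name.length() - 1) {
            return Step.UNSUPPORTED; // no usable extension
        }
        String ext = name.substring(dot + 1).toLowerCase(Locale.ROOT);
        if (ext.equals("mp3")) {
            return Step.USE_AS_IS;      // preferred format
        }
        if (OTHER_AUDIO.contains(ext)) {
            return Step.CONVERT_AUDIO;  // see step 2.4
        }
        if (VIDEO.contains(ext)) {
            return Step.EXTRACT_AUDIO;  // see step 2.5
        }
        return Step.UNSUPPORTED;
    }
}
```

The result tells the app whether to hand the file to the editor directly, convert it first, or extract its audio track first.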

1.1 Configure the Maven repository address in the project-level build.gradle file:

```groovy
buildscript {
    repositories {
        google()
        jcenter()
        // Configure the Maven repository address of the HMS Core SDK.
        maven { url 'https://developer.huawei.com/repo/' }
    }
    dependencies {
        ...
        // Add the AppGallery Connect (agcp) plugin configuration.
        classpath 'com.huawei.agconnect:agcp:1.4.2.300'
    }
}

allprojects {
    repositories {
        google()
        jcenter()
        // Configure the Maven repository address of the HMS Core SDK.
        maven { url 'https://developer.huawei.com/repo/' }
    }
}
```

1.2 Add the following configuration to the file header of the app-level build.gradle file:

```groovy
apply plugin: 'com.huawei.agconnect'
```

1.3 Configure the SDK dependency in the app-level build.gradle file:

```groovy
dependencies {
    implementation 'com.huawei.hms:audio-editor-ui:{version}'
}
```

1.4 Declare the following permissions in the AndroidManifest.xml file:

```xml
<!-- Vibration permission -->
<uses-permission android:name="android.permission.VIBRATE" />
<!-- Microphone permission -->
<uses-permission android:name="android.permission.RECORD_AUDIO" />
<!-- Write storage permission -->
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
<!-- Read storage permission -->
<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
<!-- Network permission -->
<uses-permission android:name="android.permission.INTERNET" />
<!-- Network status permission -->
<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />
<!-- Network state change permission -->
<uses-permission android:name="android.permission.CHANGE_NETWORK_STATE" />
```
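Declaring permissions in the manifest is not always enough. Since Android 6.0 (API level 23), dangerous permissions such as RECORD_AUDIO and the external-storage permissions must also be granted by the user at runtime, while VIBRATE, INTERNET, ACCESS_NETWORK_STATE, and CHANGE_NETWORK_STATE are normal permissions granted at install time. The plain-Java sketch below filters the list above down to the permissions that need a runtime request; the class name `RuntimePermissions` is hypothetical. On a device, the resulting array would be passed to `ActivityCompat.requestPermissions`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical helper: picks out the declared permissions that are
// "dangerous" and therefore need a runtime request on Android 6.0+.
final class RuntimePermissions {
    // Permissions declared in step 1.4 (same strings as android.Manifest.permission).
    static final String[] DECLARED = {
        "android.permission.VIBRATE",
        "android.permission.RECORD_AUDIO",
        "android.permission.WRITE_EXTERNAL_STORAGE",
        "android.permission.READ_EXTERNAL_STORAGE",
        "android.permission.INTERNET",
        "android.permission.ACCESS_NETWORK_STATE",
        "android.permission.CHANGE_NETWORK_STATE"
    };

    // The dangerous permissions among those declared above.
    private static final Set<String> DANGEROUS = Set.of(
        "android.permission.RECORD_AUDIO",
        "android.permission.WRITE_EXTERNAL_STORAGE",
        "android.permission.READ_EXTERNAL_STORAGE");

    // Keeps the declaration order so the request dialog order is predictable.
    static String[] needingRuntimeRequest(String[] declared) {
        List<String> result = new ArrayList<>();
        for (String permission : declared) {
            if (DANGEROUS.contains(permission)) {
                result.add(permission);
            }
        }
        return result.toArray(new String[0]);
    }
}
```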

2. Code development

2.1 Create a custom activity in your app for selecting audio, and return the selected audio file paths to the audio editing SDK:

```java
// Return the list of audio file paths to the audio editing page
private void sendAudioToSdk() {
    // Path of the audio file to return (example path)
    String filePath = "/sdcard/AudioEdit/audio/music.aac";
    ArrayList<String> audioList = new ArrayList<>();
    audioList.add(filePath);
    // Return the audio file paths to the audio editing page
    Intent intent = new Intent();
    // Use HAEConstant.AUDIO_PATH_LIST, provided by the SDK, as the extra key
    intent.putExtra(HAEConstant.AUDIO_PATH_LIST, audioList);
    // Use HAEConstant.RESULT_CODE, provided by the SDK, as the result code
    this.setResult(HAEConstant.RESULT_CODE, intent);
    finish();
}
```
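In a real chooser activity the path would come from the user's selection rather than being hard-coded. A minimal sketch, assuming the app lets the user pick a folder and returns every audio file in it: the class `AudioPathCollector` and its extension list are hypothetical. It returns an `ArrayList<String>`, the same type as `audioList` in `sendAudioToSdk()`.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Locale;

// Hypothetical helper: lists the audio files directly inside a folder,
// sorted by path, as absolute paths for the AUDIO_PATH_LIST extra.
final class AudioPathCollector {
    // Assumed set of extensions to treat as audio.
    private static final String[] AUDIO_EXTENSIONS = {".mp3", ".aac", ".wav", ".flac", ".m4a"};

    static ArrayList<String> collectAudioPaths(File dir) {
        ArrayList<String> paths = new ArrayList<>();
        File[] files = dir.listFiles();
        if (files == null) {
            return paths; // not a directory, missing, or not readable
        }
        Arrays.sort(files); // File is Comparable: sorts by abstract path name
        for (File f : files) {
            if (f.isFile() && hasAudioExtension(f.getName())) {
                paths.add(f.getAbsolutePath());
            }
        }
        return paths;
    }

    private static boolean hasAudioExtension(String name) {
        String lower = name.toLowerCase(Locale.ROOT);
        for (String ext : AUDIO_EXTENSIONS) {
            if (lower.endsWith(ext)) {
                return true;
            }
        }
        return false;
    }
}
```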

2.2 When the user imports audio from the UI, the SDK sends an intent with the action com.huawei.hms.audioeditor.chooseaudio to open your audio-selection activity. Register that activity in AndroidManifest.xml as follows:

```xml
<!-- Replace "Activity" with the class name of the activity created in step 2.1 -->
<activity android:name="Activity">
    <intent-filter>
        <action android:name="com.huawei.hms.audioeditor.chooseaudio" />
        <category android:name="android.intent.category.DEFAULT" />
    </intent-filter>
</activity>
```

2.3 Launch the audio editing page. When the user taps "Add audio", the SDK calls the activity defined in step 2.1. After adding audio, the user can edit it, apply special effects, and then export the edited audio.

```java
HAEUIManager.getInstance().launchEditorActivity(this);
```

2.4 If the audio material is not in MP3 format, convert it in this step.

Call transformAudioUseDefaultPath to convert the audio format and export the converted file to the default path:

```java
// Audio format conversion; the result is exported to the default path.
// inAudioPath: path of the source audio; audioFormat: target audio format
HAEAudioExpansion.getInstance().transformAudioUseDefaultPath(context, inAudioPath, audioFormat, new OnTransformCallBack() {
    // Progress callback (0-100)
    @Override
    public void onProgress(int progress) {
    }

    // Conversion failed
    @Override
    public void onFail(int errorCode) {
    }

    // Conversion succeeded
    @Override
    public void onSuccess(String outPutPath) {
    }

    // Conversion canceled
    @Override
    public void onCancel() {
    }
});

// Cancel the conversion task
HAEAudioExpansion.getInstance().cancelTransformAudio();
```
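Conversion results arrive through the four callback methods shown above. One way to keep the UI consistent is to forward them into a small state object. The sketch below is plain Java so it runs without the SDK: `TransformListener` is a hypothetical stand-in that only mirrors the four method signatures in the snippet, not the SDK's `OnTransformCallBack` type, and `TransformTracker` is likewise illustrative. In the app, each override of `OnTransformCallBack` would simply call the matching tracker method.

```java
// Hypothetical stand-in mirroring the four callback methods shown above;
// this is NOT the SDK's OnTransformCallBack type.
interface TransformListener {
    void onProgress(int progress);
    void onFail(int errorCode);
    void onSuccess(String outPutPath);
    void onCancel();
}

// Illustrative tracker: records the latest state of one conversion task.
final class TransformTracker implements TransformListener {
    enum State { RUNNING, SUCCEEDED, FAILED, CANCELLED }

    private State state = State.RUNNING;
    private int progress;      // highest progress seen, clamped to 0-100
    private String outputPath; // set on success
    private int errorCode;     // set on failure

    // Methods are synchronized in case callbacks arrive on a worker thread (assumption).
    @Override
    public synchronized void onProgress(int p) {
        if (state == State.RUNNING) {
            int clamped = Math.min(100, Math.max(0, p));
            progress = Math.max(progress, clamped); // never move the bar backwards
        }
    }

    @Override
    public synchronized void onFail(int code) {
        state = State.FAILED;
        errorCode = code;
    }

    @Override
    public synchronized void onSuccess(String path) {
        state = State.SUCCEEDED;
        progress = 100;
        outputPath = path;
    }

    @Override
    public synchronized void onCancel() {
        state = State.CANCELLED;
    }

    synchronized State state() { return state; }
    synchronized int progress() { return progress; }
    synchronized String outputPath() { return outputPath; }
    synchronized int errorCode() { return errorCode; }
}
```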

Alternatively, call transformAudio to convert the audio format and export the converted file to a target path that you specify:

```java
// Audio format conversion; the result is exported to outAudioPath.
HAEAudioExpansion.getInstance().transformAudio(context, inAudioPath, outAudioPath, new OnTransformCallBack() {
    // Progress callback (0-100)
    @Override
    public void onProgress(int progress) {
    }

    // Conversion failed
    @Override
    public void onFail(int errorCode) {
    }

    // Conversion succeeded
    @Override
    public void onSuccess(String outPutPath) {
    }

    // Conversion canceled
    @Override
    public void onCancel() {
    }
});

// Cancel the conversion task
HAEAudioExpansion.getInstance().cancelTransformAudio();
```
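`transformAudio` needs an explicit `outAudioPath`. One simple convention, assumed here rather than required by the SDK, is to write the MP3 next to the source file with the extension replaced. The class `OutputPaths` and method `toMp3Path` are hypothetical, and the sketch assumes the target format follows the extension of `outAudioPath`; check the SDK reference to confirm.

```java
// Hypothetical helper: builds an output path next to the input file with the
// extension replaced by ".mp3" (e.g. music.aac -> music.mp3).
// Note: for an input that is already .mp3 the result equals the input path,
// which is another reason to skip conversion for MP3 material.
final class OutputPaths {
    static String toMp3Path(String inAudioPath) {
        int slash = inAudioPath.lastIndexOf('/');
        int dot = inAudioPath.lastIndexOf('.');
        // Treat the dot as an extension separator only if it is inside the
        // file name and is not the leading dot of a hidden file.
        String base = (dot > slash + 1) ? inAudioPath.substring(0, dot) : inAudioPath;
        return base + ".mp3";
    }
}
```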

2.5 If the material is a video, call extractAudio to extract the audio from the video and export it to the specified directory:

```java
// outAudioDir: (optional) folder in which the extracted audio is saved
// outAudioName: (optional) name of the extracted audio file, without extension
HAEAudioExpansion.getInstance().extractAudio(context, inVideoPath, outAudioDir, outAudioName, new AudioExtractCallBack() {
    @Override
    public void onSuccess(String audioPath) {
        Log.d(TAG, "ExtractAudio onSuccess : " + audioPath);
    }

    @Override
    public void onProgress(int progress) {
        Log.d(TAG, "ExtractAudio onProgress : " + progress);
    }

    @Override
    public void onFail(int errCode) {
        Log.i(TAG, "ExtractAudio onFail : " + errCode);
    }

    @Override
    public void onCancel() {
        Log.d(TAG, "ExtractAudio onCancel.");
    }
});

// Cancel the audio extraction task
HAEAudioExpansion.getInstance().cancelExtractAudio();
```
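Both `outAudioDir` and `outAudioName` are optional, and the name must not include an extension. If you do pass a name, deriving it from the video file keeps the outputs easy to match with their sources. A minimal sketch; the class `AudioNames` and the `_audio` suffix are hypothetical choices.

```java
// Hypothetical helper: derives an extension-less audio name from a video
// path, e.g. "/sdcard/Movies/trip.mp4" -> "trip_audio".
final class AudioNames {
    static String fromVideoPath(String inVideoPath) {
        // File name only (lastIndexOf returns -1 when there is no '/').
        String name = inVideoPath.substring(inVideoPath.lastIndexOf('/') + 1);
        int dot = name.lastIndexOf('.');
        if (dot > 0) {
            name = name.substring(0, dot); // strip ".mp4", ".mov", ...
        }
        return name + "_audio";
    }
}
```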

2.6 Call getInstruments and startSeparationTasks to separate specific tracks (such as the accompaniment) from the audio:

```java
// Get the IDs of the track types that can be separated; pass the chosen IDs to setInstruments
HAEAudioSeparationFile haeAudioSeparationFile = new HAEAudioSeparationFile();
haeAudioSeparationFile.getInstruments(new SeparationCloudCallBack<List<SeparationBean>>() {
    @Override
    public void onFinish(List<SeparationBean> response) {
        // Returned data, including the track type IDs
    }

    @Override
    public void onError(int errorCode) {
        // Request failed
    }
});

// Set the track types to separate
List<String> instruments = new ArrayList<>();
// Replace with an ID returned by getInstruments
instruments.add("accompaniment id");
haeAudioSeparationFile.setInstruments(instruments);

// Start separation
haeAudioSeparationFile.startSeparationTasks(inAudioPath, outAudioDir, outAudioName, new AudioSeparationCallBack() {
    @Override
    public void onResult(SeparationBean separationBean) { }

    @Override
    public void onFinish(List<SeparationBean> separationBeans) { }

    @Override
    public void onFail(int errorCode) { }

    @Override
    public void onCancel() { }
});

// Cancel the separation task
haeAudioSeparationFile.cancel();
```
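`getInstruments` returns the separable track types, and `setInstruments` expects a list of their IDs. The sketch below shows only the selection step, using a hypothetical stand-in type, `InstrumentOption`, that holds an ID and a display name; the real `SeparationBean` accessors are not shown in this article, so map them in yourself. Matching by a name keyword is an illustrative choice.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical stand-in for one entry returned by getInstruments();
// this is NOT the SDK's SeparationBean.
final class InstrumentOption {
    final String id;
    final String name;

    InstrumentOption(String id, String name) {
        this.id = id;
        this.name = name;
    }
}

final class InstrumentSelection {
    // Returns the IDs of all options whose name contains the keyword
    // (case-insensitive), in the order the options were returned.
    static List<String> idsMatching(List<InstrumentOption> options, String keyword) {
        String needle = keyword.toLowerCase(Locale.ROOT);
        List<String> ids = new ArrayList<>();
        for (InstrumentOption option : options) {
            if (option.name.toLowerCase(Locale.ROOT).contains(needle)) {
                ids.add(option.id);
            }
        }
        return ids;
    }
}
```

The returned list is what would be passed to `haeAudioSeparationFile.setInstruments(...)`.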

2.7 Call applyAudioFile to perform spatial orientation rendering:

```java
// Spatial orientation rendering: choose ONE of the three modes below.
// Each option declares the same variable, so use only one in a given scope.

// Option 1: fixed position (static rendering)
HAESpaceRenderFile haeSpaceRenderFile = new HAESpaceRenderFile(SpaceRenderMode.POSITION);
haeSpaceRenderFile.setSpacePositionParams(new SpaceRenderPositionParams(x, y, z));

// Option 2: dynamic rendering (the sound source moves around the listener over time)
HAESpaceRenderFile haeSpaceRenderFile = new HAESpaceRenderFile(SpaceRenderMode.ROTATION);
haeSpaceRenderFile.setRotationParams(new SpaceRenderRotationParams(x, y, z, surroundTime, surroundDirection));

// Option 3: extension rendering
HAESpaceRenderFile haeSpaceRenderFile = new HAESpaceRenderFile(SpaceRenderMode.EXTENSION);
haeSpaceRenderFile.setExtensionParams(new SpaceRenderExtensionParams(radiusVal, angledVal));

// Start rendering
haeSpaceRenderFile.applyAudioFile(inAudioPath, outAudioDir, outAudioName, callBack);

// Cancel spatial orientation rendering
haeSpaceRenderFile.cancel();
```
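The `x`, `y`, `z` arguments place the sound source relative to the listener. It is often easier to think in azimuth, elevation, and distance, and, for the dynamic mode, to know where the source is at a given moment of its orbit. The plain-Java sketch below does both under explicit assumptions that this article does not confirm: the listener sits at the origin, x points right, y points up, z points forward, angles are in degrees, `surroundTime` is the time for one full revolution, and the orbit is horizontal. Check the SDK reference for the actual coordinate convention and units before feeding these values to `SpaceRenderPositionParams` or `SpaceRenderRotationParams`. The class `SpatialMath` and its methods are hypothetical.

```java
// Hypothetical helpers for choosing spatial-rendering coordinates.
// ASSUMED convention (not confirmed by the article): listener at the origin,
// x = right, y = up, z = forward; angles in degrees.
final class SpatialMath {
    // Azimuth 0 = straight ahead, 90 = to the right; elevation 90 = overhead.
    static double[] toCartesian(double azimuthDeg, double elevationDeg, double distance) {
        double az = Math.toRadians(azimuthDeg);
        double el = Math.toRadians(elevationDeg);
        double horizontal = distance * Math.cos(el); // projection onto the floor plane
        return new double[] {
            horizontal * Math.sin(az), // x
            distance * Math.sin(el),   // y
            horizontal * Math.cos(az)  // z
        };
    }

    // Position after `elapsed` time units on a horizontal orbit that starts at
    // `start` and completes one turn every `surroundTime` units (assumed meaning).
    // clockwise = true turns from straight ahead toward the right, seen from above.
    static double[] orbitPosition(double[] start, double surroundTime,
                                  boolean clockwise, double elapsed) {
        double turns = (elapsed % surroundTime) / surroundTime;
        double theta = 2 * Math.PI * turns * (clockwise ? 1 : -1);
        double x = start[0];
        double y = start[1];
        double z = start[2];
        // Rotation about the vertical (y) axis; height is unchanged.
        return new double[] {
            x * Math.cos(theta) + z * Math.sin(theta),
            y,
            -x * Math.sin(theta) + z * Math.cos(theta)
        };
    }
}
```

For example, a source starting straight ahead at distance 1 with an 8-second revolution is, a quarter turn later (2 seconds), directly to the right when moving clockwise and directly to the left when moving counterclockwise.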

After completing the steps above, your app gets the spatial dynamic rendering effect and can easily turn 2D audio into 3D sound. The function can also be used in scenarios such as corporate meetings and sports rehabilitation: for immersive product displays at exhibitions, for example, or as directional cues that make daily life easier for visually impaired people. Choose the capabilities that match your app's actual needs. For more details, see the Audio Editor Kit page on the HUAWEI Developers website and the Audio Editor Kit integration guide.

Learn more: visit the HUAWEI Developers website, read the development guide, or browse the HMS Core open-source repositories on GitHub and Gitee.
