Reference: https://blog.csdn.net/u012319493/article/details/85331567

Accessing disk-based files is a complex activity: it involves not only the VFS abstraction layer and the handling of block devices, but also the disk cache (page cache).

Below, regular files of a disk-based file system and block device files are collectively referred to simply as "files".

There are several modes of accessing files:

- Canonical mode: the file is opened with the O_SYNC and O_DIRECT flags cleared (0), and its contents are accessed with read() and write(). read() blocks the calling process until the data has been copied into the process's user address space, whereas write() returns as soon as the data has been copied into the page cache (deferred write).
- Synchronous mode: the file is opened with the O_SYNC flag set. (Older descriptions say the flag can also be set later with fcntl(), but on current Linux fcntl(F_SETFL) cannot change O_SYNC.) The flag affects only writes (reads always block): write() blocks until the data has been written to disk.
- Memory mapping mode: after opening the file, the application calls mmap() to map it into memory. The file then appears as an array of bytes in RAM, and the application accesses the array elements directly instead of calling read(), write(), or lseek().
- Direct I/O mode: the file is opened with the O_DIRECT flag set. Every read or write transfers data directly between the user address space and the disk, bypassing the page cache.
- Asynchronous mode: files can be accessed asynchronously either through a set of POSIX APIs or through Linux-specific system calls. A data transfer request does not block the calling process; it is carried out in the background while the application continues its normal execution.

1. System calls for read/write operations

Reference: https://blog.csdn.net/yiqiaoxihui/article/details/79958039?spm=1001.2014.3001.5501

Reference: https://blog.csdn.net/tiantao2012/article/details/70770500

The service routines of read() and write() eventually call the read and write methods of the file object, which may be specific to the file system.

For disk-based file systems, these methods determine the location of the physical blocks that hold the requested data and activate the block device driver to start the data transfer.

Reading a file is page-based: the kernel always transfers whole pages of data at a time.

If the data is not in RAM when the process issues read(), the kernel allocates a new page frame, fills the page with the appropriate part of the file, adds the page to the page cache, and finally copies the requested bytes into the process address space.

For most file systems, reading a page of data from a file amounts to finding out which blocks on disk hold the requested data.

Once this is done, the kernel fills the pages by submitting the appropriate I/O operations to the generic block layer.

Most disk file systems implement the read method with the generic function generic_file_read().

For disk-based files, writing is more complex: because the file size can change, the kernel may have to allocate physical blocks on the disk.

Many disk file systems implement the write method with generic_file_write().

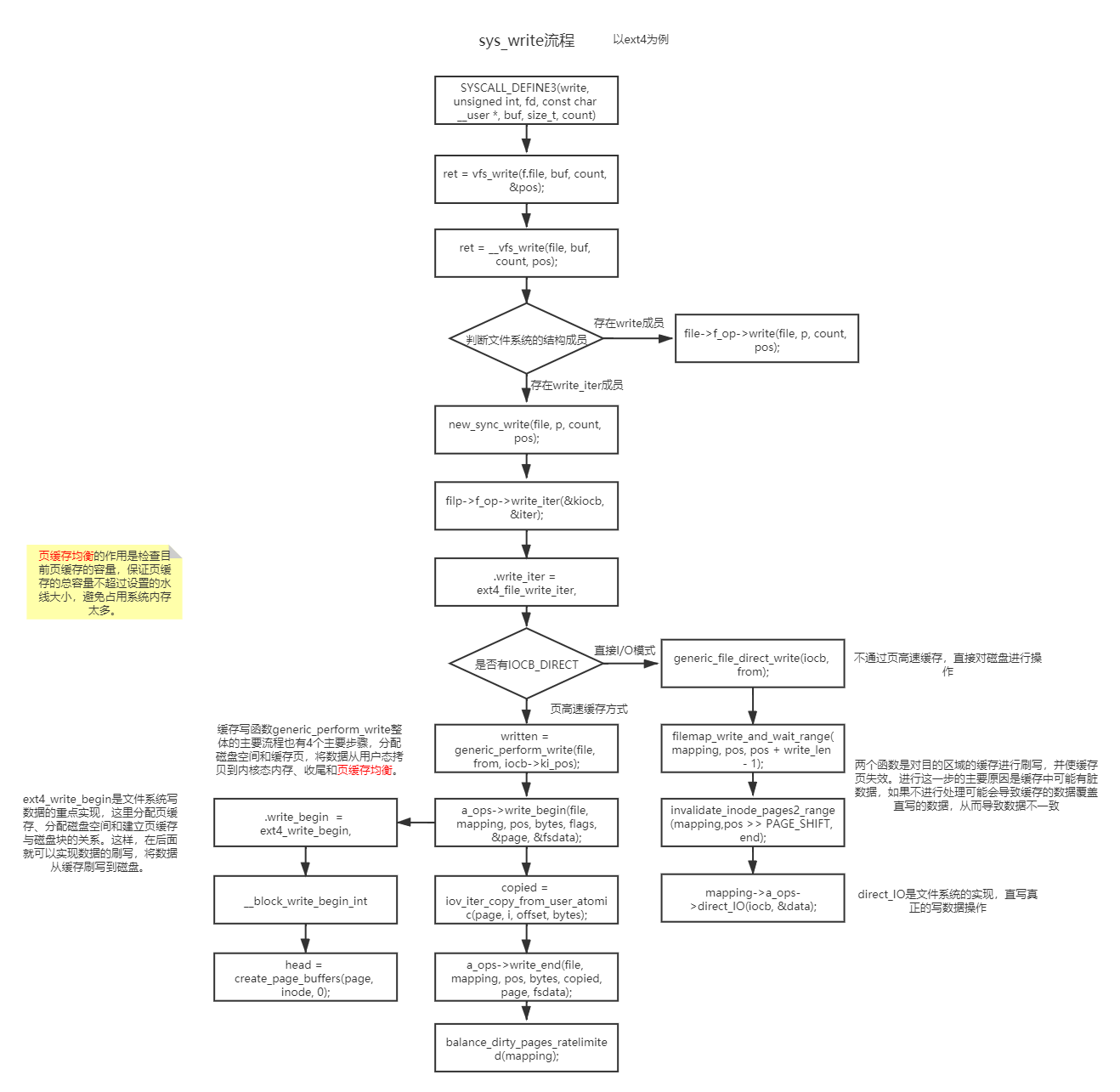

1.1 sys_write process

sys_write() is one of the core functions of the Linux file system layer: it writes the contents of a user-space buffer to the corresponding position of the file on disk (normally by way of the page cache).

fs/read_write.c

    SYSCALL_DEFINE3(write, unsigned int, fd, const char __user *, buf, size_t, count)
    {
        struct file *file;
        ssize_t ret = -EBADF;
        int fput_needed;

        file = fget_light(fd, &fput_needed);
        if (file) {
            loff_t pos = file_pos_read(file);     // Read the current file offset, file->f_pos
            ret = vfs_write(file, buf, count, &pos);
            file_pos_write(file, pos);            // Store the updated offset: file->f_pos = pos
            fput_light(file, fput_needed);
        }

        return ret;
    }

    ssize_t __vfs_write(struct file *file, const char __user *p, size_t count,
                        loff_t *pos)
    {
        if (file->f_op->write)
            return file->f_op->write(file, p, count, pos);   // Call the file system's own write method
        else if (file->f_op->write_iter)
            return new_sync_write(file, p, count, pos);      // Wrap ->write_iter in a synchronous kiocb
        else
            return -EINVAL;
    }
    EXPORT_SYMBOL(__vfs_write);

__vfs_write() calls the write method of the specific file system: it first tries file->f_op->write and, if that is not defined, falls back to file->f_op->write_iter through new_sync_write(). If neither exists, it returns -EINVAL.

file->f_op is filled in when the file is opened, from the inode's i_fop, which the file system sets up for its inodes. Taking ext4 as an example, regular files use ext4_file_operations:

    const struct file_operations ext4_file_operations = {
        .llseek            = ext4_llseek,
        .read_iter         = generic_file_read_iter,
        .write_iter        = ext4_file_write_iter,
        .unlocked_ioctl    = ext4_ioctl,
    #ifdef CONFIG_COMPAT
        .compat_ioctl      = ext4_compat_ioctl,
    #endif
        .mmap              = ext4_file_mmap,
        .open              = ext4_file_open,
        .release           = ext4_release_file,
        .fsync             = ext4_sync_file,
        .get_unmapped_area = thp_get_unmapped_area,
        .splice_read       = generic_file_splice_read,
        .splice_write      = iter_file_splice_write,
        .fallocate         = ext4_fallocate,
    };

The call flow can be drawn as a diagram.

The actual write is ultimately carried out by ext4: since ext4_file_operations defines .write_iter but no .write, __vfs_write() takes the new_sync_write() path, which ends in ext4_file_write_iter(). The exact path also depends on the flags the file was opened with (O_DIRECT, O_SYNC and so on); this is only one example.

In other words, the application does not need to care which file system it is talking to. It only uses the generic VFS interface, while each file system implements the read/write methods and registers itself in the kernel's list of file systems.

Reference: https://baijiahao.baidu.com/s?id=1621334078382950294&wfr=spider&for=pc

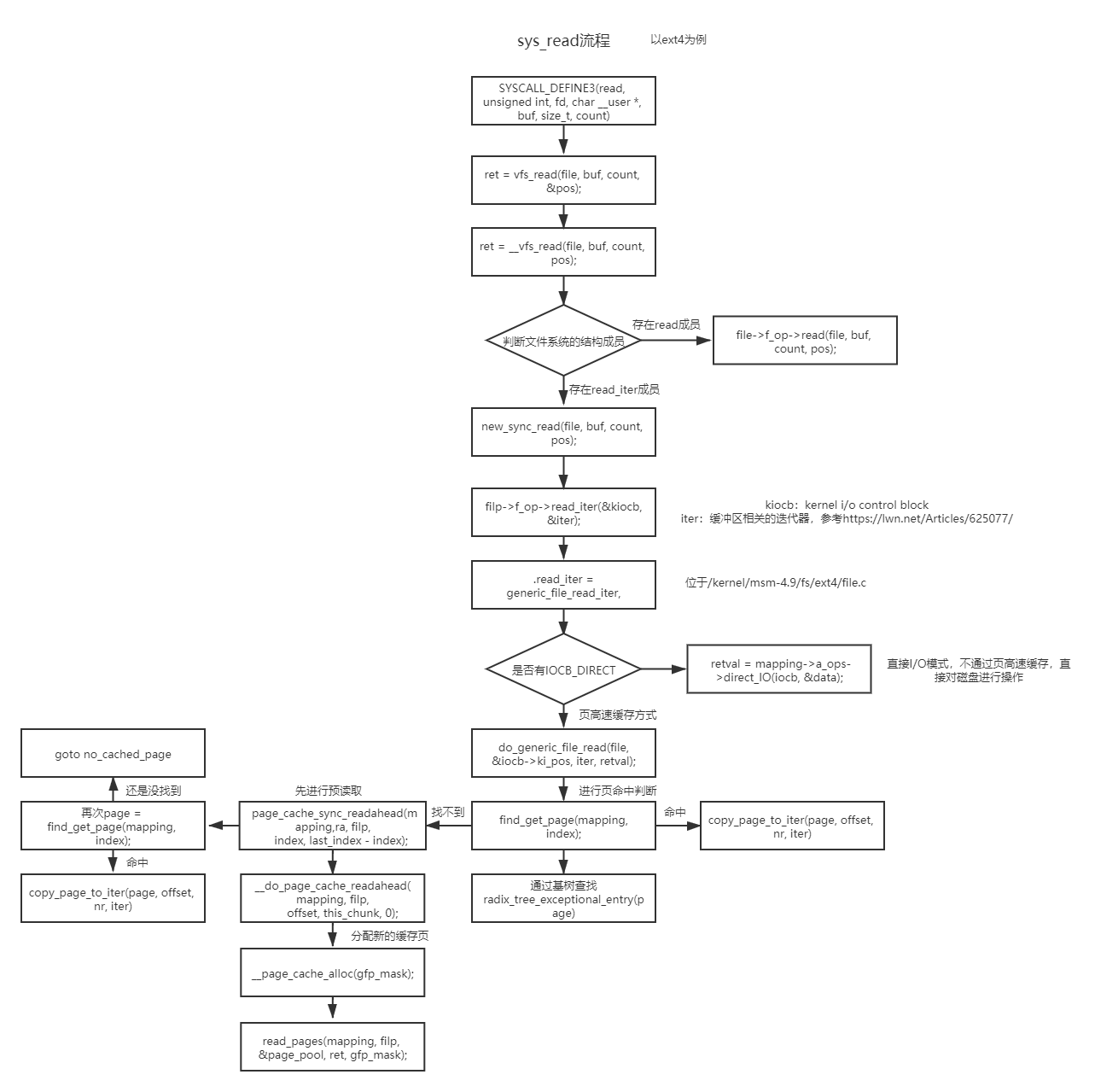

1.2 sys_read process