Brief introduction

As my research went deeper, I realized that loading a PyTorch-trained model with libtorch is a rather roundabout approach. It does meet our needs, but it may not be the best one. ONNX is now a widely used model format, so I wondered whether this general-purpose format could solve the problem.

The answer turned out to be close at hand: OpenCV has a DNN module that can load ONNX models directly, so all we need to do is convert the pth model trained with PyTorch into an ONNX model. This article shows how to convert a PyTorch-trained model to ONNX and run predictions on it with the DNN module.

pth model to ONNX

In the previous article, we showed how to build the network with PyTorch and train the model; the trained model is saved in pth format. If anything is unclear, please refer to that article. Here we continue from it and convert the model format.

1. Load the pth model:

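```python
import torch

# LeNet5 is the network class built in the previous article (6 output classes)
model = LeNet5(6)
# The pth file stores the whole model; keep only its parameters
state_dict = torch.load(input_pth_model, map_location='cpu').state_dict()
# Load the parameters into the new network
model.load_state_dict(state_dict)
```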

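A note on this step: since .state_dict() is called on whatever torch.load() returns, the pth file here contains the whole pickled model. torch.load() therefore still reads the entire model into memory, and .state_dict() then extracts only its parameters (weights and buffers) so they can be loaded into a freshly built LeNet5. To read only the parameters from disk, save just the state_dict during training with torch.save(model.state_dict(), path) and restore it with model.load_state_dict(torch.load(path)); the saved file then no longer depends on pickling the model class itself.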

2. Convert the model:

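```python
# Example input with the shape the network expects: batch 1, 1 channel (grayscale), H x W
dummy_input = torch.randn(1, 1, input_img_size, input_img_size)
input_names = ["input_image"]
output_names = ["output_classification"]
# Switch to inference mode before exporting
model.eval()
# Run the dummy input through the model and write the ONNX file
torch.onnx.export(model, dummy_input, output_ONNX, verbose=True,
                  input_names=input_names, output_names=output_names)
```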

Here input_img_size, input_pth_model and output_ONNX must be set by you: the input image size used in training, the path of the pth file, and the path of the ONNX file to write.
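On the C++ side, the network must receive a blob with the same shape as dummy_input, 1 x 1 x input_img_size x input_img_size, laid out as N x C x H x W. OpenCV's dnn::blobFromImage builds that blob from a cv::Mat by subtracting the mean and then multiplying by scalefactor. To show what happens to the pixels, here is a dependency-free sketch; imageToBlob is a hypothetical name, it uses one mean value for all channels, and it ignores blobFromImage's resize, crop and channel-swap options.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper mirroring what dnn::blobFromImage does to pixel values:
// reads an 8-bit image stored interleaved (H x W x C, as cv::Mat stores it)
// and writes a float blob in planar N x C x H x W order with N = 1.
// Each value becomes (pixel - mean) * scale.
std::vector<float> imageToBlob(const std::vector<std::uint8_t>& pixels,
                               int height, int width, int channels,
                               double scale = 1.0, double mean = 0.0) {
    assert(pixels.size() == static_cast<std::size_t>(height) * width * channels);
    std::vector<float> blob(pixels.size());
    for (int c = 0; c < channels; ++c) {
        for (int y = 0; y < height; ++y) {
            for (int x = 0; x < width; ++x) {
                // Source offset in the interleaved image
                std::size_t src = (static_cast<std::size_t>(y) * width + x) * channels + c;
                // Destination offset in the planar blob: channel plane, then row, then column
                std::size_t dst = (static_cast<std::size_t>(c) * height + y) * width + x;
                blob[dst] = static_cast<float>((pixels[src] - mean) * scale);
            }
        }
    }
    return blob;
}
```

For a grayscale digit the layout is unchanged (one channel plane), so what matters most is that the size and value range match training. The input must already be input_img_size x input_img_size, or the size argument of blobFromImage can resize it. If the training pipeline used torchvision's transforms.ToTensor(), which scales pixels to [0, 1], the matching call would be dnn::blobFromImage(src, 1.0 / 255); whether that applies here depends on the preprocessing in the previous article, which is not shown.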

Load the ONNX model

Here, we first create a class that manages the network, loads the model and runs predictions.

Class declaration (Dnn.h)

```cpp
// Dnn.h
#pragma once

#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>
#include <iostream>
#include <string>

using namespace std;
using namespace cv;

class Dnn_NumDetect
{
public:
    Dnn_NumDetect(const string& path);

    // Run a forward pass; returns (class id, confidence)
    Point2f forward(Mat& src);

private:
    dnn::Net Lenet5;

    // Load the ONNX model
    void loadModel(const string& path);

    // Normalize the network output (its definition is not shown in this article)
    void Mat_Normalization(Mat &matrix);
};
```

Class implementation

```cpp
#include "Dnn.h"

/**
 * @brief Use the OpenCV DNN module to read the ONNX model
 * @note If OpenCV is built with Intel's Inference Engine, DNN_BACKEND_DEFAULT means
 *       DNN_BACKEND_INFERENCE_ENGINE; otherwise it means DNN_BACKEND_OPENCV.
 */
Dnn_NumDetect::Dnn_NumDetect(const string &path)
{
    this->loadModel(path);
    // Ask the network to use a specific computation backend where supported
    this->Lenet5.setPreferableBackend(dnn::DNN_BACKEND_OPENCV);
    // Ask the network to run its computations on a specific target device
    this->Lenet5.setPreferableTarget(dnn::DNN_TARGET_CPU);
}

/**
 * @brief Load the ONNX model
 */
void Dnn_NumDetect::loadModel(const string &path)
{
    this->Lenet5 = dnn::readNetFromONNX(path);
    CV_Assert(!this->Lenet5.empty());
}

/**
 * @brief Run a forward pass through the network
 * @return Point2f whose x is the predicted class id and whose y is its confidence
 */
Point2f Dnn_NumDetect::forward(Mat &src)
{
    CV_Assert(!this->Lenet5.empty());
    // Convert the image into a 4D blob (N x C x H x W) and set it as the input
    Mat input = dnn::blobFromImage(src);
    this->Lenet5.setInput(input);
    Mat prob = this->Lenet5.forward();
    // cout << prob << endl;
    // Normalize the raw output scores
    this->Mat_Normalization(prob);
    // cout << prob << endl;
    Point classIdPoint;
    double confidence;
    // Find the maximum value (the confidence) and its position (the class id)
    minMaxLoc(prob.reshape(1, 1), nullptr, &confidence, nullptr, &classIdPoint);
    int classId = classIdPoint.x;
    return Point2f(static_cast<float>(classId), static_cast<float>(confidence));
}
```
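The class calls Mat_Normalization, but its body is not included above. Assuming it is a softmax over the network's raw scores (a guess based on its name and on the confidence being read right after it), a dependency-free sketch of that step and of the argmax that minMaxLoc performs could look like this; softmaxInPlace and argmaxWithConfidence are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Softmax in place: turns raw scores (logits) into probabilities that sum to 1.
// Subtracting the maximum first keeps std::exp from overflowing and does not
// change the result.
void softmaxInPlace(std::vector<float>& scores) {
    assert(!scores.empty());
    const float maxScore = *std::max_element(scores.begin(), scores.end());
    float sum = 0.0f;
    for (float& s : scores) {
        s = std::exp(s - maxScore);
        sum += s;
    }
    for (float& s : scores) {
        s /= sum;
    }
}

// Same job as the minMaxLoc call: the index of the largest value (class id)
// together with that value (confidence).
std::pair<int, float> argmaxWithConfidence(const std::vector<float>& probs) {
    assert(!probs.empty());
    auto it = std::max_element(probs.begin(), probs.end());
    return {static_cast<int>(it - probs.begin()), *it};
}
```

Softmax preserves the order of the scores, so the predicted class is the same with or without it; what changes is that the confidence becomes a probability between 0 and 1. Inside Dnn_NumDetect, the same loops could run over prob.ptr<float>(0) for prob.total() elements, since for this six-class network the output should be a single row of CV_32F scores.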

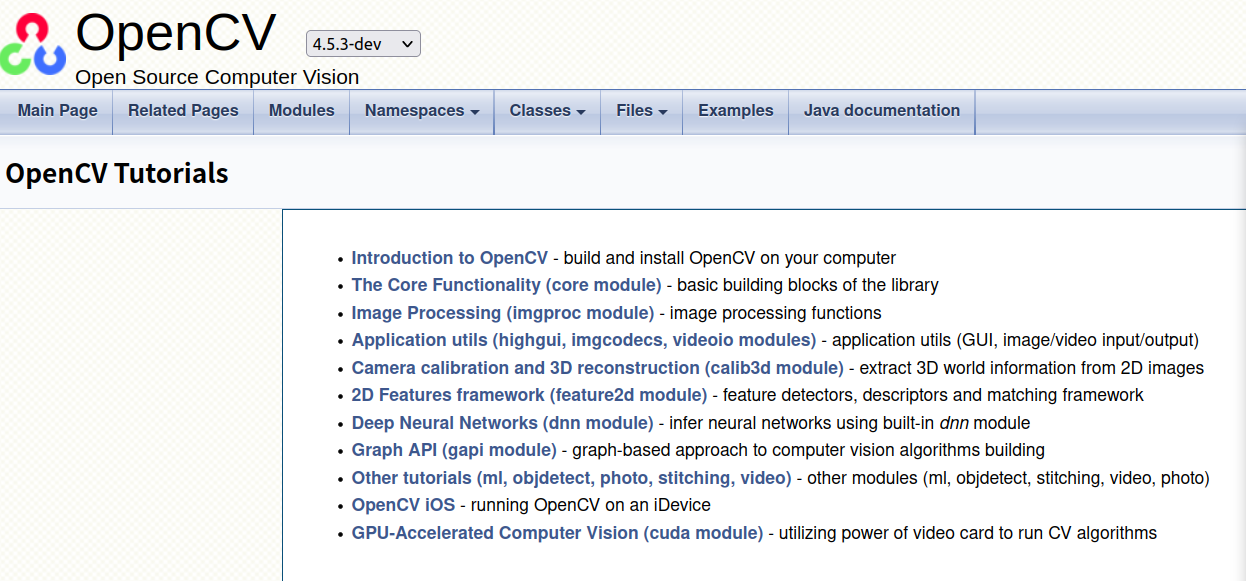

If you need more functionality from the DNN module, the official OpenCV tutorials describe the dnn module in more detail.

Summary

For digit recognition, I have tried three approaches:

1. Build and train the LeNet5 network directly with libtorch to produce a pt model

2. Train a pth model with PyTorch, convert it into a pt model, and load that with libtorch

3. Load an ONNX model with OpenCV's dnn module (this route also makes it easier to accelerate inference with OpenVINO)

One odd thing showed up when measuring running time: the first prediction usually takes much longer, and subsequent predictions are significantly faster.
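The slow first prediction is most likely a warm-up effect rather than a problem with the model: OpenCV's dnn module allocates and initializes the network's layers during the first forward() call, and other one-time costs such as memory allocation also land on that call. When comparing running times it therefore helps to exclude the first call. A minimal sketch using only std::chrono; timeAfterWarmup is a hypothetical helper, and the usage in the comment assumes a Dnn_NumDetect object named detector and an input image img.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical benchmarking helper: runs `work` once as an unmeasured warm-up,
// then times `runs` further calls and returns each duration in milliseconds.
// Example: timeAfterWarmup([&] { detector.forward(img); }, 100);
std::vector<double> timeAfterWarmup(const std::function<void()>& work, std::size_t runs) {
    assert(static_cast<bool>(work));
    work();  // warm-up call: absorbs one-time setup, excluded from the results
    std::vector<double> ms;
    ms.reserve(runs);
    for (std::size_t i = 0; i < runs; ++i) {
        auto start = std::chrono::steady_clock::now();
        work();
        auto end = std::chrono::steady_clock::now();
        ms.push_back(std::chrono::duration<double, std::milli>(end - start).count());
    }
    return ms;
}
```

steady_clock is used because, unlike system_clock, it never jumps backwards, so each measured interval is non-negative. Averaging the returned values gives a steady-state figure that can be compared fairly across the three approaches.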

Comparison of the three methods:

Building and training the network directly with libtorch took the longest and gave the lowest accuracy, so I do not recommend it.

Loading the pt model with libtorch and loading the ONNX model with the dnn module give roughly the same accuracy, but the ONNX model runs faster.