1. Requirement: record speech and convert it into text

2. API used: iFLYTEK voice dictation (streaming)

3. Configure the files in the Vue project:

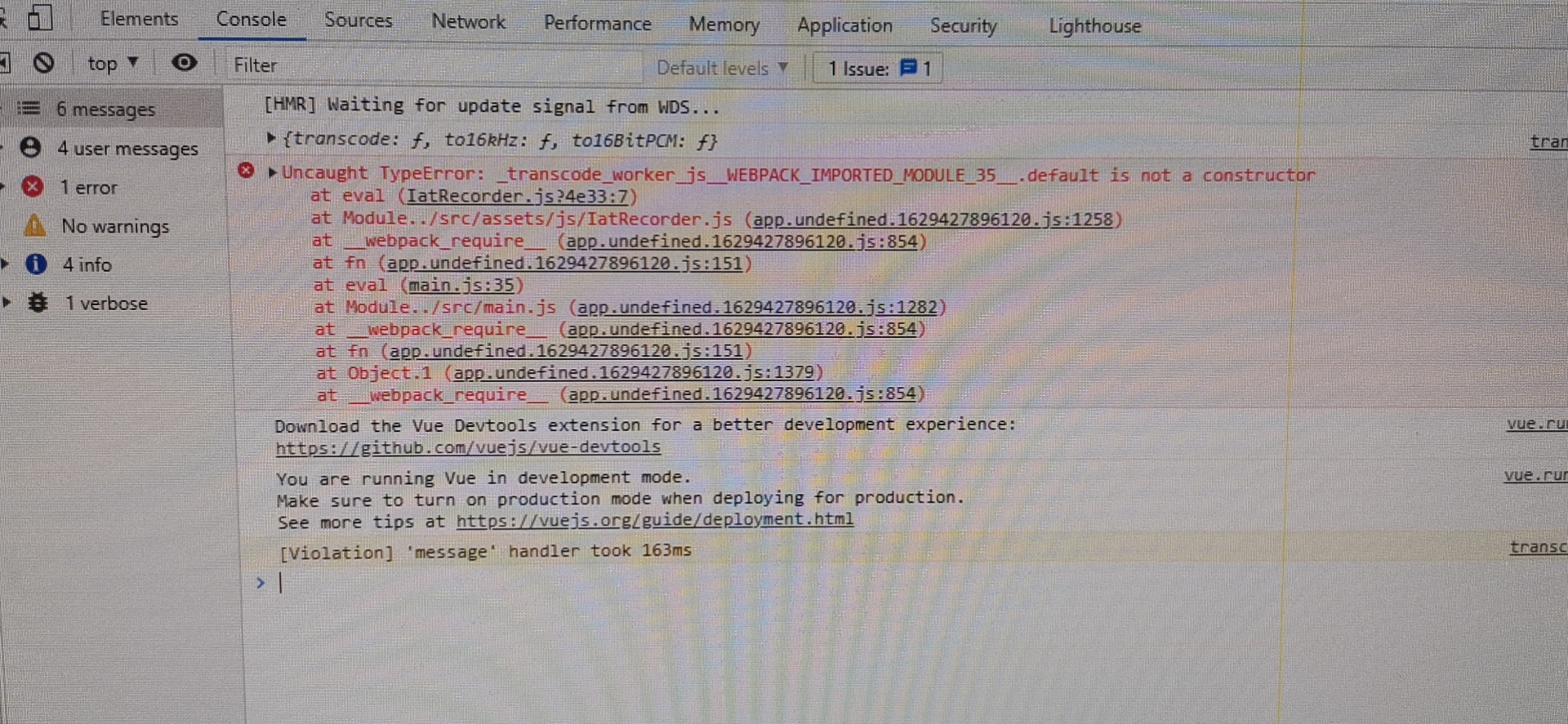

4. Problem: after this configuration, `new Worker()` in IatRecorder.js reports an error

Solution:

Step 1: download the JavaScript version of the iFLYTEK demo to get `IatRecorder.js` and `transcode.worker.js`, then copy both `.js` files into `src` (the page in Step 2 imports them from `src/assets/js`).

transcode.worker.js:

```javascript
// transcode.worker.js: runs on a separate thread and converts raw microphone samples
self.onmessage = function (e) {
  transAudioData.transcode(e.data)
}

let transAudioData = {
  // Resample to 16 kHz, convert to 16-bit PCM, and post the raw bytes back to the page
  transcode(audioData) {
    let output = transAudioData.to16kHz(audioData)
    output = transAudioData.to16BitPCM(output)
    output = Array.from(new Uint8Array(output.buffer))
    self.postMessage(output)
  },

  // Linear-interpolation resampling; assumes the input is 44.1 kHz
  to16kHz(audioData) {
    const data = new Float32Array(audioData)
    const fitCount = Math.round(data.length * (16000 / 44100))
    const newData = new Float32Array(fitCount)
    const springFactor = (data.length - 1) / (fitCount - 1)
    newData[0] = data[0]
    for (let i = 1; i < fitCount - 1; i++) {
      const tmp = i * springFactor
      const before = Math.floor(tmp)
      const after = Math.ceil(tmp)
      const atPoint = tmp - before
      newData[i] = data[before] + (data[after] - data[before]) * atPoint
    }
    newData[fitCount - 1] = data[data.length - 1]
    return newData
  },

  // Clamp float samples to [-1, 1] and write them as little-endian signed 16-bit integers
  to16BitPCM(input) {
    const dataLength = input.length * (16 / 8)
    const dataBuffer = new ArrayBuffer(dataLength)
    const dataView = new DataView(dataBuffer)
    let offset = 0
    for (let i = 0; i < input.length; i++, offset += 2) {
      const s = Math.max(-1, Math.min(1, input[i]))
      dataView.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7fff, true)
    }
    return dataView
  },
}
```
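`to16kHz` hard-codes 44100 Hz as the input rate, but an `AudioContext` normally runs at the output device's rate, and 48000 Hz is common. In that case the 16 kHz stream comes out roughly 9% slower and lower in pitch (48000 / 44100 ≈ 1.088), which may hurt recognition. Below is a minimal sketch, under the assumption that the page also sends `audioContext.sampleRate` to the worker; `resampleLinear` and `floatTo16BitPCM` are hypothetical names, not part of the iFLYTEK demo.

```javascript
// Hypothetical helpers: same interpolation scheme as to16kHz, but with an explicit input rate.
function resampleLinear(samples, inputRate, outputRate = 16000) {
  const data = Float32Array.from(samples)
  const outLength = Math.round(data.length * (outputRate / inputRate))
  const out = new Float32Array(outLength)
  if (outLength === 0) return out
  if (outLength === 1) {
    out[0] = data[0]
    return out
  }
  // Distance, in input samples, between two consecutive output samples
  const step = (data.length - 1) / (outLength - 1)
  for (let i = 0; i < outLength; i++) {
    const pos = i * step
    const before = Math.floor(pos)
    // Clamp so floating-point error never reads past the last input sample
    const after = Math.min(Math.ceil(pos), data.length - 1)
    const frac = pos - before
    out[i] = data[before] + (data[after] - data[before]) * frac
  }
  return out
}

// Clamp to [-1, 1] and encode as little-endian signed 16-bit PCM bytes
function floatTo16BitPCM(samples) {
  const view = new DataView(new ArrayBuffer(samples.length * 2))
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]))
    view.setInt16(i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true)
  }
  return new Uint8Array(view.buffer)
}

// One 4096-sample buffer captured at 48 kHz
const pcm16k = resampleLinear(new Float32Array(4096).fill(0.5), 48000)
console.log(pcm16k.length) // 1365
console.log(floatTo16BitPCM(pcm16k).length) // 2730
```

In the worker, `onmessage` would then receive something like `{ samples, sampleRate }` (an assumed message shape) and call `resampleLinear(e.data.samples, e.data.sampleRate)`.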

IatRecorder.js:

Here you need to install crypto-js manually and import it with `import CryptoJS from 'crypto-js'`:

```bash
npm install crypto-js --save-dev
```
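crypto-js is only used to compute the HMAC-SHA256 signature in `getWebSocketUrl`. For reference, here is a sketch of the same signing step with Node.js's built-in `crypto` module instead of crypto-js, for example if you prefer to sign on a server so that `API_SECRET` is not shipped in the front-end bundle. `buildIatUrl` is a hypothetical name, and URL-encoding the query values with `URLSearchParams` is an addition of this sketch (the demo concatenates them as-is).

```javascript
// Sketch: the signing logic of getWebSocketUrl, using Node's crypto instead of crypto-js.
const crypto = require('crypto')

function buildIatUrl(apiKey, apiSecret, date = new Date().toUTCString()) {
  const host = 'iat-api.xfyun.cn'
  // String to sign: host, date and the request line, in that order
  const signatureOrigin = `host: ${host}\ndate: ${date}\nGET /v2/iat HTTP/1.1`
  // HMAC-SHA256 keyed with the API secret, Base64-encoded (like CryptoJS.HmacSHA256 + enc.Base64)
  const signature = crypto.createHmac('sha256', apiSecret).update(signatureOrigin).digest('base64')
  const authorizationOrigin =
    `api_key="${apiKey}", algorithm="hmac-sha256", ` +
    `headers="host date request-line", signature="${signature}"`
  // Base64 via Buffer, equivalent to btoa() for this ASCII string
  const authorization = Buffer.from(authorizationOrigin).toString('base64')
  // Encode the query values; the date contains spaces and commas
  const query = new URLSearchParams({ authorization, date, host })
  return `wss://${host}/v2/iat?${query}`
}

console.log(buildIatUrl('your API_KEY', 'your API_SECRET'))
```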

```javascript
import CryptoJS from 'crypto-js'
// worker-loader turns this import into a Worker constructor (see Step 3)
import Worker from './transcode.worker.js'

const APPID = 'your APPID'
const API_SECRET = 'your API_SECRET'
const API_KEY = 'your API_KEY'

const transWorker = new Worker()
console.log(transWorker)

let startTime = ''
let endTime = ''

// Build the authenticated WebSocket URL (HMAC-SHA256 signature over host, date and request line)
function getWebSocketUrl() {
  return new Promise((resolve, reject) => {
    // The request address differs between languages
    let url = 'wss://iat-api.xfyun.cn/v2/iat'
    const host = 'iat-api.xfyun.cn'
    const apiKey = API_KEY
    const apiSecret = API_SECRET
    const date = new Date().toUTCString()
    const algorithm = 'hmac-sha256'
    const headers = 'host date request-line'
    const signatureOrigin = `host: ${host}\ndate: ${date}\nGET /v2/iat HTTP/1.1`
    const signatureSha = CryptoJS.HmacSHA256(signatureOrigin, apiSecret)
    const signature = CryptoJS.enc.Base64.stringify(signatureSha)
    const authorizationOrigin = `api_key="${apiKey}", algorithm="${algorithm}", headers="${headers}", signature="${signature}"`
    const authorization = btoa(authorizationOrigin)
    url = `${url}?authorization=${authorization}&date=${date}&host=${host}`
    resolve(url)
  })
}

const IatRecorder = class {
  constructor({ language, accent, appId } = {}) {
    const self = this
    this.status = 'null'
    this.language = language || 'zh_cn'
    this.accent = accent || 'mandarin'
    this.appId = appId || APPID
    // Recorded audio data (16-bit PCM bytes produced by the worker)
    this.audioData = []
    // Final dictation result
    this.resultText = ''
    // With wpgs enabled, the intermediate result is kept here
    this.resultTextTemp = ''
    transWorker.onmessage = function (event) {
      self.audioData.push(...event.data)
    }
  }

  // Change the recording/dictation status
  setStatus(status) {
    this.onWillStatusChange && this.status !== status && this.onWillStatusChange(this.status, status)
    this.status = status
  }

  setResultText({ resultText, resultTextTemp } = {}) {
    this.onTextChange && this.onTextChange(resultTextTemp || resultText || '')
    resultText !== undefined && (this.resultText = resultText)
    resultTextTemp !== undefined && (this.resultTextTemp = resultTextTemp)
  }

  // Change dictation parameters
  setParams({ language, accent } = {}) {
    language && (this.language = language)
    accent && (this.accent = accent)
  }

  // Connect to the WebSocket
  connectWebSocket() {
    return getWebSocketUrl().then(url => {
      let iatWS
      if ('WebSocket' in window) {
        iatWS = new WebSocket(url)
      } else if ('MozWebSocket' in window) {
        iatWS = new MozWebSocket(url)
      } else {
        alert('Browser does not support WebSocket')
        return
      }
      this.webSocket = iatWS
      this.setStatus('init')
      iatWS.onopen = e => {
        this.setStatus('ing')
        // Start sending audio 500 ms after the connection opens
        setTimeout(() => {
          this.webSocketSend()
        }, 500)
      }
      iatWS.onmessage = e => {
        this.result(e.data)
      }
      iatWS.onerror = e => {
        this.recorderStop()
      }
      iatWS.onclose = e => {
        endTime = Date.now()
        console.log('Duration', endTime - startTime)
        this.recorderStop()
      }
    })
  }

  // Initialize browser recording
  recorderInit() {
    navigator.getUserMedia =
      navigator.getUserMedia ||
      navigator.webkitGetUserMedia ||
      navigator.mozGetUserMedia ||
      navigator.msGetUserMedia

    // Create the audio context
    try {
      this.audioContext = new (window.AudioContext || window.webkitAudioContext)()
      this.audioContext.resume()
      if (!this.audioContext) {
        alert('Browser does not support the Web Audio API')
        return
      }
    } catch (e) {
      if (!this.audioContext) {
        alert('Browser does not support the Web Audio API')
        return
      }
    }

    // Success callback once recording permission is granted
    const getMediaSuccess = stream => {
      // ScriptProcessorNode lets JavaScript process the audio directly (buffer size 0: browser chooses)
      this.scriptProcessor = this.audioContext.createScriptProcessor(0, 1, 1)
      this.scriptProcessor.onaudioprocess = e => {
        // Hand the samples to the worker only while dictating
        if (this.status === 'ing') {
          transWorker.postMessage(e.inputBuffer.getChannelData(0))
        }
      }
      // MediaStreamAudioSourceNode: makes the microphone stream available to the audio graph
      this.mediaSource = this.audioContext.createMediaStreamSource(stream)
      // Connect source -> processor -> destination
      this.mediaSource.connect(this.scriptProcessor)
      this.scriptProcessor.connect(this.audioContext.destination)
      this.connectWebSocket()
    }

    const getMediaFail = e => {
      this.audioContext && this.audioContext.close()
      this.audioContext = undefined
      // Close the WebSocket
      if (this.webSocket && this.webSocket.readyState === 1) {
        this.webSocket.close()
      }
    }

    // Request recording permission
    if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
      navigator.mediaDevices
        .getUserMedia({ audio: true, video: false })
        .then(stream => getMediaSuccess(stream))
        .catch(e => getMediaFail(e))
    } else if (navigator.getUserMedia) {
      navigator.getUserMedia(
        { audio: true, video: false },
        stream => getMediaSuccess(stream),
        e => getMediaFail(e)
      )
    } else {
      if (navigator.userAgent.toLowerCase().match(/chrome/) && location.origin.indexOf('https://') < 0) {
        alert('For security reasons, Chrome only grants recording permission on localhost, 127.0.0.1 or https')
      } else {
        alert('Unable to access the browser recording function. Please upgrade the browser or use Chrome')
      }
      this.audioContext && this.audioContext.close()
      return
    }
  }

  recorderStart() {
    if (!this.audioContext) {
      this.recorderInit()
    } else {
      this.audioContext.resume()
      this.connectWebSocket()
    }
  }

  // Pause recording
  recorderStop() {
    // In Safari, resuming after suspend() yields blank recordings, so do not suspend there
    if (!(/Safari/.test(navigator.userAgent) && !/Chrome/.test(navigator.userAgent))) {
      this.audioContext && this.audioContext.suspend()
    }
    this.setStatus('end')
  }

  // Base64-encode the processed audio bytes
  toBase64(buffer) {
    let binary = ''
    const bytes = new Uint8Array(buffer)
    const len = bytes.byteLength
    for (let i = 0; i < len; i++) {
      binary += String.fromCharCode(bytes[i])
    }
    return window.btoa(binary)
  }

  // Send audio over the WebSocket
  webSocketSend() {
    if (this.webSocket.readyState !== 1) {
      return
    }
    // 1280 bytes = 40 ms of 16 kHz, 16-bit mono audio
    let audioData = this.audioData.splice(0, 1280)
    const params = {
      common: {
        app_id: this.appId,
      },
      business: {
        language: this.language, // Minor languages can be enabled in the console: Voice dictation (streaming) > Dialect/Language
        domain: 'iat',
        accent: this.accent, // Chinese dialects can be enabled on the same console page
      },
      data: {
        status: 0, // first frame
        format: 'audio/L16;rate=16000',
        encoding: 'raw',
        audio: this.toBase64(audioData),
      },
    }
    console.log('parameter language:', this.language)
    console.log('parameter accent:', this.accent)
    this.webSocket.send(JSON.stringify(params))
    startTime = Date.now()
    // Send one intermediate frame every 40 ms
    this.handlerInterval = setInterval(() => {
      // The WebSocket is no longer open
      if (this.webSocket.readyState !== 1) {
        console.log('WebSocket not connected')
        this.audioData = []
        clearInterval(this.handlerInterval)
        return
      }
      if (this.audioData.length === 0) {
        console.log('Auto off', this.status)
        // Recording has stopped and the buffer is drained: send the last frame
        if (this.status === 'end') {
          this.webSocket.send(
            JSON.stringify({
              data: {
                status: 2,
                format: 'audio/L16;rate=16000',
                encoding: 'raw',
                audio: '',
              },
            })
          )
          this.audioData = []
          clearInterval(this.handlerInterval)
        }
        return false
      }
      audioData = this.audioData.splice(0, 1280)
      // Intermediate frame
      this.webSocket.send(
        JSON.stringify({
          data: {
            status: 1,
            format: 'audio/L16;rate=16000',
            encoding: 'raw',
            audio: this.toBase64(audioData),
          },
        })
      )
    }, 40)
  }

  result(resultData) {
    // Parse one recognition result message
    const jsonData = JSON.parse(resultData)
    if (jsonData.data && jsonData.data.result) {
      const data = jsonData.data.result
      let str = ''
      const ws = data.ws
      for (let i = 0; i < ws.length; i++) {
        str = str + ws[i].cw[0].w
      }
      console.log('Recognition result:', str)
      // data.pgs is present only when wpgs (dynamic correction) is enabled in the console.
      // 'apd': this result is appended to the previous final result;
      // 'rpl': it replaces earlier results, with the replaced range given in data.rg
      if (data.pgs) {
        if (data.pgs === 'apd') {
          // Promote resultTextTemp to resultText
          this.setResultText({ resultText: this.resultTextTemp })
        }
        // Store the result in resultTextTemp
        this.setResultText({ resultTextTemp: this.resultText + str })
      } else {
        this.setResultText({ resultText: this.resultText + str })
      }
    }
    if (jsonData.code === 0 && jsonData.data.status === 2) {
      this.webSocket.close()
    }
    if (jsonData.code !== 0) {
      this.webSocket.close()
      console.log(`${jsonData.code}:${jsonData.message}`)
    }
  }

  start() {
    this.recorderStart()
    this.setResultText({ resultText: '', resultTextTemp: '' })
  }

  stop() {
    this.recorderStop()
  }
}

export default IatRecorder
```
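`webSocketSend` follows a three-stage frame protocol: a first frame (`status: 0`) carrying the `common` and `business` parameters, intermediate frames (`status: 1`) of 1280 bytes sent every 40 ms, and an empty final frame (`status: 2`) once recording has ended. The 1280 comes from the audio format: 16000 samples/s × 2 bytes × 0.04 s = 1280 bytes, so one frame every 40 ms keeps pace with real time. The sketch below builds the same sequence for an already-recorded buffer; `buildIatFrames` is a hypothetical helper that produces all frames at once instead of on a timer, and it assumes the buffer is not empty.

```javascript
// Sketch: the frame sequence produced by webSocketSend, built in one pass.
const FRAME_BYTES = 1280 // 40 ms of 16 kHz, 16-bit mono PCM: 16000 * 2 * 0.04

function buildIatFrames(pcmBytes, { appId, language = 'zh_cn', accent = 'mandarin' }) {
  const toBase64 = bytes => Buffer.from(bytes).toString('base64')
  const dataField = (status, bytes) => ({
    status,
    format: 'audio/L16;rate=16000',
    encoding: 'raw',
    audio: toBase64(bytes),
  })
  const frames = []
  for (let offset = 0; offset < pcmBytes.length; offset += FRAME_BYTES) {
    const chunk = pcmBytes.slice(offset, offset + FRAME_BYTES)
    if (offset === 0) {
      // First frame: connection-level parameters plus the first chunk of audio
      frames.push({
        common: { app_id: appId },
        business: { language, domain: 'iat', accent },
        data: dataField(0, chunk),
      })
    } else {
      // Intermediate frames: audio only
      frames.push({ data: dataField(1, chunk) })
    }
  }
  // Final frame: empty audio tells the service the stream has ended
  frames.push({ data: dataField(2, []) })
  return frames.map(frame => JSON.stringify(frame))
}

// 3000 bytes -> 1280 B (status 0), 1280 B (status 1), 440 B (status 1), empty (status 2)
const frames = buildIatFrames(new Uint8Array(3000), { appId: 'your APPID' })
console.log(frames.map(f => JSON.parse(f).data.status)) // [ 0, 1, 1, 2 ]
```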

Step 2: configure the page that uses it:

```vue
<template>
  <div class="conter">
    <button @click="translationStart">start</button>
    <button @click="translationEnd">stop</button>
  </div>
</template>

<script>
import IatRecorder from '@/assets/js/IatRecorder';

// The constructor takes one options object: language (minor language), accent (Chinese dialect), appId.
// Passing three positional strings would leave all three undefined and fall back to the defaults.
const iatRecorder = new IatRecorder({ language: 'en_us', accent: 'mandarin', appId: '9abbbfb0' });

export default {
  data() {
    return {
      searchData: '',
    };
  },
  methods: {
    translationStart() {
      iatRecorder.start();
    },
    translationEnd() {
      iatRecorder.onTextChange = (text) => {
        // The recognized text ends with one extra character (most likely the punctuation
        // mark the service appends), so drop the last character
        this.searchData = text.substring(0, text.length - 1);
        console.log(this.searchData);
      };
      iatRecorder.stop();
    },
  },
};
</script>
```

Once the page imports IatRecorder.js, the problem shows up in the code that uses transcode.worker.js:

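`const transWorker = new Worker()` reports an error, because a worker cannot be created this way in a Vue project out of the box. Without extra configuration, webpack typically bundles `transcode.worker.js` as an ordinary module, which has no default export, so the imported `Worker` is not a constructor; the native `Worker` constructor, for its part, expects the URL of a separate script file.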

JavaScript runs on a single thread, so complex or time-consuming work blocks page rendering and interaction, makes the page stutter, and hurts the user experience. Web Workers, one of the features introduced with HTML5, address this by running such work on an additional background thread so that the main thread stays responsive.

In a real Vue project, however, this runs into several problems. The page and the worker communicate only through messages (one side sends with `postMessage`, the other receives in `onmessage`), and the worker script has to be loaded as its own file, so it cannot simply be imported like an ordinary module; the build has to be configured for it. The iFLYTEK website gives no Vue example and does not mention this, so I stepped into this pit myself.

Step 3: fix the `new Worker()` error

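First, neither .js file needs to be changed.

Then install worker-loader:

```bash
npm install worker-loader -D
```

Add the following configuration to `vue.config.js`. The `inline` and `name` options are worker-loader 2.x option names; in worker-loader 3.x, `name` was renamed to `filename` and `inline` takes `'fallback'` or `'no-fallback'`, so either install `worker-loader@2` or adapt the options.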

```javascript
configureWebpack: config => {
  config.module.rules.push({
    // Every file ending in .worker.js is bundled as a Web Worker
    test: /\.worker\.js$/,
    use: {
      loader: 'worker-loader',
      options: { inline: true, name: 'workerName.[hash].js' },
    },
  })
},
```

When you run the project, the console may then report "window is undefined". A worker thread has no `window` object, but webpack 4 (used by Vue CLI 3 and 4) refers to the global object as `window` in its output by default, so tell it to use `this` instead. In `vue.config.js`:

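```javascript
chainWebpack: config => {
  config.output.globalObject('this')
}
```

For production builds, also add the following. Vue CLI's `parallel` option runs Babel through thread-loader in production builds, which has been reported to conflict with worker-loader: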

```javascript
parallel: false
```

Put together, `vue.config.js` looks like this:

```javascript
module.exports = {
  configureWebpack: config => {
    config.module.rules.push({
      test: /\.worker\.js$/,
      use: {
        loader: 'worker-loader',
        options: { inline: true, name: 'workerName.[hash].js' },
      },
    })
  },
  parallel: false,
  chainWebpack: config => {
    config.output.globalObject('this')
  },
}
```

After this configuration the error is gone and the project runs normally.