Living alone is very boring. If there is anything refined, just chat with me

"Living alone is very boring. If there is anything refined, just chat with me." Based on this unusual idea, I decided to "refine" something myself and give it the ability to read out specified words.

At present, two products on the market handle voice-related functions well: Baidu speech recognition and iFLYTEK speech recognition. Of the two, I chose iFLYTEK. If your development language is Python, Node.js, C#, C++, or PHP, Baidu speech recognition provides the relevant documentation. If the application you are developing targets HarmonyOS, iFLYTEK can be selected. Both have their own strengths and weaknesses.

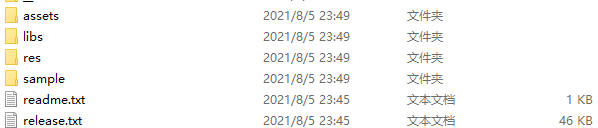

Before starting, download the voice demo from the official website; it contains the resources needed to integrate the voice technology.

After downloading, copy the assets and libs folders into your own project, then statically declare the required permissions in AndroidManifest.xml.

    <!-- Network permission, used for cloud voice capabilities -->
    <uses-permission android:name="android.permission.INTERNET"/>
    <!-- Permission to use the phone's recorder; required for dictation, recognition and semantic understanding -->
    <uses-permission android:name="android.permission.RECORD_AUDIO"/>
    <!-- Read network status information -->
    <uses-permission android:name="android.permission.ACCESS_NETWORK_STATE"/>
    <!-- Get the current Wi-Fi state -->
    <uses-permission android:name="android.permission.ACCESS_WIFI_STATE"/>
    <!-- Allow the program to change the network connection state -->
    <uses-permission android:name="android.permission.CHANGE_NETWORK_STATE"/>
    <!-- Permission to read phone information -->
    <uses-permission android:name="android.permission.READ_PHONE_STATE"/>
    <!-- External storage write permission, required for building grammars -->
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>
    <!-- External storage read permission, required for building grammars -->
    <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE"/>
    <!-- Settings permission, used to record application configuration information -->
    <uses-permission android:name="android.permission.WRITE_SETTINGS"/>
    <!-- Location information, used to provide positioning for semantic and other functions and to provide more accurate services -->
    <!-- Location information is sensitive; location requests can be turned off with Setting.setLocationEnable(false) -->
    <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION"/>

Then request the dangerous permissions at runtime:

    /**
     * On Android 6.0 and above, the permissions to record audio and
     * write external storage must be requested at runtime
     */
    private void initPermission() {
        String[] permissions = {
                Manifest.permission.RECORD_AUDIO,
                Manifest.permission.WRITE_EXTERNAL_STORAGE
        };
        // Collect the permissions that have not been granted yet
        ArrayList<String> toApplyList = new ArrayList<String>();
        for (String perm : permissions) {
            if (PackageManager.PERMISSION_GRANTED != ContextCompat.checkSelfPermission(this, perm)) {
                toApplyList.add(perm);
            }
        }
        String[] tmpList = new String[toApplyList.size()];
        if (!toApplyList.isEmpty()) {
            ActivityCompat.requestPermissions(this, toApplyList.toArray(tmpList), 123);
        }
    }

Using iFLYTEK speech recognition requires initialization, that is, creating a voice configuration object. The MSC services can only be used after initialization. It is recommended to do the initialization at the program entry point (such as the onCreate method of the Application or Activity).

    // Replace the string after "=" with the APPID you applied for. Application address: http://www.xfyun.cn
    // Do not add any spaces or escape characters between "=" and the appid
    SpeechUtility.createUtility(this, "appid=" + getString(R.string.app_id));

Implement the speech recognition listener:

    /**
     * Speech recognition listener
     */
    private RecognizerDialogListener mRecognizerDialogListener = new RecognizerDialogListener() {
        @Override
        public void onResult(RecognizerResult results, boolean isLast) {
            if (!isLast) {
                // Handle the recognition result here
            }
        }

        // Recognition error callback
        @Override
        public void onError(SpeechError error) {
            Toast.makeText(MainActivity.this, error.getPlainDescription(true),
                    Toast.LENGTH_SHORT).show();
        }
    };
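The listener above leaves the result handling as a comment. Since the recognizer is later configured to return "json", one possible way to turn a result into plain text is sketched below. This is only an illustration under assumptions: it assumes each recognized word arrives in a `"w"` field with one candidate per word, and that the raw JSON string is obtained from the result object (for example through `results.getResultString()`, which is assumed here and not shown in this article). `IatResultText` is a hypothetical helper name, not part of the SDK.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper, not part of the iFLYTEK SDK. */
final class IatResultText {
    // Matches every "w":"..." field. Assumption: words contain no escaped quotes.
    private static final Pattern WORD =
            Pattern.compile("\"w\"\\s*:\\s*\"([^\"]*)\"");

    private IatResultText() {
    }

    /** Concatenates all "w" fields of the JSON string in order of appearance. */
    static String extract(String json) {
        StringBuilder text = new StringBuilder();
        Matcher matcher = WORD.matcher(json);
        while (matcher.find()) {
            text.append(matcher.group(1));
        }
        return text.toString();
    }
}
```

On Android, a real JSON parser such as `org.json.JSONObject` would be the more robust choice; the regular expression is used here only to keep the sketch free of dependencies.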

Next, call the grandson:

    public void call() {
        // Use the SpeechRecognizer object; the UI can be customized based on the callback messages
        mIat = SpeechRecognizer.createRecognizer(this, mInitListener);
        if (null == mIat) {
            // Failed to create the singleton, for the same reason as error 21001; see http://bbs.xfyun.cn/forum.php?mod=viewthread&tid=9688
            Toast.makeText(this, "Failed to create object; please confirm that libmsc.so is placed correctly and that createUtility has been called for initialization",
                    Toast.LENGTH_SHORT).show();
            return;
        }
        // Clear previous results
        mIatResults.clear();
        // Set parameters
        // Clear parameters
        mIat.setParameter(SpeechConstant.PARAMS, null);
        // Set the dictation engine type
        mIat.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_CLOUD);
        // Set the data format of the returned results
        mIat.setParameter(SpeechConstant.RESULT_TYPE, "json");
        if (language.equals("zh_cn")) {
            String lag = mSharedPreferences.getString("iat_language_preference",
                    "mandarin");
            // Set the language
            mIat.setParameter(SpeechConstant.LANGUAGE, "zh_cn");
            // Set the language accent
            mIat.setParameter(SpeechConstant.ACCENT, lag);
        } else {
            mIat.setParameter(SpeechConstant.LANGUAGE, language);
        }
        // Do not display error code information in the dialog
        mIat.setParameter("view_tips_plain", "false");
        // Set the front endpoint: the leading-silence timeout, i.e. how long the user may stay silent before it is treated as a timeout
        mIat.setParameter(SpeechConstant.VAD_BOS, mSharedPreferences.getString(
                "iat_vadbos_preference", "4000"));
        // Set the back endpoint: the trailing-silence detection time, i.e. how long after the user stops talking the input is considered finished and recording stops automatically
        mIat.setParameter(SpeechConstant.VAD_EOS, mSharedPreferences.getString(
                "iat_vadeos_preference", "1000"));
        // Set punctuation: "0" returns the result without punctuation, "1" returns the result with punctuation
        mIat.setParameter(SpeechConstant.ASR_PTT, mSharedPreferences.getString(
                "iat_punc_preference", "1"));
        // Set the audio save path. The supported save formats are pcm and wav. When saving to the SD card, the WRITE_EXTERNAL_STORAGE permission is required
        mIat.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");
        mIat.setParameter(SpeechConstant.ASR_AUDIO_PATH,
                Environment.getExternalStorageDirectory() + "/msc/iat.wav");
        mIatDialog.setListener(mRecognizerDialogListener); // Set the listener
        mIatDialog.show(); // Display the dialog box
    }

See the show() method? Once show() is executed, the speech recognition function is complete.

Grandpa can now ask questions, but at this point the grandson cannot reply. Faced with Grandpa's greeting, he can only look on silently, at a loss like a wooden figure.

For the grandson to speak, we need speech synthesis. In contrast to dictation, speech synthesis converts a piece of text into speech. It can synthesize voices with different timbre, speed and intonation as needed, so that the machine can speak like a human. Not only that, the grandchild can also be swapped according to personal preference. High-end grandsons and granddaughters can often handle the national language as well as several foreign languages.

How does the grandson know what to reply?

The replies are preset by us in advance, and the content of the reply depends on what was said. Note that speech recognition and command word recognition are different. Command word recognition recognizes the speech and extracts preset keywords from it as text output. Speech recognition transcribes a stretch of speech into text and outputs it directly.
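To make the difference concrete, the keyword-extraction half of command word recognition can be imitated on already recognized text. The sketch below is only an illustration and does not use the iFLYTEK command word engine; the class name `CommandWords` and the sample words are hypothetical.

```java
import java.util.List;
import java.util.Locale;
import java.util.Optional;

/** Hypothetical helper that looks for preset command words in recognized text. */
final class CommandWords {
    private CommandWords() {
    }

    /**
     * Returns the first entry of commandWords (in list order) that occurs
     * in the recognized text, ignoring case, or empty if none occurs.
     */
    static Optional<String> firstCommand(String recognizedText, List<String> commandWords) {
        String text = recognizedText.toLowerCase(Locale.ROOT);
        for (String word : commandWords) {
            if (text.contains(word.toLowerCase(Locale.ROOT))) {
                return Optional.of(word);
            }
        }
        return Optional.empty();
    }
}
```

With plain speech recognition the whole sentence would be passed on as text; here only the matching preset word survives.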

I insert Grandpa's lines and the grandson's replies into SQLite in advance. When the text recognized from the voice input matches one of Grandpa's lines stored in SQLite, I take the corresponding answer and output it by voice, so the grandson is no longer mute.
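The lookup described above might look roughly like the following. This is a sketch under assumptions: an in-memory map stands in for the SQLite table so that the example runs without Android, and the class `ReplyTable`, its methods, and the normalization step (trimming, lower-casing, and dropping trailing punctuation, since the recognizer is configured to return punctuation) are hypothetical choices, not the article's actual code.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical in-memory stand-in for the SQLite question/answer table. */
final class ReplyTable {
    private final Map<String, String> replies = new HashMap<>();

    /** Trims, lower-cases, and strips trailing punctuation and whitespace. */
    static String normalize(String text) {
        return text.trim()
                .toLowerCase(Locale.ROOT)
                .replaceAll("[\\p{P}\\s]+$", "");
    }

    /** Stores one of Grandpa's lines together with the grandson's reply. */
    void put(String grandpaLine, String grandsonReply) {
        replies.put(normalize(grandpaLine), grandsonReply);
    }

    /** Returns the preset reply for the recognized text, or the fallback. */
    String answerFor(String recognizedText, String fallback) {
        return replies.getOrDefault(normalize(recognizedText), fallback);
    }
}
```

The returned string would then be handed to the speech synthesis method. In the real app the map would be replaced by a query against the SQLite table.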

So, let's implement the grandson's voice reply.

    /**
     * Grandson's reply
     * @param answer the grandson's reply
     */
    private void grandsonAnswer(String answer) {
        // Initialize the list of cloud speaker names
        cloudVoicersEntries = getResources().getStringArray(R.array.voicer_cloud_entries);
        cloudVoicersValue = getResources().getStringArray(R.array.voicer_cloud_values);
        // Initialize the synthesizer object
        mTts = SpeechSynthesizer.createSynthesizer(this, mInitListener);
        // Clear parameters
        mTts.setParameter(SpeechConstant.PARAMS, null);
        // Set synthesis parameters
        // Use the cloud engine
        mTts.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_CLOUD);
        // Set the speaker
        mTts.setParameter(SpeechConstant.VOICE_NAME, voicerCloud);
        //mTts.setParameter(SpeechConstant.TTS_DATA_NOTIFY,"1"); // Real-time audio stream output, only supported with synthesizeToUri
        // Set synthesis speed
        mTts.setParameter(SpeechConstant.SPEED, "50");
        // Set synthesis pitch
        mTts.setParameter(SpeechConstant.PITCH, "50");
        // Set synthesis volume
        mTts.setParameter(SpeechConstant.VOLUME, "50");
        // Set the player's audio stream type
        mTts.setParameter(SpeechConstant.STREAM_TYPE, "3");
        // mTts.setParameter(SpeechConstant.STREAM_TYPE, AudioManager.STREAM_MUSIC+"");
        // Playing synthesized audio interrupts music playback. The default value is true
        mTts.setParameter(SpeechConstant.KEY_REQUEST_FOCUS, "true");
        // Set the audio save path. The supported save formats are pcm and wav. When saving to the SD card, the WRITE_EXTERNAL_STORAGE permission is required
        mTts.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");
        mTts.setParameter(SpeechConstant.TTS_AUDIO_PATH,
                Environment.getExternalStorageDirectory() + "/msc/tts.wav");
        int code = mTts.startSpeaking(answer, mTtsListener);
        if (code != ErrorCode.SUCCESS) {
            Toast.makeText(this, "Speech synthesis failed, error code: " + code
                    + ". See https://www.xfyun.cn/document/error-code for a solution",
                    Toast.LENGTH_SHORT).show();
        }
    }

This is the result of running the code ↓

If you want to hear the grandson call you Grandpa, just pass "grandpa" as the argument when calling the method:

    public void callGrandpa(View view) {
        grandsonAnswer("grandpa~");
    }

If you get tired of your grandson's voice, you can switch to your granddaughter's voice:

    /**
     * Switch grandchildren
     */
    public void update(View view) {
        new AlertDialog.Builder(this).setTitle("Change grandchildren")
                .setSingleChoiceItems(cloudVoicersEntries, // The items in the single-choice list and the name of each item
                        selectedNumCloud, // Default selection
                        new DialogInterface.OnClickListener() { // Handling after an item is clicked
                            public void onClick(DialogInterface dialog,
                                                int which) { // Which item was clicked
                                voicerCloud = cloudVoicersValue[which];
                                selectedNumCloud = which;
                                dialog.dismiss();
                            }
                        }).show();
    }

The grandchildren you can switch between:

    <!-- synthesis -->
    <string-array name="voicer_cloud_entries">
        <item>Xiao Yan</item>
        <item>Xiaoyu</item>
        <item>Catherine</item>
        <item>Henry</item>
        <item>Mary</item>
        <item>Xiaoyan</item>
        <item>Xiao Qi</item>
        <item>Xiaofeng</item>
        <item>Xiaomei</item>
        <item>Xiao Lin</item>
        <item>Xiao Rong</item>
        <item>Xiao Qian</item>
        <item>Xiao Kun</item>
        <item>Xiao Qiang</item>
        <item>Xiaoying</item>
        <item>Xiaoxin</item>
        <item>Nannan</item>
        <item>Lao Sun</item>
    </string-array>
    <string-array name="voicer_cloud_values">
        <item>xiaoyan</item>
        <item>xiaoyu</item>
        <item>catherine</item>
        <item>henry</item>
        <item>vimary</item>
        <item>vixy</item>
        <item>xiaoqi</item>
        <item>vixf</item>
        <item>xiaomei</item>
        <item>xiaolin</item>
        <item>xiaorong</item>
        <item>xiaoqian</item>
        <item>xiaokun</item>
        <item>xiaoqiang</item>
        <item>vixying</item>
        <item>xiaoxin</item>
        <item>nannan</item>
        <item>vils</item>
    </string-array>

At this point, all the functions I could think of are complete. Attached is a GIF of the demo running.

Finally, don't forget to release the corresponding objects when the activity is destroyed:

    @Override
    protected void onDestroy() {
        super.onDestroy();
        if (null != mIat) {
            // Release connection on exit
            mIat.cancel();
            mIat.destroy();
        }
        if (null != mTts) {
            mTts.stopSpeaking();
            // Release connection on exit
            mTts.destroy();
        }
    }

The code for this article has been uploaded to: What is it like to develop a "grandson" who calls you "Grandpa"?
