In the previous article, we used a neural network to solve a nonlinear regression problem. This time, we will use a neural network to solve a multi-class classification problem.

The problem is this: given a person's height and weight, the program judges the person's body type. There are three categories: thin, normal and fat.

The processing flow is as follows:

1. Collect data

2. Build the neural network

3. Train the network

4. Save and consume the model

The detailed steps are as follows:

1. Collect data

In a real application, complex business data would be obtained through data collection. Data collection is not the focus of this article, so we will generate a batch of standard data for learning.

Body shape can be assessed with the BMI formula: BMI = weight / (height * height), with weight in kilograms and height in metres. A BMI below 18 is considered thin, a BMI above 28 is considered fat, and a BMI from 18 to 28 is considered normal.

First, height and weight are generated at random, then the BMI is calculated and each sample is labelled: thin is 0, normal is 1, and fat is 2. Note that the code stores the weight divided by 100 (0.30 to 0.99) and multiplies it back by 100 when computing the BMI. The code is as follows:
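As a quick check of the rule above, here is a minimal sketch in plain C#. The class `BmiLabelSketch` and its method names are hypothetical and are not part of the article's project; the thresholds 18 and 28 are the ones stated above.

```csharp
using System;

public static class BmiLabelSketch
{
    // Hypothetical helper: BMI from weight in kilograms and height in metres.
    public static double Bmi(double weightKg, double heightM)
    {
        return weightKg / (heightM * heightM);
    }

    // Applies the article's thresholds: 0 = thin (< 18), 1 = normal (18 to 28), 2 = fat (> 28).
    public static int Label(double bmi)
    {
        if (bmi < 18.0) return 0;
        if (bmi <= 28.0) return 1;
        return 2;
    }
}
```

For example, a person of 70 kg and 1.75 m has a BMI of 70 / (1.75 * 1.75), roughly 22.9, so `BmiLabelSketch.Label(BmiLabelSketch.Bmi(70, 1.75))` returns 1 (normal).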

```csharp
/// <summary>
/// Load training data
/// </summary>
/// <param name="total_size">Number of samples to generate</param>
private (NDArray, NDArray) PrepareData(int total_size)
{
    float[,] arrx = new float[total_size, num_features];
    int[] arry = new int[total_size];

    for (int i = 0; i < total_size; i++)
    {
        // Weight is stored divided by 100 (0.30 to 0.99); height is in metres (1.40 to 1.89)
        float weight = (float)random.Next(30, 100) / 100;
        float height = (float)random.Next(140, 190) / 100;
        float bmi = (weight * 100) / (height * height);

        arrx[i, 0] = weight;
        arrx[i, 1] = height;

        // Label: 0 = thin, 1 = normal, 2 = fat
        switch (bmi)
        {
            case var x when x < 18.0f:
                arry[i] = 0;
                break;
            case var x when x >= 18.0f && x <= 28.0f:
                arry[i] = 1;
                break;
            case var x when x > 28.0f:
                arry[i] = 2;
                break;
        }
    }

    return (np.array(arrx), np.array(arry));
}
```

2. Build the neural network

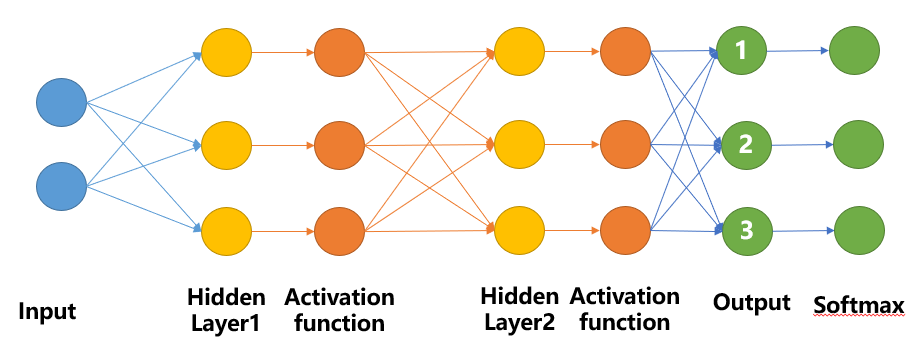

Compared with the simple nonlinear model, the network structure this time will be slightly more complex:

```csharp
// Network parameters
int num_features = 2; // data features
int num_classes = 3;  // total output

/// <summary>
/// Building network model
/// </summary>
private Model BuildModel()
{
    // Network parameters
    int n_hidden_1 = 64; // 1st layer number of neurons.
    int n_hidden_2 = 64; // 2nd layer number of neurons.

    var model = keras.Sequential(new List<ILayer>
    {
        keras.layers.InputLayer(num_features),
        keras.layers.Dense(n_hidden_1, activation: keras.activations.Relu),
        keras.layers.Dense(n_hidden_2, activation: keras.activations.Relu),
        keras.layers.Dense(num_classes, activation: keras.activations.Softmax)
    });

    return model;
}
```

The network has two hidden layers, both using the ReLU activation function, and an output layer that uses the Softmax activation function.

Compared with the network structure in the previous article, it looks much more complex, but in essence it is not very different; there is just an additional Softmax function.

Note that if you look at only one of the three output nodes, it is actually an ordinary nonlinear model.

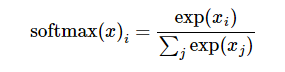

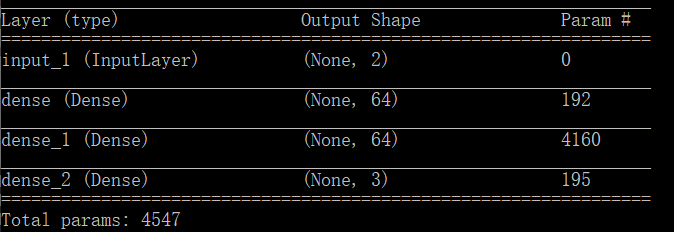

The raw values of output nodes 1, 2 and 3 do not necessarily sum to 1. The purpose of the Softmax function is to rescale the three outputs so that they are positive and sum to 1, so that each value can be read as a probability. The calculation is simple: for raw outputs $z_1, z_2, z_3$,

$$\mathrm{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{3} e^{z_j}}$$
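As a rough numeric illustration of Softmax, the sketch below computes it on an ordinary array in plain C#. The class `SoftmaxSketch` is hypothetical and is not the library's implementation; subtracting the maximum before exponentiating is a common stability trick that leaves the result unchanged.

```csharp
using System;
using System.Linq;

public static class SoftmaxSketch
{
    // Turns arbitrary raw scores into positive values that sum to 1.
    public static double[] Softmax(double[] z)
    {
        // Subtracting the maximum does not change the result but avoids overflow in Exp.
        double max = z.Max();
        double[] exp = z.Select(v => Math.Exp(v - max)).ToArray();
        double sum = exp.Sum();
        return exp.Select(v => v / sum).ToArray();
    }
}
```

With raw outputs of 2.0, 1.0 and 0.1, `SoftmaxSketch.Softmax` gives approximately 0.659, 0.242 and 0.099, which sum to 1.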

Finally, let's take a look at the summary information of this network:

Number of trainable parameters in layer 1: (2 + 1) * 64 = 192

Number of trainable parameters in layer 2: (64 + 1) * 64 = 4160

Number of trainable parameters in the output layer: (64 + 1) * 3 = 195
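The counts above follow one pattern: each neuron in a fully connected layer has one weight per input plus one bias. A small sketch in plain C# makes this explicit; the class `DenseParamSketch` is hypothetical and only reproduces the arithmetic.

```csharp
using System;

public static class DenseParamSketch
{
    // A fully connected layer has one weight per input per neuron, plus one bias per neuron.
    public static int DenseParams(int inputs, int units)
    {
        return (inputs + 1) * units;
    }

    // layerSizes lists the input size followed by the size of every dense layer.
    public static int TotalParams(int[] layerSizes)
    {
        int total = 0;
        for (int i = 1; i < layerSizes.Length; i++)
        {
            total += DenseParams(layerSizes[i - 1], layerSizes[i]);
        }
        return total;
    }
}
```

For this network, `DenseParamSketch.TotalParams(new[] { 2, 64, 64, 3 })` adds 192 + 4160 + 195 and returns 4547.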

3. Train the network

```csharp
(NDArray train_x, NDArray train_y) = PrepareData(1000);

model.compile(optimizer: keras.optimizers.Adam(0.001f),
    loss: keras.losses.SparseCategoricalCrossentropy(),
    metrics: new[] { "accuracy" });

model.fit(train_x, train_y, batch_size: 128, epochs: 300);
```

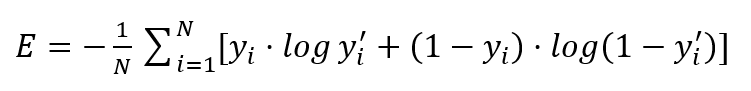

One thing to note here: the loss function is sparse categorical cross-entropy. For classification tasks, cross-entropy is used as the loss function most of the time.

The formula for binary cross-entropy is:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[\, y_i \log x_i + (1 - y_i)\log(1 - x_i) \,\right]$$

where $y_i$ is the label, $x_i$ is the predicted value and $N$ is the number of elements.

We can understand the meaning of cross-entropy without studying the formula: if the predicted value is close to 1 when the label is 1, or close to 0 when the label is 0, the loss is small.

For example, if the label is [1,0,0] and the prediction is [0.99,0.01,0], the loss is small. Conversely, if the prediction is [0.1,0.1,0.8], the loss is large.

The following is one implementation of binary cross-entropy:

```csharp
private Tensor BinaryCrossentropy(Tensor x, Tensor y)
{
    // x: predicted values, y: labels with the same shape as x
    var shape = tf.reduce_prod(tf.shape(x));
    var count = tf.cast(shape, TF_DataType.TF_FLOAT);

    // Clip the predictions so that log(0) never occurs
    x = tf.clip_by_value(x, 1e-6f, 1.0f - 1e-6f);

    var z = y * tf.log(x) + (1 - y) * tf.log(1 - x);
    var result = -1.0f / count * tf.reduce_sum(z);
    return result;
}
```
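To see the numbers behind the two example predictions [0.99,0.01,0] and [0.1,0.1,0.8], the following plain C# sketch mirrors the tensor code above on ordinary arrays. The class `BinaryCrossEntropySketch` is hypothetical; it uses the same clipping bounds as the tensor version.

```csharp
using System;

public static class BinaryCrossEntropySketch
{
    // x: predicted values, y: one-hot labels of the same length.
    public static double Loss(double[] x, double[] y)
    {
        double sum = 0.0;
        for (int i = 0; i < x.Length; i++)
        {
            // Clip to [1e-6, 1 - 1e-6] so that Log never receives 0.
            double p = Math.Min(Math.Max(x[i], 1e-6), 1.0 - 1e-6);
            sum += y[i] * Math.Log(p) + (1.0 - y[i]) * Math.Log(1.0 - p);
        }
        // Average over all elements and flip the sign.
        return -sum / x.Length;
    }
}
```

With the label [1,0,0], the prediction [0.99,0.01,0] gives a loss of about 0.0067, while [0.1,0.1,0.8] gives about 1.34, roughly two hundred times larger.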

The difference between sparse categorical cross-entropy and the binary cross-entropy above lies in the label format: the binary cross-entropy implementation compares labels and predictions element by element, so the labels must be one-hot encoded, whereas sparse categorical cross-entropy takes the integer class index directly.

As mentioned earlier, the classification results are labelled 0 for thin, 1 for normal and 2 for fat. When binary cross-entropy is used, the labels become [1,0,0] for thin, [0,1,0] for normal and [0,0,1] for fat.
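The relationship between the two label formats can be sketched in plain C#. The class `LabelEncodingSketch` and its methods are hypothetical illustrations, not TensorFlow.NET APIs; the categorical form shown here keeps only the true-class term, so it is not identical to the element-wise binary formula.

```csharp
using System;

public static class LabelEncodingSketch
{
    // Converts an integer class index into a one-hot vector, e.g. 2 -> [0, 0, 1].
    public static double[] OneHot(int label, int numClasses)
    {
        var v = new double[numClasses];
        v[label] = 1.0;
        return v;
    }

    // Categorical cross-entropy for one sample with a one-hot label vector.
    public static double CategoricalLoss(double[] oneHot, double[] predicted)
    {
        double sum = 0.0;
        for (int i = 0; i < predicted.Length; i++)
        {
            // The epsilon guards against Log(0); its value is an arbitrary choice for this sketch.
            sum += oneHot[i] * Math.Log(Math.Max(predicted[i], 1e-7));
        }
        return -sum;
    }

    // Sparse variant: the integer label indexes the prediction directly.
    public static double SparseCategoricalLoss(int label, double[] predicted)
    {
        return -Math.Log(Math.Max(predicted[label], 1e-7));
    }
}
```

Both functions return the same value for the same sample, for instance `CategoricalLoss(OneHot(2, 3), pred)` equals `SparseCategoricalLoss(2, pred)`; the sparse form simply skips the encoding step.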

4. Save and consume the model

After training, we check the accuracy of the model by consuming it, that is, by feeding it new samples and inspecting the predictions.

```csharp
/// <summary>
/// Consumption model
/// </summary>
private void test(Model model)
{
    int test_size = 20;
    for (int i = 0; i < test_size; i++)
    {
        float weight = (float)random.Next(40, 90) / 100;
        float height = (float)random.Next(145, 185) / 100;
        float bmi = (weight * 100) / (height * height);

        var test_x = np.array(new float[1, 2] { { weight, height } });
        var pred_y = model.Apply(test_x);

        Console.WriteLine($"{i}:weight={weight} \theight={height} \tBMI={bmi:0.0} \tPred:{pred_y[0].numpy()}");
    }
}
```

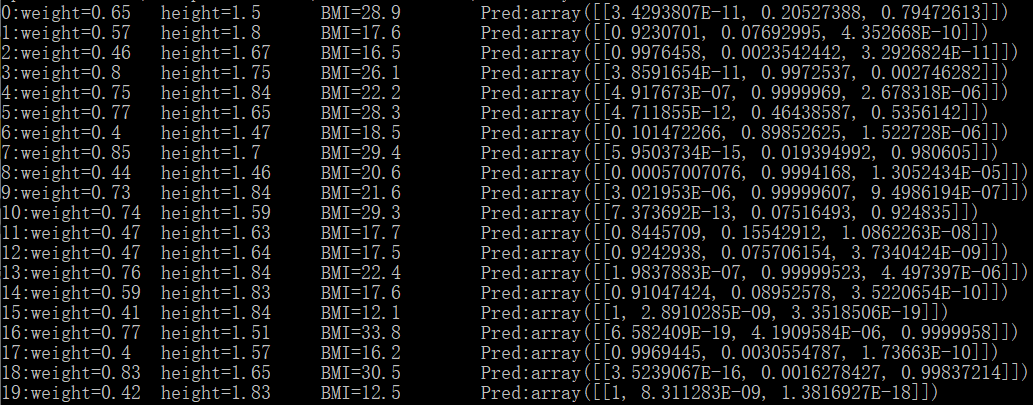

Picking two samples from the test results: when the BMI is 30.5, the prediction is [0,0.0016,0.9983]; when the BMI is 12.5, the prediction is [1,0,0]. In both cases the largest probability falls on the expected class (fat and thin respectively), which shows that the predictions are accurate.
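To turn such a probability vector into a category, one common approach is to pick the index with the largest probability. The sketch below shows this in plain C#; the class `PredictionSketch` and the class-name array are hypothetical and are not part of the article's project.

```csharp
using System;

public static class PredictionSketch
{
    // Class names in label order: 0 = thin, 1 = normal, 2 = fat.
    private static readonly string[] Names = { "thin", "normal", "fat" };

    // Returns the index of the largest probability.
    public static int ArgMax(double[] probabilities)
    {
        int best = 0;
        for (int i = 1; i < probabilities.Length; i++)
        {
            if (probabilities[i] > probabilities[best]) best = i;
        }
        return best;
    }

    public static string ClassName(double[] probabilities)
    {
        return Names[ArgMax(probabilities)];
    }
}
```

For the two samples above, `ClassName(new[] { 0, 0.0016, 0.9983 })` returns "fat" and `ClassName(new[] { 1.0, 0, 0 })` returns "thin".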

The complete code is as follows:

```csharp
// The using directives below are an assumption for a recent TensorFlow.NET release;
// older releases took NDArray and np from NumSharp instead of Tensorflow.NumPy.
using System;
using System.Collections.Generic;
using Tensorflow.Keras;
using Tensorflow.Keras.Engine;
using Tensorflow.NumPy;
using static Tensorflow.KerasApi;

/// <summary>
/// Multiple classification is realized by neural network
/// </summary>
public class NN_MultipleClassification_BMI
{
    private readonly Random random = new Random(1);

    // Network parameters
    int num_features = 2; // data features
    int num_classes = 3;  // total output

    public void Run()
    {
        var model = BuildModel();
        model.summary();

        Console.WriteLine("Press any key to continue...");
        Console.ReadKey();

        (NDArray train_x, NDArray train_y) = PrepareData(1000);

        model.compile(optimizer: keras.optimizers.Adam(0.001f),
            loss: keras.losses.SparseCategoricalCrossentropy(),
            metrics: new[] { "accuracy" });

        model.fit(train_x, train_y, batch_size: 128, epochs: 300);

        test(model);
    }

    /// <summary>
    /// Building network model
    /// </summary>
    private Model BuildModel()
    {
        // Network parameters
        int n_hidden_1 = 64; // 1st layer number of neurons.
        int n_hidden_2 = 64; // 2nd layer number of neurons.

        var model = keras.Sequential(new List<ILayer>
        {
            keras.layers.InputLayer(num_features),
            keras.layers.Dense(n_hidden_1, activation: keras.activations.Relu),
            keras.layers.Dense(n_hidden_2, activation: keras.activations.Relu),
            keras.layers.Dense(num_classes, activation: keras.activations.Softmax)
        });

        return model;
    }

    /// <summary>
    /// Load training data
    /// </summary>
    /// <param name="total_size">Number of samples to generate</param>
    private (NDArray, NDArray) PrepareData(int total_size)
    {
        float[,] arrx = new float[total_size, num_features];
        int[] arry = new int[total_size];

        for (int i = 0; i < total_size; i++)
        {
            float weight = (float)random.Next(30, 100) / 100;
            float height = (float)random.Next(140, 190) / 100;
            float bmi = (weight * 100) / (height * height);

            arrx[i, 0] = weight;
            arrx[i, 1] = height;

            // Label: 0 = thin, 1 = normal, 2 = fat
            switch (bmi)
            {
                case var x when x < 18.0f:
                    arry[i] = 0;
                    break;
                case var x when x >= 18.0f && x <= 28.0f:
                    arry[i] = 1;
                    break;
                case var x when x > 28.0f:
                    arry[i] = 2;
                    break;
            }
        }

        return (np.array(arrx), np.array(arry));
    }

    /// <summary>
    /// Consumption model
    /// </summary>
    private void test(Model model)
    {
        int test_size = 20;
        for (int i = 0; i < test_size; i++)
        {
            float weight = (float)random.Next(40, 90) / 100;
            float height = (float)random.Next(145, 185) / 100;
            float bmi = (weight * 100) / (height * height);

            var test_x = np.array(new float[1, 2] { { weight, height } });
            var pred_y = model.Apply(test_x);

            Console.WriteLine($"{i}:weight={weight} \theight={height} \tBMI={bmi:0.0} \tPred:{pred_y[0].numpy()}");
        }
    }
}
```

[related resources]

Source code: Git: https://gitee.com/seabluescn/tf_not.git

Project Name: NN_MultipleClassification_BMI
