Kubernetes binary deployment

1, Environment preparation

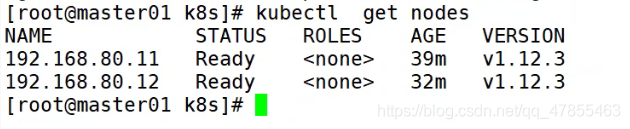

k8s cluster master01: 192.168.80.10 kube-apiserver kube-controller-manager kube-scheduler etcd

k8s cluster master02: 192.168.80.20

k8s cluster node01: 192.168.80.11 kubelet kube-proxy docker flannel

k8s cluster node02: 192.168.80.12

etcd cluster node 1: 192.168.80.10

etcd cluster node 2: 192.168.80.11

etcd cluster node 3: 192.168.80.12

load balancer nginx+keepalived01 (master): 192.168.80.14

load balancer nginx+keepalived02 (backup): 192.168.80.15

//Stop the firewall and put SELinux in permissive mode

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

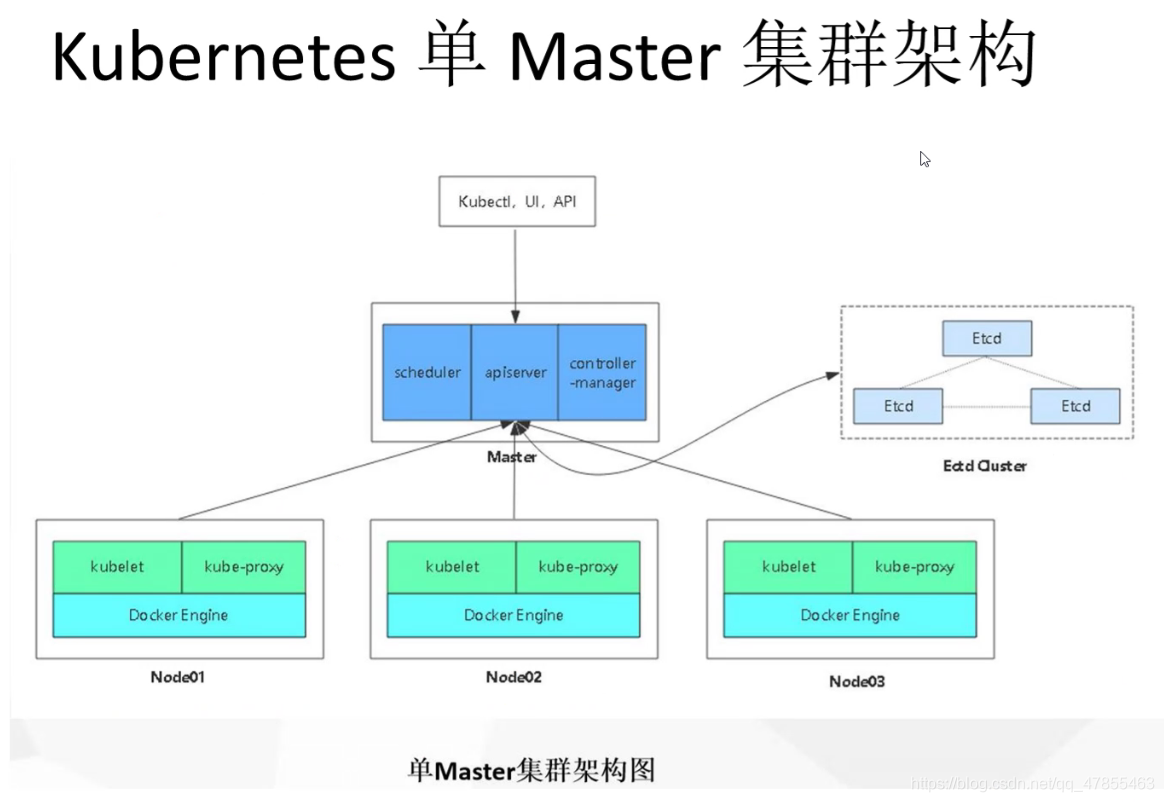

- A single-master k8s cluster needs at least three hosts: one master host and two node hosts. To save servers, the three etcd members are co-located on the master and node hosts.

- A dual-master cluster needs at least six hosts: two master hosts, two node hosts, and two load balancer hosts, with the three etcd members again co-located on the master and node hosts.

- Communication between the API server and the kubectl client, between the API server and etcd, and between etcd members is encrypted, so certificates need to be issued.

2, Deploy etcd cluster

- etcd is an open source project started by the CoreOS team in June 2013. Its goal is to provide a highly available distributed key-value database (a non-relational database). etcd uses the Raft protocol as its consensus algorithm and is written in Go.

- As a service discovery system, etcd has the following characteristics:

| Characteristic | Description |
|---|---|
| Simple | Installation and configuration are simple, and an HTTP API is provided for interaction, which is also easy to use |
| Secure | Supports SSL certificate authentication |
| Fast | A single instance supports 2k+ read operations per second |
| Reliable | Uses the Raft algorithm to provide availability and consistency of distributed system data |

- By default, etcd serves its HTTP API on port 2379 and uses port 2380 for peer communication (both ports are officially reserved for etcd by IANA).

- In other words, port 2379 carries external traffic from clients, and port 2380 carries internal traffic between etcd servers.

- In production, etcd is generally deployed as a cluster. Because of etcd's leader election mechanism, the cluster should have an odd number of members, and at least three.
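The preference for an odd member count follows from Raft's majority rule: a cluster of N members needs floor(N/2)+1 of them to agree. A minimal sketch of that arithmetic is shown below; the function names are hypothetical and only illustrate the calculation.

```shell
# Quorum (majority) size for an etcd cluster of N members.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while the cluster still has a quorum.
fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum_size "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

Three and four members both tolerate a single failure, so the fourth member adds cost without adding resilience.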

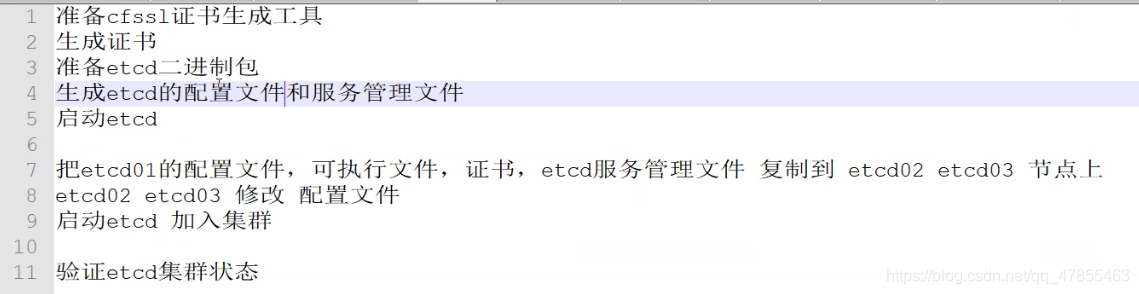

1. Prepare environment for issuing certificates

- CFSSL is Cloudflare's open source PKI/TLS toolkit.

- CFSSL includes a command-line tool and an HTTP API service for signing, validating, and bundling TLS certificates. It is written in Go.

- CFSSL generates certificates from configuration files, so before self-signing you need to produce the JSON configuration files it recognizes. CFSSL provides convenient commands for generating them.

- Here CFSSL is used to provide TLS certificates for etcd. It supports signing three types of certificates:

- 1. Client certificate: the certificate a client presents when connecting to a server, used by the server to verify the client's identity, for example kube-apiserver accessing etcd;

- 2. Server certificate: the certificate a server presents when a client connects, used by the client to verify the server's identity, for example etcd providing services externally;

- 3. Peer certificate: the certificate members use when connecting to each other, for example etcd nodes authenticating and communicating with one another.

- In this deployment, all three roles use the same set of certificates.

2. Steps for issuing certificates

##### Operate on the master01 node #####

//Download the certificate tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/local/bin/cfssl-certinfo

or

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl*

----------------------------------------

cfssl: command-line tool for issuing certificates

cfssljson: converts the certificate generated by cfssl (JSON format) into certificate files

cfssl-certinfo: displays certificate information

cfssl-certinfo -cert <certificate name> #View certificate information

------------------------------------------

------------------------------------------

//Create k8s working directory

mkdir /opt/k8s

cd /opt/k8s/

//Upload etcd-cert.sh and etcd.sh to the /opt/k8s/ directory

chmod +x etcd-cert.sh etcd.sh

//Create a directory for generating CA certificates, etcd server certificates, and private keys

mkdir /opt/k8s/etcd-cert

mv etcd-cert.sh etcd-cert/

cd /opt/k8s/etcd-cert/

./etcd-cert.sh #Generate CA certificate, etcd server certificate and private key

ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem etcd-cert.sh server.csr server-csr.json server-key.pem server.pem
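Before moving on, it can help to confirm that the certificate script produced the files the later steps copy. The sketch below is one possible check, not part of the original scripts; the function name is hypothetical, and the file list is taken from the ls output above.

```shell
# Print every expected certificate file that is missing (or empty) in directory $1.
missing_cert_files() {
    dir=$1
    for f in ca.pem ca-key.pem server.pem server-key.pem; do
        [ -s "$dir/$f" ] || echo "$f"
    done
}

# Example: report what is missing from the etcd-cert directory.
missing=$(missing_cert_files /opt/k8s/etcd-cert)
if [ -n "$missing" ]; then
    echo "missing or empty:" $missing
else
    echo "all certificate files present"
fi
```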

-------------------------Start the etcd service------------------------

//Etcd binary package address: https://github.com/etcd-io/etcd/releases

//Upload etcd-v3.3.10-linux-amd64.tar.gz to the /opt/k8s/ directory and unpack it

cd /opt/k8s/

tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

ls etcd-v3.3.10-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

-----------------------------------------------------------

etcd: the etcd service binary; it accepts various startup parameters

etcdctl: the command-line client for operating the etcd service

----------------------------------------------------------

//Create directories for the etcd configuration files, binaries, and certificates

mkdir -p /opt/etcd/{cfg,bin,ssl}

mv /opt/k8s/etcd-v3.3.10-linux-amd64/etcd /opt/k8s/etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/

cp /opt/k8s/etcd-cert/*.pem /opt/etcd/ssl/

./etcd.sh etcd01 192.168.80.10 etcd02=https://192.168.80.11:2380,etcd03=https://192.168.80.12:2380

//The command blocks while it waits for the other members to join. All three etcd services have to be running for the cluster to form; if only one is started, it waits until the other members are up. This is expected and can be ignored

//Open another terminal window to check that the etcd process is running

ps -ef | grep etcd

//Copy all etcd related certificate files and command files to the other two etcd cluster nodes

scp -r /opt/etcd/ root@192.168.80.11:/opt/

scp -r /opt/etcd/ root@192.168.80.12:/opt/
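Once all three members are running, cluster health can be checked with etcdctl. The sketch below only builds and prints the command so it can be reviewed before it is run. It assumes the certificate paths used above and the v2 etcdctl API, which is the default in etcd v3.3; the helper name etcd_endpoints is hypothetical.

```shell
# Join IP addresses into a comma-separated list of https client endpoints.
etcd_endpoints() {
    out=""
    for ip in "$@"; do
        out="${out:+$out,}https://$ip:2379"
    done
    echo "$out"
}

ENDPOINTS=$(etcd_endpoints 192.168.80.10 192.168.80.11 192.168.80.12)

# Print the health-check command instead of executing it.
# --ca-file, --cert-file and --key-file are the v2 API flag names.
echo /opt/etcd/bin/etcdctl \
    --ca-file=/opt/etcd/ssl/ca.pem \
    --cert-file=/opt/etcd/ssl/server.pem \
    --key-file=/opt/etcd/ssl/server-key.pem \
    --endpoints="$ENDPOINTS" cluster-health
```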

3. etcd-cert.sh and etcd.sh scripts

etcd-cert.sh

#!/bin/bash

#Configure the certificate signing policy, which tells the CA what kinds of certificates it may issue; the root certificate generated below is then used to sign the certificates of the other components

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

#ca-config.json: multiple profiles can be defined, each with its own expiry, usages and other parameters;

#a profile is selected later when signing a certificate. This example has a single profile, www.

#expiry: the validity period of the certificate; 87600h is 10 years. The default is one year, and the cluster stops working as soon as its certificates expire

#signing: indicates that the certificate can be used to sign other certificates; the generated ca.pem has CA=TRUE;

#key encipherment: indicates that the key can be used for encipherment with an asymmetric algorithm such as RSA;

#server auth: indicates that the client can use this CA to verify the certificate provided by the server;

#client auth: indicates that the server can use this CA to verify the certificate provided by the client;

#Watch the punctuation: the last field in a JSON object or array must not be followed by a comma.

#-----------------------

#Generate CA certificate and private key (root certificate and private key)

#Note: cfssl differs from openssl here. With openssl you generate a private key, use it to create a request file, and then produce the signed certificate; cfssl generates the private key, request file and certificate directly from the request JSON.

cat > ca-csr.json <<EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

#CN: Common Name. Browsers use this field to check whether a website is legitimate; it is usually the domain name

#key: the key algorithm and size; rsa with size 2048 is typical

#C: Country

#ST: State or province

#L: Locality, region or city

#O: Organization Name

#OU: Organization Unit Name, company department

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

#Generated files:

#ca-key.pem: root certificate private key

#ca.pem: root certificate

#ca.csr: root certificate signing request file

#cfssl gencert -initca <CSRJSON>: uses the CSRJSON file to generate a new certificate and private key. Without the pipe, all certificate content is printed straight to the screen.

#Note: the CSRJSON file is given as a relative path, so run cfssl in the directory that holds it, or pass an absolute path.

#cfssljson turns the JSON output of cfssl into certificate files; -bare sets the base name of the generated files.

#-----------------------

#Generate etcd server certificate and private key

cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"192.168.80.10",

"192.168.80.11",

"192.168.80.12"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

#hosts: list every etcd cluster node here by IP or host name; a network segment cannot be used. Adding a new etcd server later requires reissuing the certificate.

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

#Generated files:

#server.csr: certificate request file for the server

#server-key.pem: private key of the server

#server.pem: digitally signed certificate of the server

#-config: references the certificate signing policy file ca-config.json

#-profile: selects a profile from the signing policy file, such as www in ca-config.json
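Because the hosts list has to name every etcd member, a quick check before signing can catch a node that was left out. The following is a rough sketch with a hypothetical helper name. It simply looks for each IP as a quoted string in the CSR file, using the etcd node addresses from section 1.

```shell
# Print each IP from the argument list that does not appear as a quoted
# entry in the CSR JSON file given as the first argument.
ips_missing_from_csr() {
    csr=$1
    shift
    for ip in "$@"; do
        grep -qF "\"$ip\"" "$csr" || echo "$ip"
    done
}

# Example: run in the directory that holds server-csr.json.
if [ -f server-csr.json ]; then
    ips_missing_from_csr server-csr.json 192.168.80.10 192.168.80.11 192.168.80.12
fi
```

No output means every listed address is present in the file.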

etcd.sh

#!/bin/bash

# example: ./etcd.sh etcd01 192.168.80.10 etcd02=https://192.168.80.11:2380,etcd03=https://192.168.80.12:2380

#Create the etcd configuration file /opt/etcd/cfg/etcd

ETCD_NAME=$1

ETCD_IP=$2

ETCD_CLUSTER=$3

WORK_DIR=/opt/etcd

cat > $WORK_DIR/cfg/etcd <<EOF

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="${ETCD_NAME}=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

#Member: member configuration

#ETCD_NAME: node name; it must be unique within the cluster, such as etcd01

#ETCD_LISTEN_PEER_URLS: listening address for cluster communication, on which this member receives messages from the other members. An all-zero IP means listening on every interface of the machine

#ETCD_LISTEN_CLIENT_URLS: listening address for client access, on which this member receives requests from etcd clients. An all-zero IP means listening on every interface of the machine

#Clustering: cluster configuration

#ETCD_ADVERTISE_CLIENT_URLS: advertised client address. etcd clients use this address to talk to this member, so make sure it is reachable from the client side

#ETCD_INITIAL_CLUSTER: addresses of the cluster nodes. It describes all nodes in the cluster, and this member contacts the other members based on it

#ETCD_INITIAL_CLUSTER_TOKEN: cluster token, used to tell clusters apart. If several clusters run on the same local network, give each one a different token

#ETCD_INITIAL_CLUSTER_STATE: the state in which this member joins the cluster. new means a new cluster, and existing means joining an existing cluster.

#Create the etcd systemd unit file (the /usr/lib/systemd/system path is an assumption)

cat > /usr/lib/systemd/system/etcd.service <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd

ExecStart=${WORK_DIR}/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \

--key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

#--listen-client-urls: the URLs on which etcd listens for client traffic

#--advertise-client-urls: the client URLs this member advertises to the rest of the cluster. etcd requires that whenever --listen-client-urls is set, --advertise-client-urls is set explicitly as well, even if the value is the same as the default

#Options beginning with --peer specify the TLS certificates used between cluster members (peer certificates)

#The options without --peer specify the TLS certificates of the etcd server (server certificates). In this deployment both use the same set of certificates

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd
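Every member has to be started with the same full member list, so it can be convenient to derive the --initial-cluster value from a single list of name=ip pairs. This is a minimal sketch of that idea. The function name and the MEMBERS variable are hypothetical and are not part of etcd.sh.

```shell
# Build the --initial-cluster value from name=ip pairs.
initial_cluster() {
    out=""
    for pair in "$@"; do
        name=${pair%%=*}
        ip=${pair#*=}
        out="${out:+$out,}$name=https://$ip:2380"
    done
    echo "$out"
}

MEMBERS="etcd01=192.168.80.10 etcd02=192.168.80.11 etcd03=192.168.80.12"
# Word splitting of $MEMBERS is intentional here.
initial_cluster $MEMBERS
```

The printed string has the same shape as the ETCD_INITIAL_CLUSTER value that etcd.sh writes to /opt/etcd/cfg/etcd.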

4. Deployment process

5. Workflow using certificate access:

- (1) The client initiates a request to connect to the process port of the server.

- (2) The server must have a set of digital certificates (certificate contents include public key, certification authority, expiration date, etc.).

- (3) The server sends its own digital certificate to the client (the public key is in the certificate and the private key is held by the server).

- (4) After the client receives the digital certificate, it verifies the certificate's validity. If the certificate passes verification, the client generates a random key and encrypts it with the server's public key.

- (5) The client sends the key, encrypted with the public key, to the server.

- (6) After receiving the encrypted key, the server decrypts it asymmetrically with the private key it holds and obtains the client's key. It then uses that key to encrypt the returned data symmetrically, so the data travels as ciphertext.

- (7) The server returns the encrypted ciphertext data to the client.

- (8) After receiving it, the client symmetrically decrypts it with its own key to obtain the data returned by the server.
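The exchange above can be imitated with the openssl command line. The sketch below is only an illustration of the simplified flow described here, not of how a real TLS handshake negotiates keys. It assumes OpenSSL 1.1.1 or newer (for the -pbkdf2 option), and the function name is hypothetical.

```shell
# Walk through the simplified flow: the server key pair, the client's random
# session key, and a symmetrically encrypted reply.
tls_flow_demo() {
    work=$(mktemp -d) || return 1

    # Steps 2-3: the server owns a key pair and hands out the public key.
    openssl genrsa -out "$work/server-key.pem" 2048 2>/dev/null
    openssl rsa -in "$work/server-key.pem" -pubout -out "$work/server-pub.pem" 2>/dev/null

    # Steps 4-5: the client creates a random session key and encrypts it
    # with the server's public key.
    openssl rand -hex 32 > "$work/session.key"
    openssl pkeyutl -encrypt -pubin -inkey "$work/server-pub.pem" \
        -in "$work/session.key" -out "$work/session.enc"

    # Steps 6-7: the server recovers the session key with its private key
    # and encrypts the reply symmetrically.
    openssl pkeyutl -decrypt -inkey "$work/server-key.pem" \
        -in "$work/session.enc" -out "$work/session.dec"
    printf '%s' "$1" | openssl enc -aes-256-cbc -pbkdf2 \
        -pass "file:$work/session.dec" -out "$work/reply.enc"

    # Step 8: the client decrypts the reply with its own copy of the key.
    openssl enc -d -aes-256-cbc -pbkdf2 \
        -pass "file:$work/session.key" -in "$work/reply.enc"

    rm -rf "$work"
}

tls_flow_demo "hello from the server"
```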

4, Deploy docker engine

//Deploy docker engine on all node hosts

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

systemctl start docker.service

systemctl enable docker.service

5, flannel network configuration

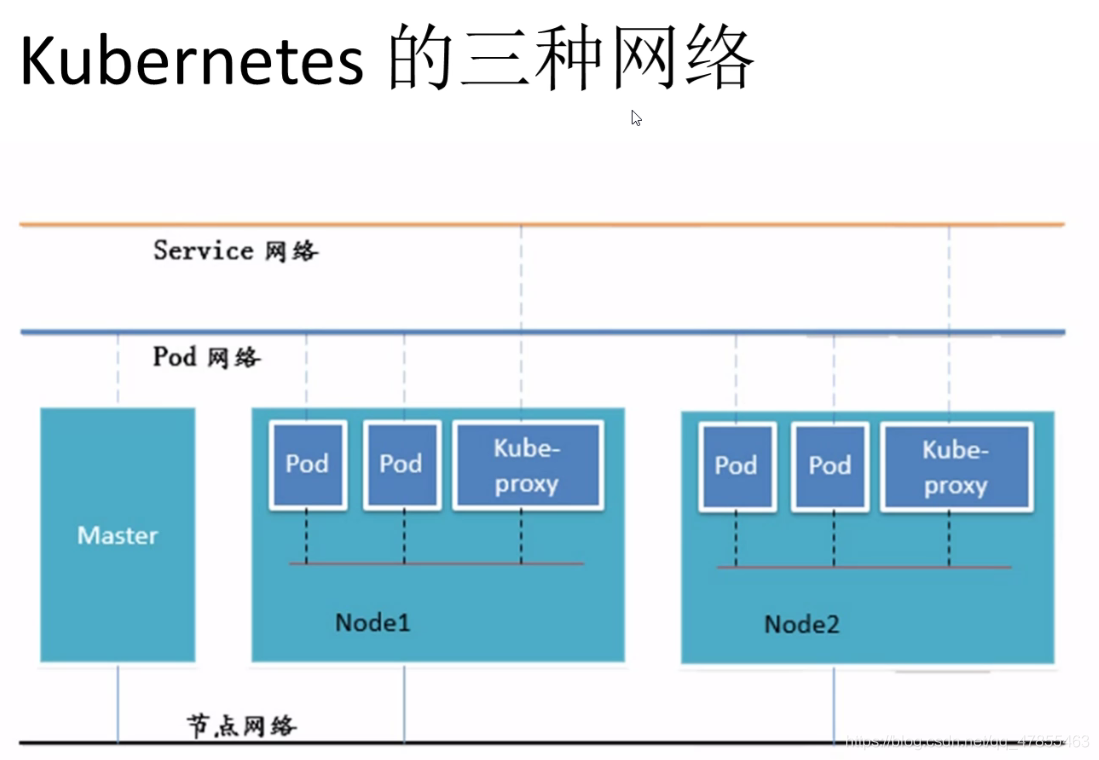

1. Pod network communication in K8S:

Communication between containers in the same Pod

- Containers in the same Pod (a Pod's containers never span hosts) share one network namespace, as if they were on the same machine, and can reach each other's ports through localhost.

Communication between Pods on the same Node

- Each Pod has a real, cluster-wide IP address. Pods on the same Node can communicate directly using each other's IP address. Pod1 and Pod2 are connected to the same docker0 bridge (172.1.0.0) through veth and sit in the same network segment, so they can talk to each other directly.

Communication between Pods on different Nodes

- Pod addresses are in the same network segment as docker0, while docker0 and the host network card are in different segments, so traffic between Nodes can only travel through the hosts' physical network cards.

- For Pods on different Nodes to communicate, packets must be addressed and delivered through the hosts' physical IP addresses. Two conditions must therefore hold: Pod IPs must not conflict, and each Pod IP must be associated with the IP of the Node it runs on. With that association in place, Pods on different Nodes can communicate directly over the intranet.
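The association between Pod IPs and Node IPs can be pictured as a small lookup table. The sketch below assumes, purely for illustration, that each node owns one /24 Pod subnet; the pairing of subnets with node addresses is made up, and the function name is hypothetical.

```shell
# Hypothetical mapping of per-node Pod subnets to node IPs.
# Each line: <Pod subnet prefix> <node IP>
ROUTES="10.1.15. 192.168.80.11
10.1.20. 192.168.80.12"

# Print the node IP that hosts the given Pod IP, or nothing if unknown.
node_for_pod() {
    pod_ip=$1
    echo "$ROUTES" | while read -r prefix node; do
        case $pod_ip in
            "$prefix"*) echo "$node" ;;
        esac
    done
}

node_for_pod 10.1.15.3   # prints 192.168.80.11
node_for_pod 10.1.20.3   # prints 192.168.80.12
```

Because no two nodes share a subnet, Pod IPs cannot collide, and the node that must receive a packet follows directly from the destination Pod IP.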

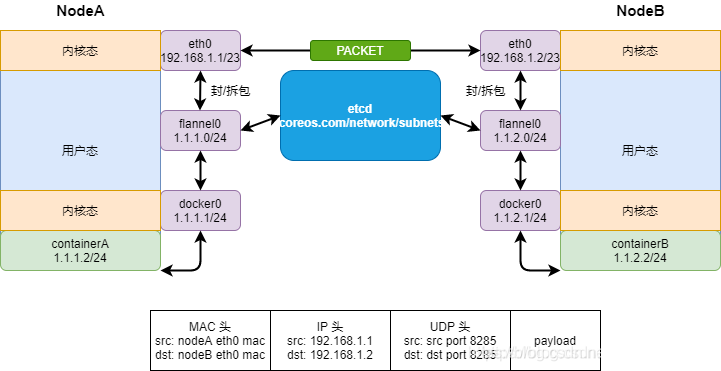

2. Flannel

Overlay Network:

- An overlay network is a virtual network layered on top of a Layer 2 or Layer 3 underlay network. Hosts in it are connected through virtual link tunnels (similar to a VPN).

VXLAN:

- The original packet is encapsulated in UDP, with the IP/MAC addresses of the underlay network as the outer header, and then sent over Ethernet. At the destination, the tunnel endpoint decapsulates it and delivers the data to the target address.

Flannel:

- Flannel ensures that Docker containers created on different node hosts have virtual IP addresses that are unique across the whole cluster.

- Flannel is a kind of overlay network: it wraps the original TCP packets inside another network packet for routing, forwarding and communication. It currently supports forwarding backends such as UDP, VXLAN and AWS VPC.

Function: Flannel is a network plug-in that lets Pods on different nodes communicate with each other

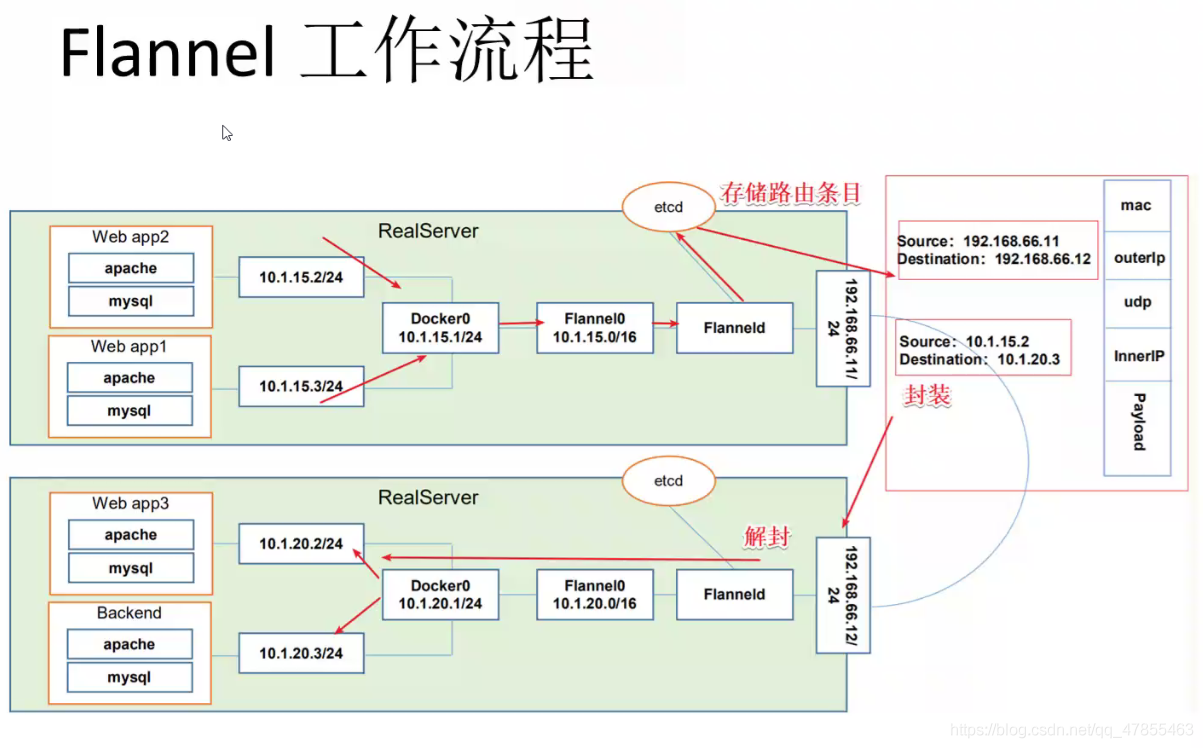

3. How Flannel works:

- After data leaves the source container of the Pod on node01, the host's docker0 virtual network card forwards it to the flannel0 virtual network card. The flanneld service listens on the other end of the flannel0 virtual network card.

- Flannel maintains a routing table between nodes through the etcd service. The flanneld service on the source host node01 encapsulates the original data in UDP and, following its routing table, delivers it through the physical network card to the flanneld service on the destination node node02. There the data is decapsulated, enters the flannel0 virtual network card of the destination node, is forwarded to the docker0 virtual network card of the destination host, and is finally forwarded by docker0 to the target container, just like local container communication.

Source IP 10.1.15.3 ---> 66.11 (Flannel encapsulation): [IP header | MAC header | payload] is wrapped in UDP; the inner IP packet carried inside the UDP payload contains the source and destination IPs ---> 66.12 (Flannel decapsulation): [MAC header | IP header | UDP] ---> destination IP 10.1.20.3

- The purpose of flannel is to let Pods on different nodes communicate

- flannel encapsulates the inner Pod IP packet in UDP, looks up etcd through the API server, and then sends the packet to the destination node through the physical network card according to the routing table stored in etcd

- After the destination node receives the forwarded data, flannel decapsulates it to expose the inner Pod IP inside the UDP packet, and the data is then forwarded to the destination Pod via flannel0 -> docker0 according to the destination IP.

What etcd provides for Flannel:

- Stores and manages the IP address ranges that Flannel can allocate

- Flannel watches the actual address of each Pod in etcd and builds and maintains the Pod-to-node routing table in memory

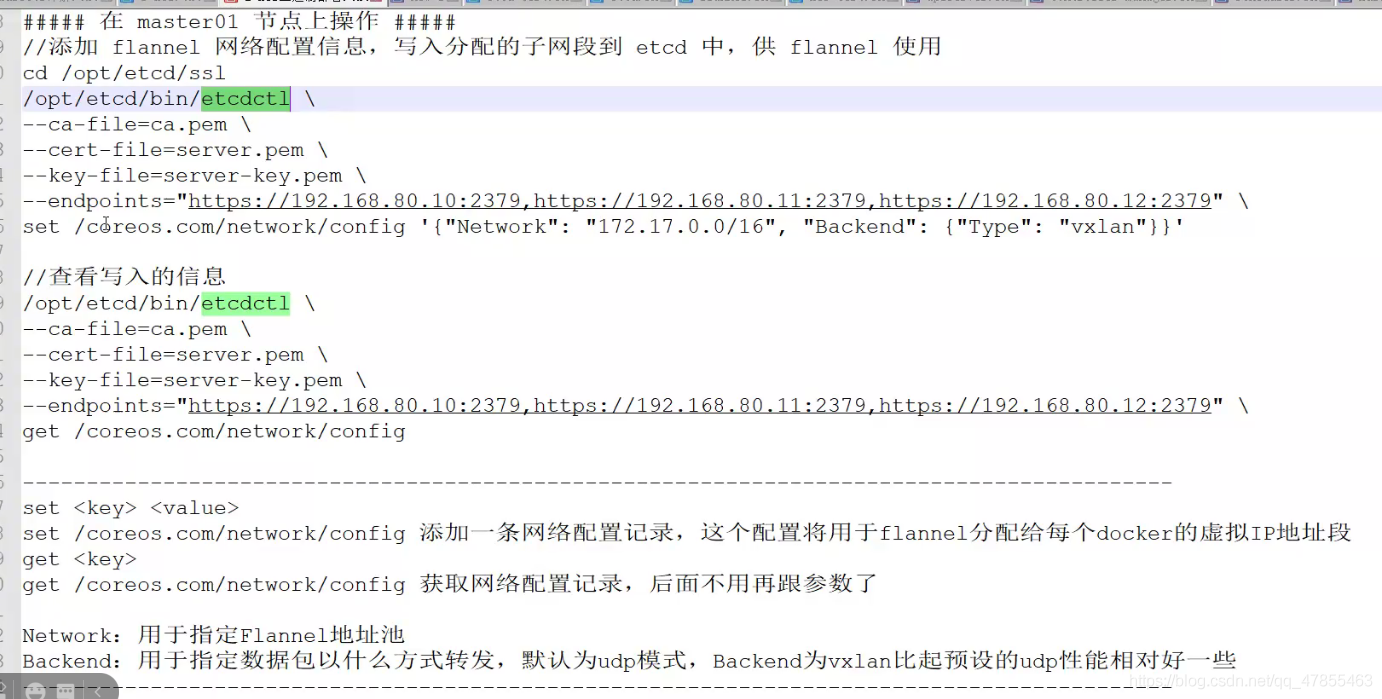

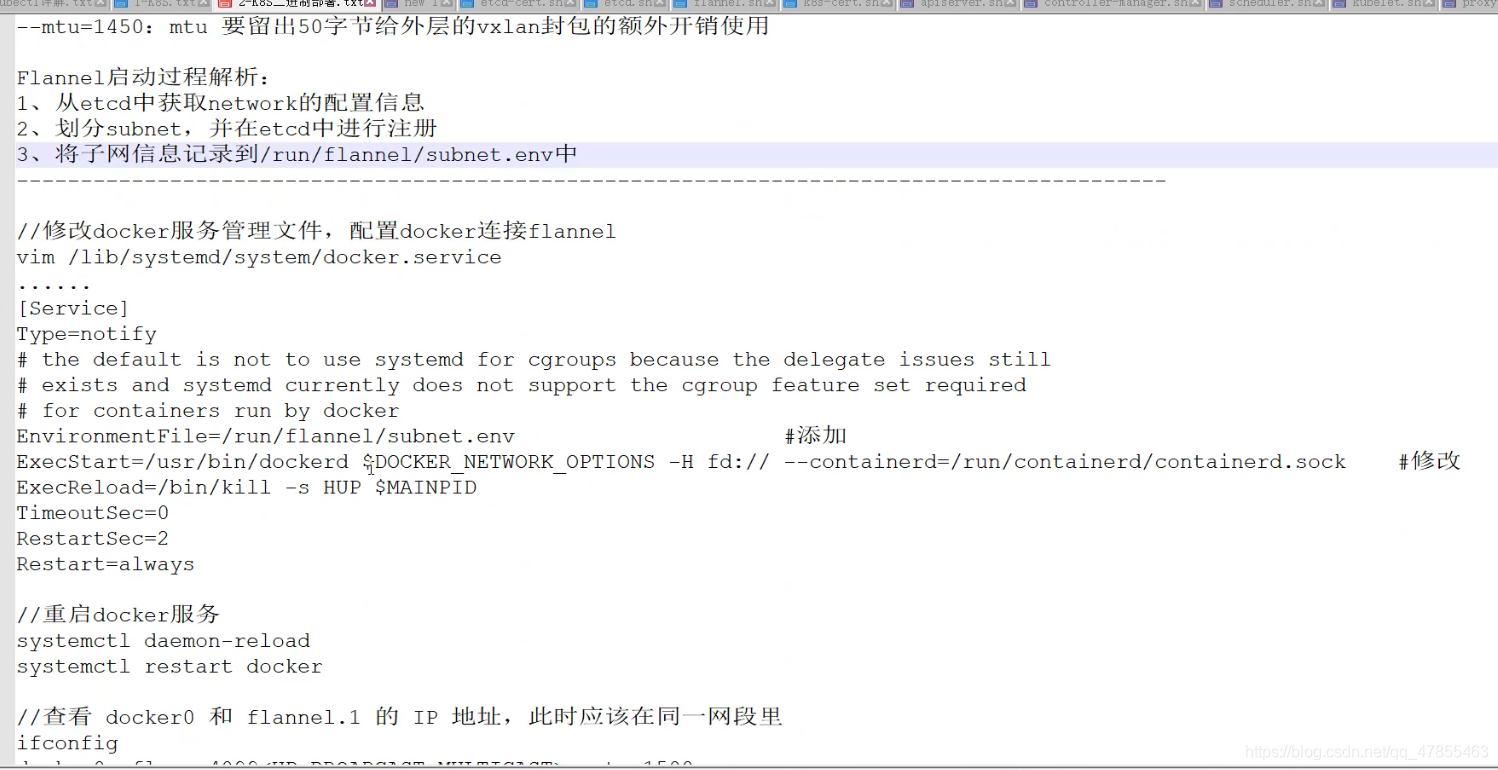

4. Set up flannel (configured on the node hosts)

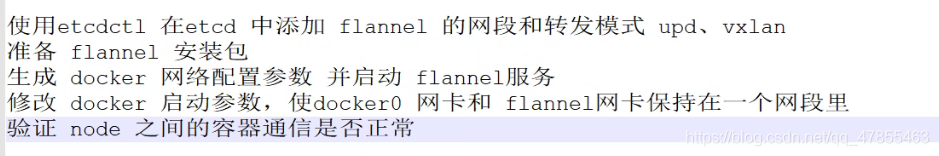
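Flannel reads its network definition from etcd as a small JSON document, by default under the key /coreos.com/network/config. The sketch below builds such a document and prints, without running it, an etcdctl command of the shape that could store it. The 10.1.0.0/16 range is an assumption chosen to match the Pod addresses used in this section, and the helper name is hypothetical.

```shell
# Emit the JSON document flannel reads from its etcd configuration key.
flannel_config() {
    network=$1
    backend=$2
    printf '{"Network": "%s", "Backend": {"Type": "%s"}}\n' "$network" "$backend"
}

CONFIG=$(flannel_config 10.1.0.0/16 vxlan)
echo "$CONFIG"

# With etcd v3.3 and the v2 etcdctl API, the value could be stored with a
# command of this shape (printed here rather than executed):
echo "/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints=https://192.168.80.10:2379 set /coreos.com/network/config '$CONFIG'"
```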

5. Operation flow of flannel

Summary

flannel workflow

Flannel's job is to let Pods on different nodes communicate with each other. Take a packet travelling from node1 to node2, carrying the source and destination Pod IPs. On node1 it is forwarded from the host's docker0 virtual network card to the flannel0 virtual network card. The flanneld service listens on flannel0 and encapsulates the original packet in UDP, with node1's IP as the outer source IP and node2's IP as the outer destination IP. Using the inter-node routing table stored in etcd, flanneld sends the packet through the physical network card to the flanneld service on the destination node node2. There the packet is decapsulated to expose the inner Pod IP, passed from flannel0 to docker0 according to the destination IP, and finally forwarded by docker0 to the destination Pod, just like local container communication.

1, Deploy master components

##### Operate on the master01 node #####

//Upload master.zip and k8s-cert.sh to the /opt/k8s directory, and unzip master.zip

cd /opt/k8s/

unzip master.zip

apiserver.sh

scheduler.sh

controller-manager.sh

chmod +x *.sh

//Create kubernetes working directory

mkdir -p /opt/kubernetes/{cfg,bin,ssl}

//Create a directory for generating CA certificates, certificates for related components, and private keys

mkdir /opt/k8s/k8s-cert

mv /opt/k8s/k8s-cert.sh /opt/k8s/k8s-cert

cd /opt/k8s/k8s-cert/

./k8s-cert.sh

#Generate CA certificate, certificate and private key of related components

ls *pem

admin-key.pem apiserver-key.pem ca-key.pem kube-proxy-key.pem

admin.pem apiserver.pem ca.pem kube-proxy.pem

//kube-controller-manager and kube-scheduler are set to call only the apiserver on the local machine, over 127.0.0.1:8080, so they need no certificates

//Copy the CA certificate and the apiserver certificate and private key to the ssl subdirectory of the kubernetes working directory

cp ca*pem apiserver*pem /opt/kubernetes/ssl/

//Upload kubernetes-server-linux-amd64.tar.gz to the /opt/k8s/ directory and unpack it

cd /opt/k8s/

tar zxvf kubernetes-server-linux-amd64.tar.gz

//Copy the key command files of the master component to the bin subdirectory of the kubernetes working directory

cd /opt/k8s/kubernetes/server/bin

cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

ln -s /opt/kubernetes/bin/* /usr/local/bin/

//Create the bootstrap token authentication file, which apiserver reads at startup. This effectively creates a user in the cluster, who can then be granted permissions through RBAC

cd /opt/k8s/

vim token.sh

#!/bin/bash

#Take 16 random bytes, print them in hexadecimal, and delete the spaces

BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

#Generate the token.csv file in the format: token, user name, UID, user group

cat > /opt/kubernetes/cfg/token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

chmod +x token.sh

./token.sh

cat /opt/kubernetes/cfg/token.csv
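A malformed token line is easy to overlook when reading the file by eye, so a format check can be useful at this point. The sketch below is one way to do it. It assumes the four-field layout written by token.sh, and the function name is hypothetical.

```shell
# Succeed if the line looks like: <32 hex chars>,<user>,<uid>,"<group>"
valid_token_line() {
    echo "$1" | grep -Eq '^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'
}

# Generate a sample line the same way token.sh does and validate it.
TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
LINE="$TOKEN,kubelet-bootstrap,10001,\"system:kubelet-bootstrap\""
if valid_token_line "$LINE"; then
    echo "token line is well formed"
else
    echo "token line is malformed"
fi
```

To check the real file, the same function could be applied to the output of head -n 1 /opt/kubernetes/cfg/token.csv.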

//When the binary file, token and certificate are ready, start the apiserver service

cd /opt/k8s/

./apiserver.sh 192.168.80.10 https://192.168.80.10:2379,https://192.168.80.11:2379,https://192.168.80.12:2379

//Check whether the process started successfully

ps aux | grep kube-apiserver

//k8s serves requests through the kube-apiserver process, which runs on the master node. By default it listens on two ports, 6443 and 8080

//The secure port 6443 receives HTTPS requests and authenticates them using token files or client certificates

netstat -natp | grep 6443

There are two ways for k8s cluster components to communicate (etcd itself uses port 2380 for internal communication and port 2379 for external communication):

master: apiserver <---> controller-manager, scheduler on the same node, 127.0.0.1:8080, no certificate required

node: kubelet, kube-proxy <---> apiserver, https 6443

2, Deploy node components

#Upload the node compressed package and unzip it

unzip node.zip

#Execute the kubelet script to request connection to the master host

bash kubelet.sh 192.168.80.71

#View the kubelet process

ps aux | grep kubelet

#View service status

systemctl status kubelet.service

#Check the request from node1 (operation on the master)

kubectl get csr

#Issue the certificate (operation on the master)

kubectl certificate approve <CSR name of node 1>

#View csr again (operation on the master)

kubectl get csr

#View cluster nodes (operation on the master)

kubectl get node

#Start the proxy service on node1

bash proxy.sh 192.168.80.71

systemctl status kube-proxy.service
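When several nodes join at once, picking the pending request names out of the kubectl get csr listing by hand becomes tedious. The sketch below filters them from the command's text output. It assumes the condition is the last column of each row, and the CSR names in the sample are made up.

```shell
# Read `kubectl get csr` output on stdin and print the names of the
# requests whose last column is Pending.
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Typical use on the master (left commented out because it changes cluster state):
# kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve

# Demonstration with sample text standing in for real output:
pending_csr_names <<'EOF'
NAME             AGE   REQUESTOR           CONDITION
node-csr-aaaa    2m    kubelet-bootstrap   Pending
node-csr-bbbb    9m    kubelet-bootstrap   Approved,Issued
EOF
```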

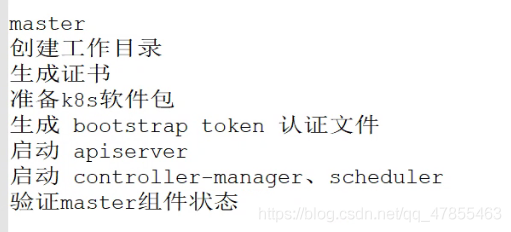

3, Build process

master setup process

Create working directory

Generate certificate

Prepare k8s package

Generate bootstrap token authentication file

Start apiserver

Start controller manager and scheduler

Verify master component status

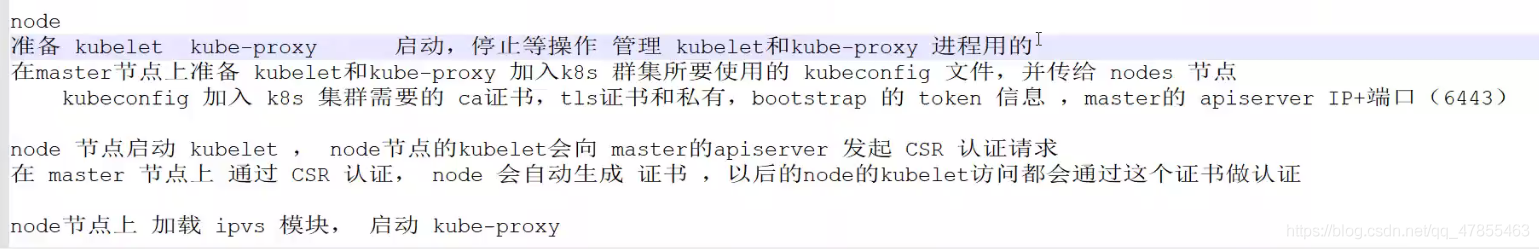

node construction process

- Prepare the kubelet and kube-proxy service scripts used to start, stop and otherwise manage the kubelet and kube-proxy processes

- On the master node, prepare the kubeconfig files that kubelet and kube-proxy need to join the k8s cluster, and pass them to the node hosts

- The kubeconfig file contains the CA certificate, the TLS certificate and private key required to join the k8s cluster, the bootstrap token information, and the apiserver IP and port of the master (6443)

- The node starts kubelet, and the kubelet on the node sends a CSR authentication request to the apiserver on the master

- Once the CSR is approved on the master node, the node automatically generates a certificate, and later kubelet access is authenticated with this certificate

- Load the ipvs module on the node, and then start kube-proxy

k8s cluster construction:

etcd cluster

flannel network plug-in

Build master components

Build node components

success