Rimeng Society


AI: Keras, PyTorch, MXNet, TensorFlow, PaddlePaddle deep learning in practice (updated irregularly)

4.5 Keras pipeline and custom models

So far, models have been built with the Keras Subclassing API: we extend the `tf.keras.Model` class to define our own model and write the training and evaluation process by hand. This approach is highly flexible and works the same way as other popular deep learning frameworks (such as PyTorch and Chainer), which is why this manual recommends it. In many cases, however, we only need a relatively simple, typical neural network (such as the MLP and CNN above) trained by conventional means. For these cases Keras also provides a simpler, more efficient set of built-in methods to build, train and evaluate models.

4.5.1 Building models with the Keras Sequential/Functional API

The most typical and common neural network structure is a stack of layers applied in a specific order. Could we then simply provide a list of layers and have Keras connect them end to end to form a model? That is exactly what Keras's Sequential API does.

1. Pass a list of layers to `tf.keras.models.Sequential()` to quickly create and return a `tf.keras.Model`:

```python
import tensorflow as tf

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(100, activation=tf.nn.relu),
    tf.keras.layers.Dense(10),
    tf.keras.layers.Softmax()
])
```

However, a plain stack of layers cannot represent arbitrary neural network architectures.

2. For this purpose, Keras provides the Functional API, which helps us build more complex models, such as models with multiple inputs/outputs or with shared parameters. It treats each layer as a callable object that takes a tensor and returns a tensor; the input and output tensors are then passed to the `inputs` and `outputs` parameters of `tf.keras.Model`, for example:

```python
import tensorflow as tf

inputs = tf.keras.Input(shape=(28, 28, 1))
x = tf.keras.layers.Flatten()(inputs)
x = tf.keras.layers.Dense(units=100, activation=tf.nn.relu)(x)
x = tf.keras.layers.Dense(units=10)(x)
outputs = tf.keras.layers.Softmax()(x)
model = tf.keras.Model(inputs=inputs, outputs=outputs)
```
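The example above has a single input and output, so it does not show the multi-input/multi-output and parameter-sharing cases mentioned earlier. The following is a minimal sketch of both, assuming two hypothetical 8-feature inputs named `left` and `right` and two made-up output heads named `label` and `score`. One `Dense` layer object is called on both inputs, so both branches use the same weights.

```python
import numpy as np
import tensorflow as tf

# Two hypothetical inputs, each an 8-dimensional feature vector
left = tf.keras.Input(shape=(8,), name="left")
right = tf.keras.Input(shape=(8,), name="right")

# One Dense layer object called on both inputs: both branches use the same weights
shared = tf.keras.layers.Dense(4, activation="relu")
merged = tf.keras.layers.Concatenate()([shared(left), shared(right)])

# Two outputs: 3-class probabilities and a single regression value
class_out = tf.keras.layers.Dense(3, activation="softmax", name="label")(merged)
score_out = tf.keras.layers.Dense(1, name="score")(merged)

model = tf.keras.Model(inputs=[left, right], outputs=[class_out, score_out])

# predict() takes one array per input and returns one array per output
probs, score = model.predict([np.ones((2, 8)), np.zeros((2, 8))], verbose=0)
print(probs.shape, score.shape, model.count_params())
```

Because `shared` is a single layer object used twice, its 8*4 + 4 = 36 parameters are counted once. The model therefore has 36 + 27 + 9 = 72 parameters, not the 108 it would have with two separate `Dense(4)` layers.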

4.5.2 Training and evaluating models with the compile, fit and evaluate methods of Keras Model

1. Configure the training parameters and evaluation methods the model needs by calling the model's `compile` method.

- `model.compile(optimizer, loss=None, metrics=None, ...)`: configures the training-related parameters
  - `optimizer`: the gradient-descent optimizer. `tf.keras.optimizers` provides `Adadelta`, `Adagrad`, `Adam`, `Adamax`, `Nadam`, `RMSprop` and `SGD` (all subclasses of the base class `Optimizer`), plus the helper functions `serialize`, `deserialize` and `get`.
  - `loss=None`: the loss, given either as a string or as a reference to a loss function in `tf.keras.losses`, for example ... `mae` (alias `mean_absolute_error`), `MAPE`, `binary_crossentropy`, `categorical_crossentropy` ...
  - `metrics=None`: a list of metrics evaluated during training and testing, e.g. `['accuracy']`

For example:

```python
model.compile(optimizer=tf.keras.optimizers.Adam(),
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

The difference between two similar losses:

1. `sparse_categorical_crossentropy`: computes the cross-entropy loss when the targets are integer class indices.

2. `categorical_crossentropy`: computes the cross-entropy loss between the output tensor and a one-hot encoded target tensor.
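To see how the two losses relate, here is a small check with made-up probabilities: the same labels, given as integers to `sparse_categorical_crossentropy` and one-hot encoded (with `tf.keras.utils.to_categorical`) to `categorical_crossentropy`, produce the same per-sample losses, namely -log of the probability assigned to the true class.

```python
import numpy as np
import tensorflow as tf

# Made-up predicted probabilities: 2 samples, 3 classes (each row sums to 1)
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.3, 0.6]], dtype=np.float32)

# Integer class indices, as expected by sparse_categorical_crossentropy
y_int = np.array([0, 2])
# The same labels one-hot encoded, as expected by categorical_crossentropy
y_onehot = tf.keras.utils.to_categorical(y_int, num_classes=3)

sparse = tf.keras.losses.sparse_categorical_crossentropy(y_int, y_pred)
onehot_loss = tf.keras.losses.categorical_crossentropy(y_onehot, y_pred)

# Both give one loss per sample: -log(0.7) and -log(0.6)
print(np.asarray(sparse), np.asarray(onehot_loss))
```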

The loss and metric can also be passed as function references:

```python
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
    loss=tf.keras.losses.sparse_categorical_crossentropy,
    metrics=[tf.keras.metrics.sparse_categorical_accuracy]
)
```

2. `model.fit()`: training

```python
model.fit(data_loader.train_data, data_loader.train_label, epochs=num_epochs, batch_size=batch_size)
```

- `x`: the input features, which can be:
  1. a NumPy array (or array-like), or a list of arrays;
  2. a TensorFlow tensor, or a list of tensors;
  3. a `tf.data` dataset or a dataset iterator, which should return a tuple of either `(inputs, targets)` or `(inputs, targets, sample_weights)`;
  4. a generator or `keras.utils.Sequence` returning `(inputs, targets)` or `(inputs, targets, sample_weights)`.
- `y`: the target values (labels); leave it unset when `x` is a dataset, generator or `Sequence`, since those already yield the targets
- `batch_size=None`: the number of samples per gradient update (32 if unspecified; leave it unset when `x` already yields batches)
- `epochs=1`: the number of epochs, i.e. complete passes over the training data
- `validation_data`: validation data used to monitor the model's performance during training
- `callbacks=None`: a list of callbacks applied during training (for example, `TensorBoard` for visualization)

For example:

```python
model.fit(train_images, train_labels, epochs=5, batch_size=32)
```
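As a sketch of the third input type for `x`, the following trains on a `tf.data.Dataset` and passes a second dataset as `validation_data`. The random data, layer sizes and the 200/56 train/validation split are assumptions chosen only for illustration; because the datasets are already batched, `y` and `batch_size` are left out.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 256 samples with 20 features and 3 classes
rng = np.random.default_rng(0)
features = rng.normal(size=(256, 20)).astype("float32")
labels = rng.integers(0, 3, size=(256,))

# The datasets yield (inputs, targets) batches, so fit() needs no y or batch_size
train_ds = (tf.data.Dataset.from_tensor_slices((features[:200], labels[:200]))
            .shuffle(200, seed=0)
            .batch(32))
val_ds = tf.data.Dataset.from_tensor_slices((features[200:], labels[200:])).batch(32)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(20,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

history = model.fit(train_ds, epochs=2, validation_data=val_ds, verbose=0)
# One entry per epoch for the training and validation loss/accuracy
print(sorted(history.history))
```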

3. `model.evaluate(x, y)`: evaluation, which returns the loss and metric values on the given data

```python
model.evaluate(test, test_label)
```

4. `model.predict(x)`: prediction, which returns the model outputs for the inputs `x`

Other methods:

- `model.save_weights(filepath)`: saves the model weights as an HDF5 file or a TensorFlow checkpoint (ckpt) file
- `model.load_weights(filepath, by_name=False)`: loads weights previously written by `save_weights`. By default, the architecture is expected to be unchanged. To load weights into a different architecture that shares some layers, use `by_name=True` to load only the layers with the same name (`by_name` is only supported for HDF5 files).
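A minimal save/load round trip, assuming a hypothetical `build_model()` helper and random inputs: weights saved from one model and loaded into a freshly built model with the same architecture reproduce its predictions, and `np.argmax` turns the `predict` output into class indices. The `.weights.h5` file name is an assumption: a path ending in `.h5` selects the HDF5 format, and newer Keras releases (Keras 3) require this exact suffix for `save_weights`.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def build_model():
    # Hypothetical helper: rebuilds the same small architecture each time it is called
    return tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(8, activation="relu"),
        tf.keras.layers.Dense(3, activation="softmax"),
    ])


x = np.random.default_rng(0).normal(size=(5, 4)).astype("float32")

original = build_model()
path = os.path.join(tempfile.mkdtemp(), "demo.weights.h5")
original.save_weights(path)

# The new instance starts with different random weights until load_weights runs
restored = build_model()
restored.load_weights(path)

same = np.allclose(original.predict(x, verbose=0), restored.predict(x, verbose=0))
classes = np.argmax(restored.predict(x, verbose=0), axis=1)  # predicted class per sample
print(same, classes)
```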

4.5.3 Case study: the CIFAR-100 dataset

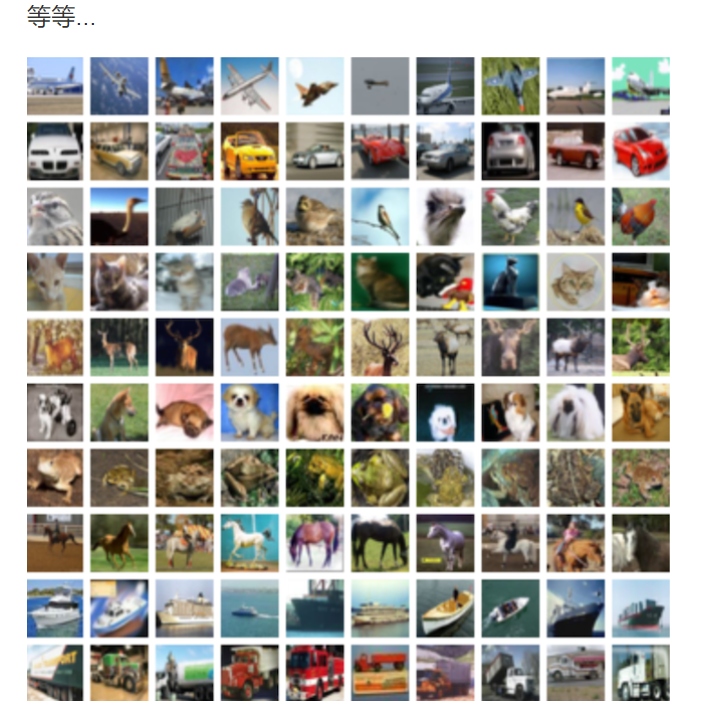

This dataset is similar to CIFAR-10, except that it has 100 classes with 600 images each: 500 training images and 100 test images per class (32x32 color images, 50,000 for training and 10,000 for testing in total). The 100 classes in CIFAR-100 are grouped into 20 superclasses. Each image has a "fine" label (the class it belongs to) and a "coarse" label (the superclass it belongs to). The following is a list of categories in CIFAR-100:

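4.5.3.1 API usage

- APIs for building a CNN model:
  - `Conv2D`: convolution layer; the main arguments are `kernel_size`, `strides`, `padding` and `data_format` (`'channels_last'`, i.e. NHWC, or `'channels_first'`, i.e. NCHW)
  - `MaxPool2D`: max-pooling layer

```python
import tensorflow as tf
from tensorflow import keras

# Convolution: 32 filters of size 5x5, stride 1, 'same' padding, NHWC input, ReLU activation
conv = keras.layers.Conv2D(32, kernel_size=5, strides=1,
                           padding='same', data_format='channels_last', activation=tf.nn.relu)
# Max pooling: 2x2 window, stride 2
pool = keras.layers.MaxPool2D(pool_size=2, strides=2, padding='same')
```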
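A quick way to confirm what these two layers do to the data shape is to apply them to a random batch. The batch size of 4 and the random pixel values are arbitrary choices for illustration.

```python
import numpy as np
import tensorflow as tf

# A random batch of 4 "images" in NHWC layout: 32x32 pixels, 3 color channels
images = np.random.rand(4, 32, 32, 3).astype("float32")

conv = tf.keras.layers.Conv2D(32, kernel_size=5, strides=1, padding="same",
                              data_format="channels_last", activation=tf.nn.relu)
pool = tf.keras.layers.MaxPool2D(pool_size=2, strides=2, padding="same")

features = conv(images)  # 'same' padding with stride 1 keeps the 32x32 spatial size
pooled = pool(features)  # a 2x2 window with stride 2 halves height and width

print(features.shape, pooled.shape, conv.kernel.shape)
```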

4.5.3.2 Step-by-step analysis and code implementation (a reduced LeNet-5)

- Read the dataset:
  - Load the training and test sets from `tf.keras.datasets`
  - Apply shape processing and normalization (scale pixel values to [0, 1])

```python
import tensorflow as tf
import os

os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"


class CNNMnist(object):

    def __init__(self):
        (self.train, self.train_label), (self.test, self.test_label) = \
            tf.keras.datasets.cifar100.load_data()
        self.train = self.train.reshape(-1, 32, 32, 3) / 255.0
        self.test = self.test.reshape(-1, 32, 32, 3) / 255.0
```

- Write the model:
  - Two convolutional layers + two fully connected layers
  - Network design:
    - First layer
      - Convolution: 32 filters, size 5x5, strides=1, padding="SAME"
      - Activation: ReLU
      - Pooling: 2x2 window, strides=2
    - Second layer
      - Convolution: 64 filters, size 5x5, strides=1, padding="SAME"
      - Activation: ReLU
      - Pooling: 2x2 window, strides=2
    - Fully connected layers

Next, determine how the shape of the image data changes through each layer. `cifar100.load_data()` already returns each batch of images with shape [None, 32, 32, 3] (batch, height, width, channels), which is the layout the convolution expects, so the `reshape` call above only makes that layout explicit.

- First layer
  - Convolution: [None, 32, 32, 3] -> [None, 32, 32, 32]
    - Weights: [5, 5, 3, 32]
    - Biases: [32]
  - Activation: [None, 32, 32, 32] -> [None, 32, 32, 32]
  - Pooling: [None, 32, 32, 32] -> [None, 16, 16, 32]
- Second layer
  - Convolution: [None, 16, 16, 32] -> [None, 16, 16, 64]
    - Weights: [5, 5, 32, 64]
    - Biases: [64]
  - Activation: [None, 16, 16, 64] -> [None, 16, 16, 64]
  - Pooling: [None, 16, 16, 64] -> [None, 8, 8, 64]
- Fully connected layers
  - Flatten: [None, 8, 8, 64] -> [None, 8*8*64]
  - [None, 8*8*64] x [8*8*64, 1024] = [None, 1024]
  - [None, 1024] x [1024, 100] -> [None, 100]
  - Weights: [8*8*64, 1024] + [1024, 100] (the 100 depends on the number of categories)
  - Biases: [1024] + [100] (the 100 depends on the number of categories)

```python
model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(32, kernel_size=5, strides=1,
                           padding='same', data_format='channels_last', activation=tf.nn.relu),
    tf.keras.layers.MaxPool2D(pool_size=2, strides=2, padding='same'),
    tf.keras.layers.Conv2D(64, kernel_size=5, strides=1,
                           padding='same', data_format='channels_last', activation=tf.nn.relu),
    tf.keras.layers.MaxPool2D(pool_size=2, strides=2, padding='same'),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(1024, activation=tf.nn.relu),
    tf.keras.layers.Dense(100, activation=tf.nn.softmax),
])
```
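The shape analysis can be checked against Keras's own bookkeeping. This sketch rebuilds the same layers with an explicit `tf.keras.Input(shape=(32, 32, 3))` (an addition, so that every layer is built immediately) and compares each layer's `count_params()` with weights + biases taken from the analysis above.

```python
import numpy as np
import tensorflow as tf

# Same layers as the model above, plus an explicit Input so every layer is built immediately
model = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(32, kernel_size=5, strides=1, padding='same', activation=tf.nn.relu),
    tf.keras.layers.MaxPool2D(pool_size=2, strides=2, padding='same'),
    tf.keras.layers.Conv2D(64, kernel_size=5, strides=1, padding='same', activation=tf.nn.relu),
    tf.keras.layers.MaxPool2D(pool_size=2, strides=2, padding='same'),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(1024, activation=tf.nn.relu),
    tf.keras.layers.Dense(100, activation=tf.nn.softmax),
])

# Weights + biases per layer, from the shape analysis (pooling and flatten have none)
expected = [
    5 * 5 * 3 * 32 + 32,        # first convolution
    0,                          # first pooling
    5 * 5 * 32 * 64 + 64,       # second convolution
    0,                          # second pooling
    0,                          # flatten
    8 * 8 * 64 * 1024 + 1024,   # first fully connected layer
    1024 * 100 + 100,           # output layer, 100 classes
]
actual = [layer.count_params() for layer in model.layers]

out = model(np.zeros((1, 32, 32, 3), dtype="float32"))
print(actual == expected, model.count_params(), out.shape)
```

Almost all of the roughly 4.35 million parameters sit in the first fully connected layer (8*8*64*1024 weights), which is typical when a flattened feature map feeds a wide dense layer.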

- The remaining methods of the class:

```python
    def compile(self):
        CNNMnist.model.compile(optimizer=tf.keras.optimizers.Adam(),
                               loss=tf.keras.losses.sparse_categorical_crossentropy,
                               metrics=['accuracy'])
        return None

    def fit(self):
        CNNMnist.model.fit(self.train, self.train_label, epochs=1, batch_size=32)
        return None

    def evaluate(self):
        test_loss, test_acc = CNNMnist.model.evaluate(self.test, self.test_label)
        print(test_loss, test_acc)
        return None


if __name__ == '__main__':
    cnn = CNNMnist()
    cnn.compile()
    cnn.fit()
    # This fragment defines evaluate() (not predict()), so call that after training
    cnn.evaluate()
    # summary() prints the table itself and returns None
    CNNMnist.model.summary()
```

- Training output (first epoch, truncated):

```
epoch 1:
......
43168/50000 [========================>.....] - ETA: 35s - loss: 3.6360 - acc: 0.1547
43200/50000 [========================>.....] - ETA: 35s - loss: 3.6354 - acc: 0.1547
43232/50000 [========================>.....] - ETA: 35s - loss: 3.6352 - acc: 0.1548
43264/50000 [========================>.....] - ETA: 34s - loss: 3.6348 - acc: 0.1549
43296/50000 [========================>.....] - ETA: 34s - loss: 3.6346 - acc: 0.1549
```

4.5.3.3 Saving and restoring models manually

1. Save weights manually

Saving weights manually is as simple as calling the `Model.save_weights` method. By default, `tf.keras` (and `save_weights` in particular) uses the TensorFlow checkpoint format with a `.ckpt` extension; a file path ending in `.h5` selects the HDF5 format instead.

The weights are stored in a collection of checkpoint-format files that contain only the trained weights, in binary format. A checkpoint consists of:

1. One or more shards containing the model weights.
2. An index file indicating which weights are stored in which shard.

If you train a model on a single machine, there is just one shard, with the suffix `.data-00000-of-00001`.

- A checkpoint contains only the serialized values of a set of `Variable` objects, not the graph structure. The computation graph therefore cannot be rebuilt from the checkpoint alone: the code that defines the model is needed to restore it.
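The shard/index layout can be seen with the low-level `tf.train.Checkpoint` API, used here in place of `save_weights` because it writes the TensorFlow checkpoint format directly. In this sketch the `demo` prefix and the 2x2 variable are made up: a single variable is written, the directory listing shows the index file and one data shard, and restoring requires creating a matching variable in code first, since the checkpoint holds values only.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

ckpt_dir = tempfile.mkdtemp()
prefix = os.path.join(ckpt_dir, "demo")

# Write one variable in the TensorFlow checkpoint format
weights = tf.Variable([[1.0, 2.0], [3.0, 4.0]])
tf.train.Checkpoint(w=weights).write(prefix)
files = sorted(os.listdir(ckpt_dir))
print(files)  # the index file plus a single data shard

# The checkpoint holds values only: code must recreate a matching variable first
restored = tf.Variable(tf.zeros([2, 2]))
tf.train.Checkpoint(w=restored).read(prefix).assert_existing_objects_matched()
print(restored.numpy())
```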

Usage:

```python
# Save the weights
model.save_weights('./checkpoints/my_checkpoint')

# Create a new model instance (create_model() stands for the code that builds and compiles the same architecture)
model = create_model()

# Load the weights
model.load_weights('./checkpoints/my_checkpoint')

# Evaluate the restored model
loss, acc = model.evaluate(test_images, test_labels, verbose=2)
print("Restored model, accuracy: {:5.2f}%".format(100 * acc))
```

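Applied to the earlier example:

- Save as ckpt:
  - `model.save_weights('./weights/my_model')`
  - `model.load_weights('./weights/my_model')`

```python
# After training: save the weights in TensorFlow checkpoint format
SingleNN.model.save_weights("./ckpt/SingleNN")

def predict(self):
    # Use the trained weights directly for testing
    # ("./ckpt/checkpoint" is the bookkeeping file written next to the checkpoint)
    if os.path.exists("./ckpt/checkpoint"):
        SingleNN.model.load_weights("./ckpt/SingleNN")
    predictions = SingleNN.model.predict(self.test)
    # Convert class probabilities into predicted class indices (requires numpy imported as np)
    print(np.argmax(predictions, 1))
    return
```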

2. Save the entire model

The model and optimizer can be saved to a single file containing both their state (weights and variables) and the model configuration. This exports the model so that it can be used without access to the original Python code, and because the optimizer state is restored, training can resume exactly where it was interrupted.

Saving the complete model is also useful for deployment: the saved model (HDF5 or SavedModel) can be loaded in TensorFlow.js to train and run in a web browser, or converted with TensorFlow Lite (HDF5 or SavedModel) to run on mobile devices.

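- Save the entire model as an HDF5 file:

```python
# Save the entire model (architecture, weights and optimizer state) to one HDF5 file;
# save_weights() would store the weights only
SingleNN.model.save("./ckpt/SingleNN.h5")

def predict(self):
    # Restore the entire model from the HDF5 file; no model-building code is required
    if os.path.exists("./ckpt/SingleNN.h5"):
        SingleNN.model = tf.keras.models.load_model("./ckpt/SingleNN.h5")
    predictions = SingleNN.model.predict(self.test)
    print(np.argmax(predictions, 1))
    return
```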
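A self-contained version of the whole-model round trip, with a hypothetical small `Sequential` model standing in for `SingleNN.model`: after `model.save` to an `.h5` path, `tf.keras.models.load_model` rebuilds the model from the file alone, without any model-building code, and its predictions match the original's. Recent Keras versions may print a warning that HDF5 is a legacy format.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical small model standing in for SingleNN.model
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

path = os.path.join(tempfile.mkdtemp(), "whole_model.h5")
model.save(path)  # architecture and weights in one HDF5 file

# The architecture comes from the file, not from code
restored = tf.keras.models.load_model(path)

x = np.random.default_rng(1).normal(size=(3, 4)).astype("float32")
same = np.allclose(model.predict(x, verbose=0), restored.predict(x, verbose=0))
print(same)
```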

4.5.4 Custom layers, loss functions and evaluation metrics

You may also ask: what if the existing layers cannot meet my requirements and I need to define my own layer? In fact, we can not only inherit from `tf.keras.Model` to write our own model class, but also inherit from `tf.keras.layers.Layer` to write our own layer.

4.5.4.1 Custom layers

A custom layer inherits from the `tf.keras.layers.Layer` class and overrides the `__init__`, `build` and `call` methods, as shown below:

```python
class MyLayer(tf.keras.layers.Layer):
    def __init__(self):
        super().__init__()
        # setup code

    def build(self, input_shape):  # input_shape is a TensorShape object describing the input shape
        # This code runs the first time the layer is used. Creating variables here lets
        # their shapes adapt to the shape of the input, so the user does not need to
        # specify the variable shapes separately.
        # If the variable shapes are fully known in advance, they can also be created in __init__
        self.variable_0 = self.add_weight(...)
        self.variable_1 = self.add_weight(...)

    def call(self, inputs):
        # Code run when the model calls the layer (processes the input and returns the output)
        return output
```

For example, to implement a fully connected layer like `tf.keras.layers.Dense` ourselves, we can write the following. This code creates two variables in the `build` method and uses them in the `call` method:

```python
class LinearLayer(tf.keras.layers.Layer):
    def __init__(self, units):
        super().__init__()
        self.units = units

    def build(self, input_shape):  # input_shape is the shape of `inputs` the first time call() runs
        # add_weight replaces the deprecated add_variable alias
        self.w = self.add_weight(name='w',
                                 shape=[input_shape[-1], self.units], initializer=tf.zeros_initializer())
        self.b = self.add_weight(name='b',
                                 shape=[self.units], initializer=tf.zeros_initializer())

    def call(self, inputs):
        y_pred = tf.matmul(inputs, self.w) + self.b
        return y_pred
```
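To see that `build` really runs lazily, this sketch repeats `LinearLayer` so the block runs on its own (using the string initializer `"zeros"` instead of `tf.zeros_initializer()`, an equivalent choice) and inspects the layer's weights before and after its first call on a hypothetical batch of 4 samples with 3 features.

```python
import numpy as np
import tensorflow as tf


class LinearLayer(tf.keras.layers.Layer):
    """Same fully connected layer as above, repeated so this block runs on its own."""

    def __init__(self, units):
        super().__init__()
        self.units = units

    def build(self, input_shape):
        # input_shape[-1] (the number of input features) is only known at the first call
        self.w = self.add_weight(name="w", shape=(input_shape[-1], self.units), initializer="zeros")
        self.b = self.add_weight(name="b", shape=(self.units,), initializer="zeros")

    def call(self, inputs):
        return tf.matmul(inputs, self.w) + self.b


layer = LinearLayer(units=2)
before = len(layer.weights)  # no variables exist before the first call

out = layer(tf.ones((4, 3)))  # first call: build() runs with input_shape (4, 3)
shapes = [tuple(v.shape) for v in layer.weights]
print(before, shapes, out.shape)
```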

When defining the model, we can then use our custom layer `LinearLayer` just like any other Keras layer:

```python
class LinearModel(tf.keras.Model):
    def __init__(self):
        super().__init__()
        self.layer = LinearLayer(units=1)

    def call(self, inputs):
        output = self.layer(inputs)
        return output
```

4.5.4.2 Custom loss functions and evaluation metrics

A custom loss function inherits from the `tf.keras.losses.Loss` class and overrides the `call` method, which receives the ground truth `y_true` and the model prediction `y_pred` and returns the loss computed between them. The following example implements the mean squared error loss:

```python
class MeanSquaredError(tf.keras.losses.Loss):
    def call(self, y_true, y_pred):
        return tf.reduce_mean(tf.square(y_pred - y_true))
```
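A quick sanity check of the custom loss with made-up values (the class is repeated so the block is self-contained): for these targets and predictions it should agree with the built-in `tf.keras.losses.MeanSquaredError`, i.e. (0.5^2 + 0^2 + 1^2) / 3.

```python
import numpy as np
import tensorflow as tf


class MeanSquaredError(tf.keras.losses.Loss):
    # Same custom loss as above, repeated so this block is self-contained
    def call(self, y_true, y_pred):
        return tf.reduce_mean(tf.square(y_pred - y_true))


# Made-up targets and predictions: errors of 0.5, 0 and -1
y_true = np.array([[1.0], [2.0], [3.0]], dtype="float32")
y_pred = np.array([[1.5], [2.0], [2.0]], dtype="float32")

custom = MeanSquaredError()(y_true, y_pred)
builtin = tf.keras.losses.MeanSquaredError()(y_true, y_pred)
print(float(custom), float(builtin))
```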

A custom evaluation metric inherits from the `tf.keras.metrics.Metric` class and overrides `__init__`, `update_state` and `result`. The following example simply re-implements the `SparseCategoricalAccuracy` metric used earlier:

```python
class SparseCategoricalAccuracy(tf.keras.metrics.Metric):
    def __init__(self):
        super().__init__()
        self.total = self.add_weight(name='total', dtype=tf.int32, initializer=tf.zeros_initializer())
        self.count = self.add_weight(name='count', dtype=tf.int32, initializer=tf.zeros_initializer())

    def update_state(self, y_true, y_pred, sample_weight=None):
        # Flatten labels of shape (batch, 1) and cast them to int32 so they match argmax;
        # sample_weight is ignored in this simple version
        y_true = tf.cast(tf.reshape(y_true, [-1]), tf.int32)
        values = tf.cast(tf.equal(y_true, tf.argmax(y_pred, axis=-1, output_type=tf.int32)), tf.int32)
        self.total.assign_add(tf.shape(y_true)[0])
        self.count.assign_add(tf.reduce_sum(values))

    def result(self):
        return self.count / self.total
```
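A short usage sketch of the metric outside `fit`. The class is repeated so the block runs on its own; this version flattens `y_true` and casts it to `int32`, based on the assumption that labels may arrive as floats with shape (batch, 1). Two made-up batches are fed through `update_state`, and `result()` reports the running accuracy over everything seen so far.

```python
import numpy as np
import tensorflow as tf


class SparseCategoricalAccuracy(tf.keras.metrics.Metric):
    def __init__(self):
        super().__init__()
        self.total = self.add_weight(name="total", dtype="int32", initializer="zeros")
        self.count = self.add_weight(name="count", dtype="int32", initializer="zeros")

    def update_state(self, y_true, y_pred, sample_weight=None):
        # Assumption: labels may arrive as floats of shape (batch, 1), so flatten and cast them
        y_true = tf.cast(tf.reshape(y_true, [-1]), tf.int32)
        predicted = tf.argmax(y_pred, axis=-1, output_type=tf.int32)
        values = tf.cast(tf.equal(y_true, predicted), tf.int32)
        self.total.assign_add(tf.shape(y_true)[0])
        self.count.assign_add(tf.reduce_sum(values))

    def result(self):
        return self.count / self.total


metric = SparseCategoricalAccuracy()

# Batch 1: predicted classes [0, 1, 1, 1] vs labels [0, 1, 2, 1] -> 3 of 4 correct
metric.update_state(np.array([[0.0], [1.0], [2.0], [1.0]]),
                    np.array([[0.8, 0.1, 0.1],
                              [0.1, 0.8, 0.1],
                              [0.2, 0.5, 0.3],
                              [0.3, 0.6, 0.1]]))
after_first = float(metric.result())

# Batch 2: predicted classes [2, 1] vs labels [2, 0] -> 1 of 2 correct
metric.update_state(np.array([2, 0]), np.array([[0.1, 0.2, 0.7], [0.3, 0.4, 0.3]]))
after_second = float(metric.result())  # running accuracy over all 6 samples
print(after_first, after_second)
```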

4.5.5 Summary

- Using the Keras pipeline (Sequential and Functional APIs)

- Training and evaluating models with the built-in Keras methods

- Saving and loading Keras models

- Custom layers, loss functions and evaluation metrics in Keras

Complete code (`keras_sequential.py`):

```python
import tensorflow as tf
import numpy as np
import os

os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"


class CNNCifar(object):
    """CNN for CIFAR-100 image classification
    """
    # 2. Define the model
    # First layer: 32 filters, 5x5, strides=1, padding='same'
    model = tf.keras.Sequential([
        tf.keras.layers.Conv2D(32, kernel_size=5, strides=1, padding='same', activation=tf.nn.relu),
        tf.keras.layers.MaxPool2D(pool_size=2, strides=2),
        tf.keras.layers.Conv2D(64, kernel_size=5, strides=1, padding='same', activation=tf.nn.relu),
        tf.keras.layers.MaxPool2D(pool_size=2, strides=2),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(1024, activation=tf.nn.relu),
        tf.keras.layers.Dense(100, activation=tf.nn.softmax)
    ])

    def __init__(self):
        # 1. Read the data and apply shape processing and normalization
        (self.train, self.train_label), (self.test, self.test_label) = \
            tf.keras.datasets.cifar100.load_data()
        self.train = self.train.reshape(-1, 32, 32, 3) / 255.0
        self.test = self.test.reshape(-1, 32, 32, 3) / 255.0

    def compile(self):
        CNNCifar.model.compile(optimizer=tf.keras.optimizers.Adam(),
                               loss=tf.keras.losses.sparse_categorical_crossentropy,
                               metrics=['accuracy'])
        return None

    def fit(self):
        # Save the full model after every epoch; save_freq='epoch' replaces the deprecated period=1
        check = tf.keras.callbacks.ModelCheckpoint('./ckpt/cnncifar_{epoch:02d}-{val_loss:.2f}.h5',
                                                   monitor='val_loss',
                                                   save_best_only=False,
                                                   save_weights_only=False,
                                                   mode='auto',
                                                   save_freq='epoch')
        tensorboard = tf.keras.callbacks.TensorBoard(log_dir='./graph', histogram_freq=1,
                                                     write_graph=True, write_images=True)
        CNNCifar.model.fit(self.train, self.train_label, epochs=1, batch_size=32,
                           callbacks=[check, tensorboard],
                           validation_data=(self.test, self.test_label))
        return None

    def evaluate(self):
        test_loss, test_acc = CNNCifar.model.evaluate(self.test, self.test_label)
        print(test_loss, test_acc)
        return None

    def predict(self):
        # Use the weights saved after training directly for testing
        if os.path.exists("./ckpt/checkpoint"):
            CNNCifar.model.load_weights("./ckpt/CNNCifar")
        predictions = CNNCifar.model.predict(self.test)
        # Convert class probabilities into predicted class indices
        print(np.argmax(predictions, 1))
        return


if __name__ == '__main__':
    cnc = CNNCifar()
    # cnc.compile()
    # cnc.fit()
    # cnc.evaluate()
    # cnc.model.summary()
    # cnc.model.save_weights("./ckpt/CNNCifar")
```