Hello everyone, our business volume has soared recently, which has led to my being stupid recently. A few nights ago, it was found that due to the surge of business pressure, several instances of a core micro service were newly expanded, and the Redis connection failed to varying degrees:

org.springframework.data.redis.RedisConnectionFailureException: Unable to connect to Redis; nested exception is io.lettuce.core.RedisConnectionException: Unable to connect to redis.production.com at org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory$ExceptionTranslatingConnectionProvider.translateException(LettuceConnectionFactory.java:1553) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory$ExceptionTranslatingConnectionProvider.getConnection(LettuceConnectionFactory.java:1461) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.connection.lettuce.LettuceConnection.doGetAsyncDedicatedConnection(LettuceConnection.java:1027) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.connection.lettuce.LettuceConnection.getOrCreateDedicatedConnection(LettuceConnection.java:1013) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.connection.lettuce.LettuceConnection.openPipeline(LettuceConnection.java:527) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.connection.DefaultStringRedisConnection.openPipeline(DefaultStringRedisConnection.java:3245) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at jdk.internal.reflect.GeneratedMethodAccessor319.invoke(Unknown Source) ~[?:?] at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:?] at java.lang.reflect.Method.invoke(Method.java:566) ~[?:?] at org.springframework.data.redis.core.CloseSuppressingInvocationHandler.invoke(CloseSuppressingInvocationHandler.java:61) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at com.sun.proxy.$Proxy355.openPipeline(Unknown Source) ~[?:?] at org.springframework.data.redis.core.RedisTemplate.lambda$executePipelined$1(RedisTemplate.java:318) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:222) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:189) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:176) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.executePipelined(RedisTemplate.java:317) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.executePipelined(RedisTemplate.java:307) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate$$FastClassBySpringCGLIB$$81812bd6.invoke(<generated>) ~[spring-data-redis-2.4.9.jar!/:2.4.9] //Omit some stacks Caused by: org.springframework.dao.QueryTimeoutException: Redis command timed out at org.springframework.data.redis.connection.lettuce.LettuceConnection.closePipeline(LettuceConnection.java:592) ~[spring-data-redis-2.4.9.jar!/:2.4.9] ... 142 more

At the same time, there is also an exception that the business calls Redis command timeout:

org.springframework.data.redis.connection.RedisPipelineException: Pipeline contained one or more invalid commands; nested exception is org.springframework.data.redis.connection.RedisPipelineException: Pipeline contained one or more invalid commands; nested exception is org.springframework.dao.QueryTimeoutException: Redis command timed out at org.springframework.data.redis.connection.lettuce.LettuceConnection.closePipeline(LettuceConnection.java:594) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.connection.DefaultStringRedisConnection.closePipeline(DefaultStringRedisConnection.java:3224) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at jdk.internal.reflect.GeneratedMethodAccessor198.invoke(Unknown Source) ~[?:?] at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:?] at java.lang.reflect.Method.invoke(Method.java:566) ~[?:?] at org.springframework.data.redis.core.CloseSuppressingInvocationHandler.invoke(CloseSuppressingInvocationHandler.java:61) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at com.sun.proxy.$Proxy355.closePipeline(Unknown Source) ~[?:?] at org.springframework.data.redis.core.RedisTemplate.lambda$executePipelined$1(RedisTemplate.java:326) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:222) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:189) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:176) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.executePipelined(RedisTemplate.java:317) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate.executePipelined(RedisTemplate.java:307) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.data.redis.core.RedisTemplate$$FastClassBySpringCGLIB$$81812bd6.invoke(<generated>) ~[spring-data-redis-2.4.9.jar!/:2.4.9] at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218) ~[spring-core-5.3.7.jar!/:5.3.7] at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:779) ~[spring-aop-5.3.7.jar!/:5.3.7] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163) ~[spring-aop-5.3.7.jar!/:5.3.7] at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:750) ~[spring-aop-5.3.7.jar!/:5.3.7] at org.springframework.aop.interceptor.ExposeInvocationInterceptor.invoke(ExposeInvocationInterceptor.java:97) ~[spring-aop-5.3.7.jar!/:5.3.7] at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186) ~[spring-aop-5.3.7.jar!/:5.3.7] at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:750) ~[spring-aop-5.3.7.jar!/:5.3.7] at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:692) ~[spring-aop-5.3.7.jar!/:5.3.7] at org.springframework.data.redis.core.StringRedisTemplate$$EnhancerBySpringCGLIB$$c9b8cc15.executePipelined(<generated>) ~[spring-data-redis-2.4.9.jar!/:2.4.9] //Omit part of the stack Caused by: org.springframework.data.redis.connection.RedisPipelineException: Pipeline contained one or more invalid commands; nested exception is org.springframework.dao.QueryTimeoutException: Redis command timed out at org.springframework.data.redis.connection.lettuce.LettuceConnection.closePipeline(LettuceConnection.java:592) ~[spring-data-redis-2.4.9.jar!/:2.4.9] ... 142 more Caused by: org.springframework.dao.QueryTimeoutException: Redis command timed out at org.springframework.data.redis.connection.lettuce.LettuceConnection.closePipeline(LettuceConnection.java:592) ~[spring-data-redis-2.4.9.jar!/:2.4.9] ... 142 more

Our spring data redis configuration is:

spring:

redis:

host: redis.production.com

port: 6379

# command timeout

timeout: 3000

lettuce:

pool:

max-active: 128

max-idle: 128

max-wait: 3000Although these requests failed when the first request was sent to the instance, we have a retry mechanism, and the request succeeded in the end. However, it is 3s more than normal requests, which accounts for about 3% of all requests.

It can be seen from the exception stack that the root cause of the exception is the redis command timeout, but why does the redis command execute when establishing a redis connection?

lettuce the process of establishing a connection

Our Redis access uses the spring data Redis + lattice connection pool. By default, the Redis connection establishment process in lattice is:

- Establish TCP connection

- Make the necessary Handshake:

- For redis 2 x ~ 5. Version of X:

- If the user name and password are required, the user name and password information is sent

- If the pre use heartbeat of the connection is enabled, PING is sent

- For Redis 6 Version of X: 6 After X, a new command HELLO is introduced to uniformly initialize Redis connections: REDIS HELLO , this command parameter can contain the user name and password to complete the verification.

For redis 2 x ~ 5. X, we can configure whether to send PING heartbeat before enabling connection. The default is:

public static final boolean DEFAULT_PING_BEFORE_ACTIVATE_CONNECTION = true;

The Redis version we use is the latest 6.0 x. Therefore, in the connection establishment and handshake stage, you must send a HELLO command and wait for the response to be successful before the connection is created successfully.

So why does this simple command time out?

View Redis command pressure through JFR

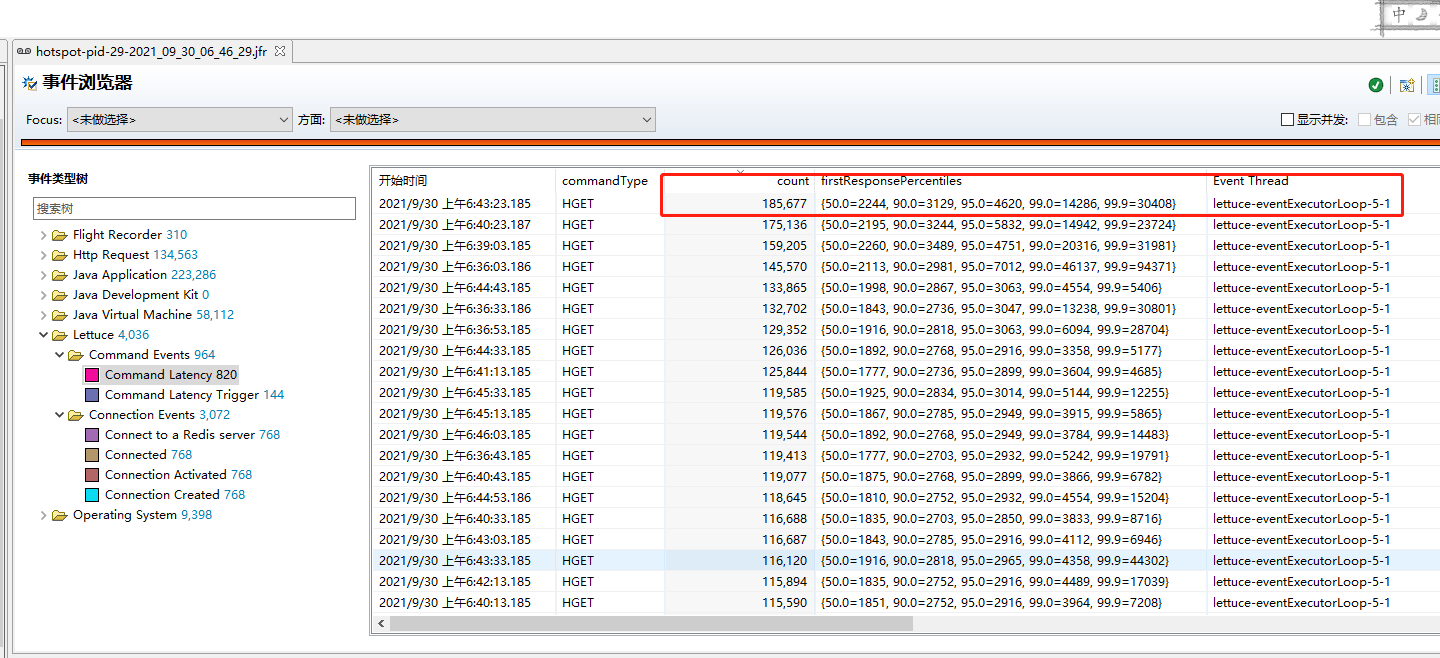

In our project, the redis operation is enabled through the spring data redis + lattice connection pool, and JFR monitoring of lattice commands is added. Please refer to my article: The new monitoring method of Redis connection pool is not poked ~ I'll add a little more seasoning So far, my pull request has been merged, and this feature will be released in 6.2 X version release. Let's look at the Redis command collection near the problem time, as shown in the following figure:

As you can see, Redis is still under great pressure at this time (the unit of firstResponsePercentiles in the figure is microseconds). At this time, we have 7 instances. This instance has just started, and the pressure is relatively small compared with other instances, and the connection command timeout has occurred. Moreover, we only intercepted the HGET command here, and the execution times of the GET command is the same order of magnitude as that of HGET, and then other commands are added It is equivalent to half of HGET. At this time, from the client, the QPS of commands sent to redis has exceeded one million.

From the monitoring of Redis, there are some pressures, which may cause some commands to wait too long, resulting in timeout exceptions.

Thoughts on Optimization

Let's be clear first. For spring data redis + lettuce, If we don't use the command that requires an exclusive connection (including redis transactions and Redis Pipeline), we do not need connection pool, because lettuce is asynchronous and responsive. For requests that can use shared connections, the same actual redis connection will be used for requests, and connection pool is not required. However, in this micro service, a large number of pipeline commands are used to improve query efficiency. If we do not use it Connection pooling will lead to frequent connection closure and creation (hundreds of thousands of connections per second), which will seriously reduce efficiency. Although the official website says that lettuce does not need connection pooling, this is when you do not use transactions and pipelines.

First, Redis capacity expansion: our Redis is deployed on the public cloud. If capacity expansion means improving the machine configuration, the next higher configuration index is twice as much as the current one, and the cost is almost twice as high. At present, only in case of instantaneous pressure, less than 3% of requests fail and retry the next instance, and finally succeed. It is not worth expanding Redis from the perspective of cost.

Then, for applications with excessive pressure, we have a dynamic capacity expansion mechanism. We also have retry for failed requests. But the impact of this problem is:

- Due to the arrival of transient pressure, a large number of requests may arrive at the beginning of a newly started instance, resulting in the mixing of interface requests and heartbeat requests after establishing a connection. And because these requests are not fairly queued, some heartbeat requests respond too slowly, resulting in failure, and the re establishment of the connection may still fail.

- Some instances may establish fewer connections and cannot meet the concurrency requirements. As a result, many requests are actually blocking the process of waiting for connection, so the CPU pressure does not suddenly become great, so the capacity expansion is not triggered. This brings greater lag to capacity expansion.

In fact, if we can minimize or avoid connection creation failures, we can greatly optimize this problem. That is, all connections in the connection pool are created before the microservice instance starts providing services.

How to pre create Redis connection pool connections

Let's first see if we can implement this connection pool with the help of official configuration.

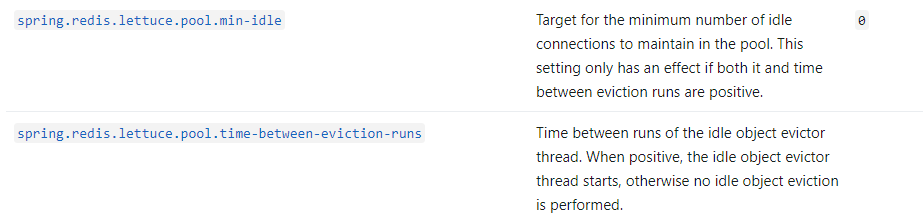

Let's see Official documents , two configurations were found:

Min idle is the minimum number of connections in the connection pool. Time between occurrence runs is a scheduled task to check whether the number of connections in the connection pool meets the minimum number of Min IDs and does not exceed the number of Max IDs. The official document says that Min idle will take effect only if it is configured with time between evil runs. The reason is that the link pool of lettuce is based on Commons pool. The connection pool can be configured with min idle, but you need to manually call preparePool to create at least the number of Min idle objects:

public void preparePool() throws Exception {

//If a valid min idle is configured, ensureMinIdle is called to ensure that at least a number of Min idle objects are created

if (this.getMinIdle() >= 1) {

this.ensureMinIdle();

}

}So when is this called? The Commons pool has scheduled tasks. The initial delay and timing interval are time between eviction runs, which are configured as follows:

public void run() {

final ClassLoader savedClassLoader =

Thread.currentThread().getContextClassLoader();

try {

if (factoryClassLoader != null) {

// Set the class loader for the factory

final ClassLoader cl = factoryClassLoader.get();

if (cl == null) {

// The pool has been dereferenced and the class loader

// GC'd. Cancel this timer so the pool can be GC'd as

// well.

cancel();

return;

}

Thread.currentThread().setContextClassLoader(cl);

}

// Evict from the pool

try {

evict();

} catch(final Exception e) {

swallowException(e);

} catch(final OutOfMemoryError oome) {

// Log problem but give evictor thread a chance to continue

// in case error is recoverable

oome.printStackTrace(System.err);

}

// Re-create idle instances.

try {

ensureMinIdle();

} catch (final Exception e) {

swallowException(e);

}

} finally {

// Restore the previous CCL

Thread.currentThread().setContextClassLoader(savedClassLoader);

}

}It can be seen that this scheduled task mainly ensures that the number of free objects in the current pool does not exceed Max idle, and there are at least min idle links. These are the mechanisms brought by common pools. But we don't need to initialize all links as soon as the connection pool is created.

We need to implement it ourselves. First, we configure min idle = max idle = max active, so that there are the same maximum number of links in the connection pool at any time. After that, we modify the source code where the connection pool is created, and force the call to preparePool to initialize all links, that is:

// This method is called when lettuce initializes to create a connection pool

public static <T extends StatefulConnection<?, ?>> GenericObjectPool<T> createGenericObjectPool(

Supplier<T> connectionSupplier, GenericObjectPoolConfig<T> config, boolean wrapConnections) {

//Omit other codes

GenericObjectPool<T> pool = new GenericObjectPool<T>(new RedisPooledObjectFactory<T>(connectionSupplier), config) {

@Override

public T borrowObject() throws Exception {

return wrapConnections ? ConnectionWrapping.wrapConnection(super.borrowObject(), poolRef.get())

: super.borrowObject();

}

@Override

public void returnObject(T obj) {

if (wrapConnections && obj instanceof HasTargetConnection) {

super.returnObject((T) ((HasTargetConnection) obj).getTargetConnection());

return;

}

super.returnObject(obj);

}

};

//After creation, call preparePool.

try {

pool.preparePool();

} catch (Exception e) {

throw new RedisConnectionException("prepare connection pool failed",e);

}

//Omit other codes

}In this way, when we initialize Redis, we can initialize all Redis links before the microservice really provides services. Since the source code modification is involved here, you can now replace the source code of the dependent library by adding a class with the same name and the same path to the project. For this optimization, I also proposed the issue and the corresponding pull request to lettuce: