Reading guide: fragment upload and breakpoint continuation (resumable upload) will be familiar terms to anyone who has worked with file uploads. This article summarizes both, in the hope of helping or inspiring readers engaged in related work.

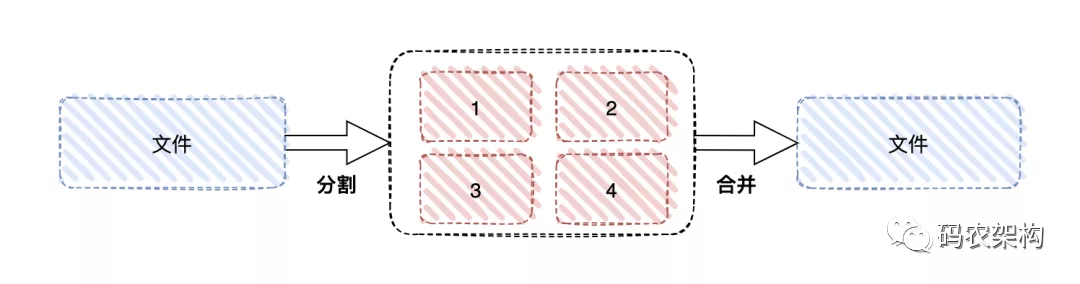

Fragment upload splits the file to be uploaded into multiple data blocks of a given size (each called a Part) and uploads them separately. Once all blocks have been uploaded, the server merges them back into the original file. Fragment upload avoids having to restart from the beginning of the file whenever a poor network interrupts the transfer, and it also allows different blocks to be sent concurrently from multiple threads, which improves transfer efficiency and reduces the total upload time.

1, Background

The details of splitting large files into fragments were described in an earlier article, along with a detailed introduction to how RandomAccessFile supports "random access":

[add content to file]

[insert content to the specified location of the file]

2, Fragment upload

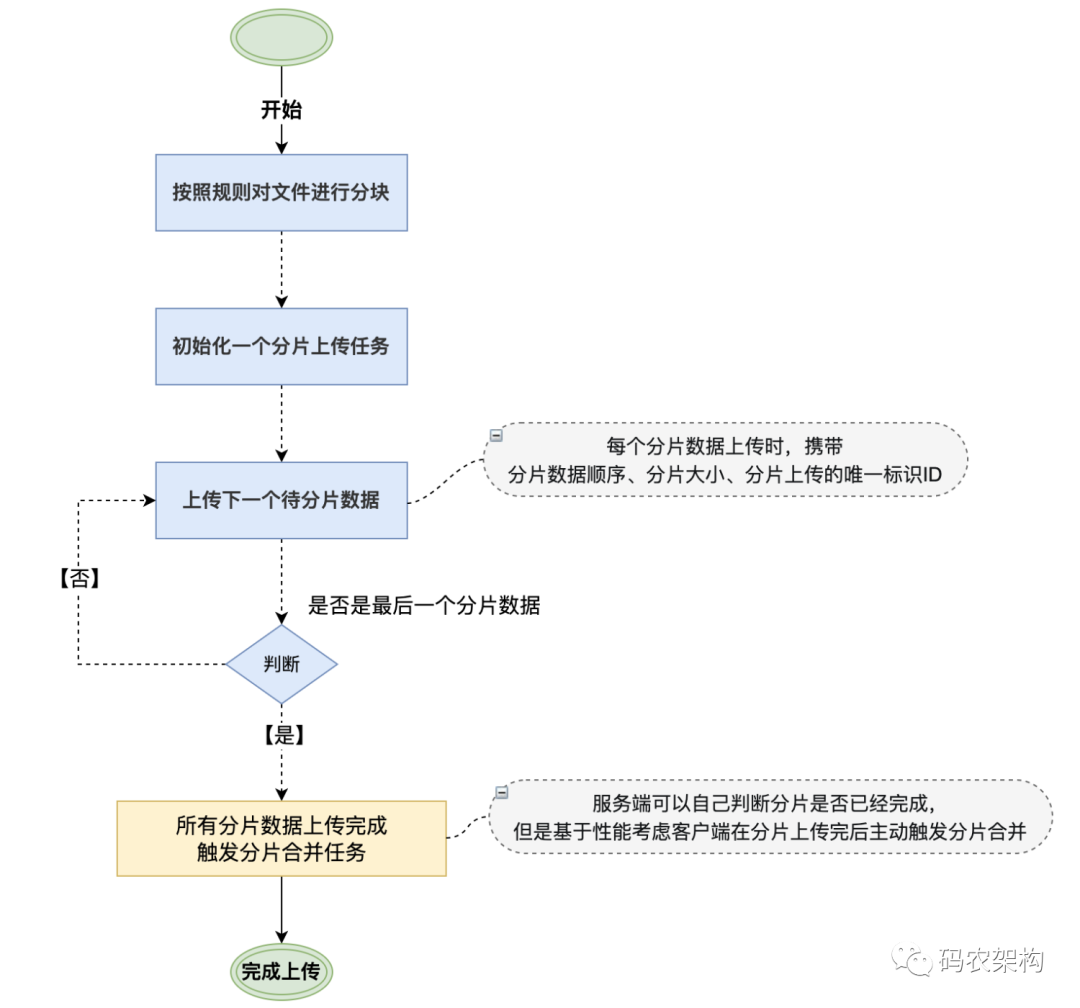

Putting the logic together, fragment upload of a very large file roughly proceeds as follows:

- Divide the file to be uploaded into data blocks of the same size according to the segmentation rules;

- Initialize a fragment upload task and return the unique ID of this upload;

- Send each data block according to a chosen strategy (serial or parallel);

- After sending, the server checks whether all data blocks have been received. If the upload is complete, it merges the data blocks to rebuild the original file.
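The four steps above can be sketched with an in-memory stand-in for the server. This is only an illustration of the flow, not the implementation used in this article: the class `InMemorySliceServer` and its methods `split`, `initUpload` and `uploadPart` are hypothetical names, and a real service would persist parts to disk or a database rather than keep them in a map.

```java
import java.io.ByteArrayOutputStream;
import java.util.Arrays;
import java.util.Map;
import java.util.TreeMap;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

/** In-memory stand-in for the server side of a fragment upload (hypothetical, illustrative only). */
class InMemorySliceServer {
    /** State of one upload task: expected part count plus the parts received so far. */
    private static final class Task {
        final int totalParts;
        final Map<Integer, byte[]> parts = new TreeMap<>(); // kept sorted by part index
        Task(int totalParts) { this.totalParts = totalParts; }
    }

    private final Map<String, Task> tasks = new ConcurrentHashMap<>();

    /** Step 1: split the data into blocks of partSize bytes (the last block may be shorter). */
    static byte[][] split(byte[] data, int partSize) {
        int count = (data.length + partSize - 1) / partSize;
        byte[][] parts = new byte[count][];
        for (int i = 0; i < count; i++) {
            int from = i * partSize;
            parts[i] = Arrays.copyOfRange(data, from, Math.min(from + partSize, data.length));
        }
        return parts;
    }

    /** Step 2: initialize an upload task and return its unique ID. */
    String initUpload(int totalParts) {
        String uploadId = UUID.randomUUID().toString();
        tasks.put(uploadId, new Task(totalParts));
        return uploadId;
    }

    /** Steps 3 and 4: store one part; return the merged file once every part has arrived, else null. */
    byte[] uploadPart(String uploadId, int index, byte[] data) {
        Task task = tasks.get(uploadId);
        if (task == null) {
            throw new IllegalArgumentException("unknown upload ID: " + uploadId);
        }
        synchronized (task) { // parts may arrive concurrently from several threads
            task.parts.put(index, data.clone());
            if (task.parts.size() < task.totalParts) {
                return null; // still waiting for other parts
            }
            ByteArrayOutputStream merged = new ByteArrayOutputStream();
            for (byte[] part : task.parts.values()) {
                merged.write(part, 0, part.length); // TreeMap iterates in index order
            }
            return merged.toByteArray();
        }
    }
}
```

Because parts are keyed by index, they can be sent in any order, serially or in parallel; the merge only happens when the last missing part arrives.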

Signature verification of the data is also part of the upload process, to prevent the data from being maliciously tampered with. The whole upload flow chart is shown below.
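The article does not say which signature scheme is used. As one possible sketch, assuming the client and server share a secret key, each fragment could be signed with HMAC-SHA256 from the standard `javax.crypto` API and verified on arrival. The class `SliceSigner` and its method names are hypothetical.

```java
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Signs and verifies fragment data with HMAC-SHA256 (assumed scheme, illustrative only). */
class SliceSigner {
    private static final String ALGORITHM = "HmacSHA256";

    /** Computes the signature the client sends along with a fragment. */
    static byte[] sign(byte[] sliceData, byte[] sharedKey) {
        try {
            Mac mac = Mac.getInstance(ALGORITHM);
            mac.init(new SecretKeySpec(sharedKey, ALGORITHM));
            return mac.doFinal(sliceData);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HMAC-SHA256 unavailable or key invalid", e);
        }
    }

    /** Server side: recomputes the signature and compares it in constant time. */
    static boolean verify(byte[] sliceData, byte[] signature, byte[] sharedKey) {
        return MessageDigest.isEqual(sign(sliceData, sharedKey), signature);
    }
}
```

A keyed MAC is used here rather than a plain hash because an attacker who alters a fragment could simply recompute an unkeyed digest.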

Building on the principles above, this section continues the case from "Microservice architecture | How to solve the fragment upload of super large attachments?".

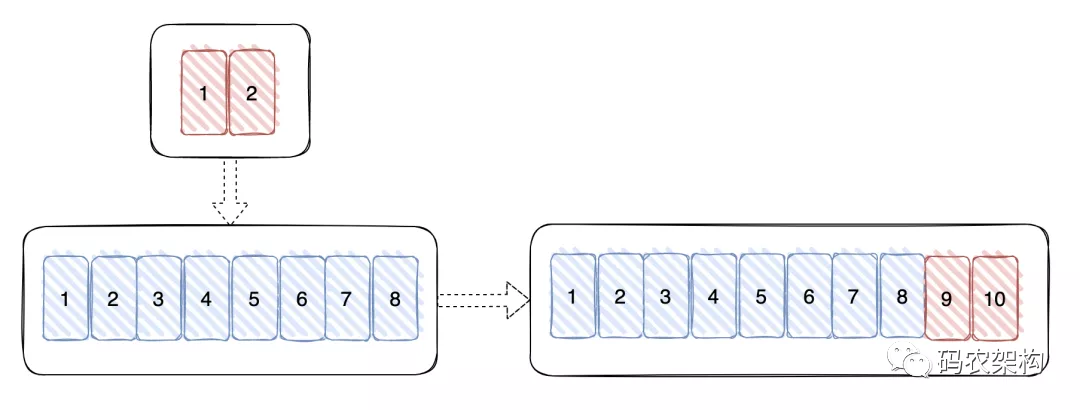

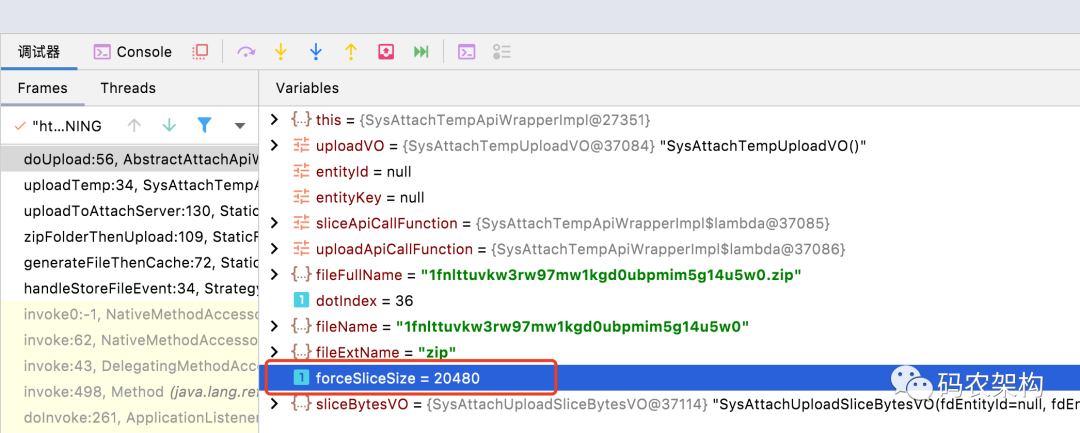

Upload file details:

- Total file size: 37,877 bytes

- Configured maximum slice size: 20L * 1024 = 20,480 bytes

From these values we get:

- Number of slices: 2

- Actual slice size: 18,939 bytes
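The numbers above are consistent with first rounding the slice count up and then spreading the file evenly across the slices. The sketch below reproduces them under that assumption; it is inferred from the figures, not taken from the original implementation, and the class `SlicePlan` is a hypothetical name.

```java
/** Slice plan under an assumed even-split rule (illustrative only). */
final class SlicePlan {
    final long fileSize;
    final int totalSlices;
    final long sliceSize;

    private SlicePlan(long fileSize, int totalSlices, long sliceSize) {
        this.fileSize = fileSize;
        this.totalSlices = totalSlices;
        this.sliceSize = sliceSize;
    }

    static SlicePlan of(long fileSize, long maxSliceSize) {
        if (fileSize <= 0 || maxSliceSize <= 0) {
            throw new IllegalArgumentException("sizes must be positive");
        }
        // Number of slices: file size divided by the maximum slice size, rounded up
        int totalSlices = (int) ((fileSize + maxSliceSize - 1) / maxSliceSize);
        // Actual slice size: file size spread evenly over the slices, rounded up
        long sliceSize = (fileSize + totalSlices - 1) / totalSlices;
        return new SlicePlan(fileSize, totalSlices, sliceSize);
    }

    /** Length in bytes of the slice at the given zero-based index (the last one may be shorter). */
    long lengthOf(int index) {
        long offset = index * sliceSize;
        return Math.min(sliceSize, fileSize - offset);
    }
}
```

With `SlicePlan.of(37877, 20L * 1024)`, `totalSlices` is 2 and `sliceSize` is 18939; the first slice holds 18,939 bytes and the second the remaining 18,938.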

The calculation logic is described in detail in "How to solve the problem of large attachment fragment upload?".

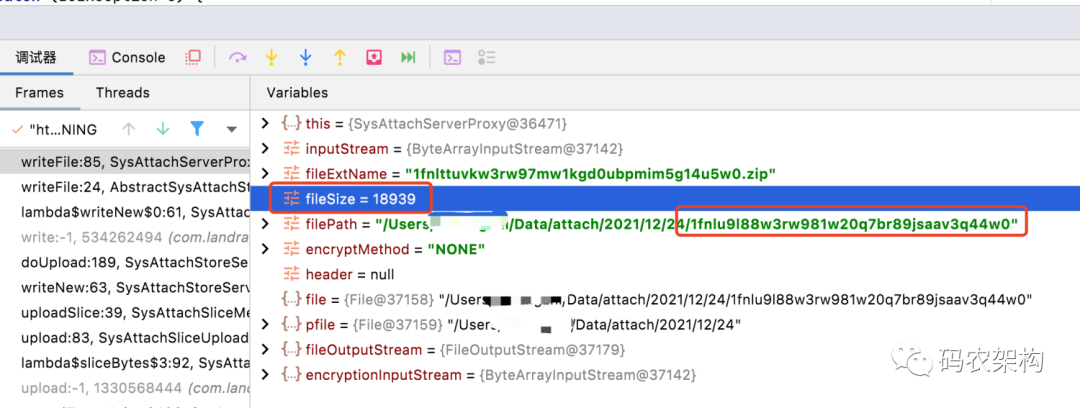

Single slice upload

By default, temporary slice files are stored on the local disk, in folders split by date and time:

/Data/attach/

Read the bytes of each slice:

    try (InputStream inputStream = new FileInputStream(uploadVO.getFile())) {
        for (int i = 0; i < sliceBytesVO.getFdTotalSlices(); i++) {
            // Read the data bytes of the current slice
            this.readSliceBytes(i, inputStream, sliceBytesVO);
            // Call the fragment upload API
            String result = sliceApiCallFunction.apply(sliceBytesVO);
            if (StringUtils.isEmpty(result)) {
                // Empty result: move on to the next slice
                continue;
            }
            // Non-empty result: return it to the caller
            return result;
        }
    }
    // The original catch block only rethrew the IOException, so it is omitted;
    // the exception propagates to the caller either way.

Execute the fragment upload function:

    public SysAttachUploadResultVO upload(InputStream inputStream, SysAttachUploadSliceBaseVO sliceBaseVO) {
        // Look up an existing fragment summary for this file
        SysAttachFileSliceSummary sliceSummary = this.checkExistedSummary(sliceBaseVO);
        // If there is no fragment summary yet, save one
        if (sliceSummary == null) {
            sliceSummary = this.saveSliceSummary(sliceBaseVO);
        }
        // If the current fragment has already been uploaded, skip it
        SysAttachFileSliceVO currentSliceExisted = this.checkSliceExited(sliceSummary.getFdId(),
                sliceBaseVO.getFdSliceIndex());
        if (currentSliceExisted != null) {
            return null;
        }
        // Find the previous fragment through the fragment summary
        SysAttachFileSliceVO previousSlice = this.findPreviousSliceBySummaryId(sliceSummary.getFdId());
        // Write the fragment through the common uploader
        sliceMergeUploader.uploadSlice(inputStream, sliceBaseVO, sliceSummary.getFdId(), previousSlice);
        List<SysAttachFileSlice> sliceList = sysAttachFileSliceService.findBySummaryId(sliceSummary.getFdId());
        // Check whether all fragments have been uploaded
        if (this.checkAllSliceUploaded(sliceList, sliceBaseVO)) {
            // Create the complete attachment and return the temporary attachment file ID
            return sliceMergeUploader.createFullAttach(sliceBaseVO, sliceList, sliceSummary.getFdId());
        }
        // More fragments are still expected
        return null;
    }

Generate the fragment file and save the fragment record:

public String writeFile(InputStream inputStream, String fileExtName, long fileSize, String filePath,

String encryptMethod, Map<String, String> header) {

// When there is no attachment file on the server, build input and output streams respectively

File file = new File(filePath);

File pfile = file.getParentFile();

if (!pfile.exists()) {

pfile.mkdirs();

}

if (file.exists()) {

file.delete();

}

FileOutputStream fileOutputStream = null;

InputStream encryptionInputStream = null;

try {

file.createNewFile();

fileOutputStream = new FileOutputStream(file);

// Write attachment file

encryptionInputStream = getEncryptService(encryptMethod).initEncryptInputStream(inputStream);

IOUtils.copy(encryptionInputStream, fileOutputStream);

return filePath;

} catch (IOException e) {

throw new KmssRuntimeException("attach.msg.error.SysAttachWriteFailed", e);

} finally {

// After the attachment write operation is completed, close the output stream and input stream in turn to release the memory space where the output stream and input stream are located

IOUtils.closeQuietly(fileOutputStream);

IOUtils.closeQuietly(encryptionInputStream);

IOUtils.closeQuietly(inputStream);

}

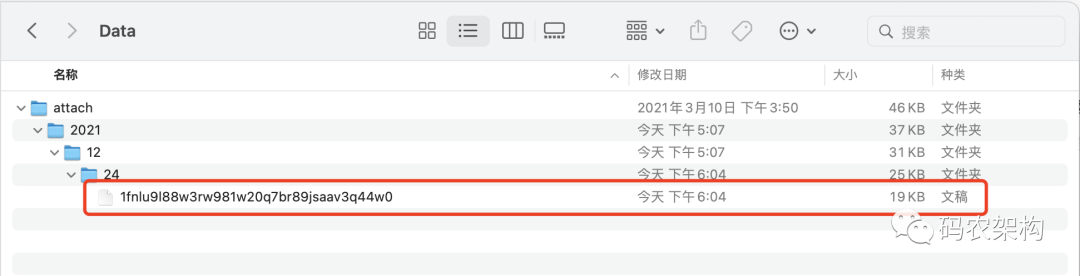

}First slice

[slice details]

[local disk partition file]

In the same way, upload the second fragment, and then merge all fragments in order.

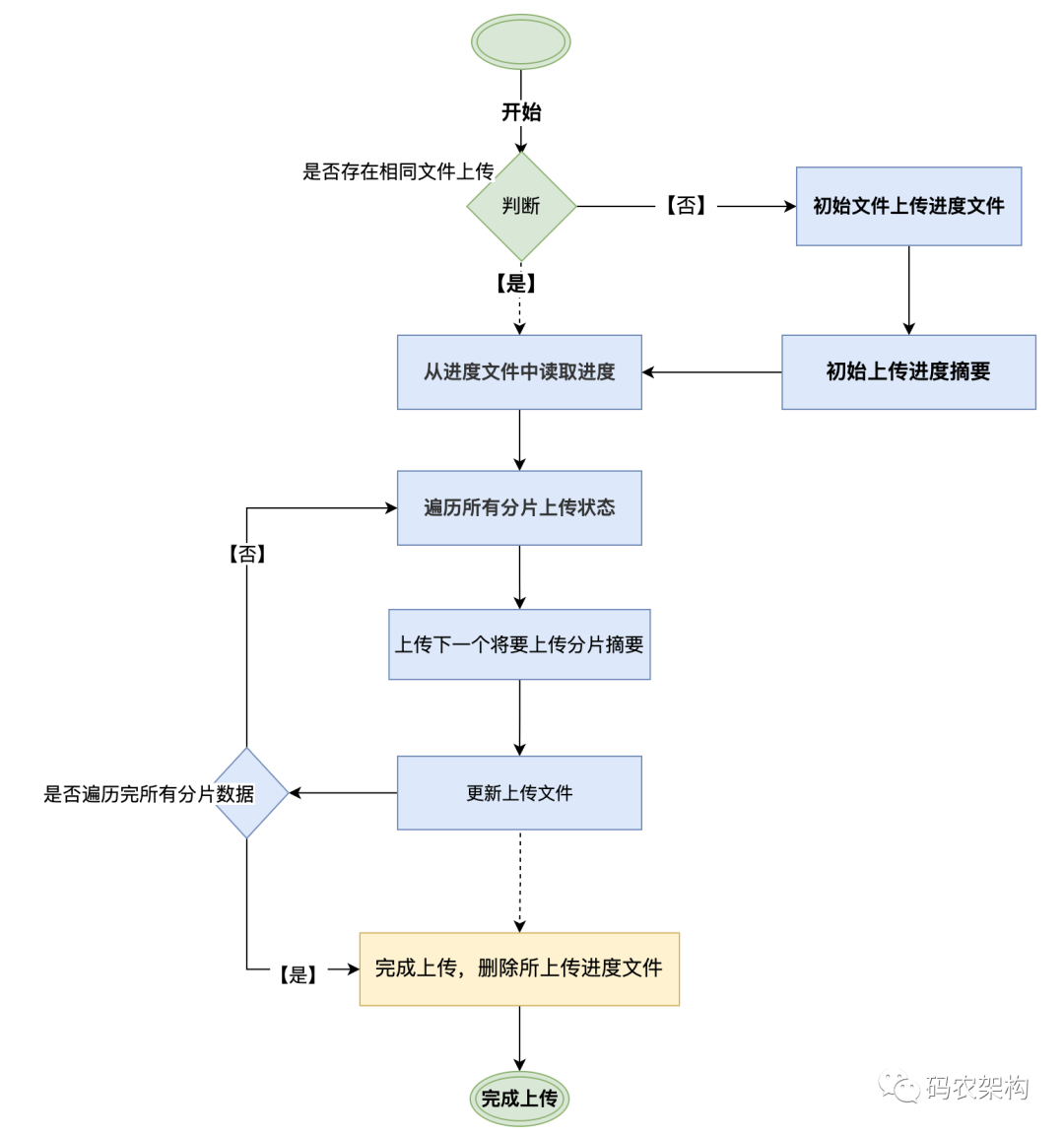

3, Breakpoint continuation

Because uploaded fragments are persisted on the server, breakpoint continuation is easy to build on top of fragment upload.

If a fragment upload is interrupted by an abnormal condition such as a system crash or a network failure, the client needs to have recorded the upload progress. When uploading becomes possible again, it can continue from the point where the last upload was interrupted.

To guard against the client losing its local progress record, which would force the upload to restart from the beginning, the server can also expose an interface for querying which fragments have already been uploaded, so that the client can continue from the next missing fragment.
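On the client side, resuming then reduces to comparing the total fragment count with the indexes the server reports as uploaded. The following is a minimal sketch of that comparison; the class `ResumePlanner` is a hypothetical name, and how the set of uploaded indexes is fetched from the server is left out because the article does not specify that interface.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Works out which fragments still need to be sent after an interruption (illustrative only). */
class ResumePlanner {
    /**
     * @param totalSlices      total number of fragments of the file
     * @param uploadedOnServer zero-based indexes the server reports as already uploaded
     * @return indexes that still have to be uploaded, in ascending order
     */
    static List<Integer> missingSlices(int totalSlices, Set<Integer> uploadedOnServer) {
        List<Integer> missing = new ArrayList<>();
        for (int i = 0; i < totalSlices; i++) {
            if (!uploadedOnServer.contains(i)) {
                missing.add(i);
            }
        }
        return missing;
    }
}
```

Asking the server rather than trusting only a local record also covers fragments that were uploaded in parallel and therefore completed out of order.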

For implementation details, refer to the fragment upload implementation.

4, Summary

RandomAccessFile can access any position in a file directly, which makes it well suited to cases where only part of a file is needed rather than a read from beginning to end. An important use case is multi-threaded download and breakpoint continuation in network requests.
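As a small illustration of this random access, the sketch below writes and reads fragments at arbitrary byte offsets using `seek`. It is a simplified alternative for illustration, not the merge logic shown earlier in this article, and the class `OffsetSliceWriter` is a hypothetical name.

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;

/** Reads and writes fragments at byte offsets with RandomAccessFile (illustrative only). */
class OffsetSliceWriter {
    /** Writes one fragment at the given offset; fragments may arrive in any order. */
    static void writeSlice(File target, long offset, byte[] data) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(target, "rw")) {
            raf.seek(offset); // jump straight to the fragment's position
            raf.write(data);
        }
    }

    /** Reads exactly length bytes starting at the given offset. */
    static byte[] readSlice(File source, long offset, int length) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(source, "r")) {
            raf.seek(offset);
            byte[] buffer = new byte[length];
            raf.readFully(buffer); // throws EOFException if the file is too short
            return buffer;
        }
    }
}
```

Each call opens its own file handle, so several threads can each work on a different region of the same file.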

The core of fragment upload and breakpoint continuation is, first, using RandomAccessFile well to read and write content and, second, recording the fragment summary information so that it can be compared to determine the upload progress.