Chapter 2: Cluster environment construction

This chapter introduces how to build a Kubernetes cluster environment.

Environment planning

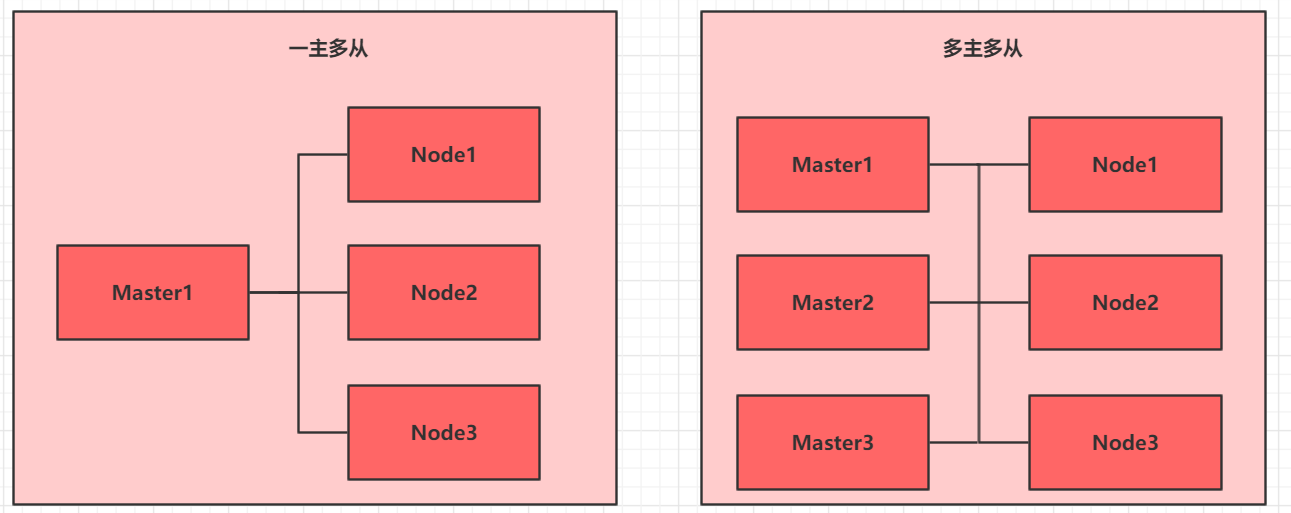

Cluster type

Kubernetes clusters are generally divided into two types: one master with multiple slaves, and multiple masters with multiple slaves.

- One master, multiple slaves: one Master node and multiple Node nodes. Simple to build, but the Master is a single point of failure. Suitable for test environments.

- Multiple masters, multiple slaves: multiple Master nodes and multiple Node nodes. Harder to build, but more reliable because no single Master can take the cluster down. Suitable for production environments.

Note: to keep the test simple, a one-master, multiple-slave cluster is built this time.

Installation mode

Kubernetes can be deployed in many ways. The mainstream methods at present are kubeadm, minikube and binary packages.

- minikube: a tool for quickly building a single-node Kubernetes

- kubeadm: a tool for quickly building Kubernetes clusters

- Binary package: download the binary package of each component from the official website and install them one by one. This method is more helpful for understanding the Kubernetes components.

Note: this chapter needs a Kubernetes cluster environment without too much trouble, so kubeadm is used.

Host planning

| Role | IP address | Operating system | Configuration |
|---|---|---|---|
| Master | 192.168.109.100 | CentOS 7.5, infrastructure server | 2 CPUs, 2G memory, 50G hard disk |
| Node1 | 192.168.109.101 | CentOS 7.5, infrastructure server | 2 CPUs, 2G memory, 50G hard disk |
| Node2 | 192.168.109.102 | CentOS 7.5, infrastructure server | 2 CPUs, 2G memory, 50G hard disk |

Environment construction

This environment requires three CentOS servers (one master and two slaves). Docker (18.06.3), kubeadm (1.17.4), kubelet (1.17.4) and kubectl (1.17.4) are then installed on each server.

Host installation

During virtual machine installation, pay attention to the settings of the following options:

-

Operating system environment: CPU (2C), memory (2G) and hard disk (50G)

-

Language selection: Simplified Chinese

-

Software selection: infrastructure server

-

Zone selection: automatic zones

-

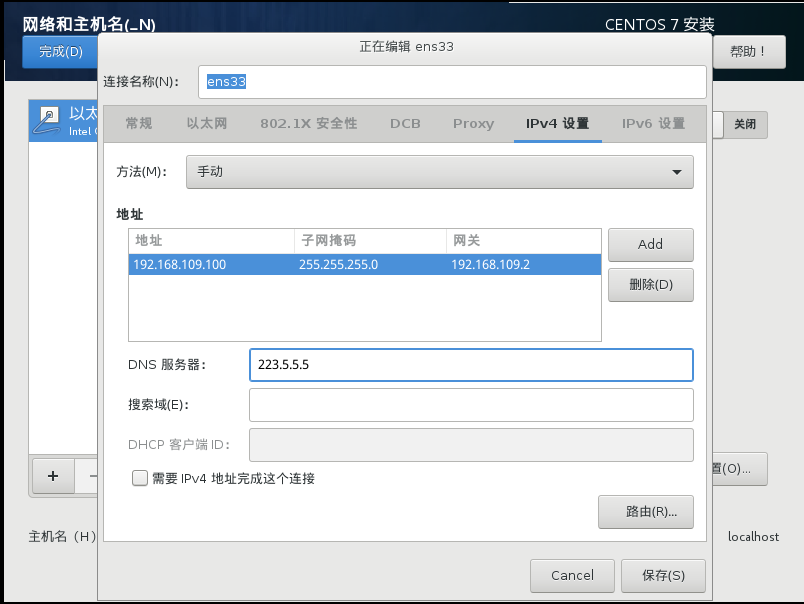

Network configuration: configure the network address information as follows

Network address: 192.168.109.100 (Each host is different (100, 101 and 102 respectively) Subnet mask: 255.255.255.0 Default gateway: 192.168.109.2 DNS: 223.5.5.5

-

Host name setting: set the host name according to the following information

master Node: master node Node: node1 node Node: node2

Environment initialization

1) Check the version of the operating system

# Installing a Kubernetes cluster in this way requires CentOS 7.5 or above

[root@master ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)
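The version check above can also be scripted. The following is a minimal sketch, assuming the release string has the same format as the sample output shown above; the function name `centos_at_least_7_5` is a hypothetical helper, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when a redhat-release string reports
# CentOS 7.5 or newer. The string format is assumed to match the
# sample output shown above.
centos_at_least_7_5() {
  local release="$1" version major minor
  # Take the first "major.minor" pair, e.g. "7.5" from "7.5.1804".
  version=$(printf '%s\n' "$release" | grep -oE '[0-9]+\.[0-9]+' | head -n 1)
  [ -n "$version" ] || return 1
  major=${version%%.*}
  minor=${version#*.}
  [ "$major" -gt 7 ] || { [ "$major" -eq 7 ] && [ "$minor" -ge 5 ]; }
}

# On a real host the argument would come from: cat /etc/redhat-release
if centos_at_least_7_5 "CentOS Linux release 7.5.1804 (Core)"; then
  echo "OS version OK"
else
  echo "OS version too old"
fi
```

Running the same check on all three servers before going further avoids discovering a version mismatch halfway through the installation.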

2) Host name resolution

To make it easy for cluster nodes to call each other directly, configure host name resolution here. In an enterprise, an internal DNS server is recommended.

# Host name resolution: edit the /etc/hosts file on all three servers and add the following content

192.168.109.100 master

192.168.109.101 node1

192.168.109.102 node2
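Because the same entries have to be added on three servers, it can help to make the edit repeatable. The sketch below is one possible approach, not the only one: `add_host_entry` is a hypothetical helper that appends an entry only when the host name is not already present, and the demonstration writes to a scratch file rather than the real /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "ip name" to a hosts file unless an
# entry for that name already exists.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # Match the name as a whole field on a non-comment line.
  if ! grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Demonstration on a scratch file; on a real node (as root) the
# target would be /etc/hosts.
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 192.168.109.100 master
add_host_entry "$hosts_file" 192.168.109.101 node1
add_host_entry "$hosts_file" 192.168.109.102 node2
add_host_entry "$hosts_file" 192.168.109.100 master   # repeated call adds nothing
cat "$hosts_file"
rm -f "$hosts_file"
```

The duplicate check means the script can be rerun safely after a partial setup.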

3) Time synchronization

Kubernetes requires the time on all nodes in the cluster to be accurate and consistent. Here, the chronyd service is used to synchronize the time from the network.

In an enterprise, an internal time synchronization server is recommended.

# Start the chronyd service

[root@master ~]# systemctl start chronyd

# Enable the chronyd service at boot

[root@master ~]# systemctl enable chronyd

# After chronyd starts, wait a few seconds, then verify the time with the date command

[root@master ~]# date

4) Disable iptables and firewalld services

Kubernetes and docker generate a large number of iptables rules while running. To avoid mixing them up with the system's own rules, turn the system firewall services off directly.

# 1 Stop and disable the firewalld service

[root@master ~]# systemctl stop firewalld

[root@master ~]# systemctl disable firewalld

# 2 Stop and disable the iptables service

[root@master ~]# systemctl stop iptables

[root@master ~]# systemctl disable iptables

5) Disable selinux

SELinux is a security service on Linux. If it is not turned off, all kinds of strange problems occur while installing the cluster.

# Edit the /etc/selinux/config file and change the value of SELINUX to disabled

# Note: the Linux system must be rebooted for the change to take effect

SELINUX=disabled
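The edit above can be made without opening an editor. A minimal sketch follows, assuming the config file contains a single active `SELINUX=` line as in a default installation; `disable_selinux_in` is a hypothetical helper, and the demonstration runs against a scratch copy rather than the real file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given config file.
disable_selinux_in() {
  local config_file="$1" tmp
  tmp=$(mktemp)
  # Rewrite only the active SELINUX= line; comments and SELINUXTYPE are untouched.
  sed 's/^SELINUX=.*/SELINUX=disabled/' "$config_file" > "$tmp"
  cat "$tmp" > "$config_file"   # overwrite in place, keeping file permissions
  rm -f "$tmp"
}

# Demonstration on a scratch copy; on a real node (as root) the
# target would be /etc/selinux/config.
config_file=$(mktemp)
cat > "$config_file" <<'EOF'
# This file controls the state of SELinux on the system.
SELINUX=enforcing
SELINUXTYPE=targeted
EOF
disable_selinux_in "$config_file"
cat "$config_file"
rm -f "$config_file"
```

The file change still only takes effect after a reboot. Until then, `setenforce 0` switches the running system to permissive mode, and `getenforce` shows the current mode.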

6) Disable swap partition

The swap partition is a virtual memory partition: once physical memory is used up, disk space is used as virtual memory.

An enabled swap device has a very negative impact on system performance, so Kubernetes requires every node to disable swap.

If the swap partition cannot be turned off for some reason, this must be stated through explicit parameters during cluster installation.

# Edit the partition configuration file /etc/fstab and comment out the swap partition line

# Note: the Linux system must be rebooted for the change to take effect

UUID=455cc753-7a60-4c17-a424-7741728c44a1 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

# /dev/mapper/centos-swap swap swap defaults 0 0
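Commenting out the swap line can be scripted as well. The sketch below is an illustration under the assumption that swap entries carry `swap` in the third (filesystem type) field, as in the sample above; `comment_out_swap` is a hypothetical helper and the demonstration uses a scratch copy of the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  local fstab_file="$1" tmp
  tmp=$(mktemp)
  # Prefix active lines whose third field (filesystem type) is "swap" with "# ".
  # Lines that are already comments are printed unchanged, so reruns are harmless.
  awk '$1 !~ /^#/ && $3 == "swap" { print "# " $0; next } { print }' "$fstab_file" > "$tmp"
  cat "$tmp" > "$fstab_file"   # overwrite in place, keeping file permissions
  rm -f "$tmp"
}

# Demonstration on a scratch copy; on a real node (as root) the
# target would be /etc/fstab.
fstab_file=$(mktemp)
cat > "$fstab_file" <<'EOF'
UUID=455cc753-7a60-4c17-a424-7741728c44a1 /boot xfs defaults 0 0
/dev/mapper/centos-home /home xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF
comment_out_swap "$fstab_file"
cat "$fstab_file"
rm -f "$fstab_file"
```

Editing the file only affects the next boot. On a running system, `swapoff -a` turns swap off immediately, and `free -m` shows whether any swap is still in use.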

7) Modify linux kernel parameters

# Modify the Linux kernel parameters to add bridge filtering and address forwarding

# Edit the /etc/sysctl.d/kubernetes.conf file and add the following configuration:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

# Load the bridge filter module first (the net.bridge.* parameters only exist once it is loaded)

[root@master ~]# modprobe br_netfilter

# Check whether the bridge filter module is loaded successfully

[root@master ~]# lsmod | grep br_netfilter

# Reload the configuration (sysctl -p without a file name only reads /etc/sysctl.conf)

[root@master ~]# sysctl -p /etc/sysctl.d/kubernetes.conf
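A quick way to confirm that the configuration file contains all three parameters is to check it with a small script. This is a sketch only: `missing_kernel_params` is a hypothetical helper that inspects a file, not the live kernel state, and the demonstration uses a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each required key that is NOT set to 1
# in the given sysctl-style file. Prints nothing when all are present.
missing_kernel_params() {
  local conf_file="$1" key normalized
  # Strip all whitespace so "key = 1" and "key=1" compare equal.
  normalized=$(sed 's/[[:space:]]//g' "$conf_file")
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    printf '%s\n' "$normalized" | grep -qxF "${key}=1" || echo "$key"
  done
}

# Demonstration on a scratch file; on a real node the argument
# would be /etc/sysctl.d/kubernetes.conf.
conf_file=$(mktemp)
cat > "$conf_file" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
missing=$(missing_kernel_params "$conf_file")
if [ -z "$missing" ]; then
  echo "all required parameters are set"
else
  echo "missing: $missing"
fi
rm -f "$conf_file"
```

To read the value the kernel is actually using, `sysctl -n net.ipv4.ip_forward` prints the live setting for a single key.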

8) Configure ipvs function

In Kubernetes, a service has two proxy modes: one based on iptables and one based on ipvs.

Of the two, ipvs clearly performs better, but to use it the ipvs kernel modules must be loaded manually.

# 1 Install ipset and ipvsadm

[root@master ~]# yum install ipset ipvsadm -y

# 2 Write the modules to be loaded into a script file

[root@master ~]# cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# 3 Add execute permission to the script file

[root@master ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

# 4 Execute the script file

[root@master ~]# /bin/bash /etc/sysconfig/modules/ipvs.modules

# 5 Check whether the modules are loaded successfully

[root@master ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4
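The module list above fits the 3.10 kernel that ships with CentOS 7.5. On kernels 4.19 and later, `nf_conntrack_ipv4` was merged into `nf_conntrack`, so the last `modprobe` line would fail there. The sketch below shows one way to pick the name from the kernel release; `conntrack_module_for` is a hypothetical helper, and it assumes the release string starts with `major.minor`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the conntrack module name that matches
# a kernel release string such as "3.10.0-862.el7.x86_64".
conntrack_module_for() {
  local release="$1" major minor
  major=${release%%.*}          # text before the first dot
  minor=${release#*.}           # text after the first dot
  minor=${minor%%[!0-9]*}       # keep only the leading digits
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Print the module name for the kernel this script runs on.
conntrack_module_for "$(uname -r)"
```

On the hosts planned in this chapter the function prints `nf_conntrack_ipv4`, which matches the script above.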

9) Restart the server

After the steps above are completed, reboot the Linux system

[root@master ~]# reboot

Install docker

# 1 Switch to a mirror of the docker-ce yum repository

[root@master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# 2 View the docker versions available in the current repository

[root@master ~]# yum list docker-ce --showduplicates

# 3 Install a specific version of docker-ce

# You must specify --setopt=obsoletes=0, otherwise yum will automatically install a later version

[root@master ~]# yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y

# 4 Add a configuration file

# By default, Docker uses cgroupfs as its Cgroup Driver, while Kubernetes recommends systemd instead

[root@master ~]# mkdir -p /etc/docker

[root@master ~]# cat <<EOF > /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://kn0t2bca.mirror.aliyuncs.com"]

}

EOF

# 5 start docker

[root@master ~]# systemctl restart docker

[root@master ~]# systemctl enable docker

# 6 check docker status and version

[root@master ~]# docker version
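After docker restarts, it is worth confirming that the systemd cgroup driver from daemon.json is actually in use. The sketch below extracts the driver from `docker info` text; `cgroup_driver_from_info` is a hypothetical helper, and the sample input is illustrative rather than captured from a real host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker info` text on stdin and print
# the value of its "Cgroup Driver" line.
cgroup_driver_from_info() {
  sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Illustrative input; on a real host use: docker info | cgroup_driver_from_info
sample_info=' Storage Driver: overlay2
 Cgroup Driver: systemd'
driver=$(printf '%s\n' "$sample_info" | cgroup_driver_from_info)
if [ "$driver" = "systemd" ]; then
  echo "cgroup driver OK"
else
  echo "unexpected cgroup driver: $driver"
fi
```

Docker can also print the field directly with `docker info --format '{{.CgroupDriver}}'`.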

Install Kubernetes components

# The official Kubernetes package repository is hosted abroad and is relatively slow, so switch to a domestic mirror here

# Edit /etc/yum.repos.d/kubernetes.repo and add the following configuration

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

# Install kubeadm, kubelet and kubectl

[root@master ~]# yum install --setopt=obsoletes=0 kubeadm-1.17.4-0 kubelet-1.17.4-0 kubectl-1.17.4-0 -y

# Configure the cgroup driver of kubelet

# Edit /etc/sysconfig/kubelet and add the following configuration

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

# Set kubelet to start at boot

[root@master ~]# systemctl enable kubelet

Prepare cluster image

# Before installing the Kubernetes cluster, the images it needs must be prepared in advance. List them with the following command

[root@master ~]# kubeadm config images list

# Download the images

# These images are hosted in the Kubernetes registry, which may be unreachable for network reasons. An alternative is provided below

images=(

kube-apiserver:v1.17.4

kube-controller-manager:v1.17.4

kube-scheduler:v1.17.4

kube-proxy:v1.17.4

pause:3.1

etcd:3.4.3-0

coredns:1.6.5

)

for imageName in ${images[@]} ; do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

done
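Before pulling anything, it can be useful to see exactly which commands the loop will run. The sketch below is a dry-run variant, assuming the same mirror and target registry as above; `print_image_commands` is a hypothetical helper that only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run helper: print the docker commands for each
# image instead of running them.
print_image_commands() {
  local mirror="$1" target="$2" image
  shift 2
  for image in "$@"; do
    echo "docker pull ${mirror}/${image}"
    echo "docker tag ${mirror}/${image} ${target}/${image}"
    echo "docker rmi ${mirror}/${image}"
  done
}

# Image list for v1.17.4, as used in this chapter.
images=(
  kube-apiserver:v1.17.4
  kube-controller-manager:v1.17.4
  kube-scheduler:v1.17.4
  kube-proxy:v1.17.4
  pause:3.1
  etcd:3.4.3-0
  coredns:1.6.5
)

print_image_commands \
  registry.cn-hangzhou.aliyuncs.com/google_containers \
  k8s.gcr.io \
  "${images[@]}"
```

Once the printed commands look right, the same output can be piped into `bash` to execute them. The image tags should match the versions reported by `kubeadm config images list`.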

Cluster initialization

Now initialize the cluster and add the Node nodes to it

The following operations only need to be performed on the master node

# Create cluster

[root@master ~]# kubeadm init \

--kubernetes-version=v1.17.4 \

--pod-network-cidr=10.244.0.0/16 \

--service-cidr=10.96.0.0/12 \

--apiserver-advertise-address=192.168.109.100

# Create necessary files

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

The following operations only need to be performed on the Node nodes (node1 and node2)

# Join the node to the cluster (use the token and hash printed by kubeadm init on your own master)

[root@node1 ~]# kubeadm join 192.168.109.100:6443 \

--token 8507uc.o0knircuri8etnw2 \

--discovery-token-ca-cert-hash \

sha256:acc37967fb5b0acf39d7598f8a439cc7dc88f439a3f4d0c9cae88e7901b9d3f

# Check the cluster status on the master. The status is NotReady at this point because the network plug-in has not been configured yet

[root@master ~]# kubectl get nodes

NAME     STATUS     ROLES    AGE     VERSION

master   NotReady   master   6m43s   v1.17.4

node1    NotReady   <none>   22s     v1.17.4

node2    NotReady   <none>   19s     v1.17.4
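A mistyped token is a common reason for a failed join, so a quick format check before running the command can save time. The sketch below assumes the kubeadm bootstrap token format of six characters, a dot, then sixteen characters, all lowercase letters or digits; `valid_bootstrap_token` is a hypothetical helper that checks the shape only, not whether the token is still valid on the master.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when the argument has the shape of a
# kubeadm bootstrap token ("abcdef.0123456789abcdef").
valid_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

token="8507uc.o0knircuri8etnw2"   # sample token from the text above
if valid_bootstrap_token "$token"; then
  echo "token format OK"
else
  echo "token format invalid"
fi
```

Bootstrap tokens expire (after 24 hours by default). If the original one is no longer accepted, running `kubeadm token create --print-join-command` on the master prints a fresh join command.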

Install network plug-in

Kubernetes supports a variety of network plug-ins, such as flannel, calico and canal. Choose any one of them; flannel is used this time.

The following operations are still performed only on the master node. The plug-in uses a DaemonSet controller, so it runs on every node.

# Get the flannel configuration file

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Deploy flannel using the configuration file

[root@master ~]# kubectl apply -f kube-flannel.yml

# Wait a moment, then check the status of the cluster nodes again

[root@master ~]# kubectl get nodes

NAME     STATUS   ROLES    AGE     VERSION

master   Ready    master   15m     v1.17.4

node1    Ready    <none>   8m53s   v1.17.4

node2    Ready    <none>   8m50s   v1.17.4
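Rather than reading the node table by eye, the nodes that are not yet Ready can be filtered out of the `kubectl get nodes` output. The sketch below assumes the default column layout shown in this chapter (NAME first, STATUS second); `not_ready_nodes` is a hypothetical helper, and the sample input is the pre-flannel output from earlier in the chapter.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and
# print the name of every node whose STATUS is not exactly "Ready".
not_ready_nodes() {
  # NR > 1 skips the header row.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample output from before the network plug-in was installed.
# On the master, use: kubectl get nodes | not_ready_nodes
sample_output='NAME     STATUS     ROLES    AGE     VERSION
master   NotReady   master   6m43s   v1.17.4
node1    NotReady   <none>   22s     v1.17.4
node2    NotReady   <none>   19s     v1.17.4'
printf '%s\n' "$sample_output" | not_ready_nodes
```

An empty result means every node reports Ready, which is the state expected once the flannel pods are running.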

At this point, the Kubernetes cluster environment has been built

Service deployment

Next, deploy an nginx program in the Kubernetes cluster to test whether the cluster works properly.

# Deploy nginx

[root@master ~]# kubectl create deployment nginx --image=nginx:1.14-alpine

# Expose the port

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

# View the service status

[root@master ~]# kubectl get pods,service

NAME                         READY   STATUS    RESTARTS   AGE

pod/nginx-86c57db685-fdc2k   1/1     Running   0          18m

NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE

service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        82m

service/nginx        NodePort    10.104.121.45   <none>        80:30073/TCP   17m

# Finally, access the deployed nginx service from your computer
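The address to open follows from the PORT(S) column: the number after the colon is the NodePort, which is reachable on the IP of any node. A small sketch, assuming the `port:nodePort/protocol` layout shown in the output above; `node_port_of` is a hypothetical helper, and the port value 30073 is the one from this example run and will differ on other clusters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the NodePort from a PORT(S) value
# such as "80:30073/TCP".
node_port_of() {
  local ports="$1"
  ports=${ports#*:}       # drop the service port and the colon
  echo "${ports%%/*}"     # drop the protocol suffix
}

port=$(node_port_of "80:30073/TCP")
# 192.168.109.100 is the master from the host plan; any node IP works.
echo "http://192.168.109.100:${port}"
```

Opening the printed URL in a browser, or fetching it with `curl`, should return the nginx welcome page if the cluster is working.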
