1, Introduction to gesture recognition (with course assignment report)

1 System design scheme

In recent years, gesture recognition has attracted attention from researchers and companies worldwide as a new means of human-computer interaction, and it has been widely used in smart TVs, gaming and entertainment devices, robots, and other products. Research on gesture recognition can also advance the visual perception ability of machines and carry over to other areas of artificial intelligence, helping machines better understand human intentions and bringing benefits to daily life and work. This paper implements a gesture recognition system that recognizes five kinds of gestures and can complete basic human-computer interaction tasks.

Gestures can be divided into two types: static gestures, in which the hand holds a fixed pose with no additional motion, and dynamic gestures, in which the hand moves and undergoes complex changes (such as winding and combined movements). The former emphasizes shape and state; the latter emphasizes change and trajectory. This paper mainly studies static gestures. The system processing flow is shown in Figure 1.

Figure 1 System structure flow chart

2 Objectives and acceptance criteria for the system

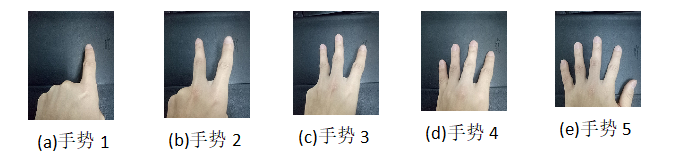

The system can recognize five predefined static gestures, as shown in Figure 2.

Figure 2 Five predefined static gestures

Given any of the above gestures as input, the system should quickly and accurately determine which gesture it is and print the corresponding information on the console. For example, for input picture (a) the system prints gesture 1 on the console; for input picture (b) it prints gesture 2; and so on.

3 System implementation block diagram and flow chart

3.1 Gesture segmentation

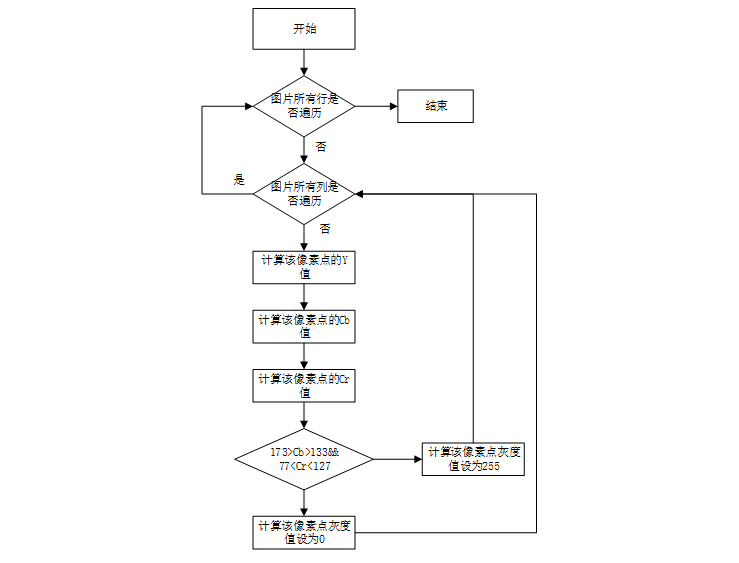

In this paper, gesture segmentation is carried out in the YCbCr color space. The key step is to build a skin color model: skin pixels cluster in YCbCr space, so a threshold range is set and the image is scanned pixel by pixel. Pixels inside the range are treated as part of the gesture, and pixels outside it are discarded. Based on the literature and on experiments, the distribution of hand color on the two-dimensional (Cb, Cr) subspace of YCbCr satisfies Cb ∈ [133, 173] and Cr ∈ [77, 127]. A pixel that meets this condition is classified as gesture, which keeps the method simple. The gesture segmentation flow chart is shown in Figure 3.

Figure 3 Flow chart of gesture segmentation
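The thresholding step described above can be sketched in vectorized form. This is a minimal sketch, not the listing from this post: it assumes the 8-bit integer approximation of the RGB to YCbCr conversion that the partial source code uses, and the two test pixels and the names `rgb`, `in_range`, `mask_text` and `mask_swapped` are illustrative. Widely cited skin color thresholds place Cr in 133 to 173 and Cb in 77 to 127, the reverse of the assignment quoted above, so the ranges are kept as variables and both assignments are evaluated.

```matlab
% Sketch: skin-color thresholding in the (Cb, Cr) plane (illustrative names).
rgb = zeros(1, 2, 3, 'single');
rgb(1, 1, :) = [100 120 180];   % illustrative bluish pixel
rgb(1, 2, :) = [224 172 105];   % illustrative light skin-like tone
R = rgb(:, :, 1);  G = rgb(:, :, 2);  B = rgb(:, :, 3);

% 8-bit integer approximation of RGB -> YCbCr (coefficients scaled by 256)
Cb = floor((-43*R -  84*G + 128*B + 128*256) / 256);
Cr = floor((128*R - 107*G -  20*B + 128*256) / 256);

% Ranges as quoted in the text; kept as variables so they can be swapped.
cb_range = [133 173];
cr_range = [77 127];
in_range = @(x, lim) x > lim(1) & x < lim(2);   % strict bounds, as in the listing

mask_text    = in_range(Cb, cb_range) & in_range(Cr, cr_range);  % assignment from the text
mask_swapped = in_range(Cb, cr_range) & in_range(Cr, cb_range);  % ranges exchanged

area = nnz(mask_text);          % gesture area = number of pixels inside the range
disp([Cb; Cr]);
disp([mask_text; mask_swapped]);
```

For these two pixels, (Cb, Cr) evaluates to (161, 113) and (86, 159). The first pixel passes only with the ranges as quoted in the text, and the second passes only with the ranges exchanged, so it is worth checking on real hand images which assignment selects skin.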

2, Partial source code

close all;clear all;

clc;

%----------------------------------------------
%Median-filter the image (optional, via median_filter),
%segment the gesture by skin color in YCbCr, and detect edges
%----------------------------------------------
area = int32(0);      %gesture area (pixel count)
perimeter = int32(0); %gesture perimeter

%Read in the image
[filename, pathname] = uigetfile({ '*.bmp';'*.jpg'; '*.gif'}, 'Select Picture');
RGB_data = imread(fullfile(pathname, filename));
[ROW, COL, DIM] = size(RGB_data); %rows, columns, and color channels of the image
R_data = single(RGB_data(:,:,1));
G_data = single(RGB_data(:,:,2));
B_data = single(RGB_data(:,:,3));

% %Optional median filtering (currently disabled)
% medfil_result_R = median_filter(R_data, 3);
% medfil_result_G = median_filter(G_data, 3);
% medfil_result_B = median_filter(B_data, 3);

%Build the skin color model: convert RGB to YCbCr
Y_data = int32(zeros(ROW,COL));
Cb_data = int32(zeros(ROW,COL));
Cr_data = int32(zeros(ROW,COL));

for r = 1:ROW
    for c = 1:COL
        %Floating-point version on the median-filtered channels (disabled)
        % Y_data(r, c) = 0.299*medfil_result_R(r, c) + 0.587*medfil_result_G(r, c) + 0.114*medfil_result_B(r, c);
        % Cb_data(r, c) = -0.1687*medfil_result_R(r, c) - 0.3313*medfil_result_G(r, c) + 0.5*medfil_result_B(r, c) + 128;
        % Cr_data(r, c) = 0.5*medfil_result_R(r, c) - 0.4187*medfil_result_G(r, c) - 0.0813*medfil_result_B(r, c) + 128;

        %Coefficients quantized to 8 bits (scaled by 256); int32() rounds to nearest (disabled)
        % Y_data(r, c) = int32((76*R_data(r, c) + 150*G_data(r, c) + 29*B_data(r, c))/256);
        % Cb_data(r, c) = int32((-43*R_data(r, c) - 84*G_data(r, c) + 128*B_data(r, c) + 128*256)/256);
        % Cr_data(r, c) = int32((128*R_data(r, c) - 107*G_data(r, c) - 20*B_data(r, c) + 128*256)/256);

        %Active version: quantized coefficients, rounded down with floor()
        Y_data(r, c) = floor( (76*R_data(r, c) + 150*G_data(r, c) + 29*B_data(r, c))/256 );
        Cb_data(r, c) = floor( (-43*R_data(r, c) - 84*G_data(r, c) + 128*B_data(r, c) + 128*256)/256 );
        Cr_data(r, c) = floor( (128*R_data(r, c) - 107*G_data(r, c) - 20*B_data(r, c) + 128*256)/256 );
    end
end

%Binarize the image and count the gesture area (gesture = 0, background = 255)
Gray_data = int32(zeros(ROW,COL));
for r = 1:ROW
    for c = 1:COL
        if Cb_data(r, c)>133 && Cb_data(r, c)<173 && Cr_data(r, c)>77 && Cr_data(r, c)<127
            Gray_data(r,c) = 0 ;
            area = area + 1 ;
        else
            Gray_data(r,c) = 255 ;
        end
    end
end

%Edge detection
%edge_data=edge(Gray_data,'sobel') ;
edge_data = Sobel_Image(Gray_data) ;

% %Count the perimeter as the number of edge pixels (disabled)
% for r = 1:ROW
%     for c = 1:COL
%         if edge_data(r, c)==1
%             perimeter = perimeter + 1 ;
%         end
%     end
% end

[H1,H2,H3,perimeter] = OriginMoment(edge_data) ;
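The helpers `Sobel_Image` and `OriginMoment` called above are not included in this partial listing. As a rough illustration of the edge detection and perimeter counting steps only, the following sketch applies a standard 3*3 Sobel operator with `conv2` to a toy binary image and counts the edge pixels, in the spirit of the disabled perimeter loop. It is an assumption about what such a step might look like, not the author's `Sobel_Image`; the threshold `thresh` and all variable names are hypothetical.

```matlab
% Sketch only: Sobel_Image is not listed in this post, so this is NOT the author's code.
% Toy binary image in the same convention as Gray_data: gesture = 0, background = 255.
Gray = 255 * ones(7, 7);
Gray(3:5, 3:5) = 0;                   % a 3x3 "gesture" blob

Kx = [-1 0 1; -2 0 2; -1 0 1];        % Sobel kernel for horizontal intensity changes
Ky = Kx';                             % Sobel kernel for vertical intensity changes

% 'valid' keeps only positions where the full 3x3 window fits,
% which avoids false edges from zero padding at the image border.
Gx = conv2(Gray, Kx, 'valid');
Gy = conv2(Gray, Ky, 'valid');
mag = abs(Gx) + abs(Gy);              % cheap approximation of the gradient magnitude

thresh = 128;                         % hypothetical threshold
edge_map = mag > thresh;              % logical edge image (5x5 for a 7x7 input)

perimeter = nnz(edge_map);            % perimeter estimate = number of edge pixels
disp(edge_map);
```

Because a 3*3 window responds on both sides of a boundary, this estimate counts a band of pixels around the blob rather than a one-pixel contour, so it is only a relative measure of perimeter.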

%File median_filter.m: in MATLAB 2014a, functions cannot be defined inside a script file
function [ img ] = median_filter( image, m )
%----------------------------------------------
%Median filtering
%Input:
%image: original image (single channel)
%m: template size; m = 3 for a 3*3 template (m must be odd)
%Output:
%img: image after median filtering (int32); border pixels are left unchanged
%----------------------------------------------
n = m;
[ height, width ] = size(image);
x1 = int32(image);
x2 = x1;
for i = 1:height-n+1
    for j = 1:width-n+1
        mb = x1( i:(i+n-1), j:(j+n-1) ); %n*n window with top-left corner (i, j)
        mb = mb(:);
        mm = median(mb);
        x2( i+(n-1)/2, j+(n-1)/2 ) = mm; %write the median to the window center
    end
end
img = x2;
end
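To make the effect of the filter concrete, the following self-contained sketch repeats the same sliding-window idea on a tiny image with one impulse noise pixel. It is an illustration under assumed values (a 5*5 image of constant intensity 10 with one pixel set to 255), and the loop is indexed by window center rather than by top-left corner, so it is a variant of `median_filter` above rather than a copy.

```matlab
% Sketch: what a 3x3 median filter does to a single impulse ("salt") pixel.
img = 10 * ones(5, 5);
img(3, 3) = 255;                      % one noisy pixel

n = 3;                                % window size (must be odd)
half = (n - 1) / 2;
out = img;                            % border pixels are left unchanged

for i = 1 + half : size(img, 1) - half
    for j = 1 + half : size(img, 2) - half
        window = img(i-half:i+half, j-half:j+half);  % n x n window centered at (i, j)
        out(i, j) = median(window(:));               % replace the center by the median
    end
end
disp(out);
```

Every window contains at most one outlier among nine values, so each median is 10 and the impulse is removed, while the untouched border keeps its original values.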

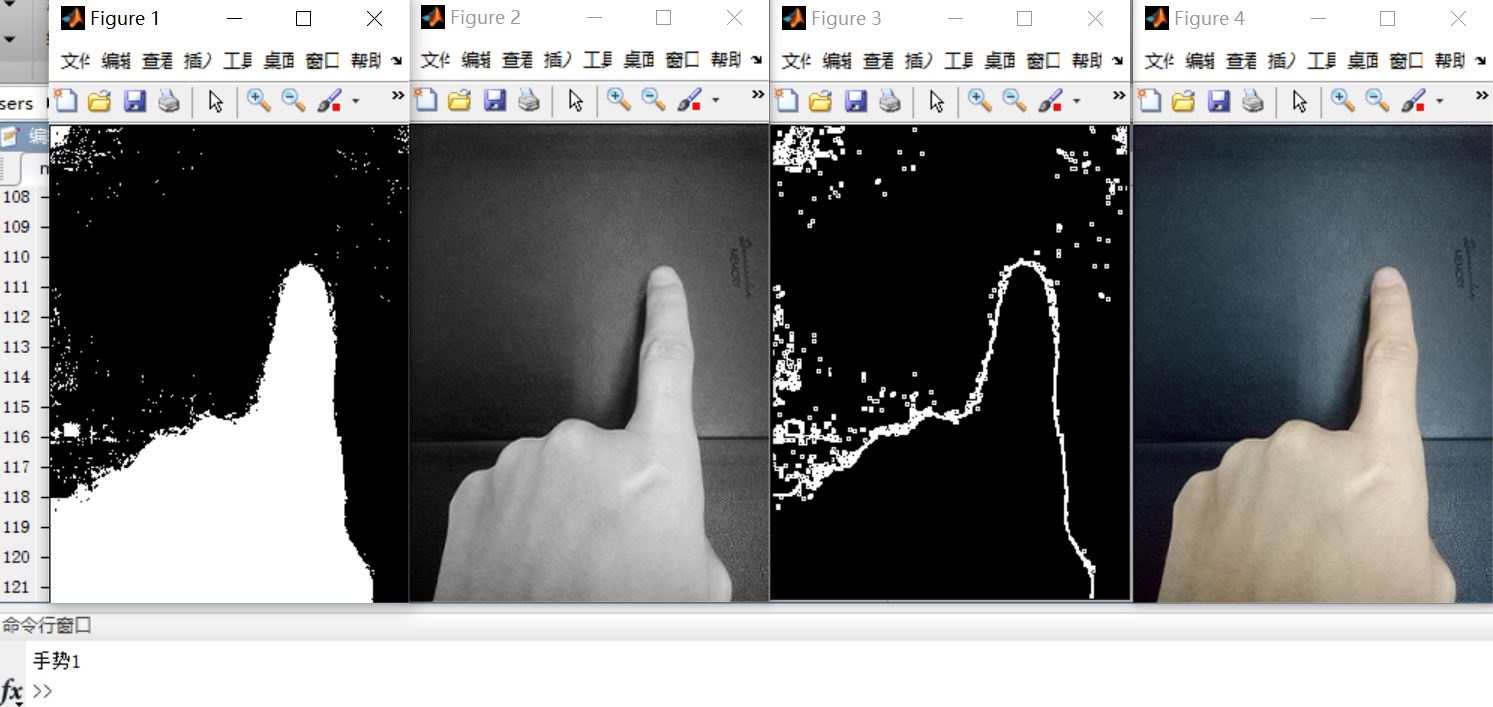

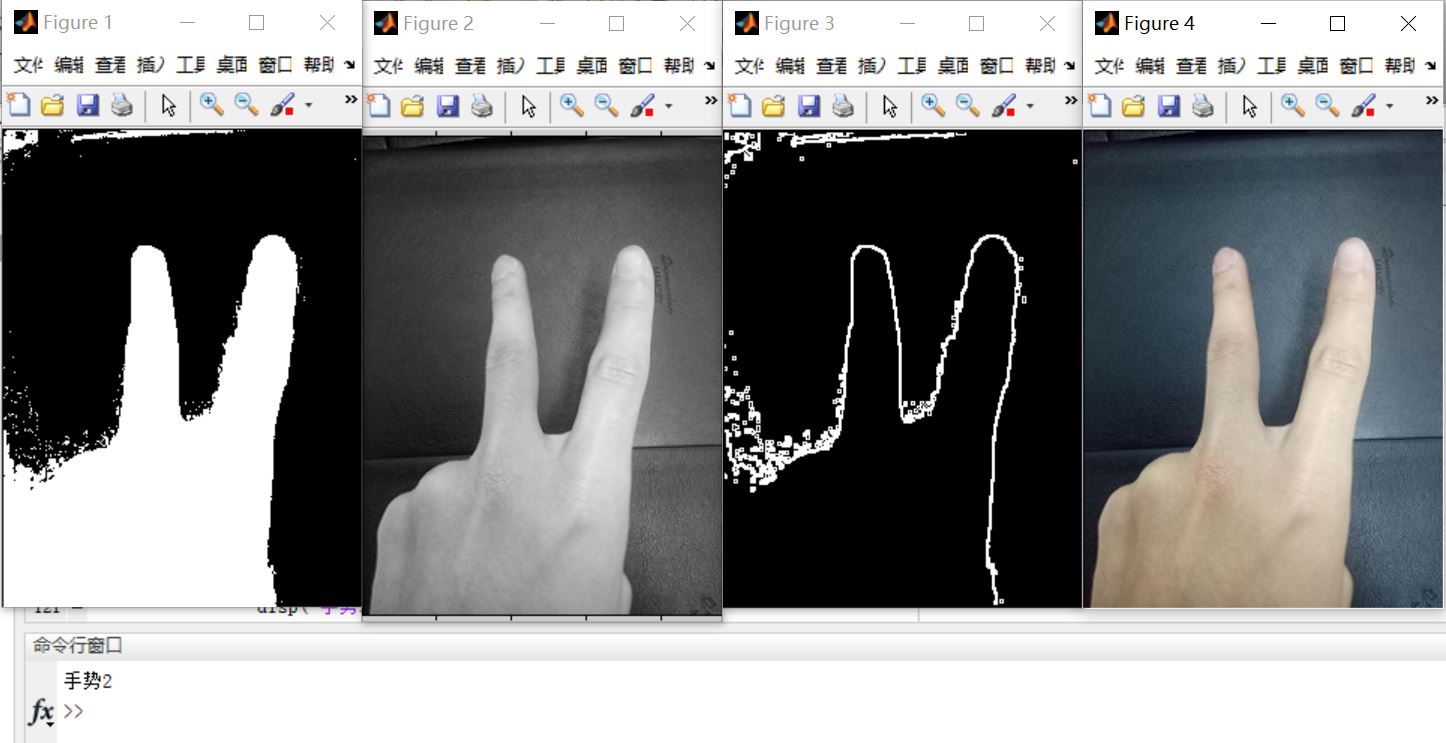

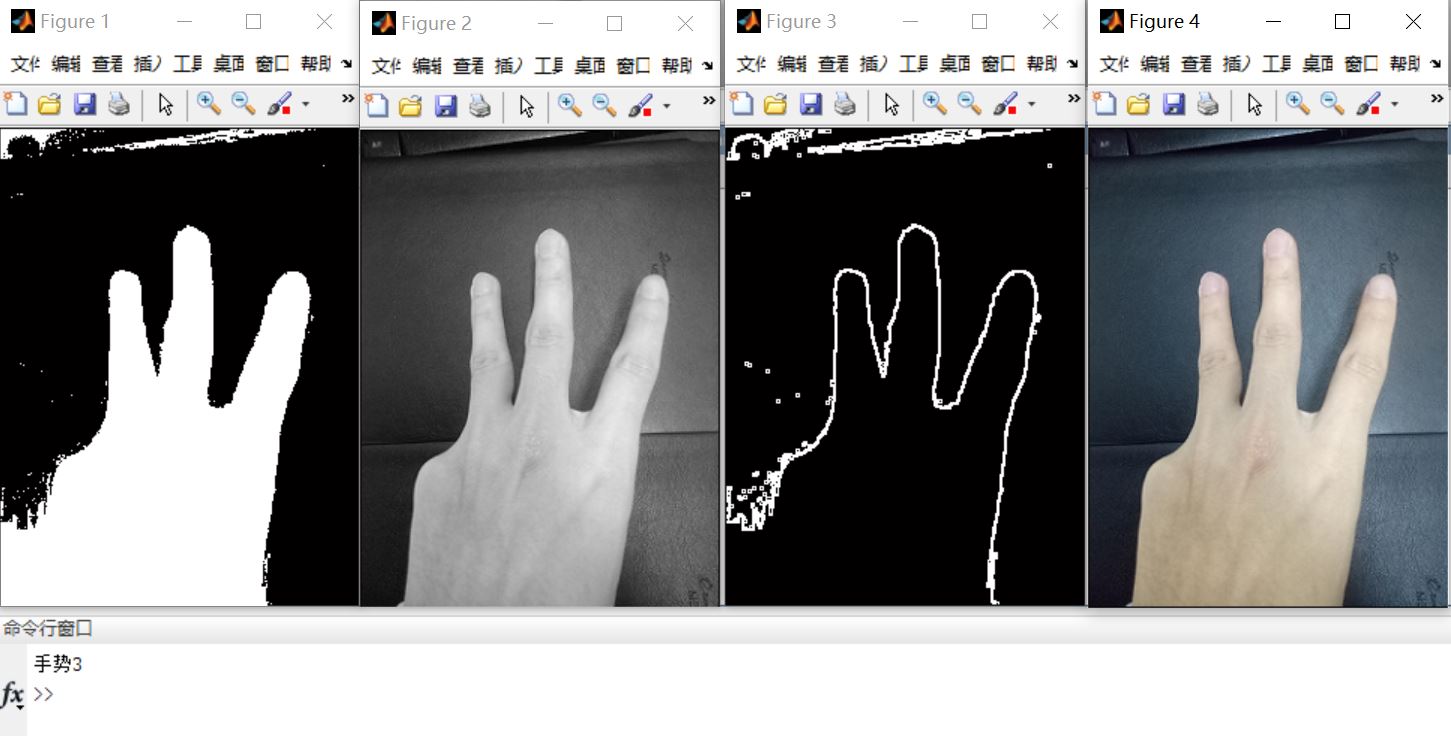

3, Operation results

4, MATLAB version

MATLAB 2014a