1, Experimental purpose

Build a multi-layer neural network model to recognize MNIST handwritten digits, and train it with different hyperparameters so that the model's final recognition accuracy exceeds 95%.

2, Algorithm steps

1. Parsing and loading data

(1) Decompress the downloaded MNIST dataset files (`*ubyte.gz`) in the working folder and read them.
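The complete code decompresses each archive by reading it fully into memory with `decompressed.read()`. A minimal stdlib sketch of the same step, assuming the archives sit in one folder, can stream the data with `shutil.copyfileobj` instead, so a whole file never has to be held in memory at once. The helper name `decompress_all` is hypothetical.

```python
import gzip
import os
import shutil


def decompress_all(folder="."):
    """Decompress every *ubyte.gz file in `folder` next to the archive.

    Hypothetical helper: same effect as the decompression loop in the
    complete code, but the data is copied in chunks.
    """
    written = []
    for name in os.listdir(folder):
        if name.endswith("ubyte.gz"):
            src = os.path.join(folder, name)
            dst = src[:-3]  # strip the ".gz" suffix
            # Always binary mode: the IDX files are raw bytes
            with gzip.open(src, "rb") as fin, open(dst, "wb") as fout:
                shutil.copyfileobj(fin, fout)
            written.append(dst)
    return sorted(written)
```

Calling `decompress_all('.')` in the download folder produces `train-images-idx3-ubyte` and the other three uncompressed files.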

(2) Call the `load_mnist` function to load the 60,000 training samples and 10,000 test samples.
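`load_mnist` relies on the IDX file layout: a big-endian header (read with `struct.unpack('>II', ...)` for labels and `'>IIII'` for images) followed by raw unsigned bytes. The stdlib sketch below parses that layout from an in-memory byte string so it can be tried without the real files. The magic-number checks (2049 for label files, 2051 for image files) come from the published MNIST format description rather than the original code, and the function names are hypothetical.

```python
import struct


def parse_idx_labels(data):
    """Parse an IDX1 label file held in memory (bytes) into a list of ints."""
    magic, n = struct.unpack(">II", data[:8])  # big-endian: magic number, item count
    if magic != 2049:
        raise ValueError("not an IDX1 label file: magic=%d" % magic)
    return list(data[8:8 + n])  # one unsigned byte per label


def parse_idx_images(data):
    """Parse an IDX3 image file held in memory.

    Returns (images, rows, cols), where each image is one flat row vector.
    """
    magic, n, rows, cols = struct.unpack(">IIII", data[:16])
    if magic != 2051:
        raise ValueError("not an IDX3 image file: magic=%d" % magic)
    size = rows * cols
    pixels = data[16:16 + n * size]
    # Each image becomes one row vector of rows*cols unsigned bytes
    images = [list(pixels[i * size:(i + 1) * size]) for i in range(n)]
    return images, rows, cols
```

For the real files, `rows` and `cols` are both 28, so each row vector has 784 entries.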

The `load_mnist` function returns two arrays. The first, `images`, is an n × m NumPy array, where n is the number of samples and m is the number of features (pixels). Each image in the dataset consists of 28 × 28 pixels, which are unrolled row by row into a one-dimensional vector of 784 values, so each row of the `images` array is one image. The pixel values are also rescaled from [0, 255] to [-1, 1]. The second array, `labels`, contains the corresponding target variable: the class label (0 to 9) of each handwritten digit.
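To make the row-vector layout concrete, here is a pure-Python sketch (helper names hypothetical) of how a 28 × 28 image is unrolled into 784 values, where pixel (r, c) lands at index `r * 28 + c`, and how the expression `((images / 255.) - .5) * 2` used in `load_mnist` maps raw values in [0, 255] to [-1, 1].

```python
def flatten(image):
    """Unroll a 2-D image (list of rows) into one row vector, row by row."""
    return [pixel for row in image for pixel in row]


def unflatten(vector, cols=28):
    """Inverse of flatten: cut the row vector back into rows of `cols` pixels."""
    return [vector[i:i + cols] for i in range(0, len(vector), cols)]


def rescale(vector):
    """Map raw pixel values in [0, 255] to [-1, 1], like load_mnist does."""
    return [((p / 255.0) - 0.5) * 2 for p in vector]
```

In the NumPy code, `reshape(len(labels), 784)` performs `flatten` for all images at once and `.reshape(28, 28)` performs `unflatten` for one image.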

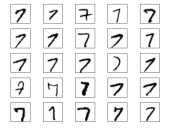

(3) Reshape each 784-pixel feature vector back into the original 28 × 28 image and display it with Matplotlib's `imshow` function. The digits 0 to 9 are plotted in a grid of 2 rows and 5 columns, and the first 25 variants of the digit 7 in a grid of 5 rows and 5 columns.
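The plotting code picks its samples with NumPy boolean masks: `X_train[y_train == i][0]` takes the first training image of class `i`, and `X_train[y_train == 7][i]` walks through the sevens. A pure-Python sketch of the same index selection (helper names hypothetical) shows which rows end up in each grid.

```python
def first_of_each_class(labels, classes=range(10)):
    """Index of the first sample of every class, like X_train[y_train == i][0].

    Raises KeyError if a class never occurs (NumPy would raise IndexError).
    """
    firsts = {}
    for idx, lab in enumerate(labels):
        if lab not in firsts:
            firsts[lab] = idx
    return [firsts[c] for c in classes]


def first_k_of_class(labels, target, k=25):
    """Indices of the first k samples labelled `target`, like X_train[y_train == 7][:25]."""
    return [idx for idx, lab in enumerate(labels) if lab == target][:k]
```

Note that the original loop recomputes the mask `y_train == 7` on every iteration; computing it once before the loop gives the same images.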

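(4) Save the scaled training and test sets to a compressed `.npz` file, then read them back into the variables `X_train`, `y_train`, `X_test` and `y_test` through the `mnist` object returned by `np.load`.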
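`np.savez_compressed` stores several named arrays in one file, and `np.load` returns a dictionary-like `NpzFile` whose `.files` attribute lists the stored names. The sketch below assumes tiny placeholder arrays instead of the real MNIST data and an in-memory buffer instead of `mnist_scaled.npz`, to show the round trip and that the dtypes survive. The `with` block closes the archive, which plays the role of `del mnist` in the original.

```python
import io

import numpy as np

# Placeholder arrays (hypothetical data) with the same dtypes as the real ones:
# float64 scaled pixels and uint8 labels.
X_train = np.zeros((3, 784))
y_train = np.array([5, 0, 4], dtype=np.uint8)

buffer = io.BytesIO()  # in-memory file; a filename such as 'mnist_scaled.npz' works the same way
np.savez_compressed(buffer, X_train=X_train, y_train=y_train)
buffer.seek(0)  # rewind before reading

with np.load(buffer) as mnist:
    print(sorted(mnist.files))  # ['X_train', 'y_train']
    # Indexing reads each array fully into memory, so they stay valid after close
    X_loaded, y_loaded = [mnist[f] for f in ["X_train", "y_train"]]
```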

2. Build the artificial neural network model and process the data:

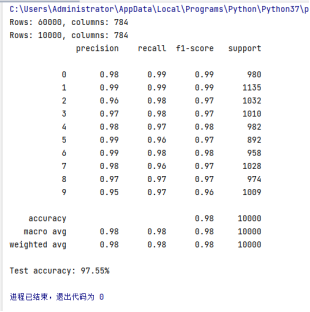

(1) Construct an artificial neural network classifier with two hidden layers of 100 and 50 neurons, respectively. Train the model on the training set, then predict the labels of the test samples and analyze the results.

```python
mlp = MLPClassifier(hidden_layer_sizes=(100, 50), max_iter=200)
mlp.fit(X_train, y_train)  # training
predictions = mlp.predict(X_test)  # test
```
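To see what `hidden_layer_sizes=(100, 50)` means in terms of weights, here is a NumPy sketch of one forward pass through a 784-100-50-10 network. It assumes scikit-learn's defaults for `MLPClassifier` (ReLU hidden activations and a softmax output for multi-class targets) and uses random placeholder weights; it shows the shape of the computation at prediction time, not how scikit-learn trains or initializes the model.

```python
import numpy as np

rng = np.random.default_rng(0)
layer_sizes = [784, 100, 50, 10]  # input, two hidden layers, 10 digit classes

# One weight matrix and bias vector per pair of consecutive layers
# (random placeholders; training would learn these values).
weights = [rng.normal(0, 0.01, (n_in, n_out))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]


def forward(X):
    """Return class probabilities for a batch X of shape (n_samples, 784)."""
    a = X
    for W, b in zip(weights[:-1], biases[:-1]):
        a = np.maximum(0, a @ W + b)  # ReLU hidden layers
    z = a @ weights[-1] + biases[-1]
    z -= z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    p = np.exp(z)
    return p / p.sum(axis=1, keepdims=True)  # softmax over the 10 classes


n_params = sum(W.size + b.size for W, b in zip(weights, biases))
print(n_params)  # 784*100+100 + 100*50+50 + 50*10+10 = 84060
```

The predicted digit is `np.argmax(forward(X), axis=1)`. After `mlp.fit`, scikit-learn exposes the learned arrays as `mlp.coefs_` and `mlp.intercepts_`, with the same shapes as `weights` and `biases` here.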

(2) Data analysis. Print the precision, recall, F1-score and number of samples (support) for each output class, then print the overall recognition accuracy on the test set.
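For each class, `classification_report` reports the precision (correct predictions of that class divided by all predictions of that class), the recall (correct predictions divided by the true samples of that class), their harmonic mean F1, and the support (number of true samples). The overall accuracy is the number of correct predictions divided by the number of test samples. A hand-rolled stdlib sketch of these definitions (function name hypothetical) makes the arithmetic explicit.

```python
from collections import Counter


def per_class_report(y_true, y_pred):
    """Per-class (precision, recall, F1, support) plus overall accuracy.

    Hand-rolled sketch of the numbers classification_report prints;
    undefined ratios (no predictions or no true samples) are set to 0.0.
    """
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct per class
    pred_count = Counter(y_pred)
    support = Counter(y_true)
    report = {}
    for c in sorted(set(y_true) | set(y_pred)):
        precision = tp[c] / pred_count[c] if pred_count[c] else 0.0
        recall = tp[c] / support[c] if support[c] else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[c] = (precision, recall, f1, support[c])
    accuracy = sum(tp.values()) / len(y_true)
    return report, accuracy
```

For example, with `y_true = [0, 0, 1, 1, 2, 2]` and `y_pred = [0, 1, 1, 1, 2, 0]`, class 1 gets precision 2/3, recall 1.0 and F1 0.8, and the overall accuracy is 4/6.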

3, Complete code

```python
# coding: utf-8
import gzip
import os
import struct

import numpy as np
import matplotlib.pyplot as plt
from sklearn.neural_network import MLPClassifier
from sklearn.metrics import classification_report

# Decompress the downloaded *ubyte.gz files in the current folder.
# Binary mode ('wb') is correct on both Python 2 and Python 3.
zipped_mnist = [f for f in os.listdir('./') if f.endswith('ubyte.gz')]
for z in zipped_mnist:
    with gzip.GzipFile(z, mode='rb') as decompressed, open(z[:-3], 'wb') as outfile:
        outfile.write(decompressed.read())


def load_mnist(path, kind='train'):
    """Load MNIST data from `path`"""
    labels_path = os.path.join(path, '%s-labels-idx1-ubyte' % kind)
    images_path = os.path.join(path, '%s-images-idx3-ubyte' % kind)

    with open(labels_path, 'rb') as lbpath:
        # Header: magic number and number of items (big-endian unsigned ints)
        magic, n = struct.unpack('>II', lbpath.read(8))
        labels = np.fromfile(lbpath, dtype=np.uint8)

    with open(images_path, 'rb') as imgpath:
        # Header: magic number, number of images, rows, columns
        magic, num, rows, cols = struct.unpack('>IIII', imgpath.read(16))
        images = np.fromfile(imgpath, dtype=np.uint8).reshape(len(labels), 784)
        # Scale pixel values from [0, 255] to [-1, 1]
        images = ((images / 255.) - .5) * 2

    return images, labels


X_train, y_train = load_mnist('', kind='train')
print('Rows: %d, columns: %d' % (X_train.shape[0], X_train.shape[1]))
X_test, y_test = load_mnist('', kind='t10k')
print('Rows: %d, columns: %d' % (X_test.shape[0], X_test.shape[1]))

# Visualize the first digit of each class:
fig, ax = plt.subplots(nrows=2, ncols=5, sharex=True, sharey=True)
ax = ax.flatten()
for i in range(10):
    img = X_train[y_train == i][0].reshape(28, 28)
    ax[i].imshow(img, cmap='Greys')
ax[0].set_xticks([])
ax[0].set_yticks([])
plt.tight_layout()
# plt.savefig('images/12_5.png', dpi=300)
plt.show()

# Visualize 25 different versions of "7":
fig, ax = plt.subplots(nrows=5, ncols=5, sharex=True, sharey=True)
ax = ax.flatten()
for i in range(25):
    img = X_train[y_train == 7][i].reshape(28, 28)
    ax[i].imshow(img, cmap='Greys')
ax[0].set_xticks([])
ax[0].set_yticks([])
plt.tight_layout()
# plt.savefig('images/12_6.png', dpi=300)
plt.show()

# Cache the scaled arrays and load them back
np.savez_compressed('mnist_scaled.npz',
                    X_train=X_train, y_train=y_train,
                    X_test=X_test, y_test=y_test)
mnist = np.load('mnist_scaled.npz')
print(mnist.files)
X_train, y_train, X_test, y_test = [mnist[f] for f in ['X_train', 'y_train',
                                                        'X_test', 'y_test']]
del mnist
print(X_train.shape)

# Two hidden layers with 100 and 50 neurons
mlp = MLPClassifier(hidden_layer_sizes=(100, 50), max_iter=200)
mlp.fit(X_train, y_train)  # training
y_test_pred = mlp.predict(X_test)  # test (predict once, reuse below)
print(classification_report(y_test, y_test_pred))

# Overall accuracy; np.float was removed in NumPy 1.24, so use np.float64
acc = (np.sum(y_test == y_test_pred).astype(np.float64) / X_test.shape[0])
print('Test accuracy: %.2f%%' % (acc * 100))

# First 25 misclassified test images: t = true label, p = predicted label
miscl_img = X_test[y_test != y_test_pred][:25]
correct_lab = y_test[y_test != y_test_pred][:25]
miscl_lab = y_test_pred[y_test != y_test_pred][:25]

fig, ax = plt.subplots(nrows=5, ncols=5, sharex=True, sharey=True)
ax = ax.flatten()
for i in range(25):
    img = miscl_img[i].reshape(28, 28)
    ax[i].imshow(img, cmap='Greys', interpolation='nearest')
    ax[i].set_title('%d) t: %d p: %d' % (i + 1, correct_lab[i], miscl_lab[i]))
ax[0].set_xticks([])
ax[0].set_yticks([])
plt.tight_layout()
plt.show()
```

4, Experimental results

The four pictures show: the first sample of each of the 10 output classes; the first 25 variants of the digit 7; the first 25 misclassified test images, each titled with its true (t) and predicted (p) label; and the experimental results and data analysis.
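The third picture is built from the test samples whose prediction differs from the true label (`X_test[y_test != y_test_pred][:25]`). A pure-Python sketch of that selection and of the panel caption format passed to `set_title` (helper names hypothetical):

```python
def first_misclassified(y_true, y_pred, k=25):
    """Return (index, true label, predicted label) for the first k errors,
    mirroring X_test[y_test != y_test_pred][:25]."""
    errors = [(i, t, p) for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]
    return errors[:k]


def panel_title(n, true_label, pred_label):
    """Caption in the plotting code's format: '<n>) t: <true> p: <predicted>'."""
    return '%d) t: %d p: %d' % (n, true_label, pred_label)
```

If the model makes fewer than 25 errors, the list is shorter, and the plotting loop over `range(25)` would need to iterate over `len(miscl_img)` instead.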