I. Introduction

Our company's front-end group holds a monthly meeting to sync up on infrastructure work and other important news. The last item on the agenda is a sharing session where front-end developers present strange bugs or interesting problems they ran into during the month. In this month's session I came across an interesting performance problem caused by the caching library memoizee. A library meant to improve performance turned out to cause a performance defect of its own, which is a classic irony. Without further ado, let's begin.

II. Use memoizee to improve performance

Let's set aside caching hooks such as useMemo, which we commonly use now that we are on React 17. Back on React 16, when a function involved a lot of computation, many developers would think of caching its results with a memoizing function to improve performance. Rather than reinventing the wheel, the existing caching library memoizee was a natural recommendation.

Performance optimizations generally come down to trading space for time or time for space. Function caching is a typical case of trading space for time: take the received arguments as the key and the computed result as the value, and store the key-value pair in an object. The next time the function is called with the same arguments, the result can be read straight from the object, avoiding the repeated expensive computation.

The simplest function cache example:

// Used to cache the calculation results of each key

const res = {};

const memoize = (num)=>{

// If this value has been calculated before, return it directly

if(res[num]!==undefined){

return res[num];

};

// New parameter? Recalculate and cache

const square = num*num;

res[num] = square;

return square;

};

console.log(memoize(2));// 4

console.log(memoize(3));// 9

// This call is served from the cache

console.log(memoize(2));// 4

Although the example above does cache, it can only cache the square of a number, so its purpose is very narrow. If I now need addition or division, would we have to write a separate caching function for each? What we really want is a wrapper: pass any function in, get back a cached version of it, and calls with the same arguments are served from the cache. That is the role of the third-party library memoizee.

Since the focus of this article is the performance problem caused by memoizee, only the basic usage is shown below. For more, please refer to its documentation:
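A minimal sketch of such a wrapper is shown below. It is not how memoizee is implemented; the name memoizeWith is made up for illustration, and the sketch assumes every argument can be serialized with JSON.stringify to build the cache key.

```javascript
// Hypothetical helper, not part of memoizee: wraps any function with a cache.
// Assumption: the arguments are JSON-serializable, so their string form can serve as the key.
const memoizeWith = (fn) => {
  const cache = new Map();
  return (...args) => {
    const key = JSON.stringify(args);
    if (cache.has(key)) {
      return cache.get(key); // same arguments as before: reuse the stored result
    }
    const result = fn(...args); // new arguments: compute once and remember
    cache.set(key, result);
    return result;
  };
};

let calls = 0;
const add = memoizeWith((a, b) => {
  calls += 1;
  return a + b;
});

console.log(add(1, 2)); // 3
console.log(add(1, 2)); // 3, served from the cache
console.log(calls); // 1
```

The same wrapper works for any other pure function, for example memoizeWith((a, b) => a / b), without writing a new cache each time.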

import memoize from 'memoizee'

const o1 = {a:1,b:2};

const o2 = o1;

const o3 = {a:1,b:2};

const fn = function (obj) {

console.log(1);

return obj.a + obj.b;

};

// Pass fn in and get back a cached version of fn

const memoizeFn = memoize(fn);

memoizeFn(o1);

// o2 and o1 are the same object, so this call hits the cache

memoizeFn(o2);

// o3 is a new object, so this call misses the cache and runs fn again

memoizeFn(o3);

Another advantage of memoizee is that the arguments of our function do not have to be primitives such as numbers or strings; sometimes they are objects. In the example above, we used memoizee to produce memoizeFn, a cached version of fn that receives an object. The console.log inside fn tells us whether the cache was hit, and the result is clear: it runs only twice, triggered by o1 and o3. So one benefit of using a third-party library is that it handles many edge cases for you.
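Why o2 hits the cache while o3 does not comes down to reference equality: the lookup compares objects by identity, not by content. The sketch below reproduces the same behaviour with a plain Map rather than memoizee itself; memoizeByRef is a made-up name used only for illustration.

```javascript
// Hypothetical single-argument wrapper keyed by reference (not memoizee's code).
const memoizeByRef = (fn) => {
  const cache = new Map(); // Map compares object keys by identity
  return (obj) => {
    if (!cache.has(obj)) {
      cache.set(obj, fn(obj));
    }
    return cache.get(obj);
  };
};

const o1 = { a: 1, b: 2 };
const o2 = o1; // same reference as o1
const o3 = { a: 1, b: 2 }; // same content, different reference

let runs = 0;
const sum = memoizeByRef((obj) => {
  runs += 1;
  return obj.a + obj.b;
});

sum(o1); // computed
sum(o2); // cache hit: o2 === o1
sum(o3); // computed again: o3 !== o1

console.log(o1 === o2, o1 === o3); // true false
console.log(runs); // 2
```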

III. Performance problems caused by memoizee

As noted earlier, whether we use memoizee or a hand-written caching function, the performance gain comes from trading space for time. Reading a cached result is fast, but as the cache grows to 1,000, 10,000, or hundreds of thousands of entries, does memoizee still deliver the performance we expect? A simple example may overturn your assumptions:

const fn = function (a) {

return a * a;

};

// Use cache

console.time('Use cache');

const memoizeFn = memoize(fn);

for (let i = 0; i < 100000; i++) {

memoizeFn(i);

}

memoizeFn(90000);

console.timeEnd('Use cache');

// Do not use cache

console.time('Do not use cache');

for (let i = 0; i < 100000; i++) {

// Simply execute without caching anything

fn(i);

}

fn(90000);

console.timeEnd('Do not use cache');

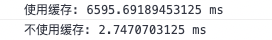

The code above has two parts. The first wraps fn with memoize, simulates 100,000 calls, and then calls memoizeFn(90000) once more to hit the cache. The second calls fn directly and computes the result every time, with no caching. Surprisingly, the cached version took about 6.5 s while the uncached version took only 2.74 ms, which makes the uncached version roughly 2,400 times faster!

You probably have doubts at this point, so let's make a more interesting comparison. Earlier in the article we wrote a crude caching function. Let's adapt it slightly as follows:

console.time('Use custom cache');

const res = {};

const memoizeFn_ = (num)=>{

// If this value has been calculated before, return it directly

if(res[num]!==undefined){

return res[num];

};

// New parameter? Recalculate and cache

const square = num*num;

res[num] = square;

return square;

};

for (let i = 0; i < 100000; i++) {

// Execute through the custom cache

memoizeFn_(i);

}

memoizeFn_(90000);

console.timeEnd('Use custom cache');

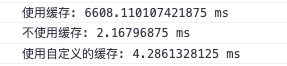

Because the custom cache still stores and looks up entries, it should in principle be a little slower than no caching at all, yet the whole run takes only about 2 ms. We can therefore conclude that something in memoizee's implementation is the culprit, and when in doubt, read the source. We found the following code in memoizee:

module.exports = function () {

var lastId = 0, argsMap = [], cache = [];

return {

get: function (args) {

// Note this code. indexOf is used to query whether this parameter has been executed before

var index = indexOf.call(argsMap, args[0]);

return index === -1 ? null : cache[index];

}

// Some unrelated code omitted

};

};

To cut to the chase: when memoizee caches a function that takes a single argument, its get lookup relies on indexOf. In terms of time complexity, searching an array for an element is O(1) at best and O(N) at worst. We usually quote the worst case, so the lookup is O(N).

Why is the example above so slow, then? Because a cached function looks up and stores as it goes. When the call with argument 1000 arrives, for instance, it must first scan all 1,000 previously cached arguments (0 to 999) to see whether it is already cached. With the 100,000 calls in our example, memoizee runs indexOf 100,000 times, and each call scans a longer array than the last, so the total work grows as O(N²). That is why it takes so long.

The figure below shows that when the argument reaches 58152, the lookup has to scan the more than 50,000 entries already cached. More than 40,000 such lookups still remain, and the cache keeps growing.
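To put a number on it: with N distinct arguments, the call for argument i scans the i entries already stored before it misses, so the total is 0 + 1 + ... + (N - 1) = N(N - 1)/2 comparisons, roughly 5 × 10^9 for N = 100,000. The sketch below counts this on a smaller N. It uses a simplified array-backed cache modelled on the get shown above; linearCache and scannedCount are illustrative names, and the set logic is an assumption rather than memoizee's actual code.

```javascript
// Simplified model of an array-backed cache (an assumption for illustration,
// not memoizee's actual code): keys and values live in parallel arrays.
const linearCache = () => {
  const keys = [];
  const values = [];
  let scanned = 0; // how many stored keys have been compared so far
  return {
    get(key) {
      const index = keys.indexOf(key);
      // indexOf compares index + 1 entries on a hit, and every entry on a miss
      scanned += index === -1 ? keys.length : index + 1;
      return index === -1 ? undefined : values[index];
    },
    set(key, value) {
      keys.push(key);
      values.push(value);
    },
    scannedCount: () => scanned,
  };
};

const N = 1000;
const cache = linearCache();
for (let i = 0; i < N; i++) {
  if (cache.get(i) === undefined) {
    cache.set(i, i * i);
  }
}

console.log(cache.scannedCount()); // 499500, i.e. N * (N - 1) / 2
```

Multiplying N by 100 multiplies the comparison count by about 10,000, which is why a loop that is harmless at 1,000 entries becomes painful at 100,000.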

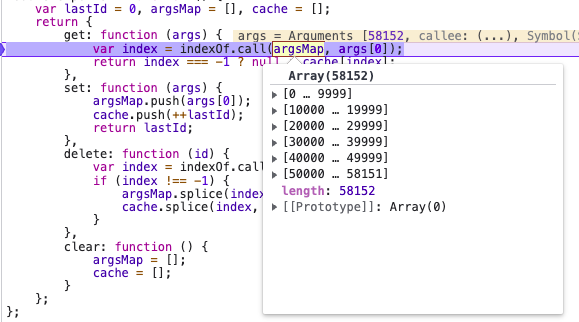

In addition, memoizee's get is implemented differently for one argument and for several arguments, but awkwardly, however many arguments there are, the lookup still relies on indexOf. Below is the code I hit at a breakpoint after switching to multiple parameters:

get: function (args) {

var index = 0, set = map, i;

while (index < length - 1) {

i = indexOf.call(set[0], args[index]);

if (i === -1) return null;

set = set[1][i];

++index;

}

i = indexOf.call(set[0], args[index]);

if (i === -1) return null;

return set[1][i] || null;

},

IV. Solution

At this point some readers may be confused: I use memoizee to improve performance, and now you tell me memoizee causes performance problems. Should I use it or not? And if so, how?

First, the point of a caching function is to avoid repeating particularly expensive logic. Something as cheap as squaring a number, as above, does not need a cache at all; in our test it was thousands of times faster without memoizee.

Second, because memoizee relies on indexOf to query the cache, performance problems are inevitable with large amounts of data. Our colleagues hit this issue because a customer had defined multiple attribute configurations for different types of work items in a project, each configuration supporting N custom work item attributes. The program frequently looks up a work item attribute by its attribute ID, so this lookup ground to a halt.

Remember the comparison of the three snippets above? Our custom caching function is fast because it uses a direct cache[key] lookup. Even with hundreds of thousands of entries in the cache, reading a key from an object is effectively O(1). So, based on the project's needs, the performance optimization team defined a utility function to look up work item attributes by ID:

export const getterCache = (fn) => {

const cache = new Map();

return (...args) => {

const [uuid] = args;

// The time complexity here is O(1)

let data = cache.get(uuid);

if (data === undefined) {

// No cache

data = fn.apply(this, args); // Execute the original function to get the value

cache.set(uuid, data);

}

return data;

};

};

Its advantage is that it relies on Map's get method, which is effectively O(1) and therefore much faster than the O(N) of indexOf.

Back to memoizee: the meeting concluded that memoizee should be used with caution for function caching (it is not recommended). If your function's computation is not expensive, don't cache it. For scenarios with a very large number of calls, you may need to write the caching function yourself. On the other hand, since the company has upgraded to React 17, most caching now goes through useMemo, and no performance loss has been found so far. Later on, let's look at how useMemo implements caching. That's all for this article.
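Two limits of getterCache are worth keeping in mind: it keys on the first argument only, and a function that legitimately returns undefined is recomputed on every call. One possible variant, sketched here under the assumption that every argument should take part in the key, nests one Map per argument and checks with has instead of comparing against undefined. The names memoizeByArgs and RESULT are made up for illustration and are not part of the team's code.

```javascript
// Hypothetical variant of getterCache (illustrative only).
// One Map level per argument, so each lookup step is a hash lookup, not a scan.
const RESULT = Symbol('result'); // private key under which a node stores its result

const memoizeByArgs = (fn) => {
  const root = new Map();
  return (...args) => {
    let node = root;
    for (const arg of args) {
      if (!node.has(arg)) {
        node.set(arg, new Map());
      }
      node = node.get(arg);
    }
    // has() distinguishes "never computed" from "computed and returned undefined"
    if (!node.has(RESULT)) {
      node.set(RESULT, fn(...args));
    }
    return node.get(RESULT);
  };
};

let calls = 0;
const area = memoizeByArgs((w, h) => {
  calls += 1;
  return w * h;
});

console.log(area(2, 3)); // 6
console.log(area(2, 3)); // 6, from the cache
console.log(area(3, 2)); // 6, a different key, so computed again
console.log(calls); // 2
```

Like getterCache, this cache never evicts anything, so it suits a bounded set of keys such as attribute IDs better than unbounded input.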