    [root@localhost tls]# k3s -v
    k3s version v1.22.5+k3s1 (405bf79d)
    go version go1.16.10

My k3s version is v1.22.5+k3s1.

In k3s v1.20.2 and later

How agent node registration works

Agent nodes register through a websocket connection initiated by the k3s agent process, and the connection is maintained by a client-side load balancer that runs as part of the agent process.

The agent registers with the server using the cluster secret (node token) together with a randomly generated node password, which it stores in /etc/rancher/node/password. The server stores each node's password as a Kubernetes secret, and every subsequent registration attempt must use the same password. Node password secrets live in the kube-system namespace and are named using the template <host>.node-password.k3s.
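The "first registration wins, later ones must match" rule can be modeled in a few lines. This is a minimal Python sketch, not k3s's implementation: a plain dict stands in for the kube-system namespace, and PBKDF2-SHA256 is an assumed stand-in for whatever hash k3s actually writes (the `kubectl describe` output in this article only shows that the secret holds a single `hash` key). The names `secret_name` and `register_node` are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Dict

SALT_LEN = 16


def secret_name(host: str) -> str:
    """Secret name following the <host>.node-password.k3s template."""
    return f"{host}.node-password.k3s"


def _derive(password: str, salt: bytes) -> bytes:
    # Assumption: PBKDF2-SHA256 stands in for the hash k3s really stores.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 10_000)


def register_node(store: Dict[str, bytes], host: str, password: str) -> bool:
    """Server-side check: accept a new node, then require the same password."""
    name = secret_name(host)
    stored = store.get(name)
    if stored is None:
        # First registration: keep only a salted hash, never the plain password.
        salt = os.urandom(SALT_LEN)
        store[name] = salt + _derive(password, salt)
        return True
    salt, digest = stored[:SALT_LEN], stored[SALT_LEN:]
    # Later registrations must present the password that produced the stored hash.
    return hmac.compare_digest(digest, _derive(password, salt))
```

Once a host's secret exists, a freshly generated password is refused until that secret is removed, which is exactly the failure this article troubleshoots.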

Note: before k3s v1.20.2, the server stored these passwords on disk in /var/lib/rancher/k3s/server/cred/node-passwd.

If the agent's /etc/rancher/node directory is deleted, either recreate the password file on the agent or delete the node's entry from the server.

Starting the k3s server or agent with the --with-node-id flag appends a unique node ID to the hostname.
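Why --with-node-id sidesteps a stale record: the node registers under a different name, so the server looks up a different secret. The Python sketch below is hypothetical; the "-" separator and the 8-hex-character random suffix are assumptions made for illustration, and the real k3s ID format may differ.

```python
import secrets
from typing import Optional


def effective_node_name(hostname: str, with_node_id: bool, node_id: Optional[str] = None) -> str:
    """Name the agent registers under (hypothetical model of --with-node-id)."""
    if not with_node_id:
        return hostname
    # Assumption: a random hex ID joined to the hostname with "-".
    return f"{hostname}-{node_id or secrets.token_hex(4)}"


def secret_name(node_name: str) -> str:
    """Secret name following the <host>.node-password.k3s template."""
    return f"{node_name}.node-password.k3s"
```

Because `secret_name` depends on the full node name, a suffixed name never collides with the old hostname's secret. The old secret and node object are simply left behind rather than reused.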

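Solution: find the node-password secret of the affected agent (here 172.16.10.15) and delete it, so the server accepts the agent's new password on its next registration: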

    [root@localhost tls]# kubectl -n kube-system get secrets | grep pass
    172.16.10.5.node-password.k3s    Opaque   1      171m
    172.16.10.15.node-password.k3s   Opaque   1      19m
    [root@localhost tls]# kubectl -n kube-system get secrets 172.16.10.15.node-password.k3s
    NAME                             TYPE     DATA   AGE
    172.16.10.15.node-password.k3s   Opaque   1      19m
    [root@localhost tls]# kubectl -n kube-system describe secrets 172.16.10.15.node-password.k3s
    Name:         172.16.10.15.node-password.k3s
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  <none>

    Type:  Opaque

    Data
    ====
    hash:  113 bytes
    [root@localhost tls]# kubectl -n kube-system delete secrets 172.16.10.15.node-password.k3s
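With many agents, picking the right secret to delete can be scripted. This Python sketch (function names are hypothetical) parses `kubectl -n kube-system get secrets` output, maps each host to its node-password secret, and builds the same delete command used above as an argument list. It only handles text and does not contact the cluster.

```python
from typing import Dict, List

SUFFIX = ".node-password.k3s"


def node_password_secrets(kubectl_output: str) -> Dict[str, str]:
    """Map host -> secret name for every node-password secret in the output."""
    result: Dict[str, str] = {}
    for line in kubectl_output.splitlines():
        fields = line.split()
        # The first column is the secret name; the NAME header never matches.
        if fields and fields[0].endswith(SUFFIX):
            name = fields[0]
            result[name[: -len(SUFFIX)]] = name
    return result


def delete_command(secret: str) -> List[str]:
    """kubectl invocation that removes one node-password secret."""
    return ["kubectl", "-n", "kube-system", "delete", "secrets", secret]
```

The list form can be passed to `subprocess.run(..., check=True)` without going through a shell.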

Auto-deployed manifests

The manifests in the directory /var/lib/rancher/k3s/server/manifests are bundled into the k3s binary at build time and are installed at runtime by rancher/helm-controller. On this node the directory contains:

    [root@localhost tls]# ls /var/lib/rancher/k3s/server/manifests/
    ccm.yaml  coredns.yaml  local-storage.yaml  metrics-server  rolebindings.yaml  traefik.yaml
    [root@localhost tls]# ls /var/lib/rancher/k3s/server/manifests/metrics-server/
    aggregated-metrics-reader.yaml  auth-delegator.yaml  auth-reader.yaml  metrics-apiservice.yaml
    metrics-server-deployment.yaml  metrics-server-service.yaml  resource-reader.yaml

In k3s versions before v1.20.2:

Basic principles

The agent registration process is fairly complex. Broadly, it serves two purposes:

- Start kubelet and the other node services and connect them to the API server on the server node, which any k8s cluster requires

- Establish a websocket tunnel that synchronizes information between the k3s server and the agent

When registering an agent, we provide only the server address and the node token. How does the agent complete registration step by step from just these two values? First, look at the format of the node token.

On the k3s server (master) node:

    [root@localhost tls]# cat /var/lib/rancher/k3s/server/node-token
    K107081ea35382d9c0c04f1930e6af4d71cc916ee4a9b70f5f3cbde02f3160e2204::server:2c011429c5dcc7a64e675d14863ebd89

The token has the form K10<CA hash>::<username>:<password>. The username and password correspond to the basic auth configuration of the k3s API server: when the k3s API server starts, it enables a special authentication method, basic auth, backed by the file /var/lib/rancher/k3s/server/cred/passwd on the server node:

    [root@localhost tls]# cat /var/lib/rancher/k3s/server/cred/passwd
    2c011429c5dcc7a64e675d14863ebd89,server,server,k3s:server
    2c011429c5dcc7a64e675d14863ebd89,node,node,k3s:agent
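Each passwd line follows the layout of the classic kube-apiserver basic-auth file: password, user name, user ID, then group(s). The Python sketch below parses that layout and checks a username/password pair. `parse_passwd` and `authenticate` are hypothetical helpers that illustrate the lookup, not k3s code.

```python
import csv
import hmac
import io
from typing import Dict, NamedTuple, Tuple


class BasicAuthEntry(NamedTuple):
    password: str
    user: str
    uid: str
    groups: Tuple[str, ...]


def parse_passwd(text: str) -> Dict[str, BasicAuthEntry]:
    """Parse password,user,uid,group lines into entries keyed by user name."""
    entries: Dict[str, BasicAuthEntry] = {}
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 3:
            continue  # skip blank or malformed lines
        password, user, uid = row[0], row[1], row[2]
        # A quoted fourth field may carry several comma-separated groups.
        groups = tuple(g for g in row[3].split(",") if g) if len(row) > 3 else ()
        entries[user] = BasicAuthEntry(password, user, uid, groups)
    return entries


def authenticate(entries: Dict[str, BasicAuthEntry], user: str, password: str) -> Tuple[str, ...]:
    """Return the user's groups if the credentials match, else raise PermissionError."""
    entry = entries.get(user)
    if entry is None or not hmac.compare_digest(entry.password, password):
        raise PermissionError("invalid basic auth credentials")
    return entry.groups
```

With the file above, the `server` user lands in group `k3s:server` and the `node` user in `k3s:agent`.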

The agent can therefore parse the node token to obtain credentials for talking to the k3s API server, authenticating with basic auth.
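The token parsing step can be sketched in Python as follows. It splits a K10-format token into the CA hash, username, and password, then builds the HTTP basic auth header from the last two. The function names are hypothetical, and the sketch skips the CA-hash check a real agent would perform against the server's certificate.

```python
import base64
from typing import NamedTuple


class NodeToken(NamedTuple):
    ca_hash: str
    username: str
    password: str


def parse_node_token(token: str) -> NodeToken:
    """Split K10<CA hash>::<username>:<password> into its parts."""
    token = token.strip()
    if not token.startswith("K10"):
        raise ValueError("not a K10-format token")
    ca_hash, sep, creds = token[3:].partition("::")
    if not sep:
        raise ValueError("missing '::' separator")
    username, sep, password = creds.partition(":")
    if not sep:
        raise ValueError("missing ':' between username and password")
    return NodeToken(ca_hash, username, password)


def basic_auth_header(tok: NodeToken) -> str:
    """Authorization header value for HTTP basic auth."""
    raw = f"{tok.username}:{tok.password}".encode()
    return "Basic " + base64.b64encode(raw).decode()
```

For the token shown above, this yields username `server` and the same password that appears in the server's passwd file.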

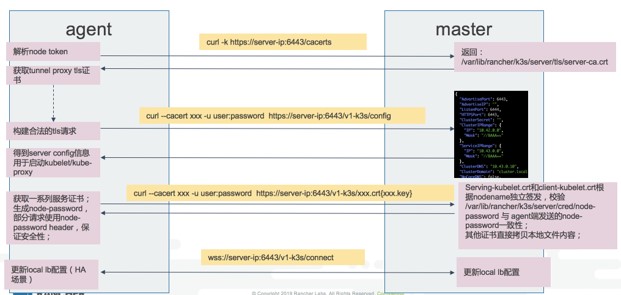

By understanding the role of node token, we can unlock the prelude to the agent registration process. Refer to the following figure:

Following the order of the yellow text boxes, the first three steps collect the dependencies needed to start the kubelet service, and the last step establishes the websocket tunnel. Here we focus on the first three steps. The most important pieces are the API server address and the TLS certificates used for communication between the various k8s components. Because these certificates are issued on the server, the agent has to fetch them through API requests. The certificates look roughly like this:

    [root@localhost tls]# ls
    client-admin.crt  client-ca.key                    client-k3s-controller.crt  client-kube-proxy.crt  etcd                   service.key
    client-admin.key  client-controller.crt            client-k3s-controller.key  client-kube-proxy.key  request-header-ca.crt  serving-kube-apiserver.crt
    client-auth-proxy.crt  client-controller.key       client-kube-apiserver.crt  client-scheduler.crt   request-header-ca.key  serving-kube-apiserver.key
    client-auth-proxy.key  client-k3s-cloud-controller.crt  client-kube-apiserver.key  client-scheduler.key  server-ca.crt      serving-kubelet.key
    client-ca.crt     client-k3s-cloud-controller.key  client-kubelet.key         dynamic-cert.json      server-ca.key          temporary-cert

Among these certificates, the kubelet ones (client-kubelet and serving-kubelet) are special. Because kubelet runs on every node, a certificate has to be issued separately for each node's kubelet, using the node name as the basis. Issuing per-node certificates means the server must verify that the node information is legitimate, and this is where the node password comes in.

The process works roughly as follows. The agent generates a random password (/etc/rancher/node/password) and sends the node name and node password to the k3s server as request headers on its certificate requests. Because the agent requests two kubelet certificates from the server, the server receives two requests carrying these headers. If the agent is registering for the first time, the server parses the node name and node password from the first request and stores them in /var/lib/rancher/k3s/server/cred/node-passwd (this was the location before k3s v1.20.2). On the second request, the server reads the node-passwd file and verifies the headers; if they do not match, the request is rejected with 403. If the agent registers again later, the server compares the request headers directly with its stored record, and again rejects the request with 403 on a mismatch.
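The server-side decision described above fits in a small state machine. This Python sketch is modeled on the pre-v1.20.2 file-based store: a dict stands in for the node-passwd file, and status codes stand in for HTTP responses. `NodePasswdFile` and `request_kubelet_certs` are hypothetical names, and the transport (headers, TLS) is left out.

```python
import hmac
from typing import Dict, List


class NodePasswdFile:
    """In-memory stand-in for the node-passwd file (node name -> password)."""

    def __init__(self) -> None:
        self.entries: Dict[str, str] = {}

    def check(self, node_name: str, node_password: str) -> int:
        """Return 200 if a certificate request may proceed, 403 if it is rejected."""
        stored = self.entries.get(node_name)
        if stored is None:
            # Unknown node: record the name/password pair from this request.
            self.entries[node_name] = node_password
            return 200
        # Known node (second request, or a later re-registration): must match.
        return 200 if hmac.compare_digest(stored, node_password) else 403


def request_kubelet_certs(server: NodePasswdFile, node_name: str, node_password: str) -> List[int]:
    """Simulate the agent's two kubelet certificate requests with its node headers."""
    return [server.check(node_name, node_password) for _cert in ("client-kubelet", "serving-kubelet")]
```

A re-registration with a regenerated password gets 403 on both requests while the stored entry stays unchanged.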

Compare the node password on the agent node (/etc/rancher/node/password) with the node-passwd record on the server:

    agent $ cat /etc/rancher/node/password
    47211f28f469622cccf893071dbda698

    server $ cat /var/lib/rancher/k3s/server/cred/node-passwd
    31567be88e5408a31cbd036fc9b37975,ip-172-31-13-54,ip-172-31-13-54,
    cf3f4f37042c05c631e07b0c0abc528f,xxxxx,xxxxxx,
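The comparison can be automated with a short Python helper. Reading the sample lines, the sketch assumes the first field is the password and the second is the node name. `parse_node_passwd` and `diagnose` are hypothetical, and the test below treats ip-172-31-13-54 as the agent's hostname purely for illustration.

```python
from typing import Dict, Optional


def parse_node_passwd(text: str) -> Dict[str, str]:
    """Map node name -> password from lines shaped like 'password,name,name,'."""
    entries: Dict[str, str] = {}
    for line in text.splitlines():
        fields = line.strip().split(",")
        if len(fields) >= 2 and fields[0]:
            entries[fields[1]] = fields[0]
    return entries


def diagnose(node_passwd_text: str, hostname: str, agent_password: str) -> str:
    """Classify an agent against the server record: 'new', 'match' or 'mismatch'."""
    stored: Optional[str] = parse_node_passwd(node_passwd_text).get(hostname)
    if stored is None:
        return "new"  # no record yet: the next registration will create one
    if stored == agent_password.strip():
        return "match"
    return "mismatch"  # the server will answer the agent's requests with 403
```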

The password on the agent node does not match the password the server has stored for that hostname. Following the mechanism described above, the agent's requests are rejected and 403 errors appear in the logs.

Solution

Why would the passwords diverge? Normally, cleaning up an agent node with the k3s-agent-uninstall.sh script does not delete the password file (/etc/rancher/node/password). The likely causes are rebuilding the VM or deleting the file by hand. Once the file is gone from the agent, a new password is generated when the agent registers again, and it no longer matches the original password stored on the server.
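The agent-side "reuse if present, otherwise generate" behavior is what makes a deleted file turn into a new password. The following Python sketch of that behavior is an assumption: `ensure_node_password` is a hypothetical name, and the 32-hex-character format simply mirrors the sample passwords in this article.

```python
import os
import secrets


def ensure_node_password(path: str) -> str:
    """Return the password stored at path, generating and saving one if missing."""
    if os.path.exists(path):
        with open(path) as f:
            return f.read().strip()
    # File gone (VM rebuilt or deleted by hand): a brand-new password appears.
    password = secrets.token_hex(16)  # 32 hex chars, like the sample passwords
    parent = os.path.dirname(path)
    if parent:
        os.makedirs(parent, exist_ok=True)
    with open(path, "w") as f:
        f.write(password)
    os.chmod(path, 0o600)  # keep the secret readable by its owner only
    return password
```

As long as the file survives, repeated registrations present the same password. Removing it is the only step needed to produce the mismatch.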

There are three solutions:

- Manually recreate the password file on the agent with the same content the server has stored

- Modify the record on the server so that it matches the password newly generated on the agent

- Register the agent with --with-node-id, so the server treats it as a new node and does not compare it against the old record
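The second option applied to the pre-v1.20.2 node-passwd file amounts to rewriting one line. The Python sketch below returns updated file content rather than writing in place. `replace_node_password` is a hypothetical helper, and it assumes the field layout seen in the sample (password first, node name second). On v1.20.2 and later the record is a Kubernetes secret rather than this file.

```python
def replace_node_password(node_passwd_text: str, hostname: str, new_password: str) -> str:
    """Return node-passwd content with hostname's password set to new_password."""
    out = []
    found = False
    for line in node_passwd_text.splitlines():
        fields = line.split(",")
        if len(fields) >= 2 and fields[1] == hostname:
            fields[0] = new_password  # only the password field changes
            found = True
        out.append(",".join(fields))  # trailing empty fields are preserved
    if not found:
        raise KeyError(hostname)
    return "\n".join(out) + "\n"
```

Back up the original file before writing the result over it.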

Summary

In principle, users should not touch the files mentioned in this article; leave them under k3s's control. When cleaning up an agent node, prefer the uninstall script that k3s provides. If you do run into this problem, use the principles described here to analyze it, then repair it with one of the known solutions.