1, Introduction to ELM neural network

1 Introduction

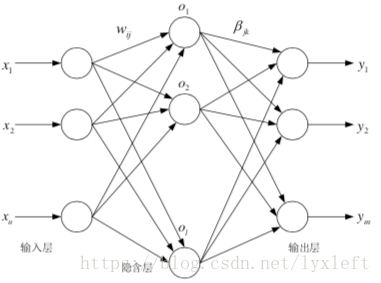

The extreme learning machine (ELM) is not a new network structure; what is new is its training algorithm. In terms of structure, it is a feedforward neural network, like the networks covered in previous blog posts.

2 ELM's biggest innovations

1) The connection weights between the input layer and the hidden layer, and the thresholds (biases) of the hidden layer, can be set randomly and do not need to be adjusted after they are set. This differs from the BP neural network, which must repeatedly back-propagate the error to adjust its weights and thresholds. Because this whole set of parameters is never trained iteratively, a large share of the computation (roughly half) is saved.

2) The connection weights β between the hidden layer and the output layer do not need iterative adjustment either; they are determined in one step by solving a system of linear equations.
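The two points above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the author's MATLAB code: the sigmoid activation, the uniform ranges for the random weights and biases, and the names `elm_fit` and `elm_predict` are hypothetical choices. Only `beta` is computed from the data; the hidden layer is drawn once and never updated.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def elm_fit(X, T, n_hidden, rng):
    """Fit a single-hidden-layer ELM (hypothetical helper).

    X: (n_samples, n_features) inputs; T: (n_samples, n_outputs) targets.
    Input weights W and hidden biases b are random and stay fixed (point 1);
    the output weights beta are solved in one least-squares step (point 2).
    """
    n_features = X.shape[1]
    W = rng.uniform(-1.0, 1.0, size=(n_features, n_hidden))  # input -> hidden weights, never trained
    b = rng.uniform(0.0, 1.0, size=n_hidden)                  # hidden biases (thresholds), never trained
    H = sigmoid(X @ W + b)                                    # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ T                              # output weights via pseudoinverse, no iterations
    return W, b, beta

def elm_predict(X, W, b, beta):
    """Forward pass with the fixed hidden layer and the solved output weights."""
    return sigmoid(X @ W + b) @ beta

# Usage: fit a smooth 1-D function with 20 hidden neurons
rng = np.random.default_rng(0)
X = np.linspace(-1.0, 1.0, 200).reshape(-1, 1)
T = np.sin(3.0 * X)
W, b, beta = elm_fit(X, T, n_hidden=20, rng=rng)
mse = float(np.mean((elm_predict(X, W, b, beta) - T) ** 2))
print(f"training MSE: {mse:.2e}")
```

Compared with BP, there is no learning rate, epoch count or gradient here; the cost is one pseudoinverse of the hidden-layer output matrix.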

Research has shown that, with these rules, the model still generalizes well while training much faster.

In short, the main feature of ELM is that, for traditional neural networks and especially single-hidden-layer feedforward neural networks (SLFNs), it trains faster than traditional learning algorithms while preserving learning accuracy.

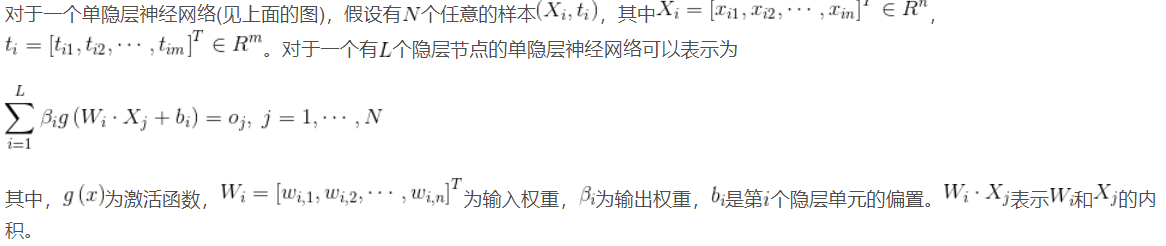

3 Principle of the extreme learning machine

ELM is a fast learning algorithm for single-hidden-layer neural networks: it randomly initializes the input weights and biases, and then computes the corresponding output weights directly, without iteration.

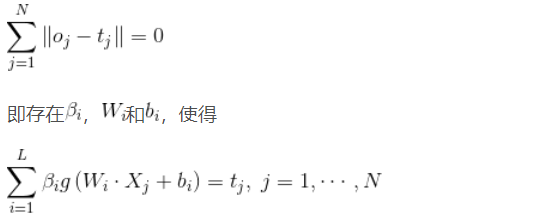

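The goal of training a single-hidden-layer network is to minimize the output error. For $N$ training samples $(\mathbf{x}_j, \mathbf{t}_j)$ with $m$-dimensional targets, and $L$ hidden neurons with activation function $g$, input weights $\mathbf{w}_i$, biases $b_i$ and output weights $\boldsymbol{\beta}_i$, this can be expressed as

$$\sum_{j=1}^{N} \lVert \mathbf{o}_j - \mathbf{t}_j \rVert = 0, \qquad \mathbf{o}_j = \sum_{i=1}^{L} \boldsymbol{\beta}_i \, g(\mathbf{w}_i \cdot \mathbf{x}_j + b_i),$$

that is, there exist $\boldsymbol{\beta}_i$, $\mathbf{w}_i$ and $b_i$ such that

$$\sum_{i=1}^{L} \boldsymbol{\beta}_i \, g(\mathbf{w}_i \cdot \mathbf{x}_j + b_i) = \mathbf{t}_j, \qquad j = 1, \dots, N.$$

In matrix form this can be expressed as

$$\mathbf{H}\boldsymbol{\beta} = \mathbf{T},$$

where $\mathbf{H}$ is the $N \times L$ hidden-layer output matrix with entries $H_{ji} = g(\mathbf{w}_i \cdot \mathbf{x}_j + b_i)$, $\boldsymbol{\beta}$ is the $L \times m$ matrix of output weights (row $i$ is $\boldsymbol{\beta}_i^{\top}$), and $\mathbf{T}$ is the $N \times m$ matrix of expected outputs (row $j$ is $\mathbf{t}_j^{\top}$).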
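Solving $\mathbf{H}\boldsymbol{\beta} = \mathbf{T}$ for the output weights in one step is a linear least-squares problem. Here $\mathbf{H}$ is the $N \times L$ matrix of hidden-layer outputs for the $N$ training samples, $\boldsymbol{\beta}$ the $L \times m$ output weights and $\mathbf{T}$ the $N \times m$ expected outputs. Setting the gradient of the squared error to zero gives the normal equations; the closed form below assumes $\mathbf{H}$ has full column rank, and otherwise the Moore-Penrose pseudoinverse $\mathbf{H}^{\dagger}$ gives the minimum-norm least-squares solution:

$$E(\boldsymbol{\beta}) = \lVert \mathbf{H}\boldsymbol{\beta} - \mathbf{T} \rVert_F^2, \qquad \nabla_{\boldsymbol{\beta}} E = 2\,\mathbf{H}^{\top}(\mathbf{H}\boldsymbol{\beta} - \mathbf{T}) = \mathbf{0}$$

$$\Longrightarrow\; \mathbf{H}^{\top}\mathbf{H}\,\hat{\boldsymbol{\beta}} = \mathbf{H}^{\top}\mathbf{T} \;\Longrightarrow\; \hat{\boldsymbol{\beta}} = (\mathbf{H}^{\top}\mathbf{H})^{-1}\mathbf{H}^{\top}\mathbf{T} = \mathbf{H}^{\dagger}\mathbf{T}.$$

This single solve is the step that replaces the iterative weight updates of BP training.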

3, Partial source code

%_________________________________________________________________________%

% GA-ELM: ELM regression prediction optimized by a genetic algorithm %

%_________________________________________________________________________%

clear all

clc

%% Import data

load data % data.mat must provide input (one sample per row) and output

% Randomly generate training set and test set

k = randperm(size(input,1));

% Training set - 1900 samples

P_train=input(k(1:1900),:)';

T_train = reshape(output(k(1:1900)),1,[]); % targets as a row vector (mapminmax works row-wise)

% Test set - 100 samples

P_test=input(k(1901:2000),:)';

T_test = reshape(output(k(1901:2000)),1,[]);

%% normalization

% Inputs: fit the [-1,1] scaling on the training set, then apply it to the test set

[Pn_train,inputps] = mapminmax(P_train,-1,1);

Pn_test = mapminmax('apply',P_test,inputps);

% Outputs (targets): same procedure

[Tn_train,outputps] = mapminmax(T_train,-1,1);

Tn_test = mapminmax('apply',T_test,outputps);
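`mapminmax` scales each row (variable) to `[ymin, ymax]` with y = (ymax - ymin)(x - xmin)/(xmax - xmin) + ymin, where xmin and xmax come from the training data; `'apply'` reuses those settings on new data. Using the training-set ranges for the test set avoids leaking test statistics into the model. A minimal NumPy equivalent, assuming rows are variables and columns are samples as in the MATLAB code; the names `minmax_fit`, `minmax_apply` and `minmax_reverse` are hypothetical, and unlike `mapminmax` this sketch does not special-case constant rows:

```python
import numpy as np

def minmax_fit(X, ymin=-1.0, ymax=1.0):
    """Record per-row min/max of X (rows = variables, columns = samples)."""
    return {"xmin": X.min(axis=1, keepdims=True),
            "xmax": X.max(axis=1, keepdims=True),
            "ymin": ymin, "ymax": ymax}

def minmax_apply(X, ps):
    """Map X into [ymin, ymax] using the recorded training-set ranges."""
    scale = (ps["ymax"] - ps["ymin"]) / (ps["xmax"] - ps["xmin"])
    return (X - ps["xmin"]) * scale + ps["ymin"]

def minmax_reverse(Y, ps):
    """Undo minmax_apply, e.g. to de-normalize network predictions."""
    scale = (ps["xmax"] - ps["xmin"]) / (ps["ymax"] - ps["ymin"])
    return (Y - ps["ymin"]) * scale + ps["xmin"]

# Usage: two input variables, three training samples
P_train = np.array([[0.0, 5.0, 10.0],
                    [2.0, 4.0, 6.0]])
ps = minmax_fit(P_train)
Pn_train = minmax_apply(P_train, ps)   # each row now spans [-1, 1]
P_test = np.array([[2.5], [5.0]])
Pn_test = minmax_apply(P_test, ps)     # test data reuse the training ranges
```

The reverse mapping is what turns normalized network outputs back into the original units before they are compared with `T_test`.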

% Dimensions of the training data

R = size(Pn_train,1); % number of input variables

S = size(Tn_train,1); % number of output variables

N = 20; % number of hidden-layer neurons

%% Define genetic optimization parameters

pop=20; % Population size

Max_iteration=50; % Maximum number of iterations

dim = N*R + N; % Dimension: N*R input weights plus N hidden-layer biases (thresholds)

lb = [-1.*ones(N*R,1);zeros(N,1)]; % Lower bounds: -1 for weights, 0 for biases

ub = [ones(N*R,1);ones(N,1)]; % Upper bounds: 1 for weights and biases

fobj = @(x) fun(x,Pn_train,Tn_train,N); % Fitness function (fun.m, not listed here)

[Best_score,Best_pos,GA_curve]=GA(pop,Max_iteration,lb,ub,dim,fobj); %Start optimization

[fitness,IW,B,LW,TF,TYPE] = fun(Best_pos,Pn_train,Tn_train,N);%Get optimized parameters

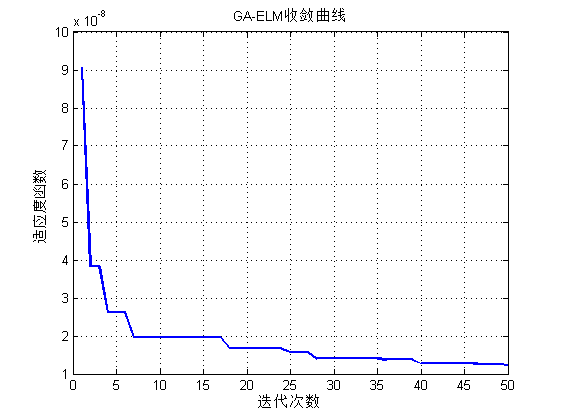
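The fitness function `fun` is not listed in this post. The sketch below shows one plausible structure, under these assumptions: the chromosome stores the N×R input weights first and the N hidden biases last (matching `dim = N*R + N` and the bounds `lb`/`ub`), the weights are unpacked column-major like MATLAB's `reshape`, the activation is a sigmoid, and the fitness to minimize is the training-set MSE. The name `ga_elm_fitness` is hypothetical, and the `TF`/`TYPE` outputs of the MATLAB version are omitted.

```python
import numpy as np

def ga_elm_fitness(x, Pn_train, Tn_train, N):
    """Hypothetical GA-ELM fitness: decode x, solve ELM output weights, return training MSE.

    x        : flat vector of length N*R + N (input weights, then hidden biases)
    Pn_train : (R, Q) normalized inputs, one column per sample (MATLAB layout)
    Tn_train : (S, Q) normalized targets
    N        : number of hidden neurons
    """
    R = Pn_train.shape[0]
    IW = x[:N * R].reshape((N, R), order="F")       # N x R input weights, column-major like MATLAB
    B = x[N * R:].reshape(N, 1)                     # N x 1 hidden biases
    H = 1.0 / (1.0 + np.exp(-(IW @ Pn_train + B)))  # N x Q hidden-layer outputs
    LW = Tn_train @ np.linalg.pinv(H)               # S x N output weights, solved in one step
    fitness = float(np.mean((LW @ H - Tn_train) ** 2))
    return fitness, IW, B, LW

# Usage with synthetic normalized data (2 inputs, 50 samples, 10 hidden neurons)
rng = np.random.default_rng(1)
R, Q, N = 2, 50, 10
Pn_train = rng.uniform(-1.0, 1.0, size=(R, Q))
Tn_train = np.sum(Pn_train ** 2, axis=0, keepdims=True) - 1.0
x = np.concatenate([rng.uniform(-1.0, 1.0, N * R), rng.uniform(0.0, 1.0, N)])  # inside lb/ub
fitness, IW, B, LW = ga_elm_fitness(x, Pn_train, Tn_train, N)
```

The GA only searches over the random part of the ELM (input weights and biases); for every candidate, the output weights are still obtained by the same one-step least-squares solve.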

figure

plot(GA_curve,'linewidth',1.5);

grid on

xlabel('Number of iterations')

ylabel('Fitness function')

title('GA-ELM Convergence curve')

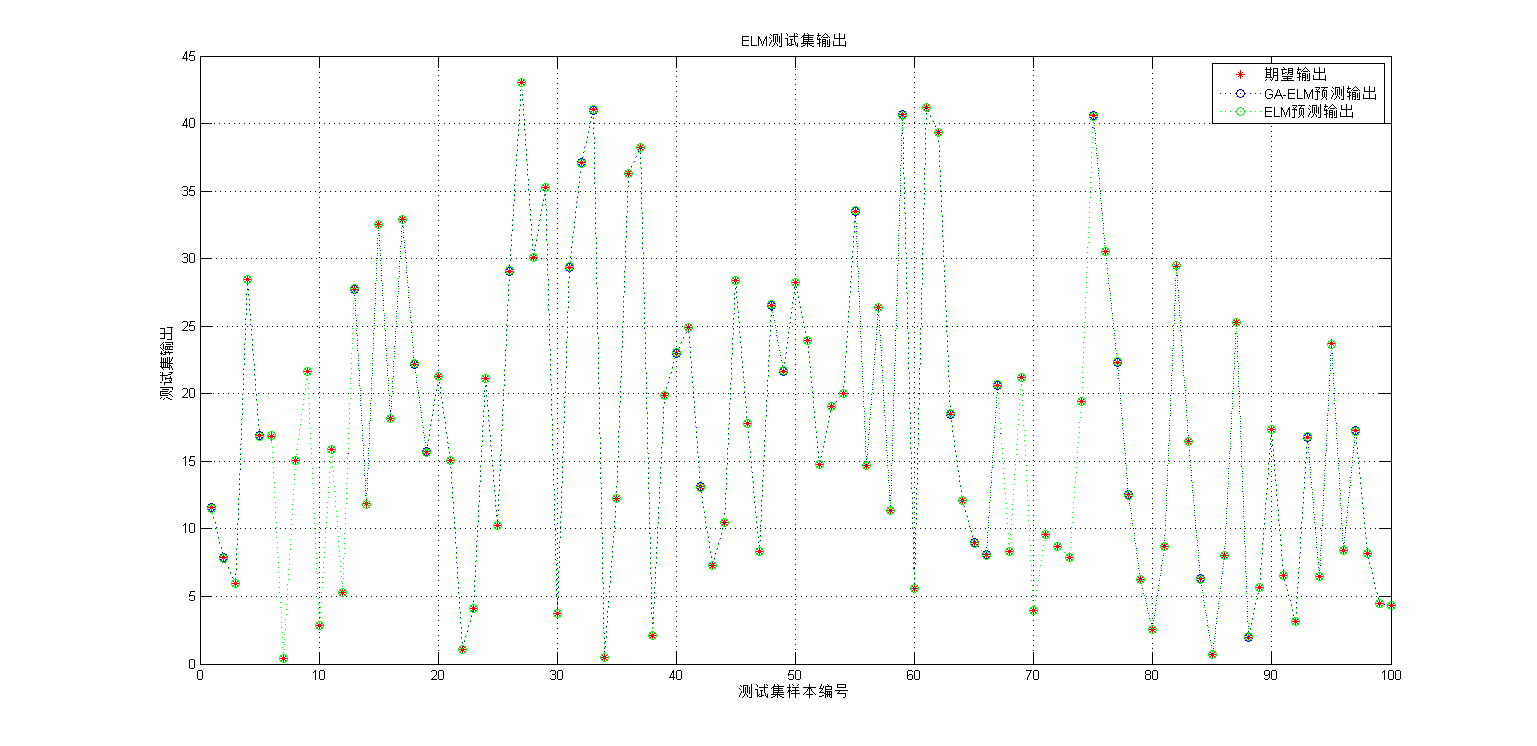
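The `GA` routine is not listed either. As a rough illustration of what a function with the interface `[Best_score,Best_pos,GA_curve] = GA(pop,Max_iteration,lb,ub,dim,fobj)` typically does, here is a minimal real-coded genetic algorithm. Tournament selection, arithmetic crossover, Gaussian mutation, elitism, and the rates `pc` and `pm` are assumptions, not the author's implementation. The returned `curve` holds the best score after each generation, which is what the convergence plot above draws.

```python
import numpy as np

def ga(pop, max_iter, lb, ub, dim, fobj, rng, pc=0.8, pm=0.1):
    """Minimal real-coded GA minimizing fobj within [lb, ub]. Returns (best_score, best_pos, curve)."""
    lb = np.broadcast_to(lb, dim).astype(float)
    ub = np.broadcast_to(ub, dim).astype(float)
    X = rng.uniform(lb, ub, size=(pop, dim))          # random initial population inside the bounds
    fit = np.array([fobj(x) for x in X])
    best = int(np.argmin(fit))
    best_pos, best_score = X[best].copy(), float(fit[best])
    curve = np.empty(max_iter)
    for it in range(max_iter):
        # Tournament selection: the fitter of two random individuals becomes a parent
        a, b = rng.integers(pop, size=(2, pop))
        parents = np.where((fit[a] < fit[b])[:, None], X[a], X[b])
        # Arithmetic crossover between consecutive pairs of parents
        children = parents.copy()
        for i in range(0, pop - 1, 2):
            if rng.random() < pc:
                w = rng.random()
                children[i] = w * parents[i] + (1 - w) * parents[i + 1]
                children[i + 1] = (1 - w) * parents[i] + w * parents[i + 1]
        # Gaussian mutation scaled to the search range, then clip back into bounds
        mask = rng.random(children.shape) < pm
        children += mask * rng.normal(0.0, 0.1, children.shape) * (ub - lb)
        X = np.clip(children, lb, ub)
        fit = np.array([fobj(x) for x in X])
        # Elitism: the best solution so far replaces the worst child
        worst = int(np.argmax(fit))
        X[worst], fit[worst] = best_pos, best_score
        best = int(np.argmin(fit))
        if fit[best] < best_score:
            best_pos, best_score = X[best].copy(), float(fit[best])
        curve[it] = best_score
    return best_score, best_pos, curve

# Usage: minimize the sphere function in 5 dimensions on [-1, 1]
rng = np.random.default_rng(2)
dim = 5
score, pos, curve = ga(20, 100, -np.ones(dim), np.ones(dim), dim,
                       lambda x: float(np.sum(x ** 2)), rng)
```

Because of elitism the curve never increases, which is why GA convergence curves such as `GA_curve` are step-shaped. In the MATLAB script, `fobj` wraps `fun`, so every fitness evaluation trains one ELM.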

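%% Compare predictions with the test set

% T_sim (GA-ELM) and T_sim1 (basic ELM) are the de-normalized test-set predictions; the code that computes them is not listed here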

figure

plot(T_test,'r*')

hold on

plot(T_sim,'b:o')

plot(T_sim1,'g:o')

xlabel('Test set sample number')

ylabel('Test set output')

title('ELM Test set output')

grid on;

legend('Expected output','GA-ELM Prediction output','ELM Prediction output')

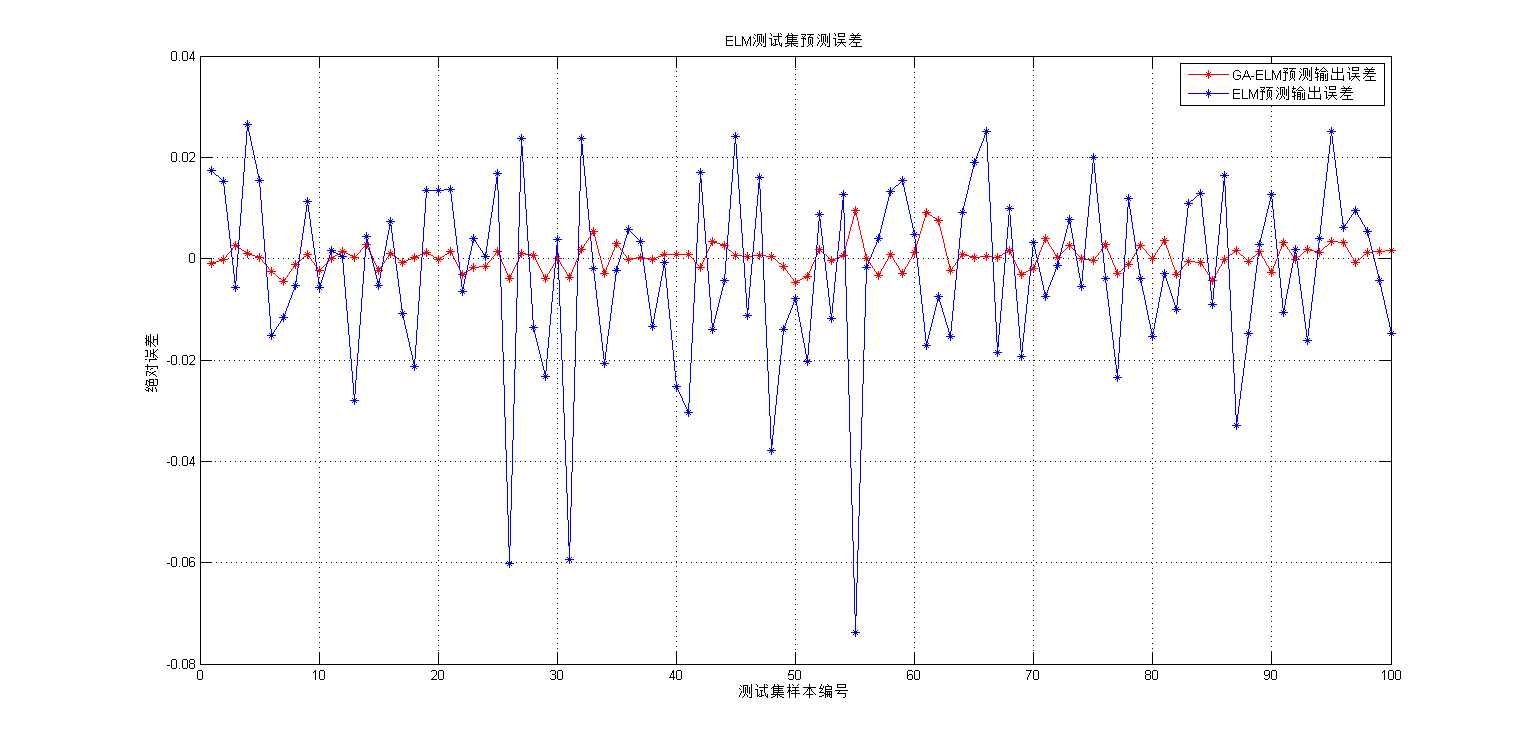

figure

plot(T_test-T_sim,'r-*')

hold on

plot(T_test-T_sim1,'b-*')

xlabel('Test set sample number')

ylabel('Error (expected - predicted)')

title('ELM Test set prediction error')

grid on;

legend('GA-ELM prediction error','ELM prediction error')

% E (GA-ELM) and E1 (basic ELM) are the test-set mean squared errors, computed in code not listed here

disp(['Basic ELM test MSE: ',num2str(E1)])

disp(['GA-ELM test MSE: ',num2str(E)])

4, Results

5, MATLAB version

R2014a