Optimization of a query

You should encounter this scenario. A self incrementing primary key is set in MySQL, but some data is subsequently deleted, resulting in discontinuous primary keys. You can use the following command to query those discontinuous primary keys.

-- Writing method 1 select id from (select id from Table name order by id asc) b where not exists (select 1 from Table name where id=b.id-1) -- Writing method 2 select id from (select id from Table name order by id asc) t where (select 1 from Table name where id=t.id-1) is null

Before learning this article, you can initialize a table with millions of data, execute the most common query and record the time.

select * from Table name

Next, write a deep paging statement to query

select * from Table name limit 100000,10;

Then, if your self incrementing ID primary key is continuous, you can compare the execution order of the following two statements.

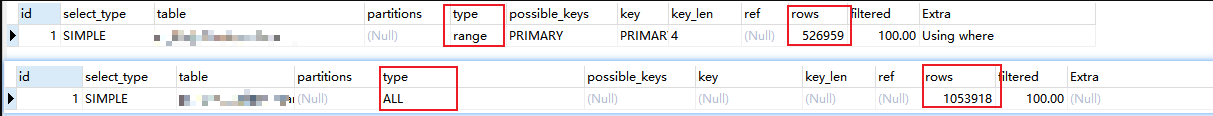

explain select * from Table name limit 100000,10; explain select * from Table name where id > 100000 limit 10;

After comparing the structure, it is found that using the where condition can reduce the number of query rows by nearly half.

However, the above second SQL statement is not practical in many scenarios, because it is difficult for the database in practical application not to have a vacancy in the primary key, and then the above code will become invalid.

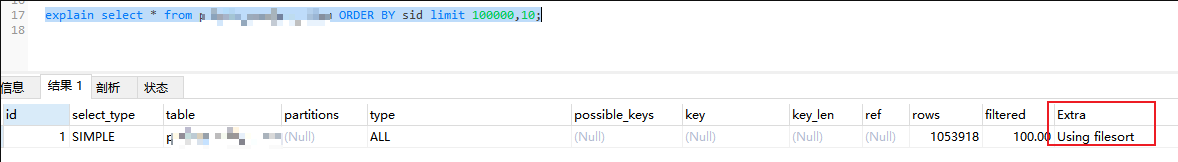

If non primary key (non index) rows are used for sorting and filtering, the problem of Using filesort mentioned in the previous blog will arise.

explain select * from Table name order by sid limit 100000,10;

It is also very simple to modify the above contents. When sorting by order, only the primary key can be sorted.

Next, continue to optimize and modify it to the following query command

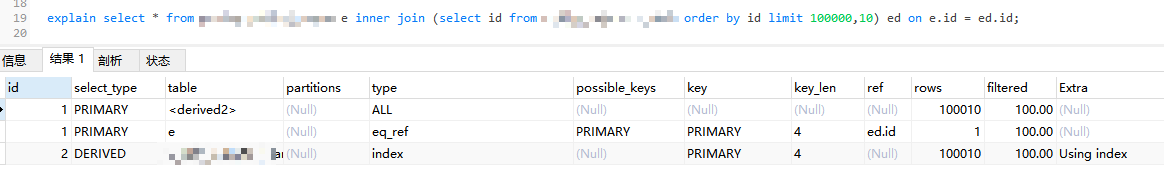

explain select * from Table name e inner join (select id from Table name order by id limit 100000,10) ed on e.id = ed.id;

Note that the execution order is from bottom to top. First use the index to sort, then use the primary key to query the final result set, and finally filter out the target data.

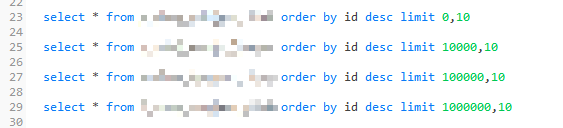

The table is tested again. With the increasing number of deep pages, the efficiency of query gradually decreases.

select * from Table name order by id desc limit 0,10; select * from Table name order by id desc limit 10000,10; select * from Table name order by id desc limit 100000,10; select * from Table name order by id desc limit 1000000,10;

Query according to where written above and get the following optimization command (but some lines will be lost)

select * from Table name where id >=0 limit 10; select * from Table name where id > 10000 limit 10; select * from Table name where id > 100000 limit 10; select * from Table name where id > 1000000 limit 10;

Similarly, you can compare the following queries

select * from Table name e inner join (select id from Table name order by id limit 0,10) ed on e.id = ed.id; select * from Table name e inner join (select id from Table name order by id limit 10000,10) ed on e.id = ed.id; select * from Table name e inner join (select id from Table name order by id limit 100000,10) ed on e.id = ed.id; select * from Table name e inner join (select id from Table name order by id limit 1000000,10) ed on e.id = ed.id;

Of course, if the order of ID S is clear and there is no missing, the following command is the fastest.

select * from Table name where id between 1000000 and 1000010 order by id desc

Optimization Guide

Because the table index of the database changes the disordered data into order, it is necessary to reasonably index the resume database

Type of database index:

- Normal: normal index. One index value is followed by multiple row values;

- Unique: unique index. There can only be one row value after an index. Adding a primary key means adding a unique index;

- fulltext: full text index;

Index method, i.e. index structure: - b+tree: balanced tree;

- Hash: hash table;

How to create an index

- Add indexes to frequently queried fields, and frequently updated fields are not suitable for indexing;

- Fields that do not appear in where should not be indexed. Fields that often appear in ORDER BY, GROUP BY and DISTINCT are suitable for indexing;

- Try to use only one index for a query;

- If you need a function operation value (for example, left('field name ', 3)) as an index, it is recommended to create another column;

- In practical application, composite indexes should be considered more, but it should be noted that the order of composite indexes is from left to right;

- Limit the number of single table indexes. It is recommended that there be no more than 5 single table indexes;

- It is recommended to use self incrementing ID value for primary key instead of UUID, MD5, HASH and character string column as primary key;

Record time

Today is the 285th / 365 day of continuous writing.

You can pay attention to me, praise me, comment on me and collect me.

More wonderful

- Python crawler 100 tutorial navigation posts (for 3 consecutive years, constantly changing!)

- Python crawler 120 (39.9 yuan, guaranteed for 5 years)