1. Service Mesh

Its purpose is to solve the inter-service communication and governance problems that appear once a system architecture has been split into microservices, by providing a general-purpose service governance solution.

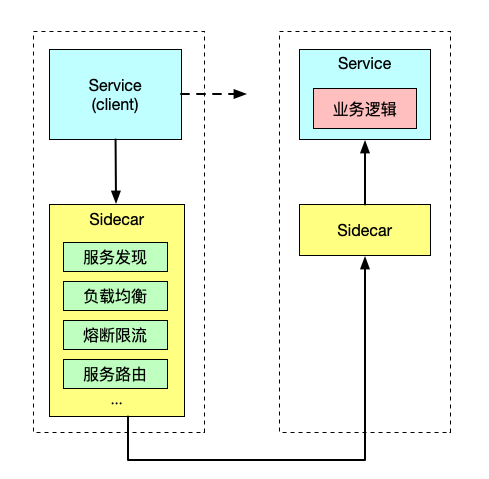

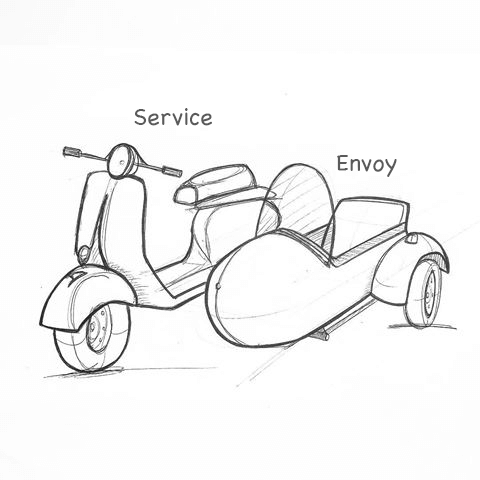

Sidecar refers to the sidecar pattern in software architecture. The pattern takes its name from the motorcycle sidecar: a car attached to the side of a two-wheeled motorcycle extends what the motorcycle can do without changing the motorcycle itself.

The essence of this pattern is decoupling the business logic from the supporting infrastructure functions: the motorcycle driver concentrates on riding, while the passenger in the sidecar takes care of the surroundings, maps, and navigation.

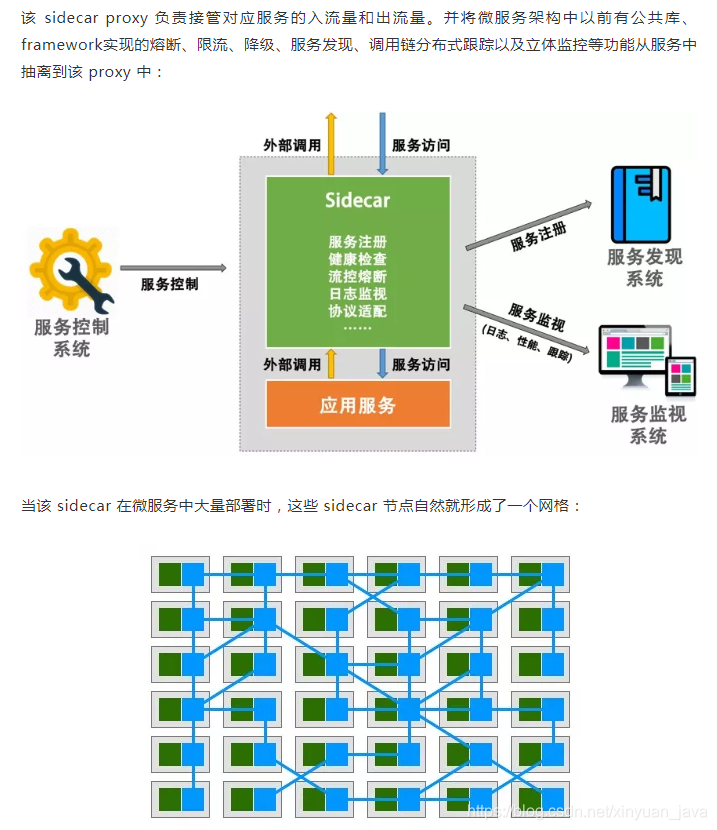

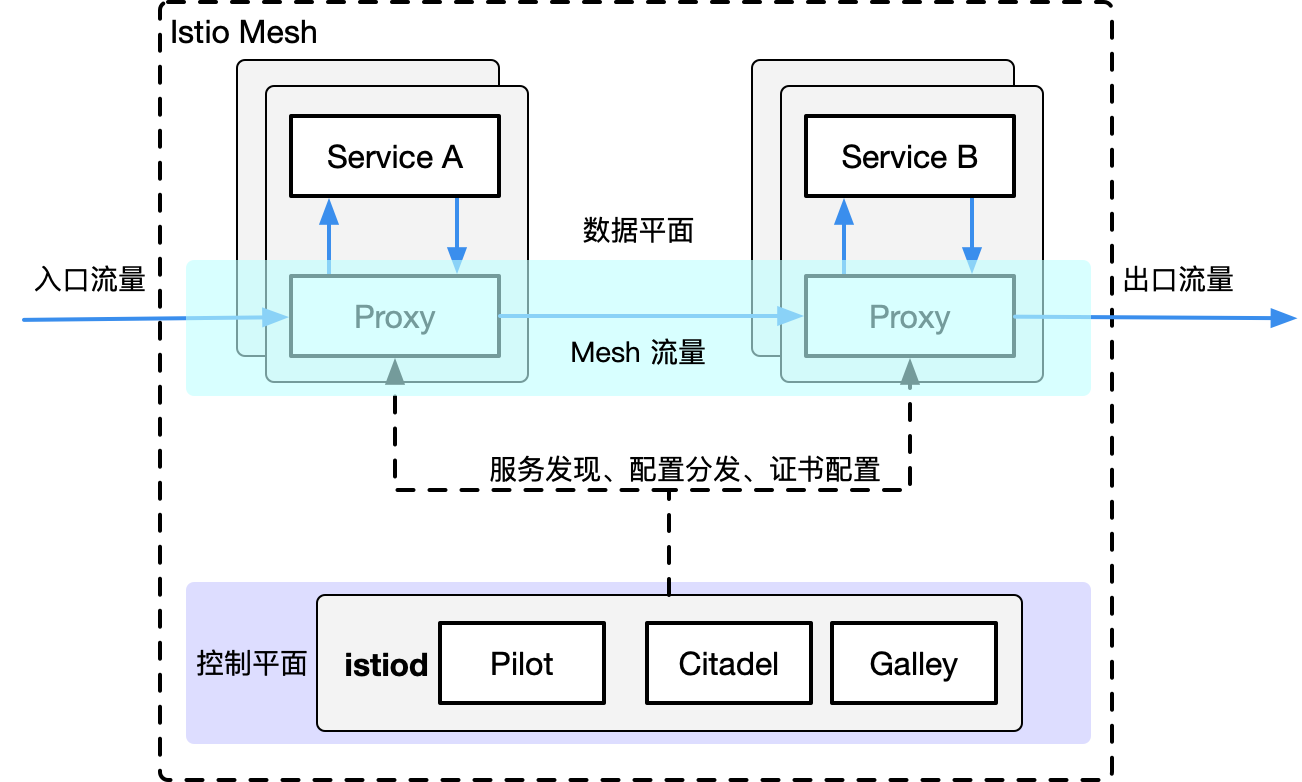

A service mesh is an infrastructure layer dedicated to handling communication between services. It is mainly responsible for building a stable, reliable service communication infrastructure, and it helps make the overall architecture more Cloud Native. In practice, a service mesh is a group of lightweight network proxies deployed alongside the application services and transparent to them.
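To make the idea of a transparent proxy concrete, here is a toy sketch in Python (asyncio, standard library only). It illustrates the pattern under heavy simplifying assumptions and is not how Envoy or Istio work internally: the names app_handler, make_sidecar and stats are hypothetical, and the client connects to the proxy's port explicitly, whereas Istio redirects a pod's traffic into its Envoy sidecar with iptables rules so the application needs no changes. The business service only echoes a line; the proxy forwards bytes in both directions and adds a cross-cutting concern (counting connections) that the service knows nothing about.

```python
import asyncio

# Hypothetical illustration: app_handler, make_sidecar and stats are names invented
# for this sketch; nothing here is Istio or Envoy code.
stats = {"connections": 0}


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then close the destination."""
    try:
        while data := await reader.read(4096):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def app_handler(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """The 'business service': echoes one line and knows nothing about the proxy."""
    line = await reader.readline()
    writer.write(b"echo: " + line)
    await writer.drain()
    writer.close()


def make_sidecar(app_port: int):
    """Build a proxy handler that forwards every connection to the local app."""
    async def sidecar_handler(client_reader, client_writer) -> None:
        stats["connections"] += 1  # cross-cutting concern handled outside the app
        app_reader, app_writer = await asyncio.open_connection("127.0.0.1", app_port)
        await asyncio.gather(pipe(client_reader, app_writer), pipe(app_reader, client_writer))
    return sidecar_handler


async def main() -> bytes:
    app = await asyncio.start_server(app_handler, "127.0.0.1", 0)
    app_port = app.sockets[0].getsockname()[1]
    sidecar = await asyncio.start_server(make_sidecar(app_port), "127.0.0.1", 0)
    sidecar_port = sidecar.sockets[0].getsockname()[1]

    # The client talks to the proxy; the proxy talks to the app.
    reader, writer = await asyncio.open_connection("127.0.0.1", sidecar_port)
    writer.write(b"hello\n")
    await writer.drain()
    reply = await reader.read()  # read until the proxy closes the connection
    writer.close()

    for server in (sidecar, app):
        server.close()
        await server.wait_closed()
    return reply


reply = asyncio.run(main())
print(reply)  # b'echo: hello\n'
```

Keeping the counter in the proxy rather than in app_handler is the point of the pattern: retries, metrics, or TLS could be added in the same place without touching the business code.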

2. Open source implementations

First-generation service meshes: Linkerd and Envoy

Linkerd, written in Scala, is the first open source service mesh solution in the industry. Its creator, William Morgan, is an evangelist and practitioner of the service mesh concept. Envoy is written in C++11, and both in theory and in practice it outperforms Linkerd. Both implementations are built around the sidecar, and they focus mainly on being a good proxy and providing some general control plane functions. However, once a large number of sidecars are deployed across containers, managing and controlling the sidecars themselves becomes a significant challenge. This is what gave rise to the second generation of service meshes.

Second-generation service mesh: Istio

Istio is an open source project jointly launched by Google, IBM, and Lyft. It is currently the most mainstream service mesh solution and the de facto standard for second-generation service meshes.

2, Istio service deployment

1. Installing Istio

https://istio.io/latest/docs/setup/getting-started/

Download Istio

The download includes the installation files, samples, and the istioctl command-line tool.

- Visit the Istio release page to download the installation file for your operating system. On Linux (amd64), you can also download release 1.7.3, the version used in this article, with the following command:

$ wget https://github.com/istio/istio/releases/download/1.7.3/istio-1.7.3-linux-amd64.tar.gz

- Extract the archive and change into the directory it creates, istio-1.7.3 in this example:

$ tar zxf istio-1.7.3-linux-amd64.tar.gz
$ ll istio-1.7.3
drwxr-x---  2 root root    22 Sep 27 08:33 bin
-rw-r--r--  1 root root 11348 Sep 27 08:33 LICENSE
drwxr-xr-x  6 root root    66 Sep 27 08:33 manifests
-rw-r-----  1 root root   756 Sep 27 08:33 manifest.yaml
-rw-r--r--  1 root root  5756 Sep 27 08:33 README.md
drwxr-xr-x 20 root root   330 Sep 27 08:33 samples
drwxr-x---  3 root root   133 Sep 27 08:33 tools
$ cd istio-1.7.3

- Copy the istioctl client into a directory on your PATH:

$ cp bin/istioctl /bin/

- Configure command auto-completion

The istioctl auto-completion file is located in the tools directory. Copy istioctl.bash to your home directory and source it to enable Tab completion for istioctl; to make this permanent, add the source line to your ~/.bashrc:

$ cp tools/istioctl.bash ~
$ source ~/istioctl.bash

2. Installing Istio components

https://istio.io/latest/zh/docs/setup/install/istioctl/#display-the-configuration-of-a-profile

Install directly using istioctl:

$ istioctl install --set profile=demo
✔ Istio core installed
✔ Istiod installed
✔ Egress gateways installed
✔ Ingress gateways installed
✔ Installation complete
$ kubectl -n istio-system get po
NAME                                    READY   STATUS    RESTARTS   AGE
istio-egressgateway-7bf76dd59-n9t5l     1/1     Running   0          77s
istio-ingressgateway-586dbbc45d-xphjb   1/1     Running   0          77s
istiod-6cc5758d8c-pz28m                 1/1     Running   0          84s

Istio provides several configuration profiles, each with different initialization and deployment settings for a different environment:

# List the available profiles
$ istioctl profile list
# We use the demo profile, which installs all of the components listed above
# Generate the Kubernetes YAML for the demo profile
$ istioctl manifest generate --set profile=demo > istio-kubernetes-manifest.yaml

Uninstall:

$ istioctl manifest generate --set profile=demo | kubectl delete -f -

3, Traffic flow in the traditional mode

1. Scenario 1

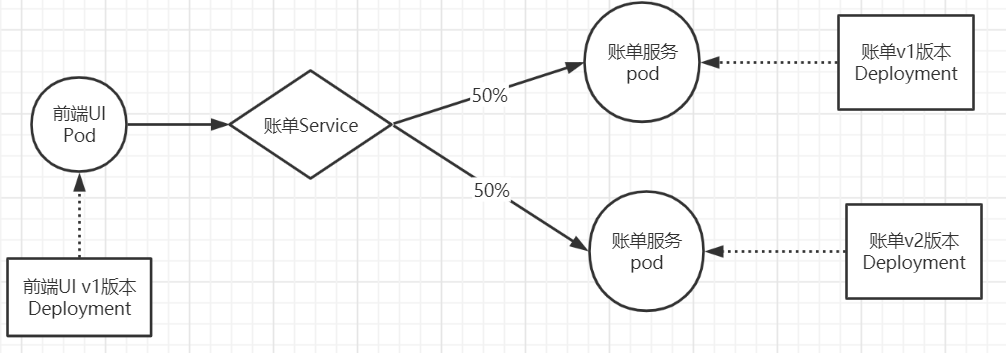

The front-end service front-tomcat accesses bill-service. By default, requests are distributed randomly between the two back-end deployments, bill-service-v1 and bill-service-v2, with each receiving about 50% of the traffic.

2. Resource list

front-tomcat-dpl-v1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: front-tomcat
    version: v1
  name: front-tomcat-v1
  namespace: istio-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: front-tomcat
      version: v1
  template:
    metadata:
      labels:
        app: front-tomcat
        version: v1
    spec:
      containers:
      - image: consol/tomcat-7.0:latest
        name: front-tomcat

bill-service-dpl-v1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    service: bill-service
    version: v1
  name: bill-service-v1
  namespace: istio-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      service: bill-service
      version: v1
  template:
    metadata:
      labels:
        service: bill-service
        version: v1
    spec:
      containers:
      - image: nginx:alpine
        name: bill-service
        command: ["/bin/sh", "-c", "echo 'this is bill-service-v1'>/usr/share/nginx/html/index.html;nginx -g 'daemon off;'"]

bill-service-dpl-v2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    service: bill-service
    version: v2
  name: bill-service-v2
  namespace: istio-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      service: bill-service
      version: v2
  template:
    metadata:
      labels:
        service: bill-service
        version: v2
    spec:
      containers:
      - image: nginx:alpine
        name: bill-service
        command: ["/bin/sh", "-c", "echo 'hello, this is bill-service-v2'>/usr/share/nginx/html/index.html;nginx -g 'daemon off;'"]

bill-service-svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    service: bill-service
  name: bill-service
  namespace: istio-demo
spec:
  ports:
  - name: http
    port: 9999
    protocol: TCP
    targetPort: 80
  selector:
    service: bill-service
  type: ClusterIP
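Both Deployments receive traffic because the Service selects Pods only by the label service: bill-service, which the v1 and v2 Pod templates both carry; the version label plays no part. The following Python sketch models equality-based label selection over the labels copied from the manifests above. It is a simplification for illustration, not the code of the Kubernetes endpoints controller, and the helper names are hypothetical.

```python
# Pod template labels copied from the three Deployment manifests above.
POD_LABELS = {
    "front-tomcat-v1": {"app": "front-tomcat", "version": "v1"},
    "bill-service-v1": {"service": "bill-service", "version": "v1"},
    "bill-service-v2": {"service": "bill-service", "version": "v2"},
}

# spec.selector from bill-service-svc.yaml.
SVC_SELECTOR = {"service": "bill-service"}


def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based match: every key/value pair in the selector must be present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict[str, str], pods: dict[str, dict[str, str]]) -> list[str]:
    """Names of the pods whose labels satisfy the selector, sorted for stable output."""
    return sorted(name for name, labels in pods.items() if selector_matches(selector, labels))


backends = select_pods(SVC_SELECTOR, POD_LABELS)
print(backends)  # ['bill-service-v1', 'bill-service-v2']

# A selector that also pinned the version label would match only one Deployment.
print(select_pods({"service": "bill-service", "version": "v1"}, POD_LABELS))  # ['bill-service-v1']
```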

3. Deploying and testing

$ kubectl create namespace istio-demo
$ kubectl apply -f front-tomcat-dpl-v1.yaml
$ kubectl apply -f bill-service-dpl-v1.yaml
$ kubectl apply -f bill-service-dpl-v2.yaml
$ kubectl apply -f bill-service-svc.yaml
[root@k8s-master demo]# kubectl -n istio-demo exec front-tomcat-v1-7f8c94c6c8-lfz5m -- curl -s bill-service:9999
this is bill-service-v1
[root@k8s-master demo]# kubectl -n istio-demo exec front-tomcat-v1-7f8c94c6c8-lfz5m -- curl -s bill-service:9999
hello, this is bill-service-v2

4, Analyzing the default traffic scheduling mechanism in Kubernetes

1. Detailed explanation of cluster traffic scheduling rules

By default, traffic is split roughly 50/50 between the v1 and v2 pods. How does Kubernetes implement this default scheduling? Let's trace it at the network level.

curl bill-service:9999 --> curl <svc-ip>:9999 --> check the container's routing table (route -n) --> no specific route matches, so the default 0.0.0.0 route sends the packet to the 10.244.1.1 bridge --> 10.244.1.1 is on the host --> the host's iptables rules, maintained by the kube-proxy component, take over --> iptables-save | grep <svc-ip> finds the Service chain --> iptables-save | grep <chain> finds the two pod addresses, each chosen at random with 50% probability

1. When running kubectl -n istio-demo exec front-tomcat-v1-7f8c94c6c8-lfz5m -- curl -s bill-service:9999, the container's DNS resolves bill-service to the ClusterIP of the back-end Service, so curl is really sending the request to the Service address.

# DNS resolution from inside a bill-service pod
[root@k8s-master demo]# kubectl -n istio-demo exec -it bill-service-v1-765cb46975-hmtmd sh
/ # nslookup bill-service
Server:    10.1.0.10
...
Address:   10.1.122.241
[root@k8s-master demo]# kubectl get svc -n istio-demo
NAME           TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
bill-service   ClusterIP   10.1.122.241   <none>        9999/TCP   66m
# So curl -s bill-service:9999 is equivalent to curl -s 10.1.122.241:9999
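Why does the short name bill-service resolve at all? Inside a Pod, the stub resolver expands short names using the search list and the ndots option in /etc/resolv.conf. The sketch below assumes the usual values for a Pod in the istio-demo namespace with the default ClusterFirst DNS policy and the cluster.local cluster domain (your cluster may use a different domain, and host search domains can be appended). It is a simplified model of resolver behavior, and candidate_names is a hypothetical helper.

```python
# Assumed resolv.conf values for a Pod in namespace istio-demo with the default
# ClusterFirst DNS policy and cluster domain cluster.local.
SEARCH = ["istio-demo.svc.cluster.local", "svc.cluster.local", "cluster.local"]
NDOTS = 5


def candidate_names(name: str, search: list[str], ndots: int) -> list[str]:
    """Simplified resolv.conf logic: the order in which a stub resolver tries FQDNs."""
    if name.endswith("."):                      # already absolute: search list not used
        return [name]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    if name.count(".") >= ndots:                # enough dots: try the name as-is first
        return [absolute] + expanded
    return expanded + [absolute]                # otherwise search domains come first


for fqdn in candidate_names("bill-service", SEARCH, NDOTS):
    print(fqdn)
# bill-service.istio-demo.svc.cluster.local.
# bill-service.svc.cluster.local.
# bill-service.cluster.local.
# bill-service.
```

The first candidate is the Service's DNS name, which the cluster DNS server (10.1.0.10 in the output above) answers with the ClusterIP 10.1.122.241.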

2. The default (0.0.0.0) route sends the traffic to the 10.244.1.1 bridge

[root@k8s-master demo]# kubectl -n istio-demo exec -it bill-service-v1-765cb46975-hmtmd sh
/ # route
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
default         10.244.1.1      0.0.0.0         UG    0      0        0 eth0
10.244.0.0      10.244.1.1      255.255.0.0     UG    0      0        0 eth0
10.244.1.0      *               255.255.255.0   U     0      0        0 eth0
# No specific route matches 10.1.122.241, so the default 0.0.0.0 route sends it to the gateway 10.244.1.1

3. The host has no route for the Service IP either; the traffic is handled by iptables rules

# 10.244.1.1 is actually an address on the host (the cni0 bridge), so the traffic leaves the container and arrives at the host

[root@k8s-node2 ~]# ip a | grep -e 10.244.1.1

inet 10.244.1.1/24 brd 10.244.1.255 scope global cni0

# The host has no routing rule for the Service IP 10.1.122.241

# However, every host runs the kube-proxy component, which maintains iptables rules. Although the host has no direct route for this traffic, iptables intercepts the packets on the way. Let's check whether iptables has rules for the Service IP

[root@k8s-node2 ~]# iptables-save | grep 10.1.122.241

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.1.122.241/32 -p tcp -m comment --comment "istio-demo/bill-service:http cluster IP" -m tcp --dport 9999 -j KUBE-MARK-MASQ

-A KUBE-SERVICES -d 10.1.122.241/32 -p tcp -m comment --comment "istio-demo/bill-service:http cluster IP" -m tcp --dport 9999 -j KUBE-SVC-PK4BNTKC2JYVE7B2

4. Following the iptables chain leads to the back-end addresses, each selected with probability 0.5

# The Service rule jumps to the KUBE-SVC-PK4BNTKC2JYVE7B2 chain
[root@k8s-node2 ~]# iptables-save | grep 10.1.122.241
-A KUBE-SERVICES -d 10.1.122.241/32 -p tcp -m comment --comment "istio-demo/bill-service:http cluster IP" -m tcp --dport 9999 -j KUBE-SVC-PK4BNTKC2JYVE7B2
# With probability 0.5 the chain jumps to KUBE-SEP-OIO7GYZLNGRLZLYD; all remaining traffic falls through to KUBE-SEP-OXS2CP2Q2RMPFLD5. Let's see which back ends these chains lead to
[root@k8s-node2 ~]# iptables-save | grep KUBE-SVC-PK4BNTKC2JYVE7B2
-A KUBE-SVC-PK4BNTKC2JYVE7B2 -m comment --comment "istio-demo/bill-service:http" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-OIO7GYZLNGRLZLYD
-A KUBE-SVC-PK4BNTKC2JYVE7B2 -m comment --comment "istio-demo/bill-service:http" -j KUBE-SEP-OXS2CP2Q2RMPFLD5
# View the IP corresponding to KUBE-SEP-OIO7GYZLNGRLZLYD
[root@k8s-node2 ~]# iptables-save | grep KUBE-SEP-OIO7GYZLNGRLZLYD
-A KUBE-SEP-OIO7GYZLNGRLZLYD -s 10.244.1.168/32 -m comment --comment "istio-demo/bill-service:http" -j KUBE-MARK-MASQ
# View the IP corresponding to KUBE-SEP-OXS2CP2Q2RMPFLD5
[root@k8s-node2 ~]# iptables-save | grep KUBE-SEP-OXS2CP2Q2RMPFLD5
-A KUBE-SEP-OXS2CP2Q2RMPFLD5 -s 10.244.2.74/32 -m comment --comment "istio-demo/bill-service:http" -j KUBE-MARK-MASQ
# Note: each KUBE-SEP chain also contains a DNAT rule (not shown above) that rewrites the destination to the pod IP and port 80; the -s rules shown only mark traffic sent by the pod itself for masquerading
# The two chains correspond to the two bill-service pod IPs
[root@k8s-node2 ~]# kubectl get po -n istio-demo -owide
NAME                               READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
bill-service-v1-765cb46975-hmtmd   1/1     Running   0          89m   10.244.1.168   k8s-node2   <none>           <none>
bill-service-v2-6854775ffc-9n6jv   1/1     Running   0          87m   10.244.2.74    k8s-node1   <none>           <none>
front-tomcat-v1-7f8c94c6c8-lfz5m   1/1     Running   0          89m   10.244.1.169   k8s-node2   <none>           <none>
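The 0.5 in the first rule is not a fixed value. In iptables mode, kube-proxy gives the i-th of n endpoint rules (counting from 0) a probability of 1/(n-i) and leaves the last rule without a statistic match, so each endpoint ends up with an overall share of 1/n; because these are nat-table rules, the choice is made once per new connection and conntrack handles the remaining packets. The Python sketch below checks that arithmetic with exact fractions and simulates new connections walking the chain. The helper names are hypothetical, and the endpoint IPs are the two pod addresses above.

```python
import random
from collections import Counter
from fractions import Fraction


def chain_probabilities(n: int) -> list[Fraction]:
    """--probability of each rule in a KUBE-SVC chain with n endpoints (last rule: always)."""
    return [Fraction(1, n - i) for i in range(n)]


def overall_shares(probs: list[Fraction]) -> list[Fraction]:
    """Chance that each rule is the one that fires, walking the chain top to bottom."""
    remaining = Fraction(1)  # probability that no earlier rule has matched yet
    shares = []
    for p in probs:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


def pick_endpoint(endpoints: list[str], rng: random.Random) -> str:
    """Simulate one new connection: the first rule whose random test passes wins."""
    for endpoint, p in zip(endpoints, chain_probabilities(len(endpoints))):
        if rng.random() < p:  # p == 1 for the last rule, so it always matches
            return endpoint
    raise AssertionError("unreachable: the last rule is unconditional")


endpoints = ["10.244.1.168", "10.244.2.74"]  # bill-service-v1, bill-service-v2
print([str(p) for p in chain_probabilities(2)])                  # ['1/2', '1']
print([str(s) for s in overall_shares(chain_probabilities(3))])  # ['1/3', '1/3', '1/3']

rng = random.Random(0)
counts = Counter(pick_endpoint(endpoints, rng) for _ in range(10_000))
print(counts)  # roughly 5,000 connections per endpoint
```

With two endpoints this reproduces the two rules above: a 0.5 test followed by an unconditional fall-through.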

2. Summary

When the front container runs curl bill-service:9999, DNS resolution turns it into curl -s 10.1.122.241:9999. Following its routing table (route -n), the container sends the packet through the default 0.0.0.0 route to the 10.244.1.1 bridge. The bridge address is on the host, but the host has no route for the Service IP either. The scheduling is in fact performed by the iptables rules that the kube-proxy component configures, and stepping through the iptables-save output leads from the Service IP, through the 0.5-probability rule, to the bill-service pod IPs.