Network security is based on the sketch framework and selenium and openpyxl libraries to crawl the epidemic statistics and summary information of foreign countries

data sources

https://voice.baidu.com/act/newpneumonia/newpneumonia/

thinking

Because the data in the target page is dynamically loaded, the response obtained by directly initiating the request does not contain any useful data. Therefore, it is necessary to use selenium's browser driver to send the request and obtain the response containing data. At the same time, continue to observe the page and find that the initially loaded page only contains the first part of the data, and the remaining data needs to manually click "expand all" to load all the data, so you also need to use the action chain to simulate the click operation. The obtained responses are parsed and stored in excel for subsequent use.

The starting url is the target website, but the response obtained from this request does not contain data, so the response cannot be directly used for parsing. It needs to be intercepted and tampered with in the download middleware. After the middleware is intercepted, the browser driver sends a request to the website again, and then uses the action chain to find and simulate the click "expand all", obtain the response containing complete data, and then encapsulate the response and return it to the crawler for analysis.

def process_response(self, request, response, spider):

# Called with the response returned from the downloader.

# Must either;

# - return a Response object

# - return a Request object

# - or raise IgnoreRequest

print('Start request')

spider.bro.get(request.url) # Intercept the response and re request to obtain the complete response

actions = ActionChains(spider.bro)

actions.click(spider.bro.find_element_by_xpath('//*[@id="foreignTable"]/div/span')).perform() # click "expand all"

actions.release() # Release action chain

page_text = spider.bro.page_source

new_response = http.HtmlResponse(url=request.url, body=page_text, encoding='utf-8', request=request) # Tamper with the complete page that responds to the new request

return new_response

The response is parsed using xpath, and the parsed data is stored in item and submitted to the pipeline.

class NewCoronaCrawlerItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

area = scrapy.Field() # region

new = scrapy.Field() # newly added

total = scrapy.Field() # Cumulative

cured = scrapy.Field() # cure

death = scrapy.Field() # death

def parse(self, response, **kwargs):

print('Start parsing')

tr_list = response.xpath('//*[@id="foreignTable"]/table/tbody/tr/td/table/tbody/tr')

for tr in tr_list:

data = tr.xpath('./td//text()').getall()

item = NewCoronaCrawlerItem()

item['area'] = data[0]

item['new'] = data[1]

item['total'] = data[2]

item['cured'] = data[3]

item['death'] = data[4]

yield item

In the pipeline, open the excel table with openpyxl, create a new worksheet and name it "year month day hour minute second", write and save all the data, and then close it.

class NewCoronaCrawlerPipeline:

wb = None

ws = None

def open_spider(self, spider):

self.wb = openpyxl.load_workbook(r'Summary of the epidemic situation of New Coronavirus pneumonia abroad.xlsx') # Open workbook

self.ws = self.wb.create_sheet(time.strftime('%Y-%m-%d_%H-%M-%S', time.localtime(time.time()))) # Create a new worksheet named mm / DD / yyyy, hours, minutes and seconds

self.ws.append(['region', 'newly added', 'Cumulative', 'cure', 'death']) # Insert header

def process_item(self, item, spider):

self.ws.append([item['area'], int(item['new']), int(item['total']), int(item['cured']), int(item['death'])]) # Insert a row of data

return item

def close_spider(self, spider):

print('Save data')

self.wb.save(r'Summary of the epidemic situation of New Coronavirus pneumonia abroad.xlsx')

self.wb.close()

print('Save complete!')

At the same time, in order to improve efficiency, the browser driver can be set as a headless browser, and avoid detection can be set to improve security.

def __init__(self):

super().__init__()

# Set to headless browser

chrome_options = Options()

chrome_options.add_argument('--headless')

chrome_options.add_argument('--disable-gpu')

# Circumvention detection

option = webdriver.ChromeOptions()

option.add_experimental_option('excludeSwitches', ['enable-automation'])

self.bro = webdriver.Chrome(executable_path=r'chromedriver.exe', options=option, chrome_options=chrome_options)

For the initial request, you need to modify the information in the configuration file, which does not comply with robots Txt protocol, and conduct UA camouflage. At the same time, open the download middleware and pipeline, and modify the displayed log level.

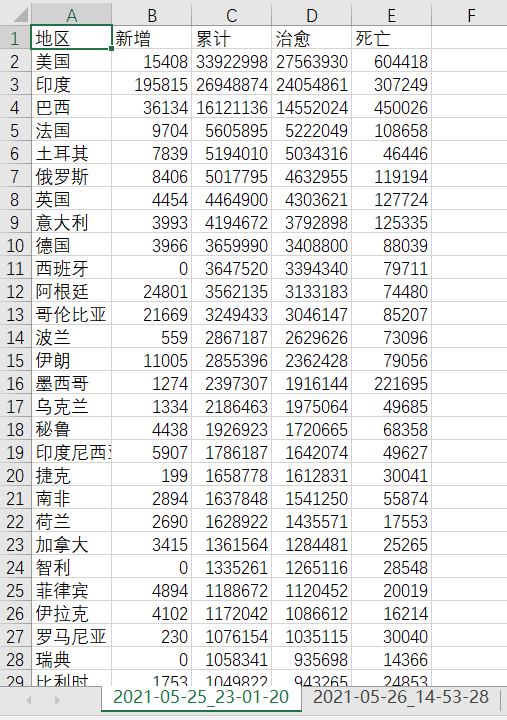

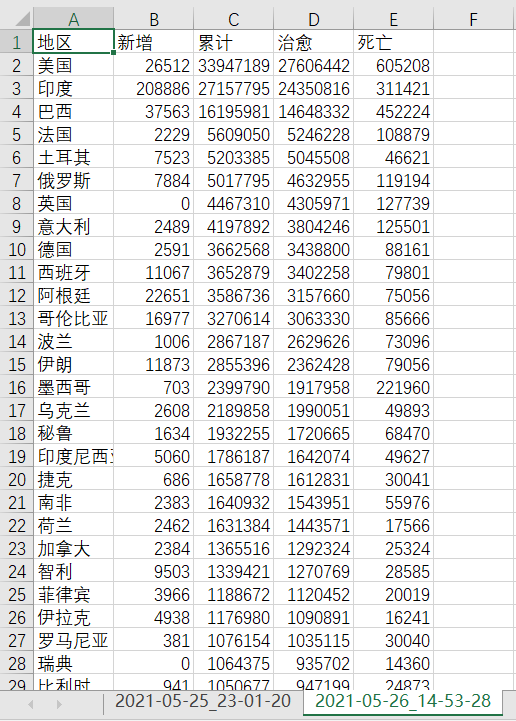

result