Preface

In the previous article, I analyzed MediaCodec in detail. NuPlayer, however, does not call MediaCodec directly: it wraps it in a layer called NuPlayerDecoder (NuPlayer::Decoder). This article looks at how the two communicate.

Because NuPlayerDecoder wraps MediaCodec, it can be thought of as the "app" from MediaCodec's point of view: it calls MediaCodec's API to get its work done. Let's look at this process in detail.

1. Starting the decoding sequence

Decoding actually starts with setRenderer(), which is called in NuPlayer::onStart():

```cpp
mVideoDecoder->setRenderer(mRenderer);
```

This goes to NuPlayer::DecoderBase::setRenderer(), which posts a kWhatSetRenderer message. The handler for that message then calls onSetRenderer():

```cpp
void NuPlayer::Decoder::onSetRenderer(const sp<Renderer> &renderer) {
    bool hadNoRenderer = (mRenderer == NULL);
    mRenderer = renderer;
    if (hadNoRenderer && mRenderer != NULL) {
        // this means that the widevine legacy source is ready
        onRequestInputBuffers();
    }
}
```

onSetRenderer() calls onRequestInputBuffers(). This function is quite clever. Here is its logic:

```cpp
void NuPlayer::DecoderBase::onRequestInputBuffers() {
    if (mRequestInputBuffersPending) {
        return;
    }

    // doRequestBuffers() return true if we should request more data
    if (doRequestBuffers()) {
        mRequestInputBuffersPending = true;

        sp<AMessage> msg = new AMessage(kWhatRequestInputBuffers, this);
        msg->post(2 * 1000ll);
    }
}

void NuPlayer::DecoderBase::onMessageReceived(const sp<AMessage> &msg) {
    switch (msg->what()) {
        ...
        case kWhatRequestInputBuffers:
        {
            mRequestInputBuffersPending = false;
            onRequestInputBuffers();
            break;
        }
        ...
    }
}
```

See the logic? onRequestInputBuffers() posts a kWhatRequestInputBuffers message with a 2 ms delay, and the handler for that message calls onRequestInputBuffers() again. The cycle keeps repeating. Surprising, isn't it?

The only thing that stops this loop is the if (doRequestBuffers()) check inside onRequestInputBuffers(). The loop ends only when doRequestBuffers() returns false. The mRequestInputBuffersPending flag also guarantees that at most one kWhatRequestInputBuffers message is pending at any time.
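The self-reposting pattern is easier to see in isolation. The following minimal C++ sketch makes several simplifying assumptions. A hypothetical single-threaded `ToyLooper` with a simulated clock stands in for ALooper. `ToyDecoder` mirrors only the shape of onRequestInputBuffers(). doRequestBuffers() is replaced by a callback that reports whether more data is needed. The `requestPending` flag plays the role of mRequestInputBuffersPending.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <utility>

// Hypothetical single-threaded looper: messages are ordered by a simulated
// clock in microseconds instead of being delivered by a real thread.
struct ToyLooper {
    int64_t nowUs = 0;
    std::multimap<int64_t, std::function<void()>> queue;

    void post(std::function<void()> fn, int64_t delayUs = 0) {
        queue.emplace(nowUs + delayUs, std::move(fn));
    }
    // Deliver messages in time order until nothing is left.
    void run() {
        while (!queue.empty()) {
            auto it = queue.begin();
            nowUs = it->first;
            std::function<void()> fn = std::move(it->second);
            queue.erase(it);
            fn();
        }
    }
};

// Same shape as DecoderBase::onRequestInputBuffers(): while more data is
// needed, re-post a request message 2 ms later.
struct ToyDecoder {
    ToyDecoder(ToyLooper &l, std::function<bool()> check)
        : looper(l), doRequestBuffers(std::move(check)) {}

    ToyLooper &looper;
    std::function<bool()> doRequestBuffers;  // stand-in for the real check
    bool requestPending = false;             // like mRequestInputBuffersPending
    int requestsPosted = 0;

    void onRequestInputBuffers() {
        if (requestPending) {
            return;  // a request message is already in flight
        }
        if (doRequestBuffers()) {
            requestPending = true;
            ++requestsPosted;
            // The handler body mirrors the kWhatRequestInputBuffers case.
            looper.post([this] {
                requestPending = false;
                onRequestInputBuffers();
            }, 2 * 1000);
        }
    }
};

struct LoopResult {
    int requestsPosted;
    int64_t endTimeUs;
};

// The source "would block" for the first `blockedPolls` checks, then has data.
LoopResult simulate(int blockedPolls) {
    ToyLooper looper;
    int polls = 0;
    ToyDecoder dec(looper, [&polls, blockedPolls] { return polls++ < blockedPolls; });
    dec.onRequestInputBuffers();
    dec.onRequestInputBuffers();  // no-op if a request is already pending
    looper.run();
    return {dec.requestsPosted, looper.nowUs};
}
```

With `simulate(3)`, the check succeeds three times, so three messages are posted 2 ms apart and the loop stops at 6000 µs. The second direct call is absorbed by the pending flag.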

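Now let's look at doRequestBuffers(). Its core is a while loop that supplies data to every free input buffer. The function returns true when more data is needed: the source had nothing to give yet (-EWOULDBLOCK) and feedMoreTSData() succeeds.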

```cpp
/*
 * returns true if we should request more data
 */
bool NuPlayer::Decoder::doRequestBuffers() {
    // mRenderer is only NULL if we have a legacy widevine source that
    // is not yet ready. In this case we must not fetch input.
    if (isDiscontinuityPending() || mRenderer == NULL) {
        return false;
    }
    status_t err = OK;
    while (err == OK && !mDequeuedInputBuffers.empty()) {
        size_t bufferIx = *mDequeuedInputBuffers.begin();
        sp<AMessage> msg = new AMessage();
        msg->setSize("buffer-ix", bufferIx);
        err = fetchInputData(msg); // pull one access unit from the Source into msg
        if (err != OK && err != ERROR_END_OF_STREAM) {
            // if EOS, need to queue EOS buffer
            break;
        }
        mDequeuedInputBuffers.erase(mDequeuedInputBuffers.begin());

        if (!mPendingInputMessages.empty()
                || !onInputBufferFetched(msg)) {
            mPendingInputMessages.push_back(msg); // not queued yet: keep the fetched data pending, in order
        }
    }

    return err == -EWOULDBLOCK
            && mSource->feedMoreTSData() == OK;
}
```

NuPlayer::Decoder::fetchInputData() calls mSource->dequeueAccessUnit(). This pulls an access unit of compressed data from the Source (GenericSource here) and stores it in the message.

Inside NuPlayer::Decoder::onInputBufferFetched(), the data is queued into MediaCodec's input buffers through mCodec->queueInputBuffer().
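The draining logic of doRequestBuffers() can be modeled on its own. The sketch below is a simplified, hypothetical model, not the AOSP code. `Status`, `InputMsg` and `ToyInputPump` are invented names. The Source and MediaCodec are replaced by `std::function` callbacks, and the feedMoreTSData() condition is left out. It does keep the two properties that matter:

- A slot is consumed only when data (or EOS) was actually fetched.
- Once any message is pending, newer messages queue behind it, so input order is preserved.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>

// Hypothetical stand-ins for the status_t values used in doRequestBuffers().
enum class Status { Ok, WouldBlock, EndOfStream, Error };

struct InputMsg {
    size_t bufferIx;  // like "buffer-ix"
    int payload;      // simplified access unit
};

struct ToyInputPump {
    std::deque<size_t> dequeuedInputBuffers;    // free codec input slots
    std::deque<InputMsg> pendingInputMessages;  // fetched but not yet queued
    std::function<Status(InputMsg &)> fetchInputData;            // source side
    std::function<bool(const InputMsg &)> onInputBufferFetched;  // codec side

    // Returns true when the source had nothing yet, i.e. poll again later.
    bool doRequestBuffers() {
        Status err = Status::Ok;
        while (err == Status::Ok && !dequeuedInputBuffers.empty()) {
            InputMsg msg{dequeuedInputBuffers.front(), 0};
            err = fetchInputData(msg);
            if (err != Status::Ok && err != Status::EndOfStream) {
                break;  // keep the slot; on EOS the slot is still consumed
            }
            dequeuedInputBuffers.pop_front();
            // Never overtake older pending messages: keep input in order.
            if (!pendingInputMessages.empty() || !onInputBufferFetched(msg)) {
                pendingInputMessages.push_back(msg);
            }
        }
        // The real code additionally requires mSource->feedMoreTSData() == OK.
        return err == Status::WouldBlock;
    }
};

struct PumpResult {
    bool pollAgain;
    size_t queued;
    size_t slotsLeft;
};

// `available` access units in the source, `slots` free codec input buffers.
PumpResult demoPump(int available, size_t slots) {
    ToyInputPump pump;
    for (size_t i = 0; i < slots; ++i) pump.dequeuedInputBuffers.push_back(i);
    int next = 0;
    size_t queued = 0;
    pump.fetchInputData = [&](InputMsg &m) {
        if (next >= available) return Status::WouldBlock;
        m.payload = next++;
        return Status::Ok;
    };
    pump.onInputBufferFetched = [&](const InputMsg &) {
        ++queued;
        return true;
    };
    bool again = pump.doRequestBuffers();
    return {again, queued, pump.dequeuedInputBuffers.size()};
}
```

With two units available and three free slots, two units are queued, one slot stays free, and the function asks to be polled again. This is exactly the case that keeps the 2 ms loop alive.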

2. The loop logic

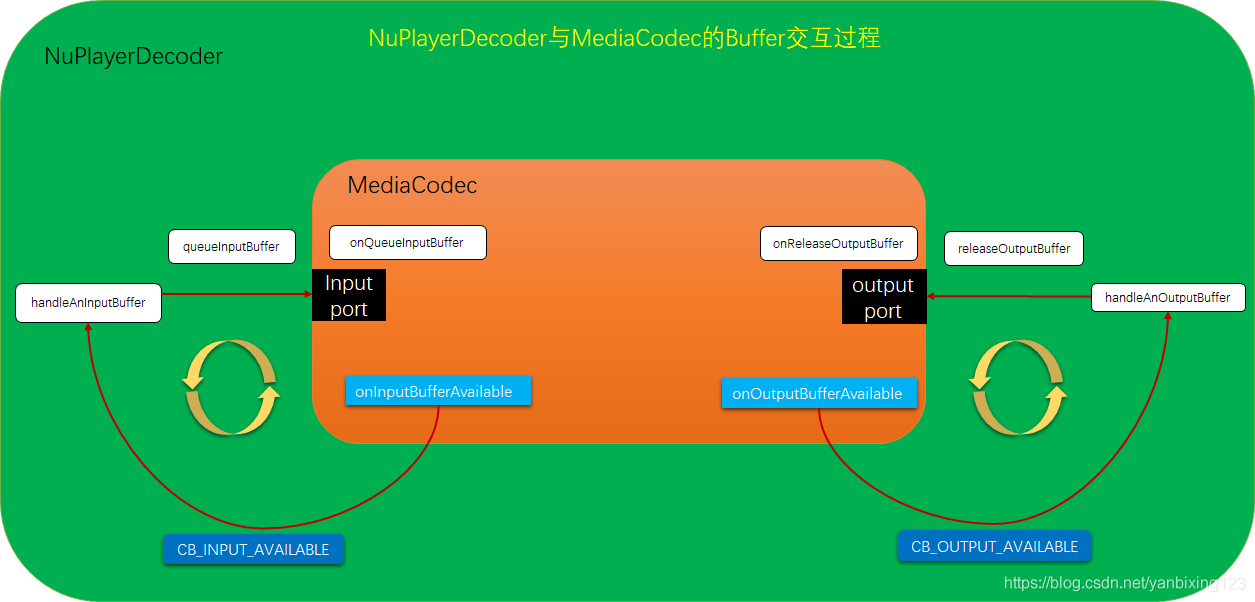

Take a look at the figure above. My understanding is that NuPlayerDecoder is a layer wrapped around MediaCodec, and "input" and "output" are always relative to MediaCodec. NuPlayerDecoder and MediaCodec interact through two ports, and MediaCodec keeps a queue of available buffers for each port. When a buffer becomes available on the input port, MediaCodec::onInputBufferAvailable() is called. It sends a CB_INPUT_AVAILABLE message to NuPlayerDecoder to report that MediaCodec's input port has a free buffer. NuPlayerDecoder then handles it in NuPlayer::Decoder::handleAnInputBuffer().

What does "handling it" mean here? MediaCodec's job is decoding. So the Decoder takes compressed data from the demuxer (MediaExtractor) and hands it to MediaCodec for processing.

MediaCodec then processes this data, for example by decoding an H.264 stream into YUV frames, and the data moves internally from the input port to the output port. At that point MediaCodec::onOutputBufferAvailable() is triggered. It sends a CB_OUTPUT_AVAILABLE message to tell NuPlayerDecoder that MediaCodec's output port has a buffer. When NuPlayerDecoder receives this message, it calls NuPlayer::Decoder::handleAnOutputBuffer(). What does that function do with the buffer?

The stage after the Decoder is rendering, so the next step is to send the decoded data to the Renderer.

The whole process is a loop that repeats step by step, buffer after buffer.
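The cycle can be simulated with a single event queue. This is a hypothetical toy, not MediaCodec's API, and it makes these simplifications:

- Each input slot is offered once at start.
- Consuming an input immediately produces an output event and frees the slot again.
- "Decoding" just doubles a number.
- The output event reuses the input slot's index purely for convenience.
- Rendering is reduced to recording the value.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical event types standing in for MediaCodec's callback IDs.
enum class Cb { InputAvailable, OutputAvailable };

struct Event {
    Cb id;
    size_t index;  // slot index (reused for outputs only in this toy)
    int data;      // decoded payload for output events
};

// Toy model of the NuPlayerDecoder <-> MediaCodec cycle on one queue.
std::vector<int> runCycle(const std::vector<int> &stream, size_t numSlots) {
    std::deque<Event> events;
    std::vector<int> rendered;
    size_t nextUnit = 0;

    // At start the codec offers every input slot (like onInputBufferAvailable).
    for (size_t i = 0; i < numSlots; ++i) events.push_back({Cb::InputAvailable, i, 0});

    while (!events.empty()) {
        Event ev = events.front();
        events.pop_front();
        if (ev.id == Cb::InputAvailable) {
            // handleAnInputBuffer: fill the slot from the "demuxer" if possible.
            if (nextUnit < stream.size()) {
                int unit = stream[nextUnit++];
                // The codec "decodes" the unit and frees the input slot again.
                events.push_back({Cb::OutputAvailable, ev.index, unit * 2});
                events.push_back({Cb::InputAvailable, ev.index, 0});
            }
        } else {
            // handleAnOutputBuffer -> Renderer -> buffer released to the codec.
            rendered.push_back(ev.data);
        }
    }
    return rendered;
}
```

Frames come out in input order. The loop also ends on its own once the stream is exhausted, because free input slots are simply not refilled.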

2.1 Establishing the asynchronous message channel

First, how does NuPlayerDecoder set up message communication with MediaCodec?

In the NuPlayer::Decoder::onConfigure() function, there is the following code:

```cpp
sp<AMessage> reply = new AMessage(kWhatCodecNotify, this); // a message of type kWhatCodecNotify
mCodec->setCallback(reply);
```

Then, in MediaCodec::setCallback():

```cpp
status_t MediaCodec::setCallback(const sp<AMessage> &callback) {
    sp<AMessage> msg = new AMessage(kWhatSetCallback, this);
    msg->setMessage("callback", callback);

    sp<AMessage> response;
    return PostAndAwaitResponse(msg, &response);
}

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {
    switch (msg->what()) {
        case kWhatSetCallback:
        {
            sp<AReplyToken> replyID;
            CHECK(msg->senderAwaitsResponse(&replyID));
            ...
            sp<AMessage> callback;
            CHECK(msg->findMessage("callback", &callback));

            mCallback = callback; // store the callback in the member variable mCallback
            ...
            sp<AMessage> response = new AMessage;
            response->postReply(replyID);
            break;
        }
    }
}
```

This stores NuPlayerDecoder's reply message in MediaCodec's mCallback.
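setCallback() does not write mCallback directly. Instead, it posts kWhatSetCallback to MediaCodec's own looper and blocks in PostAndAwaitResponse() until the handler replies. The sketch below reproduces that synchronous request/reply pattern with the C++ standard library. It assumes a hypothetical `ToyLooper` (one worker thread) in place of ALooper, and `std::promise` in place of AReplyToken/postReply(). The callback is reduced to a string.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <utility>

// Hypothetical minimal looper: one worker thread that runs posted tasks in order.
class ToyLooper {
public:
    ToyLooper() : worker_([this] { loop(); }) {}
    ~ToyLooper() {
        post(nullptr);  // an empty task tells the worker to exit
        worker_.join();
    }
    void post(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mu_);
            tasks_.push(std::move(task));
        }
        cv_.notify_one();
    }

private:
    void loop() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mu_);
                cv_.wait(lock, [this] { return !tasks_.empty(); });
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            if (!task) return;
            task();
        }
    }

    std::mutex mu_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> tasks_;
    std::thread worker_;  // declared last so the members above exist first
};

// Like MediaCodec::setCallback(): post a request to the codec's own thread and
// block until that thread has stored the callback and replied.
class ToyCodec {
public:
    void setCallback(const std::string &callback) {
        auto reply = std::make_shared<std::promise<void>>();
        std::future<void> done = reply->get_future();
        looper_.post([this, reply, callback] {
            callback_ = callback;  // the callback is only touched on the looper thread
            reply->set_value();    // like response->postReply(replyID)
        });
        done.wait();               // like PostAndAwaitResponse()
    }
    std::string callback() {
        auto reply = std::make_shared<std::promise<std::string>>();
        std::future<std::string> result = reply->get_future();
        looper_.post([this, reply] { reply->set_value(callback_); });
        return result.get();
    }

private:
    std::string callback_;
    ToyLooper looper_;  // destroyed first, so queued tasks finish before callback_ goes away
};

std::string demoSetCallback(const std::string &name) {
    ToyCodec codec;
    codec.setCallback(name);
    return codec.callback();
}
```

Routing every access through the looper thread keeps the callback field single-threaded without extra locks. That appears to be the reason MediaCodec posts a message here instead of assigning the field from the caller's thread.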

When MediaCodec needs to notify NuPlayerDecoder through onInputBufferAvailable(), it uses mCallback to send the message:

```cpp
void MediaCodec::onInputBufferAvailable() {
    int32_t index;
    while ((index = dequeuePortBuffer(kPortIndexInput)) >= 0) {
        sp<AMessage> msg = mCallback->dup();
        msg->setInt32("callbackID", CB_INPUT_AVAILABLE);
        msg->setInt32("index", index);
        msg->post();
    }
}
```

Each message is a copy (dup()) of the kWhatCodecNotify message registered by NuPlayerDecoder. It is therefore delivered to the Decoder's own message queue and handled in NuPlayer::Decoder::onMessageReceived():

```cpp
void NuPlayer::Decoder::onMessageReceived(const sp<AMessage> &msg) {
    ALOGV("[%s] onMessage: %s", mComponentName.c_str(), msg->debugString().c_str());

    switch (msg->what()) {
        case kWhatCodecNotify:
        {
            int32_t cbID;
            CHECK(msg->findInt32("callbackID", &cbID));
            ...
            switch (cbID) {
                case MediaCodec::CB_INPUT_AVAILABLE:
                {
                    int32_t index;
                    CHECK(msg->findInt32("index", &index));

                    handleAnInputBuffer(index);
                    break;
                }
                ... // other callback IDs
            }
            break;
        }
        ...
    }
}
```
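Every callback is a dup() of the kWhatCodecNotify message that NuPlayerDecoder registered. As a result, all codec events arrive under a single `what` code and are told apart by `callbackID`. The sketch below models this with an invented `ToyMessage` type. The numeric callback-ID values are illustrative assumptions, and calling onMessageReceived() directly stands in for posting to a looper.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical, heavily simplified AMessage: a "what" code plus int fields.
struct ToyMessage {
    int what = 0;
    std::map<std::string, int> ints;
    ToyMessage dup() const { return *this; }  // independent copy, as dup() gives
};

enum { kWhatCodecNotify = 1 };  // decoder-side message type
// Illustrative values for MediaCodec's callback IDs.
enum { CB_INPUT_AVAILABLE = 1, CB_OUTPUT_AVAILABLE = 2 };

struct ToyDecoder {
    int inputsHandled = 0;
    int outputsHandled = 0;
    int lastIndex = -1;

    // Mirrors the kWhatCodecNotify branch of Decoder::onMessageReceived().
    void onMessageReceived(const ToyMessage &msg) {
        if (msg.what != kWhatCodecNotify) return;
        switch (msg.ints.at("callbackID")) {
            case CB_INPUT_AVAILABLE:
                ++inputsHandled;  // real code: handleAnInputBuffer(index)
                lastIndex = msg.ints.at("index");
                break;
            case CB_OUTPUT_AVAILABLE:
                ++outputsHandled;  // real code: handleAnOutputBuffer(...)
                lastIndex = msg.ints.at("index");
                break;
            default:
                break;
        }
    }
};

// Codec side, like MediaCodec::onInputBufferAvailable(): copy the registered
// template, tag it, and deliver it (the real code posts to a looper).
void notifyInputAvailable(const ToyMessage &callback, int index, ToyDecoder &dec) {
    ToyMessage msg = callback.dup();
    msg.ints["callbackID"] = CB_INPUT_AVAILABLE;
    msg.ints["index"] = index;
    dec.onMessageReceived(msg);
}

ToyDecoder demoDispatch() {
    ToyMessage callback;               // like new AMessage(kWhatCodecNotify, this)
    callback.what = kWhatCodecNotify;
    ToyDecoder dec;
    notifyInputAvailable(callback, 0, dec);
    notifyInputAvailable(callback, 3, dec);
    assert(callback.ints.empty());     // the template itself is never modified
    return dec;
}
```

Copying the template, rather than filling it in place, is what lets the codec send many notifications that do not overwrite each other's fields.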

2.2 Feeding input data to MediaCodec

First, MediaCodec::onInputBufferAvailable() sends a CB_INPUT_AVAILABLE message to NuPlayerDecoder, which handles it in handleAnInputBuffer():

```cpp
bool NuPlayer::Decoder::handleAnInputBuffer(size_t index) {
    if (isDiscontinuityPending()) {
        return false;
    }

    sp<ABuffer> buffer;
    mCodec->getInputBuffer(index, &buffer); // first, get the available input buffer from MediaCodec

    if (buffer == NULL) {
        handleError(UNKNOWN_ERROR);
        return false;
    }

    if (index >= mInputBuffers.size()) {
        for (size_t i = mInputBuffers.size(); i <= index; ++i) {
            mInputBuffers.add();
            mMediaBuffers.add();
            mInputBufferIsDequeued.add();
            mMediaBuffers.editItemAt(i) = NULL;
            mInputBufferIsDequeued.editItemAt(i) = false;
        }
    }
    mInputBuffers.editItemAt(index) = buffer;

    //CHECK_LT(bufferIx, mInputBuffers.size());

    if (mMediaBuffers[index] != NULL) {
        mMediaBuffers[index]->release();
        mMediaBuffers.editItemAt(index) = NULL;
    }
    mInputBufferIsDequeued.editItemAt(index) = true;

    if (!mCSDsToSubmit.isEmpty()) {
        sp<AMessage> msg = new AMessage();
        msg->setSize("buffer-ix", index);

        sp<ABuffer> buffer = mCSDsToSubmit.itemAt(0);
        ALOGI("[%s] resubmitting CSD", mComponentName.c_str());
        msg->setBuffer("buffer", buffer);
        mCSDsToSubmit.removeAt(0);
        CHECK(onInputBufferFetched(msg));
        return true;
    }

    while (!mPendingInputMessages.empty()) {
        sp<AMessage> msg = *mPendingInputMessages.begin();
        if (!onInputBufferFetched(msg)) { // queue pending data into MediaCodec's input
            // this message could not be queued yet: stop, and keep it at the head of the queue
            break;
        }
        mPendingInputMessages.erase(mPendingInputMessages.begin());
    }

    if (!mInputBufferIsDequeued.editItemAt(index)) {
        return true;
    }

    mDequeuedInputBuffers.push_back(index);

    onRequestInputBuffers();
    return true;
}
```
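The first half of handleAnInputBuffer() is pure bookkeeping. MediaCodec hands out buffer indices without announcing how many exist, so the decoder grows its per-slot arrays on demand and flags which slots it currently owns. The following is a minimal sketch of that idea. `InputSlots` and its methods are hypothetical names. `std::vector::resize` replaces the `add()` loop, and strings and raw pointers stand in for `sp<ABuffer>` and `MediaBuffer *`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical per-slot bookkeeping mirroring the decoder's three parallel
// Vectors: mInputBuffers, mMediaBuffers and mInputBufferIsDequeued.
struct InputSlots {
    std::vector<std::string> buffers;  // stands in for sp<ABuffer>
    std::vector<const void *> media;   // stands in for MediaBuffer *
    std::vector<bool> isDequeued;

    // Indices are not announced up front, so all arrays grow together the
    // first time a larger index shows up (same effect as the add() loop).
    void onInputAvailable(size_t index, const std::string &buffer) {
        if (index >= buffers.size()) {
            buffers.resize(index + 1);
            media.resize(index + 1, nullptr);
            isDequeued.resize(index + 1, false);
        }
        buffers[index] = buffer;
        media[index] = nullptr;    // the real code release()s a held MediaBuffer first
        isDequeued[index] = true;  // the decoder now owns this slot
    }

    // After queueInputBuffer() succeeds, the slot belongs to the codec again.
    void onQueued(size_t index) {
        assert(index < isDequeued.size() && isDequeued[index]);
        isDequeued[index] = false;
    }

    size_t ownedCount() const {
        size_t n = 0;
        for (bool owned : isDequeued) n += owned ? 1 : 0;
        return n;
    }
};

InputSlots demoSlots() {
    InputSlots s;
    s.onInputAvailable(2, "buf2");  // indices may arrive out of order
    s.onInputAvailable(0, "buf0");
    s.onQueued(2);
    return s;
}
```

Slot 1 exists but was never handed out, so it stays empty and not owned. This is the same state the real code gets from initializing new entries to NULL and false.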

handleAnInputBuffer() records the free slot in mDequeuedInputBuffers and then calls onRequestInputBuffers(). That is where the real loop lives:

```cpp
void NuPlayer::DecoderBase::onRequestInputBuffers() {
    if (mRequestInputBuffersPending) {
        return;
    }

    // doRequestBuffers() return true if we should request more data
    if (doRequestBuffers()) {
        mRequestInputBuffersPending = true;

        sp<AMessage> msg = new AMessage(kWhatRequestInputBuffers, this);
        msg->post(2 * 1000ll);
    }
}
```

If more data is still needed, doRequestBuffers() returns true and a kWhatRequestInputBuffers message is posted. Its handler calls onRequestInputBuffers() again, so the loop continues. The core is therefore doRequestBuffers():

```cpp
bool NuPlayer::Decoder::doRequestBuffers() {
    // mRenderer is only NULL if we have a legacy widevine source that
    // is not yet ready. In this case we must not fetch input.
    if (isDiscontinuityPending() || mRenderer == NULL) {
        return false;
    }
    status_t err = OK;
    while (err == OK && !mDequeuedInputBuffers.empty()) {
        size_t bufferIx = *mDequeuedInputBuffers.begin();
        sp<AMessage> msg = new AMessage();
        msg->setSize("buffer-ix", bufferIx);
        err = fetchInputData(msg);
        if (err != OK && err != ERROR_END_OF_STREAM) {
            // if EOS, need to queue EOS buffer
            break;
        }
        mDequeuedInputBuffers.erase(mDequeuedInputBuffers.begin());

        if (!mPendingInputMessages.empty()
                || !onInputBufferFetched(msg)) {
            mPendingInputMessages.push_back(msg);
        }
    }

    return err == -EWOULDBLOCK
            && mSource->feedMoreTSData() == OK;
}
```

Two functions matter here. fetchInputData(msg) takes data from the Source. onInputBufferFetched() copies that data into a codec input buffer and passes it to MediaCodec. Let's see how onInputBufferFetched() does this:

```cpp
bool NuPlayer::Decoder::onInputBufferFetched(const sp<AMessage> &msg) {
    size_t bufferIx;
    CHECK(msg->findSize("buffer-ix", &bufferIx));
    CHECK_LT(bufferIx, mInputBuffers.size());
    sp<ABuffer> codecBuffer = mInputBuffers[bufferIx];

    sp<ABuffer> buffer;
    bool hasBuffer = msg->findBuffer("buffer", &buffer);

    // handle widevine classic source - that fills an arbitrary input buffer
    MediaBuffer *mediaBuffer = NULL;
    if (hasBuffer) {
        mediaBuffer = (MediaBuffer *)(buffer->getMediaBufferBase());
        if (mediaBuffer != NULL) {
            // likely filled another buffer than we requested: adjust buffer index
            size_t ix;
            for (ix = 0; ix < mInputBuffers.size(); ix++) {
                const sp<ABuffer> &buf = mInputBuffers[ix];
                if (buf->data() == mediaBuffer->data()) {
                    // all input buffers are dequeued on start, hence the check
                    if (!mInputBufferIsDequeued[ix]) {
                        ALOGV("[%s] received MediaBuffer for #%zu instead of #%zu",
                                mComponentName.c_str(), ix, bufferIx);
                        mediaBuffer->release();
                        return false;
                    }

                    // TRICKY: need buffer for the metadata, so instead, set
                    // codecBuffer to the same (though incorrect) buffer to
                    // avoid a memcpy into the codecBuffer
                    codecBuffer = buffer;
                    codecBuffer->setRange(
                            mediaBuffer->range_offset(),
                            mediaBuffer->range_length());
                    bufferIx = ix;
                    break;
                }
            }
            CHECK(ix < mInputBuffers.size());
        }
    }

    if (buffer == NULL /* includes !hasBuffer */) {
        int32_t streamErr = ERROR_END_OF_STREAM;
        CHECK(msg->findInt32("err", &streamErr) || !hasBuffer);

        CHECK(streamErr != OK);

        // attempt to queue EOS
        status_t err = mCodec->queueInputBuffer(
                bufferIx,
                0,
                0,
                0,
                MediaCodec::BUFFER_FLAG_EOS);
        if (err == OK) {
            mInputBufferIsDequeued.editItemAt(bufferIx) = false;
        } else if (streamErr == ERROR_END_OF_STREAM) {
            streamErr = err;
            // err will not be ERROR_END_OF_STREAM
        }

        if (streamErr != ERROR_END_OF_STREAM) {
            ALOGE("Stream error for %s (err=%d), EOS %s queued",
                    mComponentName.c_str(),
                    streamErr,
                    err == OK ? "successfully" : "unsuccessfully");
            handleError(streamErr);
        }
    } else {
        sp<AMessage> extra;
        if (buffer->meta()->findMessage("extra", &extra) && extra != NULL) {
            int64_t resumeAtMediaTimeUs;
            if (extra->findInt64(
                        "resume-at-mediaTimeUs", &resumeAtMediaTimeUs)) {
                ALOGI("[%s] suppressing rendering until %lld us",
                        mComponentName.c_str(), (long long)resumeAtMediaTimeUs);
                mSkipRenderingUntilMediaTimeUs = resumeAtMediaTimeUs;
            }
        }

        int64_t timeUs = 0;
        uint32_t flags = 0;
        CHECK(buffer->meta()->findInt64("timeUs", &timeUs));

        int32_t eos, csd;
        // we do not expect SYNCFRAME for decoder
        if (buffer->meta()->findInt32("eos", &eos) && eos) {
            flags |= MediaCodec::BUFFER_FLAG_EOS;
        } else if (buffer->meta()->findInt32("csd", &csd) && csd) {
            flags |= MediaCodec::BUFFER_FLAG_CODECCONFIG;
        }

        // copy into codec buffer (this is where the data is actually copied)
        if (buffer != codecBuffer) {
            CHECK_LE(buffer->size(), codecBuffer->capacity());
            codecBuffer->setRange(0, buffer->size());
            memcpy(codecBuffer->data(), buffer->data(), buffer->size());
        }

        status_t err = mCodec->queueInputBuffer( // hand the buffer to the codec for decoding
                bufferIx,
                codecBuffer->offset(),
                codecBuffer->size(),
                timeUs,
                flags);
        if (err != OK) {
            if (mediaBuffer != NULL) {
                mediaBuffer->release();
            }
            ALOGE("Failed to queue input buffer for %s (err=%d)",
                    mComponentName.c_str(), err);
            handleError(err);
        } else {
            mInputBufferIsDequeued.editItemAt(bufferIx) = false;
            if (mediaBuffer != NULL) {
                CHECK(mMediaBuffers[bufferIx] == NULL);
                mMediaBuffers.editItemAt(bufferIx) = mediaBuffer;
            }
        }
    }
    return true;
}
```

At this point, the data has been handed over to MediaCodec, and the compressed input for decoding is ready.
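The flag handling in onInputBufferFetched() reduces to a small decision table:

- With no buffer, queue an empty buffer that carries only the EOS flag.
- Otherwise, an "eos" marker takes precedence over a "csd" (codec config) marker.
- SYNCFRAME is never set on the decoder's input.

The sketch below captures only that table. The flag values are assumed to mirror MediaCodec's BUFFER_FLAG_* constants. `AccessUnitMeta`, `QueueArgs` and `toQueueArgs` are hypothetical names, and the widevine buffer-swapping path is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Flag values assumed to mirror MediaCodec's BUFFER_FLAG_* constants.
enum : uint32_t {
    BUFFER_FLAG_SYNCFRAME = 1,  // never set for decoder input
    BUFFER_FLAG_CODECCONFIG = 2,
    BUFFER_FLAG_EOS = 4,
};

// Hypothetical summary of what the decoder learns from a fetched message.
struct AccessUnitMeta {
    bool hasBuffer;  // false => the source reported EOS or an error
    bool eos;        // meta "eos"
    bool csd;        // meta "csd"
    int64_t timeUs;  // meta "timeUs"
};

// What would be passed on to queueInputBuffer().
struct QueueArgs {
    size_t size;
    int64_t timeUs;
    uint32_t flags;
};

QueueArgs toQueueArgs(const AccessUnitMeta &au, size_t payloadSize) {
    if (!au.hasBuffer) {
        return {0, 0, BUFFER_FLAG_EOS};  // empty buffer that only carries EOS
    }
    uint32_t flags = 0;
    if (au.eos) {
        flags |= BUFFER_FLAG_EOS;
    } else if (au.csd) {
        flags |= BUFFER_FLAG_CODECCONFIG;
    }
    return {payloadSize, au.timeUs, flags};
}
```

Note that a buffer marked both "eos" and "csd" is queued as EOS only, because of the else-if in the original code.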

2.3 Data flow after MediaCodec decodes

When MediaCodec's output port has data, MediaCodec::onOutputBufferAvailable() is called. It sends a CB_OUTPUT_AVAILABLE message to NuPlayerDecoder, which is also handled in NuPlayer::Decoder::onMessageReceived():

```cpp
case MediaCodec::CB_OUTPUT_AVAILABLE:
{
    int32_t index;
    size_t offset;
    size_t size;
    int64_t timeUs;
    int32_t flags;

    CHECK(msg->findInt32("index", &index));
    CHECK(msg->findSize("offset", &offset));
    CHECK(msg->findSize("size", &size));
    CHECK(msg->findInt64("timeUs", &timeUs));
    CHECK(msg->findInt32("flags", &flags));

    handleAnOutputBuffer(index, offset, size, timeUs, flags);
    break;
}
```

Next, handleAnOutputBuffer():

```cpp
bool NuPlayer::Decoder::handleAnOutputBuffer(
        size_t index,
        size_t offset,
        size_t size,
        int64_t timeUs,
        int32_t flags) {
//    CHECK_LT(bufferIx, mOutputBuffers.size());
    sp<ABuffer> buffer;
    mCodec->getOutputBuffer(index, &buffer);

    if (index >= mOutputBuffers.size()) {
        for (size_t i = mOutputBuffers.size(); i <= index; ++i) {
            mOutputBuffers.add();
        }
    }

    mOutputBuffers.editItemAt(index) = buffer;

    buffer->setRange(offset, size);
    buffer->meta()->clear();
    buffer->meta()->setInt64("timeUs", timeUs);

    bool eos = flags & MediaCodec::BUFFER_FLAG_EOS;
    // we do not expect CODECCONFIG or SYNCFRAME for decoder

    sp<AMessage> reply = new AMessage(kWhatRenderBuffer, this);
    reply->setSize("buffer-ix", index);
    reply->setInt32("generation", mBufferGeneration);

    if (eos) {
        ALOGI("[%s] saw output EOS", mIsAudio ? "audio" : "video");

        buffer->meta()->setInt32("eos", true);
        reply->setInt32("eos", true);
    } else if (mSkipRenderingUntilMediaTimeUs >= 0) {
        if (timeUs < mSkipRenderingUntilMediaTimeUs) {
            ALOGV("[%s] dropping buffer at time %lld as requested.",
                     mComponentName.c_str(), (long long)timeUs);

            reply->post();
            return true;
        }

        mSkipRenderingUntilMediaTimeUs = -1;
    }

    mNumFramesTotal += !mIsAudio;

    // wait until 1st frame comes out to signal resume complete
    notifyResumeCompleteIfNecessary();

    if (mRenderer != NULL) {
        // send the buffer to renderer.
        mRenderer->queueBuffer(mIsAudio, buffer, reply);
        if (eos && !isDiscontinuityPending()) {
            mRenderer->queueEOS(mIsAudio, ERROR_END_OF_STREAM);
        }
    }

    return true;
}
```
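The mSkipRenderingUntilMediaTimeUs branch above implements "drop until a target time". The target comes from the `resume-at-mediaTimeUs` entry that onInputBufferFetched() found in the buffer's extra metadata. Output frames earlier than that time are answered immediately with the reply (so they are released, not rendered), and the first frame at or after the target switches skipping off. EOS bypasses the check. The following is a minimal sketch of only this rule, using hypothetical names (`SkipFilter`, `framesQueued`).

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical model of mSkipRenderingUntilMediaTimeUs.
struct SkipFilter {
    int64_t skipUntilUs = -1;  // -1 means "not skipping"

    // Returns true if the frame would be passed to the Renderer.
    bool shouldQueue(int64_t timeUs, bool eos) {
        if (eos) return true;  // the EOS branch bypasses the skip check
        if (skipUntilUs >= 0) {
            if (timeUs < skipUntilUs) return false;  // reply posted directly: dropped
            skipUntilUs = -1;  // first frame at or after the target ends skipping
        }
        return true;
    }
};

// Feed presentation times through the filter and collect the queued ones.
std::vector<int64_t> framesQueued(int64_t resumeAtUs, const std::vector<int64_t> &pts) {
    SkipFilter f;
    f.skipUntilUs = resumeAtUs;
    std::vector<int64_t> out;
    for (int64_t t : pts) {
        if (f.shouldQueue(t, false)) out.push_back(t);
    }
    return out;
}
```

Because skipping ends at the first qualifying frame, a later frame with an earlier timestamp (for example a reordered one) is no longer filtered.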

mRenderer->queueBuffer() sends the data to the Renderer.

Based on timing, the Renderer decides whether the buffer should be rendered or the frame dropped. It reports the decision back to NuPlayerDecoder through a notify, and onRenderBuffer() below uses that notify to decide whether to render the frame.
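The Renderer's side of this decision is not shown in this article. As a rough sketch only, assume the rule is "drop a video frame if it is more than about 40 ms late relative to its scheduled real time". That threshold is recalled from NuPlayerRenderer and should be treated as an assumption. The reply then carries a `render` flag and a `timestampNs` value, which onRenderBuffer() reads. `RenderReply` and `decide` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the fields the Renderer sets on the reply.
struct RenderReply {
    bool render;          // like setInt32("render", ...)
    int64_t timestampNs;  // like setInt64("timestampNs", ...)
};

// Assumed lateness threshold; not taken from this article.
constexpr int64_t kMaxLateUs = 40000;

RenderReply decide(int64_t scheduledRealTimeUs, int64_t nowUs) {
    int64_t lateByUs = nowUs - scheduledRealTimeUs;
    bool tooLate = lateByUs > kMaxLateUs;
    return {!tooLate, scheduledRealTimeUs * 1000};  // microseconds to nanoseconds
}
```

A frame that is early or only slightly late is rendered at its scheduled time. A frame that is badly late is reported back with render set to false, so the decoder only releases it.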

That notify is the kWhatRenderBuffer reply message created in handleAnOutputBuffer() and passed to queueBuffer(). When it comes back, it is handled as follows:

```cpp
case kWhatRenderBuffer:
{
    if (!isStaleReply(msg)) {
        onRenderBuffer(msg);
    }
    break;
}
```

```cpp
void NuPlayer::Decoder::onRenderBuffer(const sp<AMessage> &msg) {
    status_t err;
    int32_t render;
    size_t bufferIx;
    int32_t eos;
    CHECK(msg->findSize("buffer-ix", &bufferIx));

    if (!mIsAudio) {
        int64_t timeUs;
        sp<ABuffer> buffer = mOutputBuffers[bufferIx];
        buffer->meta()->findInt64("timeUs", &timeUs);

        if (mCCDecoder != NULL && mCCDecoder->isSelected()) {
            mCCDecoder->display(timeUs);
        }
    }

    if (msg->findInt32("render", &render) && render) {
        int64_t timestampNs;
        CHECK(msg->findInt64("timestampNs", &timestampNs));
        err = mCodec->renderOutputBufferAndRelease(bufferIx, timestampNs);
    } else {
        mNumOutputFramesDropped += !mIsAudio;
        err = mCodec->releaseOutputBuffer(bufferIx);
    }
    if (err != OK) {
        ALOGE("failed to release output buffer for %s (err=%d)",
             mComponentName.c_str(), err);
        handleError(err);
    }
    if (msg->findInt32("eos", &eos) && eos
            && isDiscontinuityPending()) {
        finishHandleDiscontinuity(true /* flushOnTimeChange */);
    }
}
```

The condition msg->findInt32("render", &render) && render reflects the notify passed back from the Renderer. If the frame should be rendered, the condition is true, and mCodec->renderOutputBufferAndRelease() renders the frame data and then returns the buffer to MediaCodec.

If the condition is false, mCodec->releaseOutputBuffer() is called instead. The buffer goes straight back to MediaCodec without being rendered, and for video the dropped-frame counter mNumOutputFramesDropped is incremented.
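The decoder side can be summarized in one handler, sketched below with hypothetical names (`ToyCodec`, `RenderMsg`, `ToyOutputSide`):

1. Ignore replies whose generation no longer matches. This is what isStaleReply() checks, by comparing the reply's "generation" against mBufferGeneration, which is assumed here to change when the decoder flushes.
2. Render only when the Renderer set "render" to a non-zero value.
3. Otherwise release the buffer and count a dropped frame.

The codec is reduced to counters, and only the video path is modeled.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical codec stub that only counts what happened to output buffers.
struct ToyCodec {
    int rendered = 0;
    int released = 0;
    void renderOutputBufferAndRelease(size_t, int64_t) { ++rendered; }
    void releaseOutputBuffer(size_t) { ++released; }
};

// Hypothetical flattened kWhatRenderBuffer message.
struct RenderMsg {
    size_t bufferIx;
    int32_t generation;
    bool hasRenderField;  // findInt32("render", ...) succeeded
    bool render;
    int64_t timestampNs;
};

struct ToyOutputSide {
    ToyCodec codec;
    int32_t bufferGeneration = 0;  // like mBufferGeneration
    int framesDropped = 0;         // like mNumOutputFramesDropped (video only)

    void onMessage(const RenderMsg &msg) {
        if (msg.generation != bufferGeneration) {
            return;  // like isStaleReply(): reply from before a flush
        }
        if (msg.hasRenderField && msg.render) {
            codec.renderOutputBufferAndRelease(msg.bufferIx, msg.timestampNs);
        } else {
            ++framesDropped;
            codec.releaseOutputBuffer(msg.bufferIx);
        }
    }
};

ToyOutputSide demoRender() {
    ToyOutputSide d;
    d.onMessage({0, 0, true, true, 1000});   // rendered
    d.onMessage({1, 0, true, false, 2000});  // Renderer said "too late"
    d.onMessage({2, 0, false, false, 0});    // skipped before reaching the Renderer
    ++d.bufferGeneration;                    // e.g. a flush
    d.onMessage({3, 0, true, true, 3000});   // stale: ignored
    return d;
}
```

Both a Renderer "drop" decision and a reply posted without a render field (the skip-until path in handleAnOutputBuffer()) end up in the release branch.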

This completes the analysis of the Decoder flow. If you have time, you can go on to study the interaction between MediaCodec and OMX. Next, we will analyze the Renderer.