Contents

1.0 Introduction

2.0 Setting up the environment

3.0 Steps to add DIoU-NMS

3.1. Install the Accuracy Checker tool

3.2. Edit "/opt/intel/openvino_2021/deployment_tools/open_model_zoo/tools/accuracy_checker/accuracy_checker/postprocessor/nms.py"

4.0 Checking yolo-v4-tf model accuracy with DIoU-NMS

4.1. Download and convert the yolo-v4-tf model

4.2. Prepare the dataset and yml file

4.2.1. Download the dataset

4.2.2. Copy and edit "accuracy-check.yml"

4.3. Run the Accuracy Checker tool to calculate the accuracy of yolo-v4-tf

5.0 References

Revision history

Date | Revision | Description

January 2021 | 0.8 | Initial version

1.0 Introduction

The YOLOv4 model was released in mid-2020 and has had a far-reaching impact on object detection with deep learning. YOLOv4 integrates many state-of-the-art techniques, including an improved non-maximum suppression (NMS) algorithm that uses Distance-IoU (DIoU) in place of intersection over union (IoU). DIoU takes into account two geometric factors of bounding box regression, the overlap area and the distance between center points, which speeds up convergence and improves performance.

In this white paper, the DIoU-NMS function is added to the OpenVINO™ Accuracy Checker tool so that it reports the correct expected accuracy for YOLOv4. Besides improving the measured accuracy, DIoU-NMS can also be used with the Post-Training Optimization Toolkit (POT) to generate an optimized INT8 model.
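As a minimal sketch of the definition above, the DIoU of two boxes can be computed as the IoU minus the squared distance between the box centers divided by the squared diagonal of the smallest box enclosing both. The function name `iou_and_diou` and the `(x_min, y_min, x_max, y_max)` box layout are assumptions made for illustration and are not part of Accuracy Checker.

```python
def iou_and_diou(box_a, box_b):
    """Return (IoU, DIoU) for two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Overlap area (zero when the boxes do not intersect).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    iou = intersection / union if union > 0 else 0.0

    # Squared distance between the two box centers.
    rho2 = ((ax1 + ax2) - (bx1 + bx2)) ** 2 / 4 + ((ay1 + ay2) - (by1 + by2)) ** 2 / 4
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(ax2, bx2) - min(ax1, bx1)) ** 2 + (max(ay2, by2) - min(ay1, by1)) ** 2

    diou = iou - rho2 / c2 if c2 > 0 else iou
    return iou, diou


iou, diou = iou_and_diou((0, 0, 10, 10), (5, 0, 15, 10))
print(f"IoU = {iou:.3f}, DIoU = {diou:.3f}")  # IoU = 0.333, DIoU = 0.256
```

For boxes that do not overlap at all, the IoU is zero regardless of how far apart they are, while the DIoU becomes more negative as the centers move apart.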

2.0 Setting up the environment

System environment:

- OpenVINO™ 2021.2

- Ubuntu 18.04 or Ubuntu 20.04

3.0 Steps to add DIoU-NMS

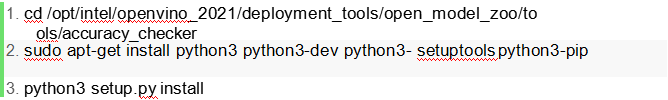

3.1. Install the Accuracy Checker tool

Install the tool by following the installation guide in the openvinotoolkit open_model_zoo repository.

3.2. Edit "/opt/intel/openvino_2021/deployment_tools/open_model_zoo/tools/accuracy_checker/accuracy_checker/postprocessor/nms.py"

Add the following code to the file:

    # ... (the earlier lines of the class, including the start of parameters(), are not shown)
                    description="Use minimum area of two bounding boxes as base area to calculate overlap"
                )
            })
            return parameters

        def configure(self):
            self.overlap = self.get_value_from_config('overlap')
            self.include_boundaries = self.get_value_from_config('include_boundaries')
            self.keep_top_k = self.get_value_from_config('keep_top_k')
            self.use_min_area = self.get_value_from_config('use_min_area')

        def process_image(self, annotations, predictions):
            for prediction in predictions:
                scores = get_scores(prediction)
                keep = self.diou_nms(
                    prediction.x_mins, prediction.y_mins, prediction.x_maxs, prediction.y_maxs, scores,
                    self.overlap, self.include_boundaries, self.keep_top_k, self.use_min_area
                )
                # Drop every box whose index was not kept by DIoU-NMS.
                prediction.remove([box for box in range(len(prediction.x_mins)) if box not in keep])

            return annotations, predictions

        @staticmethod
        def diou_nms(x1, y1, x2, y2, scores, thresh, include_boundaries=True, keep_top_k=None, use_min_area=False):
            """
            Pure Python NMS baseline.
            """
            b = 1 if include_boundaries else 0

            areas = (x2 - x1 + b) * (y2 - y1 + b)
            # Indices sorted by descending score.
            order = scores.argsort()[::-1]

            if keep_top_k:
                order = order[:keep_top_k]

            keep = []

            while order.size > 0:
                # Keep the highest-scoring remaining box and compare it with the rest.
                i = order[0]
                keep.append(i)

                xx1 = np.maximum(x1[i], x1[order[1:]])
                yy1 = np.maximum(y1[i], y1[order[1:]])
                xx2 = np.minimum(x2[i], x2[order[1:]])
                yy2 = np.minimum(y2[i], y2[order[1:]])

                w = np.maximum(0.0, xx2 - xx1 + b)
                h = np.maximum(0.0, yy2 - yy1 + b)
                intersection = w * h

                # Width and height of the smallest box enclosing both boxes.
                cw = np.maximum(x2[i], x2[order[1:]]) - np.minimum(x1[i], x1[order[1:]])
                ch = np.maximum(y2[i], y2[order[1:]]) - np.minimum(y1[i], y1[order[1:]])
                # Squared diagonal of the enclosing box (the epsilon avoids division by zero).
                c_area = cw**2 + ch**2 + 1e-16
                # Squared distance between the box centers.
                rh02 = (
                    ((x2[order[1:]] + x1[order[1:]]) - (x2[i] + x1[i]))**2 / 4
                    + ((y2[order[1:]] + y1[order[1:]]) - (y2[i] + y1[i]))**2 / 4
                )

                if use_min_area:
                    base_area = np.minimum(areas[i], areas[order[1:]])
                else:
                    base_area = (areas[i] + areas[order[1:]] - intersection)

                # DIoU score: IoU minus the center-distance penalty raised to the power 0.6.
                overlap = np.divide(
                    intersection, base_area,
                    out=np.zeros_like(intersection, dtype=float),
                    where=base_area != 0
                ) - pow(rh02 / c_area, 0.6)
                # Keep only the boxes whose DIoU score with box i does not exceed the threshold.
                order = order[np.where(overlap <= thresh)[0] + 1]  # pylint: disable=W0143

            return keep
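To try the suppression logic outside Accuracy Checker, the following standalone sketch condenses the same arithmetic into a function that takes an (N, 4) array of boxes. It is an illustration under stated assumptions, not the code added to nms.py: the signature `diou_nms(boxes, scores, thresh, beta)` is hypothetical, the `include_boundaries`, `keep_top_k` and `use_min_area` options are omitted, and the exponent 0.6 from the listing is exposed as `beta`.

```python
import numpy as np


def diou_nms(boxes, scores, thresh, beta=0.6):
    """Return the indices of the boxes kept by DIoU-NMS, highest score first.

    boxes: float array of shape (N, 4) laid out as (x_min, y_min, x_max, y_max).
    """
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = scores.argsort()[::-1]  # indices by descending score
    keep = []

    while order.size > 0:
        i, rest = order[0], order[1:]
        keep.append(int(i))

        # Plain IoU between box i and every remaining box.
        w = np.maximum(0.0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]))
        h = np.maximum(0.0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]))
        intersection = w * h
        union = areas[i] + areas[rest] - intersection
        iou = np.divide(intersection, union, out=np.zeros_like(intersection), where=union != 0)

        # Squared diagonal of the enclosing box and squared center distance.
        cw = np.maximum(x2[i], x2[rest]) - np.minimum(x1[i], x1[rest])
        ch = np.maximum(y2[i], y2[rest]) - np.minimum(y1[i], y1[rest])
        c2 = cw ** 2 + ch ** 2 + 1e-16
        rho2 = (((x1[rest] + x2[rest]) - (x1[i] + x2[i])) ** 2
                + ((y1[rest] + y2[rest]) - (y1[i] + y2[i])) ** 2) / 4

        diou = iou - (rho2 / c2) ** beta
        order = rest[diou <= thresh]  # survivors go to the next round

    return keep


boxes = np.array([[0, 0, 10, 10], [1, 0, 11, 10], [3, 0, 13, 10]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(diou_nms(boxes, scores, thresh=0.5))  # [0, 2]
```

In this example the second box is suppressed, while the third box is kept even though its plain IoU with the first box (about 0.54) exceeds the 0.5 threshold, because the center-distance penalty lowers its DIoU score below the threshold.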

4.0 Checking yolo-v4-tf model accuracy with DIoU-NMS

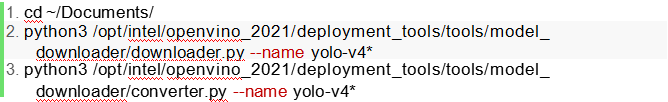

4.1. Download and convert the yolo-v4-tf model

4.2. Prepare the dataset and yml file

4.2.1. Download the dataset

    wget http://images.cocodataset.org/zips/val2017.zip
    wget http://images.cocodataset.org/annotations/annotations_trainval2017.zip
    unzip -d annotations_trainval2017/ annotations_trainval2017.zip
    unzip -d annotations_trainval2017/annotations/ val2017.zip

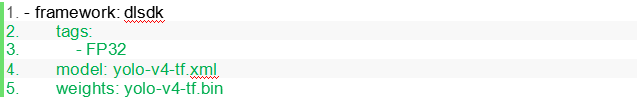

4.2.2. Copy and edit accuracy-check.yml

Copy "accuracy-check.yml":

    sudo mv /opt/intel/openvino_2021/deployment_tools/open_model_zoo/models/public/yolo-v4-tf/accuracy-check.yml ~/Documents/

Edit "accuracy-check.yml":

1. Comment out lines 2-60

2. Add the following on line 65

3. Change line 94 from "- type: nms" to "- type: diou-nms"
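Because line numbers can shift between releases of the yml file, the change of postprocessor type can also be made by matching on content instead of position. The following is a minimal sketch, assuming the postprocessor entry appears on its own line as "- type: nms"; the helper name `switch_nms_to_diou` and the excerpt are hypothetical and only illustrate the substitution, not the full contents of the yolo-v4-tf configuration.

```python
import re

# Matches a list entry of the form "- type: nms" on its own line.
NMS_ENTRY = re.compile(r"^([ \t]*-[ \t]*type:[ \t]*)nms[ \t]*$", flags=re.MULTILINE)


def switch_nms_to_diou(yml_text):
    """Replace every '- type: nms' entry with '- type: diou-nms'."""
    return NMS_ENTRY.sub(r"\1diou-nms", yml_text)


# Hypothetical excerpt of a postprocessing section, for illustration only.
excerpt = """\
postprocessing:
  - type: resize_prediction_boxes
  - type: nms
    overlap: 0.5
"""

print(switch_nms_to_diou(excerpt))
```

Only the type name changes; the other keys of the entry, such as the overlap threshold in this excerpt, are left as they are.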

4.3. Run the Accuracy Checker tool to calculate the accuracy of yolo-v4-tf

If the above settings and modifications are correct, use the following command to obtain the accuracy results.

    accuracy_check -c accuracy-check.yml -m public/yolo-v4-tf/FP32/ --definitions /opt/intel/openvino_2021/deployment_tools/open_model_zoo/tools/accuracy_checker/dataset_definitions.yml -s annotations_trainval2017/annotations/ -td CPU

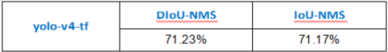

mAP results:

The results confirm that the mAP obtained with DIoU-NMS is more accurate than the mAP obtained with IoU-based NMS. Although the differences are small, they may affect the performance of the INT8 model produced when the Post-Training Optimization Toolkit (POT) is used for conversion. It is therefore important to implement the DIoU-NMS function for the OpenVINO™ tools (Accuracy Checker and POT).

5.0 References

Reference document number / location