Chinese New Year is almost here, and those of us drifting around working far from home can finally go back. The epidemic is serious, but we still have to go: when home is this far away, we only get back once or twice a year, and we're homesick! So I opened 12306 to buy a ticket, only to find that there were none left, and first-class and business-class seats are more than I can afford. What can be done?

If we can't get a direct ticket, why not spend a bit more and buy one that covers a few extra stops, or buy a ticket for a shorter stretch just to get on the train and then pay the difference on board?

Good idea. First I need to look up every stop of each train, then search for tickets stop by stop. Take G3136 as an example: it starts at Ningbo and terminates at Taiyuan South, and I board at Hangzhou East. So I just need a ticket whose departure station is anywhere between Ningbo and Hangzhou East and whose arrival station is anywhere between Hangzhou East and Taiyuan South, and I can get on the train. Just having figured out a way home gets me a little excited.

Then I started searching and was stunned. With so many stops, doesn't the number of departure and arrival station combinations explode? It's killing me. Why is it so hard to go home?
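To get a feel for how quickly the combinations pile up, here is a minimal sketch that pairs every possible boarding stop with every possible arrival stop for one train. The helper name `candidate_segments` and the stop list are assumptions made for illustration: only Ningbo, Hangzhou East and Taiyuan South come from the example above, and "Stop A" to "Stop C" are placeholders, not the real stops of G3136.

```python
from itertools import product


def candidate_segments(stops, board):
    """Pair every stop up to `board` with every stop after it."""
    i = stops.index(board)
    boarding = stops[:i + 1]    # the ticket may start at or before the boarding stop
    alighting = stops[i + 1:]   # the ticket may end anywhere after the boarding stop
    return list(product(boarding, alighting))


# Hypothetical stop list: "Stop A".."Stop C" are placeholders, not real stations.
stops = ["Ningbo", "Stop A", "Hangzhou East", "Stop B", "Stop C", "Taiyuan South"]
pairs = candidate_segments(stops, "Hangzhou East")
print(len(pairs))  # 3 boarding choices x 3 arrival choices
for origin, destination in pairs:
    print(f"{origin}-{destination}")
```

If the boarding stop is the k-th of n stops, this gives k × (n − k) searches for a single train, and a real train has far more stops than this toy list.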

So what do we do? We write some code. Isn't handing piles of repetitive work to a program exactly what makes it fast? Let's just do it.

Ten thousand words are omitted here...

Finally got it working!!!

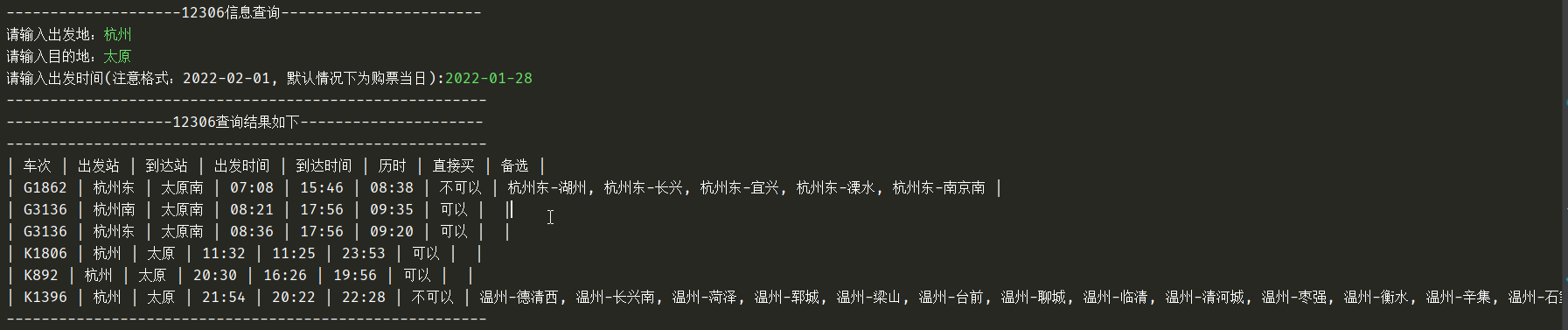

Enter the departure station, the destination and the departure date, and it prints the information for every train on that route. Some trains can be booked directly and some cannot. For those that cannot, it lists alternatives, and choosing any one of them gets you a ticket home.
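To make the output format concrete, here is a minimal sketch of how one query result can be turned into a table row. It assumes each result is a single `|`-separated string and reads the fields by position, using the same index constants as the script. The helper name `format_row` is hypothetical, and the sample record is synthetic: it was built only for this illustration and is not real 12306 output.

```python
# Field positions in one '|'-separated record (same values as in the script)
TRAIN = 3
DEPARTURE_STATION = 6
TERMINUS = 7
DEPARTURE_TIME = 8
ARRIVAL_TIME = 9
DURATION = 10
IF_BOOK = 11


def format_row(record, station_map, alternatives):
    """Turn one raw record into a '| a | b | ... |' table row."""
    fields = record.split('|')
    cells = [
        fields[TRAIN],
        station_map[fields[DEPARTURE_STATION]],  # telecode -> station name
        station_map[fields[TERMINUS]],
        fields[DEPARTURE_TIME],
        fields[ARRIVAL_TIME],
        fields[DURATION],
        'yes' if fields[IF_BOOK] == 'Y' else 'no',
        ', '.join(alternatives),
    ]
    return '| ' + ' | '.join(cells) + ' |'


# Synthetic record: the times are made up, unused fields are left empty.
sample = [''] * 12
sample[TRAIN] = 'G3136'
sample[DEPARTURE_STATION] = 'HGH'
sample[TERMINUS] = 'TNV'
sample[DEPARTURE_TIME] = '08:00'
sample[ARRIVAL_TIME] = '16:00'
sample[DURATION] = '08:00'
sample[IF_BOOK] = 'N'
record = '|'.join(sample)

station_map = {'HGH': 'Hangzhou East', 'TNV': 'Taiyuan South'}
row = format_row(record, station_map, ['Ningbo-Taiyuan South'])
print(row)
```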

The code is as follows:

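    # coding=utf-8
    import json
    import re
    import time
    import urllib.parse as parse

    import pretty_errors  # imported for its side effect: more readable tracebacks
    import requests
    from fake_useragent import UserAgent

    # Positions of the fields in one '|'-separated record of the ticket query result
    TRAIN_NUMBER = 2  # internal train number, passed to the stop-list query
    TRAIN = 3  # public train code, e.g. G3136
    DEPARTURE_STATION = 6
    TERMINUS = 7
    DEPARTURE_TIME = 8
    ARRIVAL_TIME = 9
    DURATION = 10
    IF_BOOK = 11  # 'Y' if the train can be booked directly
    DATE = 13
    # Seat fields, not used yet in this version
    NO_SEAT = 29
    HARD_SEAT = 28
    SOFT_SEAT = 27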

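    _CITY_CACHE = {}  # station name -> telecode, filled on the first call


    def Citys():
        """
        Map each station name to its telecode (e.g. 'HGH').
        The table is downloaded once and then cached, because this
        function is called for every single query.
        :return: dict of station name -> telecode
        """
        if _CITY_CACHE:
            return _CITY_CACHE
        headers = {'User-Agent': str(UserAgent().random)}
        url = 'https://kyfw.12306.cn/otn/resources/js/framework/station_name.js?station_version=1.9141'
        content = requests.get(url=url, headers=headers)
        content = content.content.decode('utf-8')
        # Drop the JavaScript assignment prefix and the trailing quote and semicolon
        content = content[content.find('=') + 2:-2]
        for city in content.split('@'):
            # In each entry the second field is the station name and the third its telecode
            fields = city.split('|')
            if len(fields) > 2:  # skips the empty chunk before the first '@'
                _CITY_CACHE[fields[1]] = fields[2]
        return _CITY_CACHE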

    def Time():
        """
        Get the current date
        :return: (year, month, day) as zero-padded strings
        """
        now = time.localtime()
        return time.strftime('%Y', now), time.strftime('%m', now), time.strftime('%d', now)


    # Only the 'http' scheme is mapped here, so this entry does not apply
    # to the https:// URLs requested below
    proxy = {'http': '122.226.57.70:8888'}

    class Train:
        def __init__(self, from_station, to_station, train_date=None):
            self.from_station = from_station
            self.to_station = to_station
            # Default to today, evaluated when the object is created
            self.train_date = train_date or '-'.join(Time())
            self.url = 'https://kyfw.12306.cn/otn/leftTicket/queryA?leftTicketDTO.%s&leftTicketDTO.%s&leftTicketDTO.%s&purpose_codes=ADULT'
            # self.headers = {
            #     'Cookie': 'JSESSIONID=073CAA21150F40AA7F05551F7E1B5F5C; RAIL_DEVICEID=STuBhcdGH45k8StSkJVyk_v6qFnIUDpyzg1O9l7IyMoOPPIEEuBEqcBRuuv0WOKJrV4MdxGi08T0AzwlQ3d4guOQ6LTNlh7emO8TdgWZe2Wp3OuA9WIKYP5Ly-a3o-f5uHGmyX8yleCV0nzQDSL9grkJHRjA4syw; RAIL_EXPIRATION=1642644949013; guidesStatus=off; highContrastMode=defaltMode; cursorStatus=off; BIGipServerpool_index=804258314.43286.0000; route=6f50b51faa11b987e576cdb301e545c4; BIGipServerotn=384827914.24610.0000'}
            self.headers = {'User-Agent': str(UserAgent().random)}
            self.session = requests.Session()
            # Load the query page once so the session stores any cookies the site sets
            self.session.get(
                'https://kyfw.12306.cn/otn/leftTicket/init?linktypeid=dc&fs=%E6%9D%AD%E5%B7%9E%E4%B8%9C,HGH&ts=%E5%A4%AA%E5%8E%9F%E5%8D%97,TNV&date=2022-01-19&flag=N,N,Y',
                headers=self.headers, proxies=proxy, timeout=5)

        def _get_json(self, url):
            """Fetch a URL with a fresh random User-Agent and decode the JSON body."""
            self.headers['User-Agent'] = str(UserAgent().random)
            content = self.session.get(url, headers=self.headers, proxies=proxy, timeout=5)
            # content = requests.get(url, headers=self.headers, proxies=proxy, timeout=5)
            return json.loads(content.content.decode('utf-8'))

        def _query_url(self, from_station, to_station):
            """Build the ticket query URL for one pair of station names."""
            return self.url % (parse.urlencode({"train_date": self.train_date}),
                               parse.urlencode({"from_station": Citys()[from_station]}),
                               parse.urlencode({"to_station": Citys()[to_station]}))

        def station(self, train_number):
            """
            List the stops where a ticket may start and where it may end
            :param train_number: internal train number (field TRAIN_NUMBER)
            :return: (stops up to and including the boarding station, stops after it)
            """
            url = 'https://kyfw.12306.cn/otn/czxx/queryByTrainNo?' + parse.urlencode({
                "train_no": train_number,
                "from_station_telecode": Citys()[self.from_station],
                "to_station_telecode": Citys()[self.to_station],
                "depart_date": self.train_date,
            })
            stations_data = self._get_json(url)['data']['data']
            stations_data.sort(key=lambda x: int(x['station_no']))
            # Assumes station_no counts from 1, so it equals the number of
            # stops up to and including the boarding station
            from_station_idx = int(
                list(filter(lambda x: self.from_station in x['station_name'], stations_data))[0]['station_no'])
            from_station_buy = [station['station_name'] for station in stations_data[:from_station_idx]]
            to_station_buy = [station['station_name'] for station in stations_data[from_station_idx:]]
            return from_station_buy, to_station_buy

        def train(self):
            """
            Query the trains for the requested trip and build the result rows
            :return: list of table rows, one per train
            """
            data = self._get_json(self._query_url(self.from_station, self.to_station))
            dict_train = data['data']['result']
            dict_map = data['data']['map']
            res = []
            for train in dict_train:
                train_split = train.split('|')
                buy = []
                # Alternatives are only needed when the train cannot be booked directly
                if train_split[IF_BOOK] == 'N':
                    from_station_buy, to_station_buy = self.station(train_split[TRAIN_NUMBER])
                    for from_station in from_station_buy:
                        for to_station in to_station_buy:
                            if self.book_if(from_station, to_station, train_split[TRAIN]):
                                buy.append(f'{from_station}-{to_station}')
                train_str = [train_split[TRAIN], dict_map[train_split[DEPARTURE_STATION]],
                             dict_map[train_split[TERMINUS]], train_split[DEPARTURE_TIME],
                             train_split[ARRIVAL_TIME], train_split[DURATION],
                             'yes' if train_split[IF_BOOK] == 'Y' else 'no', ', '.join(buy)]
                res.append('| ' + ' | '.join(train_str) + ' |')
            return res

        def book_if(self, from_station, to_station, train_number):
            """
            Query whether a given train has tickets between two stations
            :param from_station: name of the departure station on the ticket
            :param to_station: name of the arrival station on the ticket
            :param train_number: public train code (field TRAIN), e.g. G3136
            :return: True if the train can be booked for this segment
            """
            data = self._get_json(self._query_url(from_station, to_station))
            dict_train = data['data']['result']
            train = list(filter(lambda x: x.split('|')[TRAIN] == train_number, dict_train))
            if not train:
                return False
            return train[0].split('|')[IF_BOOK] == 'Y'

    if __name__ == '__main__':
        print('--------------------12306 Information Service-----------------------')
        # Names must match the station table exactly; the table from
        # station_name.js lists stations by their Chinese names
        while True:
            from_station = input('Please enter the place of departure:') or 'Hangzhou'
            if from_station in Citys():
                break
        while True:
            to_station = input('Please enter the destination:') or 'Taiyuan'
            if to_station in Citys():
                break
        pattern = re.compile(r'\d{4}-\d{2}-\d{2}')
        while True:
            date = input('Please enter the departure date (format: 2022-02-01; defaults to today):')
            # fullmatch rejects input with trailing characters after the date
            if not date or pattern.fullmatch(date):
                break
        if not date:
            date = '-'.join(Time())
        train = Train(from_station, to_station, date)
        information = train.train()
        print('-------------------------------------------------------')
        print('-------------------12306 The query results are as follows---------------------')
        print('-------------------------------------------------------')
        print('| Train number | Departure station | Destination | Departure time | Arrival time | Duration | Buy directly | Alternatives |')
        for info in information:
            print(info)
        print('-------------------------------------------------------')

This is the first version. For now it only produces a rough list of alternatives, which saves you the time of searching by hand, and many features are still missing:

- No ranking of alternatives: alternatives are listed, but nothing says which one is better

- No seat information (business / first class / second class / hard seat / no seat): a ticket may be available, but not necessarily in a seat class that suits you (a cheap one), so you may end up paying for something a little extravagant
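Both gaps could be approached along these lines. This is only a sketch under stated assumptions, not part of the first version: the helper names `seat_info` and `rank_alternatives` are hypothetical, the seat indices are simply the constants already defined in the script and have not been checked against the live API, the set of values treated as "no tickets" is a guess, and ranking by the number of extra stops is just one possible criterion. The stop list again uses placeholder stops.

```python
# Seat field positions, copied from the script's constants (unverified)
NO_SEAT = 29
HARD_SEAT = 28
SOFT_SEAT = 27

# Assumption: these values mean "no tickets" for a seat class
UNAVAILABLE = {'', '无', '--'}


def seat_info(record):
    """Return {seat class: raw availability value} for classes that have tickets."""
    fields = record.split('|')
    seats = {'no seat': NO_SEAT, 'hard seat': HARD_SEAT, 'soft seat': SOFT_SEAT}
    return {name: fields[idx] for name, idx in seats.items()
            if idx < len(fields) and fields[idx] not in UNAVAILABLE}


def rank_alternatives(stops, board, dest, pairs):
    """Sort (origin, destination) pairs by how far they stray from board -> dest."""
    b, d = stops.index(board), stops.index(dest)

    def extra_stops(pair):
        origin, destination = pair
        # stops paid for before boarding + distance of the ticket end from the goal
        return (b - stops.index(origin)) + abs(stops.index(destination) - d)

    return sorted(pairs, key=extra_stops)


# Placeholder stops, as before: only the three named stations come from the text.
stops = ["Ningbo", "Stop A", "Hangzhou East", "Stop B", "Taiyuan South"]
pairs = [("Ningbo", "Taiyuan South"),
         ("Stop A", "Taiyuan South"),
         ("Hangzhou East", "Stop B")]
ranked = rank_alternatives(stops, "Hangzhou East", "Taiyuan South", pairs)
for origin, destination in ranked:
    print(f"{origin}-{destination}")
```

Because `sorted` is stable, alternatives with the same score keep the order in which they were found. A price-based score would probably be more useful than a stop count, but the first version does not collect prices.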

I'm very happy to be going home, and I'm getting ready to build the second version.

Reference: Use a crawler to crawl 12306 ticket information