Table of contents

- 1. Mining background and objectives
- 2. Data exploration and preprocessing
  - 2.1 Data filtering
  - 2.2 Data deduplication
  - 2.3 Removing the score prefix
  - 2.4 jieba word segmentation
- 3. Topic analysis based on the LDA model
- 4. Topic word weights
- 5. How to compare the similarity between two documents in the topic space

This article uses the data from Chapter 15 of the case-study part of *Python Data Analysis and Mining in Action*: Sentiment Analysis of E-commerce Product Review Data.

Its goal is to review how review text data is processed and modeled.

1. Mining background and objectives

We perform text mining on water heater reviews from the JD.com platform, with the following modeling objectives:

- Analyze users' sentiment toward a given brand of water heater.
- Mine the strengths and weaknesses of that brand's water heaters from the review text.
- Distill the selling points of different brands of water heaters.

2. Data exploration and preprocessing

2.1 Data filtering

#-*- coding: utf-8 -*-

import pandas as pd

inputfile = '../data/huizong.csv' #Comment summary document

outputfile = '../data/meidi_jd.txt' #Save path after comment extraction

data = pd.read_csv(inputfile, encoding = 'utf-8')

data = data.loc[data['brand'] == 'Midea', ['comment']]  # Keep only Midea (美的) comments; column and brand labels must match those in huizong.csv

data.to_csv(outputfile, index = False, header = False, encoding = 'utf-8')
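Here is a minimal, self-contained sketch of the same filtering step on a tiny in-memory table, so it can run without `huizong.csv`. The rows are invented for illustration. The column names `brand` and `comment` follow the translated code above; the real file may use Chinese headers.

```python
import pandas as pd

# Hypothetical sample rows standing in for huizong.csv
data = pd.DataFrame({
    'brand': ['Midea', 'Haier', 'Midea'],
    'comment': ['heats up fast', 'quiet', 'installation fee too high'],
})

# Boolean mask selects one brand's rows; ['comment'] keeps a one-column DataFrame
midea = data.loc[data['brand'] == 'Midea', ['comment']]
print(midea['comment'].tolist())  # ['heats up fast', 'installation fee too high']
```

`.loc[mask, ['comment']]` applies the row filter and the column selection in one step, which avoids pandas' chained-indexing pitfalls.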

2.2 Data deduplication

#-*- coding: utf-8 -*-

import pandas as pd

inputfile = '../data/meidi_jd.txt' #Comment document

outputfile = '../data/meidi_jd_process_1.txt' #Save path after comment processing

data = pd.read_csv(inputfile, encoding = 'utf-8', header = None, sep = None, engine = 'python')  # sep=None lets the python engine sniff the delimiter

l1 = len(data)

data = pd.DataFrame(data[0].unique())

l2 = len(data)

data.to_csv(outputfile, index = False, header = False, encoding = 'utf-8')

print('Deleted %s duplicate comments.' % (l1 - l2))
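On a toy Series, `unique()` and `drop_duplicates()` both keep the first occurrence of each comment in its original order, so either works for this step. The sample comments are made up for illustration.

```python
import pandas as pd

# Hypothetical comments; the second and fourth repeat the first exactly
comments = pd.Series(['good', 'good', 'fast delivery', 'good'])

via_unique = pd.Series(comments.unique())  # first occurrences, in order of appearance
via_drop = comments.drop_duplicates().reset_index(drop=True)

removed = len(comments) - len(via_unique)
print('Deleted %s duplicate comments.' % removed)  # Deleted 2 duplicate comments.
```

Only exact matches are removed. Comments that differ by a single space or punctuation mark are kept as separate entries.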

I recommend a piece of software for sentiment analysis called ROST CM6. Note that the files you give it must be ANSI-encoded (on a Simplified Chinese Windows system, ANSI means GBK). Otherwise the processed files will be garbled.
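Below is a minimal sketch for re-encoding the UTF-8 output of the previous step before handing it to ROST CM6. It assumes GBK is the right "ANSI" code page on your machine. The function name and output filename are hypothetical. Characters that GBK cannot represent are replaced with `?` instead of raising an error.

```python
def utf8_to_gbk(src_path, dst_path):
    """Re-encode a UTF-8 text file as GBK (ANSI on Simplified Chinese Windows)."""
    with open(src_path, encoding='utf-8') as src:
        text = src.read()
    # errors='replace' writes '?' for characters with no GBK encoding
    with open(dst_path, 'w', encoding='gbk', errors='replace') as dst:
        dst.write(text)

# Hypothetical output name; feed the new file to ROST CM6
# utf8_to_gbk('../data/meidi_jd_process_1.txt', '../data/meidi_jd_process_1_gbk.txt')
```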

2.3 Removing the score prefix

#-*- coding: utf-8 -*-

import pandas as pd

#Parameter initialization

inputfile1 = '../data/meidi_jd_process_end_Negative emotional results.txt'

inputfile2 = '../data/meidi_jd_process_end_Positive emotional results.txt'

outputfile1 = '../data/meidi_jd_neg.txt'

outputfile2 = '../data/meidi_jd_pos.txt'

data1 = pd.read_csv(inputfile1, encoding = 'utf-8', header = None) #read in data

data2 = pd.read_csv(inputfile2, encoding = 'utf-8', header = None)

data1 = pd.DataFrame(data1[0].str.replace(r'.*?\d+?\t ', '', regex = True)) # Strip the score prefix with a regular expression (regex=True is required from pandas 2.0 on)

data2 = pd.DataFrame(data2[0].str.replace(r'.*?\d+?\t ', '', regex = True))

data1.to_csv(outputfile1, index = False, header = False, encoding = 'utf-8') #Save results

data2.to_csv(outputfile2, index = False, header = False, encoding = 'utf-8')

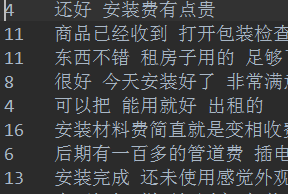

Before deletion

After deletion

![After deletion](https://img-blog.csdnimg.cn/20190823123110736.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80Mzc0NjQzMw==,size_16,color_FFFFFF,t_70)
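The sketch below applies the same pattern with Python's `re` module to a made-up line, so you can see what gets stripped. The line follows an assumed format (a score, then a tab and a space, then the comment); that format is inferred from the regular expression, not taken from ROST CM6 documentation. `count=1` removes only the first match, which protects comments that themselves contain a digit followed by a tab and a space. The pandas equivalent is `str.replace(pattern, '', n=1, regex=True)`.

```python
import re

# Lazily match up to the first run of digits followed by a tab and a space
PREFIX = re.compile(r'.*?\d+?\t ')

def strip_score_prefix(line):
    """Remove only the leading score prefix from one line of sentiment output."""
    return PREFIX.sub('', line, count=1)

sample = '-6\t water heats up in 5\t minutes'  # hypothetical line
print(strip_score_prefix(sample))
```

Without `count=1`, the second "5\t " in the sample would also match, and everything before "minutes" would be deleted.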

2.4 jieba word segmentation

#-*- coding: utf-8 -*-

import pandas as pd

import jieba # jieba Chinese word segmentation; install it separately (pip install jieba)

#Parameter initialization

inputfile1 = '../data/meidi_jd_neg.txt'

inputfile2 = '../data/meidi_jd_pos.txt'

outputfile1 = '../data/meidi_jd_neg_cut.txt'

outputfile2 = '../data/meidi_jd_pos_cut.txt'

data1 = pd.read_csv(inputfile1, encoding = 'utf-8', header = None) #read in data

data2 = pd.read_csv(inputfile2, encoding = 'utf-8', header = None)

mycut = lambda s: ' '.join(jieba.cut(s)) # Segment one comment and join the words with spaces

data1 = data1[0].apply(mycut) # Apply the segmentation function to every comment (apply runs it row by row)

data2 = data2[0].apply(mycut)

data1.to_csv(outputfile1, index = False, header = False, encoding = 'utf-8') #Save results

data2.to_csv(outputfile2, index = False, header = False, encoding = 'utf-8')

3. Topic analysis based on the LDA model

Reference: https://blog.csdn.net/sinat_26917383/article/details/79357700#1__27

#-*- coding: utf-8 -*-

import pandas as pd

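# Check the default encoding. Python 2 only: if sys.setdefaultencoding('utf-8') raises
# AttributeError: 'module' object has no attribute 'setdefaultencoding', call reload(sys) first.
# Python 3 already defaults to UTF-8 and has no setdefaultencoding, so this step can be skipped.
import sys
print(sys.getdefaultencoding()) # View the current default encoding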

#Parameter initialization

negfile = '../data/meidi_jd_neg_cut.txt'

posfile = '../data/meidi_jd_pos_cut.txt'

stoplist = '../data/stoplist.txt'

neg = pd.read_csv(negfile, encoding = 'utf-8', header = None) #read in data

pos = pd.read_csv(posfile, encoding = 'utf-8', header = None)

# sep sets the delimiter. read_csv splits on the half-width comma by default, and the comma itself
# is in the stop word list, so reading would go wrong. The workaround is to set a delimiter that
# never occurs, such as 'tipdm' (a multi-character sep requires the python engine).
stop = pd.read_csv(stoplist, encoding = 'utf-8', header = None, sep = 'tipdm', engine = 'python')
stop = set([' ', ''] + list(stop[0])) # pandas skips blank/whitespace-only lines, so add them back manually; a set speeds up lookups
neg[1] = neg[0].apply(lambda s: s.split(' ')) # Split each segmented comment into a token list
neg[2] = neg[1].apply(lambda x: [i for i in x if i not in stop]) # Drop stop words token by token
pos[1] = pos[0].apply(lambda s: s.split(' '))
pos[2] = pos[1].apply(lambda x: [i for i in x if i not in stop])

from gensim import corpora, models

# Negative topic analysis
neg_dict = corpora.Dictionary(neg[2]) # Build the dictionary (token -> integer id)
neg_corpus = [neg_dict.doc2bow(i) for i in neg[2]] # Bag-of-words corpus: (token id, count) pairs per comment
neg_lda = models.LdaModel(neg_corpus, num_topics = 3, id2word = neg_dict) # Train LDA with 3 topics; results vary between runs unless random_state is set
# for i in range(3):
#     # print(neg_lda.show_topics()) # Show all topics
#     print(neg_lda.print_topic(i)) # Show topic i

# Positive topic analysis
pos_dict = corpora.Dictionary(pos[2])
pos_corpus = [pos_dict.doc2bow(i) for i in pos[2]]
pos_lda = models.LdaModel(pos_corpus, num_topics = 3, id2word = pos_dict)
# for i in range(3):
#     # print(pos_lda.show_topics()) # Show all topics
#     print(pos_lda.print_topic(i)) # Show topic i

for topic_id, words in neg_lda.show_topics(): # (topic id, 'weight*"word" + ...') for each negative topic
    print(topic_id, words)
for topic_id, words in pos_lda.show_topics():
    print(topic_id, words)
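One alternative to the `sep='tipdm'` trick is to skip `read_csv` and read the stop-word file line by line, so that commas and other punctuation stay ordinary entries. This minimal sketch assumes one stop word per line in UTF-8. The helper names `load_stopwords` and `remove_stopwords` are hypothetical. Keeping the stop words in a `set` makes each membership test constant-time on average, which matters when every token of every review is checked.

```python
def load_stopwords(path):
    """Read one stop word per line; punctuation such as ',' stays an entry."""
    with open(path, encoding='utf-8') as f:
        # rstrip('\r\n') removes only the line ending, so ' ' survives as an entry
        words = {line.rstrip('\r\n') for line in f}
    # '' appears when split(' ') meets consecutive spaces; ' ' mirrors the original list
    return words | {' ', ''}

def remove_stopwords(segmented_line, stopwords):
    """Split a space-joined jieba output line and drop stop words."""
    return [tok for tok in segmented_line.split(' ') if tok not in stopwords]
```

With `stop = load_stopwords(stoplist)`, the two filtering steps collapse into `neg[2] = neg[0].apply(lambda s: remove_stopwords(s, stop))`.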

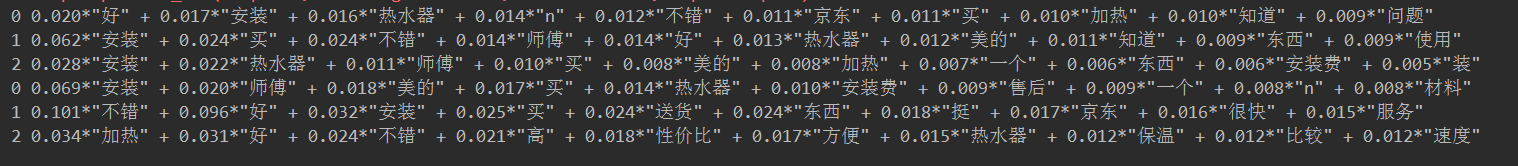

Feature words extracted from the three latent topics of the positive reviews of Midea water heaters:

Topic 1: the high-frequency feature words are good, fast delivery, heating, speed, fast, service and extraordinary. They mainly reflect JD's fast delivery and excellent service, and the fast heating speed of Midea water heaters.

Topic 2: the main points of attention are price, things (the product) and value. They reflect that the Midea water heater is good, reasonably priced and worth buying.

Topic 3: the main points of attention are after-sales, master (the installation technician), door-to-door and installation. They reflect JD's after-sales service and the technicians' on-site installation.

From the three latent topics of the negative reviews of Midea water heaters, we can see:

Topic 1: the high-frequency feature words are mainly installation, service and yuan. Topic 1 therefore mainly reflects the high installation fees of Midea water heaters and their poor after-sales service.

Topic 2: the high-frequency feature words are mainly sentiment words such as but, a little and it's okay. Topic 2 therefore mainly reflects that the Midea water heater may not meet users' needs.

Topic 3: the high-frequency feature words mainly include no, but and self. Topic 3 may mainly reflect the installation of Midea water heaters.

Based on these topics and their high-frequency feature words, the strengths of Midea water heaters are:

Affordable price, good value for money, attractive appearance, practicality, ease of use, fast heating and good service.

By contrast, users' complaints about Midea water heaters focus mainly on:

High installation fees and unsatisfactory after-sales service.

Therefore, users' reasons for buying can be summarized as: **Midea is a big, trustworthy brand, and its water heaters are affordable and cost-effective**.

Based on the LDA topic model analysis of user reviews of Midea water heaters on JD.com, we make the following suggestions to the Midea brand:

1) While keeping the strengths of ease of use and affordable pricing, improve the overall quality of the water heater.

2) Improve the overall quality of installation and customer service staff to raise service quality. Make the rules for charging installation fees clear and transparent to reduce arbitrary charges during installation, and moderately lower installation and material costs to stand out in competition among big brands.

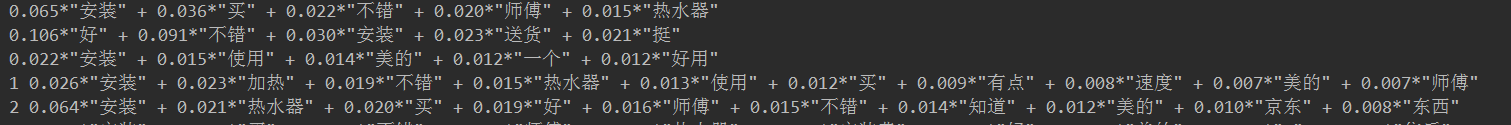

4. Topic word weights

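for i in range(3):
    print(pos_lda.print_topic(i, topn=5)) # Top 5 words by weight in positive topic i

# Return 2 of the negative topics, each with its 10 highest-weight words.
# LDA topics have no natural order, so which 2 topics are returned is arbitrary.
for topic_id, words in neg_lda.show_topics(num_topics=2, num_words=10, log=False, formatted=True):
    print(topic_id, words)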
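With `formatted=True`, each topic comes back as a single string such as `0.034*"heater" + 0.021*"install"`. To sort or plot the weights, you can parse that string back into `(word, weight)` pairs. This minimal sketch assumes gensim's `weight*"word"` terms joined by ` + `, and the helper name `parse_topic` is hypothetical. Parsing is only useful when you already hold the strings (for example, saved output). Otherwise `show_topic(topicid, topn)` or `show_topics(formatted=False)` return the pairs directly.

```python
import re

# One term of the formatted topic string: 0.034*"word"
TERM = re.compile(r'([0-9.]+)\*"([^"]*)"')

def parse_topic(formatted):
    """Turn '0.034*"a" + 0.021*"b"' into [('a', 0.034), ('b', 0.021)]."""
    return [(word, float(weight)) for weight, word in TERM.findall(formatted)]

example = '0.034*"heater" + 0.021*"install" + 0.015*"fast"'  # made-up weights
pairs = parse_topic(example)
print(pairs)  # [('heater', 0.034), ('install', 0.021), ('fast', 0.015)]
```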

5. How to compare the similarity between two documents in the topic space

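import numpy as np

# Topic distribution of each positive review as sparse (topic id, weight) pairs;
# topics below the model's minimum_probability (0.01 by default) are left out
topics = [pos_lda[c] for c in pos_corpus]
# One row per document, one column per topic; omitted topics stay 0
dense = np.zeros((len(topics), pos_lda.num_topics), float)
for ti, t in enumerate(topics):
    for tj, v in t:
        dense[ti, tj] = v # dense[document id, topic id] = weight of the document in that topic
print(dense)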

![Output of print(dense)](https://img-blog.csdnimg.cn/20190823143233596.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80Mzc0NjQzMw==,size_16,color_FFFFFF,t_70)
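The matrix above places every document in topic space. Two rows can then be compared with a standard measure. This minimal sketch implements two of them with NumPy:

- **Cosine similarity:** 1 means the same topic mix.
- **Hellinger distance:** 0 means identical distributions; 1 means no overlap.

The three rows below are made-up topic weights. gensim also provides `gensim.matutils.cossim` and `gensim.matutils.hellinger` for the same purpose. Hellinger distance assumes each row is a probability distribution. Rows of `dense` can fall slightly short of summing to 1 because small topics were dropped. Building them from `pos_lda.get_document_topics(c, minimum_probability=0)` keeps (almost) all topics.

```python
import numpy as np

def cosine_similarity(p, q):
    """Cosine of the angle between two topic-weight vectors."""
    p, q = np.asarray(p, float), np.asarray(q, float)
    return float(p @ q / (np.linalg.norm(p) * np.linalg.norm(q)))

def hellinger_distance(p, q):
    """Hellinger distance between two probability vectors, in [0, 1]."""
    p, q = np.asarray(p, float), np.asarray(q, float)
    return float(np.sqrt(0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)))

# Hypothetical rows of the document-topic matrix (3 topics)
doc_a = [0.8, 0.1, 0.1]
doc_b = [0.7, 0.2, 0.1]
doc_c = [0.0, 0.0, 1.0]

print(round(cosine_similarity(doc_a, doc_b), 3))
print(round(hellinger_distance(doc_a, doc_c), 3))
```

With the real data, `cosine_similarity(dense[0], dense[1])` compares the first two positive reviews.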