Implementing ijkplayer for Windows step by step, part 1: compiling FFmpeg 4.0.2 and building ffplay on Windows 10

Implementing ijkplayer for Windows step by step, part 2: ijkplayer source code analysis, audio and video output (video)

Implementing ijkplayer for Windows step by step, part 3: ijkplayer source code analysis, audio and video output

Implementing ijkplayer for Windows step by step, part 4: compiling FFmpeg for ijkplayer on Windows

Implementing ijkplayer for Windows step by step, part 5: using automake to generate Makefiles step by step

Implementing ijkplayer for Windows step by step, part 6: SDL2 source code analysis, the OpenGL ES rendering process on Windows

Implementing ijkplayer for Windows step by step, part 7: the end (with source code)

Implementing ijkplayer for Windows step by step, part 2: ijkplayer source code analysis, audio and video output (video)

ijkplayer only supports the Android and iOS platforms. A recent project required a player on Windows, and since I already had some knowledge of ijkplayer, I decided to try implementing one on that basis. The data receiving, parsing, and decoding parts of ijkplayer use FFmpeg code, and these parts are common across platforms (except for hardware video decoding), so the difference lies in the audio and video output. To implement ijkplayer on Windows, you need to understand this part of the code thoroughly. I have studied it for a while and am writing down what I have understood. If anything is wrong, corrections are welcome.

Some related knowledge

SDL

FFmpeg includes a simple player, ffplay, whose rendering uses SDL. I have already compiled ffplay on the Windows platform (see part 1 of this series). SDL can be downloaded prebuilt or compiled from source.

- What is SDL?

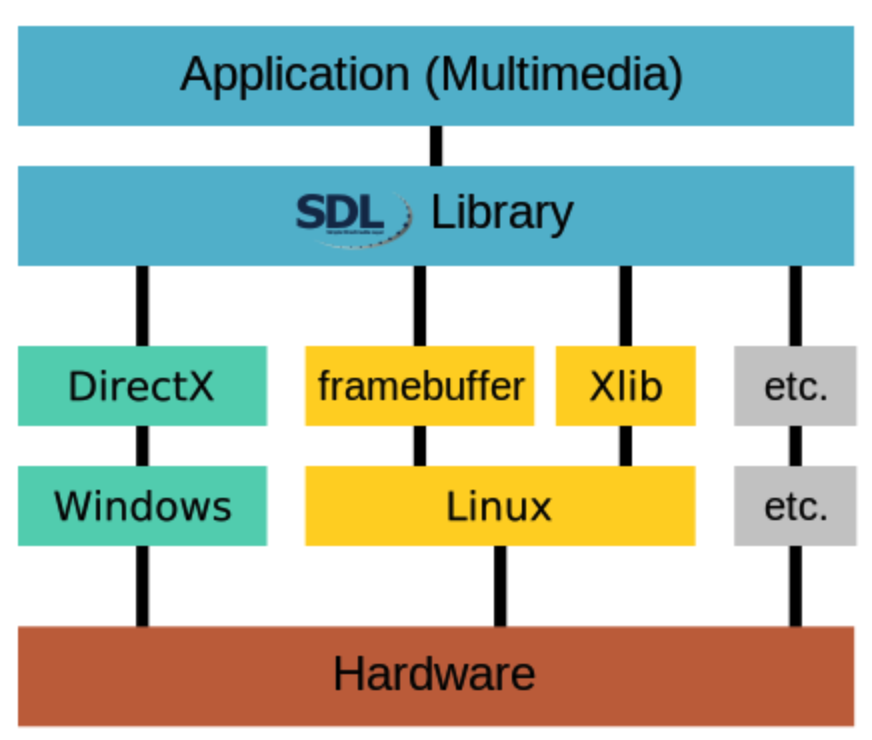

SDL (Simple DirectMedia Layer) is an open source, cross-platform multimedia development library written in C. SDL provides functions to control images, sound, input, and output, so that developers can build applications for multiple platforms (Linux, Windows, Mac OS, etc.) with the same or similar code. At present, SDL is mostly used to develop multimedia applications such as games, emulators, and media players. The accompanying figure illustrates the purpose of SDL.

Put simply, the most basic function of SDL is to provide interfaces for window creation, surface creation, and rendering on different platforms. In the case discussed here, the surface is created with EGL and the rendering is done with OpenGL ES.

OpenGL ES

What is OpenGL ES?

OpenGL ES (OpenGL for Embedded Systems) is a subset of the OpenGL 3D graphics API. It is designed for embedded devices such as mobile phones, PDAs, and game consoles. Graphics card vendors and system vendors provide the implementations of this API.

EGL

What is EGL?

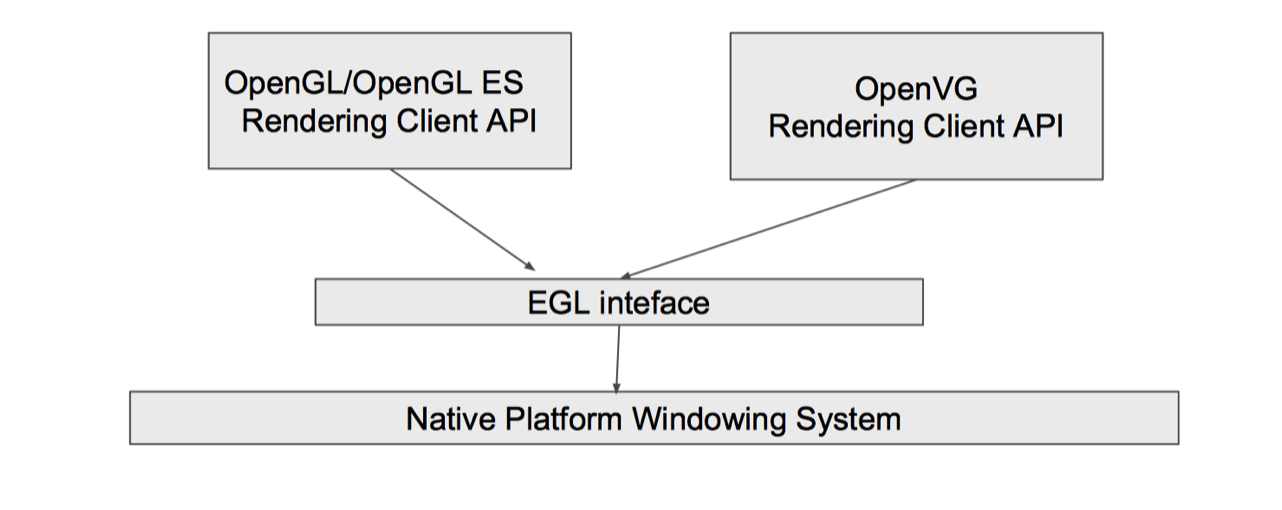

EGL is an intermediate interface layer between OpenGL ES rendering API and native platform window system. It is mainly implemented by system manufacturers. EGL provides the following mechanisms:

- Communicate with the native window system of the device

- Query the available types and configurations of drawing surfaces

- Create drawing surfaces

- Synchronize rendering between OpenGL ES and other graphics rendering APIs

- Manage rendering resources such as texture maps

Relationship between OpenGL ES and EGL

For OpenGL ES to draw on the current device, EGL is needed as the bridge between OpenGL ES and the device.

General steps for drawing with EGL

- Get the EGLDisplay object: eglGetDisplay()

- Initialize the connection to the EGLDisplay: eglInitialize()

- Get an EGLConfig object: eglChooseConfig()

- Create an EGLContext instance: eglCreateContext()

- Create an EGLSurface instance: eglCreateWindowSurface()

- Bind the EGLContext to the EGLSurface: eglMakeCurrent()

- Draw with the OpenGL ES API: gl*()

- Swap the front and back buffers for display: eglSwapBuffers()

- Unbind the EGLContext from the EGLSurface: eglMakeCurrent() with EGL_NO_SURFACE and EGL_NO_CONTEXT

- Delete the EGLSurface object: eglDestroySurface()

- Delete the EGLContext object: eglDestroyContext()

- Terminate the connection to the EGLDisplay: eglTerminate()

The drawing process of ijkplayer through EGL basically follows the steps above.

Source code analysis

Now let's walk through the audio and video output source code from the beginning, taking the Android platform as an example.

Image rendering related structures

```c
struct SDL_Vout {
    SDL_mutex *mutex;

    SDL_Class       *opaque_class;
    SDL_Vout_Opaque *opaque;
    SDL_VoutOverlay *(*create_overlay)(int width, int height, int frame_format, SDL_Vout *vout);
    void (*free_l)(SDL_Vout *vout);
    int (*display_overlay)(SDL_Vout *vout, SDL_VoutOverlay *overlay);

    Uint32 overlay_format;
};

typedef struct SDL_Vout_Opaque {
    ANativeWindow   *native_window; // video image window
    SDL_AMediaCodec *acodec;
    int              null_native_window_warned; // reduce log for null window
    int              next_buffer_id;

    ISDL_Array       overlay_manager;
    ISDL_Array       overlay_pool;

    IJK_EGL         *egl; // EGL state used for OpenGL ES rendering
} SDL_Vout_Opaque;

typedef struct IJK_EGL
{
    SDL_Class      *opaque_class;
    IJK_EGL_Opaque *opaque;

    EGLNativeWindowType window;

    EGLDisplay display;
    EGLSurface surface;
    EGLContext context;

    EGLint width;
    EGLint height;
} IJK_EGL;
```

Initialize the render object of the player

The render object is generated by calling SDL_VoutAndroid_CreateForAndroidSurface:

```c
IjkMediaPlayer *ijkmp_android_create(int(*msg_loop)(void*))
{
    ...
    mp->ffplayer->vout = SDL_VoutAndroid_CreateForAndroidSurface();
    if (!mp->ffplayer->vout)
        goto fail;
    ...
}
```

SDL_VoutAndroid_CreateForAndroidSurface in turn calls SDL_VoutAndroid_CreateForANativeWindow, which generates the player render object. Take a look at several members of the player render object:

- func_create_overlay creates the render object for a video frame.

- func_display_overlay is the image display interface function.

- func_free_l releases resources.

After a video frame is decoded, the relevant data is stored in that frame's render object, and the image is then rendered by calling func_display_overlay.

```c
SDL_Vout *SDL_VoutAndroid_CreateForANativeWindow()
{
    SDL_Vout *vout = SDL_Vout_CreateInternal(sizeof(SDL_Vout_Opaque));
    if (!vout)
        return NULL;

    SDL_Vout_Opaque *opaque = vout->opaque;
    opaque->native_window = NULL;
    if (ISDL_Array__init(&opaque->overlay_manager, 32))
        goto fail;
    if (ISDL_Array__init(&opaque->overlay_pool, 32))
        goto fail;

    opaque->egl = IJK_EGL_create();
    if (!opaque->egl)
        goto fail;

    vout->opaque_class    = &g_nativewindow_class;
    vout->create_overlay  = func_create_overlay;
    vout->free_l          = func_free_l;
    vout->display_overlay = func_display_overlay;

    return vout;
fail:
    func_free_l(vout);
    return NULL;
}
```
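The function pointers stored in the render object work like a small virtual table: generic code calls through the pointers, and each platform supplies its own implementations. The following is a minimal sketch of that pattern only. All names in it (DemoVout, DemoOverlay, the demo_* functions, frames_displayed) are hypothetical and are not ijkplayer's types or functions.

```c
#include <stdlib.h>

/* Hypothetical stand-ins for SDL_Vout / SDL_VoutOverlay; not ijkplayer types. */
typedef struct DemoOverlay { int width, height; } DemoOverlay;
typedef struct DemoVout DemoVout;

struct DemoVout {
    DemoOverlay *(*create_overlay)(int width, int height, DemoVout *vout);
    int  (*display_overlay)(DemoVout *vout, DemoOverlay *overlay);
    void (*free_l)(DemoVout *vout);
    int frames_displayed; /* demo-only counter so the effect is observable */
};

/* Platform-specific implementations (here: trivial ones). */
static DemoOverlay *demo_create_overlay(int width, int height, DemoVout *vout)
{
    (void)vout;
    DemoOverlay *overlay = malloc(sizeof(*overlay));
    if (!overlay)
        return NULL;
    overlay->width  = width;
    overlay->height = height;
    return overlay;
}

static int demo_display_overlay(DemoVout *vout, DemoOverlay *overlay)
{
    if (!overlay)
        return -1;
    vout->frames_displayed++;
    return 0;
}

static void demo_free_l(DemoVout *vout)
{
    free(vout);
}

/* Factory: allocate the object and plug in the implementations. */
DemoVout *demo_vout_create(void)
{
    DemoVout *vout = calloc(1, sizeof(*vout));
    if (!vout)
        return NULL;
    vout->create_overlay  = demo_create_overlay;
    vout->display_overlay = demo_display_overlay;
    vout->free_l          = demo_free_l;
    return vout;
}

/* Generic entry points: callers never name the platform functions directly. */
DemoOverlay *demo_vout_create_overlay(DemoVout *vout, int width, int height)
{
    if (vout && vout->create_overlay)
        return vout->create_overlay(width, height, vout);
    return NULL;
}

int demo_vout_display(DemoVout *vout, DemoOverlay *overlay)
{
    if (vout && vout->display_overlay)
        return vout->display_overlay(vout, overlay);
    return -1;
}
```

A Windows port could, under this design, keep the generic entry points unchanged and only provide a different factory that installs Windows-specific functions.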

Creation of video frame rendering objects

The function that creates the render object:

```c
static SDL_VoutOverlay *func_create_overlay_l(int width, int height, int frame_format, SDL_Vout *vout)
{
    switch (frame_format) {
    case IJK_AV_PIX_FMT__ANDROID_MEDIACODEC:
        return SDL_VoutAMediaCodec_CreateOverlay(width, height, vout);
    default:
        return SDL_VoutFFmpeg_CreateOverlay(width, height, frame_format, vout);
    }
}
```

You can see that there are two ways of rendering images on the Android platform: one is MediaCodec, and the other is OpenGL. Because OpenGL is platform independent, we focus on this rendering method.

Every time the video decoder decodes a frame, the frame is inserted into the frame queue. The player does some processing on each frame it inserts. For example, it calls SDL_Vout_CreateOverlay for each frame to create a render object. Look at the following code:

```c
static int queue_picture(FFPlayer *ffp, AVFrame *src_frame, double pts, double duration, int64_t pos, int serial)
{
    ...
    // Take the writable video frame at the tail of the queue
    if (!(vp = frame_queue_peek_writable(&is->pictq)))
        return -1;
    ...
    // SDL_Vout_CreateOverlay is called inside this function to create
    // (initialize) a render object for the current frame
    alloc_picture(ffp, src_frame->format);
    ...
    // Fill the render object with the frame data
    if (SDL_VoutFillFrameYUVOverlay(vp->bmp, src_frame) < 0) {
        av_log(NULL, AV_LOG_FATAL, "Cannot initialize the conversion context\n");
        exit(1);
    }
    ...
    // Finally, push the frame to the queue for the render/display function
    frame_queue_push(&is->pictq);
}
```
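The peek-writable, fill, push sequence above follows a ring-buffer pattern. Below is a deliberately simplified, single-threaded sketch of that pattern. The names (DemoFrame, DemoFrameQueue, DEMO_QUEUE_SIZE, demo_queue_*) are hypothetical, and the locking and condition-variable waiting that a real multi-threaded frame queue needs are left out; here a full queue simply returns NULL.

```c
#include <stddef.h>

#define DEMO_QUEUE_SIZE 3 /* hypothetical capacity, chosen for the demo */

typedef struct DemoFrame { double pts; } DemoFrame;

typedef struct DemoFrameQueue {
    DemoFrame slots[DEMO_QUEUE_SIZE];
    int rindex; /* next slot to read  */
    int windex; /* next slot to write */
    int size;   /* number of filled slots */
} DemoFrameQueue;

/* Return the slot the producer may fill, or NULL when the queue is full. */
DemoFrame *demo_queue_peek_writable(DemoFrameQueue *q)
{
    if (q->size >= DEMO_QUEUE_SIZE)
        return NULL;
    return &q->slots[q->windex];
}

/* Publish the slot returned by demo_queue_peek_writable(). */
void demo_queue_push(DemoFrameQueue *q)
{
    q->windex = (q->windex + 1) % DEMO_QUEUE_SIZE;
    q->size++;
}

/* Return the oldest frame without removing it, or NULL when empty. */
DemoFrame *demo_queue_peek(DemoFrameQueue *q)
{
    return q->size > 0 ? &q->slots[q->rindex] : NULL;
}

/* Drop the oldest frame, freeing its slot for the producer. */
void demo_queue_next(DemoFrameQueue *q)
{
    if (q->size == 0)
        return;
    q->rindex = (q->rindex + 1) % DEMO_QUEUE_SIZE;
    q->size--;
}
```

The producer writes directly into the slot it peeked, so no frame is copied when it is pushed; the consumer side uses the same idea with peek and next.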

alloc_picture creates the render object for a video frame in the video frame queue:

```c
static void alloc_picture(FFPlayer *ffp, int frame_format)
{
    ...
    vp->bmp = SDL_Vout_CreateOverlay(vp->width, vp->height,
                                     frame_format,
                                     ffp->vout);
    ...
}
```

Continue with the creation of the render object:

```c
SDL_VoutOverlay *SDL_VoutFFmpeg_CreateOverlay(int width, int height, int frame_format, SDL_Vout *display)
```

Look at the parameters of this function. The first two are the width and height of the image, the third is the format of the video frame, and the fourth is the player render object mentioned above. The player render object also has a member for the video frame format (overlay_format), but it is not set in the initialization function shown earlier. After some searching, I found two places where the player's video frame format is initialized. One is the following function:

```c
inline static void ffp_reset_internal(FFPlayer *ffp)
{
    ...
    ffp->overlay_format = SDL_FCC_RV32;
    ...
}
```

The other is through a configuration option:

```c
{ "overlay-format", "fourcc of overlay format",
    OPTION_OFFSET(overlay_format), OPTION_INT(SDL_FCC_RV32, INT_MIN, INT_MAX),
    .unit = "overlay-format" },
```

The video frame image format can be specified in Java code as follows:

```java
m_IjkMediaPlayer.setOption(IjkMediaPlayer.OPT_CATEGORY_PLAYER, "overlay-format", IjkMediaPlayer.SDL_FCC_RV32);
```

Return to the function that creates the video frame render object:

```c
Uint32 overlay_format = display->overlay_format;
switch (overlay_format) {
    case SDL_FCC__GLES2: {
        switch (frame_format) {
            case AV_PIX_FMT_YUV444P10LE:
                overlay_format = SDL_FCC_I444P10LE;
                break;
            case AV_PIX_FMT_YUV420P:
            case AV_PIX_FMT_YUVJ420P:
            default:
#if defined(__ANDROID__)
                overlay_format = SDL_FCC_YV12;
#else
                overlay_format = SDL_FCC_I420;
#endif
                break;
        }
        break;
    }
}
```

These lines mean that if the player is configured to render with OpenGL (SDL_FCC__GLES2), the overlay format is chosen from the frame's pixel format and mapped to one of the image formats defined by ijkplayer.
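The mapping in that switch can be restated as a small pure function. This is only a sketch: the enum values are hypothetical placeholders for the SDL_FCC_* and AV_PIX_FMT_* constants, and the compile-time `#if defined(__ANDROID__)` is replaced by a runtime flag (is_android) so both branches can be exercised.

```c
/* Hypothetical placeholders for FFmpeg's AV_PIX_FMT_* values. */
typedef enum {
    DEMO_PIX_YUV420P,
    DEMO_PIX_YUVJ420P,
    DEMO_PIX_YUV444P10LE,
    DEMO_PIX_OTHER
} DemoPixFmt;

/* Hypothetical placeholders for ijkplayer's SDL_FCC_* values. */
typedef enum {
    DEMO_FCC_GLES2,
    DEMO_FCC_RV32,
    DEMO_FCC_I420,
    DEMO_FCC_YV12,
    DEMO_FCC_I444P10LE
} DemoOverlayFmt;

/*
 * Resolve the overlay format for one frame.
 * - Any explicitly requested format is passed through unchanged.
 * - "GLES2" means "let the frame's pixel format decide".
 */
DemoOverlayFmt demo_resolve_overlay_format(DemoOverlayFmt requested,
                                           DemoPixFmt frame_format,
                                           int is_android)
{
    if (requested != DEMO_FCC_GLES2)
        return requested;

    switch (frame_format) {
    case DEMO_PIX_YUV444P10LE:
        return DEMO_FCC_I444P10LE;
    case DEMO_PIX_YUV420P:
    case DEMO_PIX_YUVJ420P:
    default:
        return is_android ? DEMO_FCC_YV12 : DEMO_FCC_I420;
    }
}
```

For a Windows build, the non-Android branch is the relevant one, so 4:2:0 frames would resolve to the I420 layout in this sketch.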

After the video frame is processed, the relevant data is saved in the following object:

```c
SDL_VoutOverlay_Opaque *opaque = overlay->opaque;
```

The video frame handler is assigned to the render object:

```c
overlay->func_fill_frame = func_fill_frame;
```

Next, managed_frame and linked_frame are defined and initialized:

```c
opaque->managed_frame = opaque_setup_frame(opaque, ff_format, buf_width, buf_height);
if (!opaque->managed_frame) {
    ALOGE("overlay->opaque->frame allocation failed\n");
    goto fail;
}
overlay_fill(overlay, opaque->managed_frame, opaque->planes);
```

The difference between these two frames is explained below.

Video frame processing

For the processing of video frames, take a look at the func_fill_frame function:

```c
static int func_fill_frame(SDL_VoutOverlay *overlay, const AVFrame *frame)
```

It takes two parameters: the first is the render object initialized in alloc_picture, as described earlier, and frame is the decoded video frame.

At the beginning of this function, the image format specified in the player is compared with the image format of the video frame. If the two are the same, for example both are YUV420, there is no need to call sws_scale to convert the image format; otherwise a conversion is needed. When no conversion is required, the render object is filled through linked_frame. When a conversion is required, it is filled through managed_frame.
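The linked versus managed distinction can be illustrated with a small sketch. The assumptions are stated plainly: DemoFrame, DemoOverlay, DEMO_MAX_BYTES, and demo_fill_frame are hypothetical names, formats are plain integers, and a memcpy stands in for the real pixel-format conversion that sws_scale would perform. The point shown is only who owns the pixel memory in each path.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define DEMO_MAX_BYTES 16 /* hypothetical size of the overlay-owned buffer */

typedef struct DemoFrame {
    int            format;
    const uint8_t *data;
    size_t         size;
} DemoFrame;

typedef struct DemoOverlay {
    int            format;                  /* format the renderer expects      */
    uint8_t        managed[DEMO_MAX_BYTES]; /* overlay-owned buffer ("managed") */
    const uint8_t *pixels;                  /* what the renderer will read      */
    int            linked;                  /* 1: pixels point into the frame   */
} DemoOverlay;

/* Fill the overlay from a decoded frame; returns 0 on success, -1 on error. */
int demo_fill_frame(DemoOverlay *overlay, const DemoFrame *frame)
{
    if (!overlay || !frame || !frame->data || frame->size > DEMO_MAX_BYTES)
        return -1;

    if (frame->format == overlay->format) {
        /* Same format: reference the decoder's buffer, no copy ("linked"). */
        overlay->pixels = frame->data;
        overlay->linked = 1;
        return 0;
    }

    /* Different format: write into the overlay's own buffer ("managed").
     * memcpy is a placeholder for a real conversion such as sws_scale. */
    memcpy(overlay->managed, frame->data, frame->size);
    overlay->pixels = overlay->managed;
    overlay->linked = 0;
    return 0;
}
```

The linked path avoids a per-frame copy, which is why matching the overlay format to the decoder's output format is the cheaper configuration.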

At this point the render object of the video frame has been filled with data and inserted into the video frame queue. The next step is to display it.

Video rendering thread

```c
static int video_refresh_thread(void *arg)
{
    FFPlayer *ffp = arg;
    VideoState *is = ffp->is;
    double remaining_time = 0.0;
    while (!is->abort_request) {
        if (remaining_time > 0.0)
            av_usleep((int)(int64_t)(remaining_time * 1000000.0));
        remaining_time = REFRESH_RATE;
        if (is->show_mode != SHOW_MODE_NONE && (!is->paused || is->force_refresh))
            video_refresh(ffp, &remaining_time);
    }

    return 0;
}
```

This thread eventually calls video_refresh to render. Inside video_refresh:

```c
if (vp->serial != is->videoq.serial) {
    frame_queue_next(&is->pictq);
    goto retry;
}
```

This checks whether the frame belongs to the current playback sequence, that is, whether its serial matches the serial of the video packet queue. If not (for example after a seek), the stale frame is dropped and the next one is examined. Audio and video are then synchronized. If the next frame is not yet due, the remaining sleep time is shortened and control jumps to display, so the frame already on screen stays up:

```c
if (time < is->frame_timer + delay) {
    *remaining_time = FFMIN(is->frame_timer + delay - time, *remaining_time);
    goto display;
}
```
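The timing test above can be isolated as a pure function to make the arithmetic easier to follow. This is a sketch with hypothetical names (DemoRefreshDecision, demo_check_frame_due); it mirrors only the comparison and the FFMIN step shown in the excerpt, not the rest of the synchronization logic. All times are in seconds.

```c
typedef struct DemoRefreshDecision {
    int    next_frame_due; /* 1: time to advance to the next frame        */
    double remaining_time; /* how long the refresh thread may sleep next  */
} DemoRefreshDecision;

/*
 * now            current clock time
 * frame_timer    time at which the frame on screen was shown
 * delay          how long that frame should stay on screen
 * remaining_time sleep budget set by the caller (e.g. the refresh rate)
 */
DemoRefreshDecision demo_check_frame_due(double now, double frame_timer,
                                         double delay, double remaining_time)
{
    DemoRefreshDecision d = { 1, remaining_time };

    if (now < frame_timer + delay) {
        /* Too early: sleep no longer than the time left for this frame. */
        double wait = frame_timer + delay - now;
        d.next_frame_due = 0;
        d.remaining_time = wait < remaining_time ? wait : remaining_time;
    }
    return d;
}
```

For example, with frame_timer = 1.0, delay = 0.25, and now = 1.0, the next frame is not due and the sleep budget is capped at 0.25 seconds.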

There is another jump to display, taken when playback is paused. I am not sure why; presumably it lets the frame already on screen be redrawn while paused.

```c
if (is->paused)
    goto display;
```

Finally, execution reaches the following function for display:

```c
static int func_display_overlay_l(SDL_Vout *vout, SDL_VoutOverlay *overlay);
```

Before looking at it, here are some preparations that happen before display.

Surface creation

The Surface is created in Java code and passed to native code through a JNI method.

```java
public void setDisplay(SurfaceHolder sh) {
    mSurfaceHolder = sh;
    Surface surface;
    if (sh != null) {
        surface = sh.getSurface();
    } else {
        surface = null;
    }
    _setVideoSurface(surface);
    updateSurfaceScreenOn();
}
```

JNI method

```c
static JNINativeMethod g_methods[] = {
    ...
    { "_setVideoSurface", "(Landroid/view/Surface;)V", (void *) IjkMediaPlayer_setVideoSurface },
    ...
};
```

Window creation

The native code initializes the window from the Surface that was passed in:

```c
void SDL_VoutAndroid_SetAndroidSurface(JNIEnv *env, SDL_Vout *vout, jobject android_surface)
{
    ANativeWindow *native_window = NULL;
    if (android_surface) {
        native_window = ANativeWindow_fromSurface(env, android_surface); // initialize the window
        if (!native_window) {
            ALOGE("%s: ANativeWindow_fromSurface: failed\n", __func__);
            // do not return fail here;
        }
    }

    SDL_VoutAndroid_SetNativeWindow(vout, native_window);
    if (native_window)
        ANativeWindow_release(native_window);
}
```

Selection of video rendering mode

After the window is created, go back and look at the render/display function:

```c
static int func_display_overlay_l(SDL_Vout *vout, SDL_VoutOverlay *overlay)
```

It takes two parameters: the first is the player render object mentioned above, and the second is the render object of the video frame. The rendering method depends on the image format set in these two objects. As far as I can see, the format member of the video frame object is assigned from the image format of the player render object:

```c
SDL_VoutOverlay *SDL_VoutFFmpeg_CreateOverlay(int width, int height, int frame_format, SDL_Vout *display)
{
    Uint32 overlay_format = display->overlay_format;
    ...
    SDL_VoutOverlay *overlay = SDL_VoutOverlay_CreateInternal(sizeof(SDL_VoutOverlay_Opaque));
    if (!overlay) {
        ALOGE("overlay allocation failed");
        return NULL;
    }
    ...
    overlay->format = overlay_format;
    ...
    return overlay;
}
```

The rendering method is chosen in one of three ways:

- If the video frame image format is SDL_FCC__AMC (MediaCodec), only native rendering is supported, so the EGL objects used for OpenGL rendering are released.

- If the video frame image format is SDL_FCC_RV24, SDL_FCC_I420, or SDL_FCC_I444P10LE, the frame is rendered with OpenGL.

- Other image formats can be rendered either natively or with OpenGL. It depends on whether the image rendering mode set on the player is SDL_FCC__GLES2: if it is, OpenGL rendering is used; otherwise, native rendering is used.
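The three cases in the list can be written down as one decision function. The sketch below uses hypothetical enum names in place of the SDL_FCC_* constants and returns which renderer would be chosen; it does not perform any rendering or release any EGL resources.

```c
/* Hypothetical placeholders for ijkplayer's SDL_FCC_* values. */
typedef enum {
    DEMO_FCC_AMC,       /* MediaCodec output */
    DEMO_FCC_RV24,
    DEMO_FCC_I420,
    DEMO_FCC_I444P10LE,
    DEMO_FCC_YV12,
    DEMO_FCC_RV32,
    DEMO_FCC_GLES2      /* player-level "render with OpenGL" setting */
} DemoFormat;

typedef enum {
    DEMO_RENDER_NATIVE, /* copy into the native window buffer */
    DEMO_RENDER_OPENGL  /* draw through EGL + OpenGL ES       */
} DemoRenderer;

/*
 * overlay_format: format stored in the video frame's render object
 * vout_format:    format configured on the player render object
 */
DemoRenderer demo_pick_renderer(DemoFormat overlay_format, DemoFormat vout_format)
{
    switch (overlay_format) {
    case DEMO_FCC_AMC:
        /* Case 1: MediaCodec frames can only go to the native window. */
        return DEMO_RENDER_NATIVE;
    case DEMO_FCC_RV24:
    case DEMO_FCC_I420:
    case DEMO_FCC_I444P10LE:
        /* Case 2: these formats are rendered with OpenGL. */
        return DEMO_RENDER_OPENGL;
    default:
        /* Case 3: either path works; the player setting decides. */
        return vout_format == DEMO_FCC_GLES2 ? DEMO_RENDER_OPENGL
                                             : DEMO_RENDER_NATIVE;
    }
}
```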

The native rendering method is relatively simple: copying the image data stored in the overlay into the ANativeWindow_Buffer is enough. OpenGL rendering is more complex.
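The copy in the native path usually has to be done row by row, because the source image and the window buffer can have different row strides. A minimal sketch of such a copy follows; demo_copy_plane is a hypothetical helper, not an ijkplayer or NDK function, and it takes strides in bytes. Note that ANativeWindow_Buffer reports its stride in pixels, so a real caller would multiply it by the bytes per pixel first.

```c
#include <stdint.h>
#include <string.h>

/*
 * Copy `rows` rows of `row_bytes` bytes each from src to dst.
 * dst_stride / src_stride are the distances in bytes between the starts
 * of consecutive rows and may be larger than row_bytes (padding).
 * Returns 0 on success, -1 on invalid arguments.
 */
int demo_copy_plane(uint8_t *dst, int dst_stride,
                    const uint8_t *src, int src_stride,
                    int row_bytes, int rows)
{
    if (!dst || !src || row_bytes < 0 || rows < 0)
        return -1;
    if (row_bytes > dst_stride || row_bytes > src_stride)
        return -1;

    for (int y = 0; y < rows; y++) {
        memcpy(dst + (size_t)y * (size_t)dst_stride,
               src + (size_t)y * (size_t)src_stride,
               (size_t)row_bytes);
    }
    return 0;
}
```

When both strides equal row_bytes the loop degenerates into one contiguous copy, but keeping the per-row form avoids writing into the padding of the destination buffer.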

OpenGL rendering

As mentioned earlier, rendering with OpenGL requires EGL to communicate with the underlying window system. Take a look at the whole rendering process:

```c
EGLBoolean IJK_EGL_display(IJK_EGL* egl, EGLNativeWindowType window, SDL_VoutOverlay *overlay)
{
    EGLBoolean ret = EGL_FALSE;
    if (!egl)
        return EGL_FALSE;

    IJK_EGL_Opaque *opaque = egl->opaque;
    if (!opaque)
        return EGL_FALSE;

    if (!IJK_EGL_makeCurrent(egl, window))
        return EGL_FALSE;

    ret = IJK_EGL_display_internal(egl, window, overlay);
    eglMakeCurrent(egl->display, EGL_NO_SURFACE, EGL_NO_SURFACE, EGL_NO_CONTEXT);
    eglReleaseThread(); // FIXME: call at thread exit
    return ret;
}
```

It takes three parameters: the first is the initialized EGL object, the second is the native window created earlier, and the third is the video frame render object. The IJK_EGL_makeCurrent function performs steps one through six of the EGL drawing process described above and saves the EGL initialization state in the egl variable.

```c
static EGLBoolean IJK_EGL_makeCurrent(IJK_EGL* egl, EGLNativeWindowType window)
```

The IJK_EGL_display_internal function creates the renderer and then calls the OpenGL ES API to render the data.

```c
static EGLBoolean IJK_EGL_display_internal(IJK_EGL* egl, EGLNativeWindowType window, SDL_VoutOverlay *overlay)
```

References

https://blog.csdn.net/leixiaohua1020/article/details/14215391

https://blog.csdn.net/leixiaohua1020/article/details/14214577