preface

Recently, I encountered the problem of multi-point evaluation, but I haven't learned the classical polynomial modular version, so I learned the new method directly.

This new method is actually very easy to understand, but it still took me too long (I'm a vegetable)

as paper As mentioned in, the code of this practice is very simple and doesn't need to be adjusted for long.

Transpose principle

This principle refers to the linear transformation through matrix multiplication (e.g A x Ax Ax, x x x is a column vector) and we want to find A T x A^Tx ATx, except that you can directly A A A multiply after transposition x x x. You can also reverse and change the steps in the process of linear transformation to achieve the purpose.

Specifically, we divide the linear transformation process into three instructions:

- swap i j, swap variables i i i, j j The value of j;

- Mul, I, C, put the variable i i i times the constant c c c;

- add i j c, the variable j j j plus i ∗ c i*c i∗c, c c c is a constant, i i i is different from j j Variable of j.

(in fact, there is the fourth kind, which is to output the answers to the array, but I think it is essentially the third kind)

Then, to realize the transpose of transformation, we only need to reverse the order of instructions, and then change the third instruction add i j c into add j i c. If the multiplication here is polynomial multiplication or matrix multiplication, it will become the transpose of this multiplication operation.

As for why this is right, I don't know much about it (it is said to use induction), but you should be able to realize its correctness.

Since matrix multiplication can fit all linear operations, the linear algorithms we usually use, such as binomial inversion and polynomial inversion, are applicable to this principle.

Polynomial multiplication transpose

Because the paper says that the transpose of Fourier transform is itself, we skip Fourier and directly say polynomial multiplication.

As mentioned above, the third instruction will use multiplication and transpose, which is also an important pre knowledge in the new multi-point evaluation method.

For polynomial multiplication f ∗ g = ∑ i = 0 n + m − 2 ( ∑ j = 0 i f j ⋅ g i − j ) x i f*g=\sum_{i=0}^{n+m-2}(\sum_{j=0}^if_j\cdot g_{i-j})x^i f * g = ∑ i=0n+m − 2 (∑ j=0i ⋅ fj ⋅ gi − j) xi, we think of it as the multiplication of vector and matrix, and we can deduce its transpose as ( f ∗ g ) T = ∑ i = 0 n − m ( ∑ j = 0 m f m − 1 − j + i g m − 1 − j ) x i (f*g)^T=\sum_{i=0}^{n-m}(\sum_{j=0}^mf_{m-1-j+i}g_{m-1-j})x^i (f∗g)T=∑i=0n−m(∑j=0mfm−1−j+igm−1−j)xi. Because it is related to the subscript difference, the transpose of polynomial multiplication is also called "subtraction convolution".

It looks a little complicated, but in fact it is f f f and coefficient after turning g g g after ordinary polynomial multiplication m − 1 m-1 m − 1 to n − 1 n-1 n − 1.

Polynomial multiplication is n n n times sum m m m times becomes n + m n+m n+m times, its transpose is n + m n+m n+m times sum m m m times becomes n n n times, so they are inverted. Pay special attention to the degree of polynomials in the code.

Multipoint evaluation

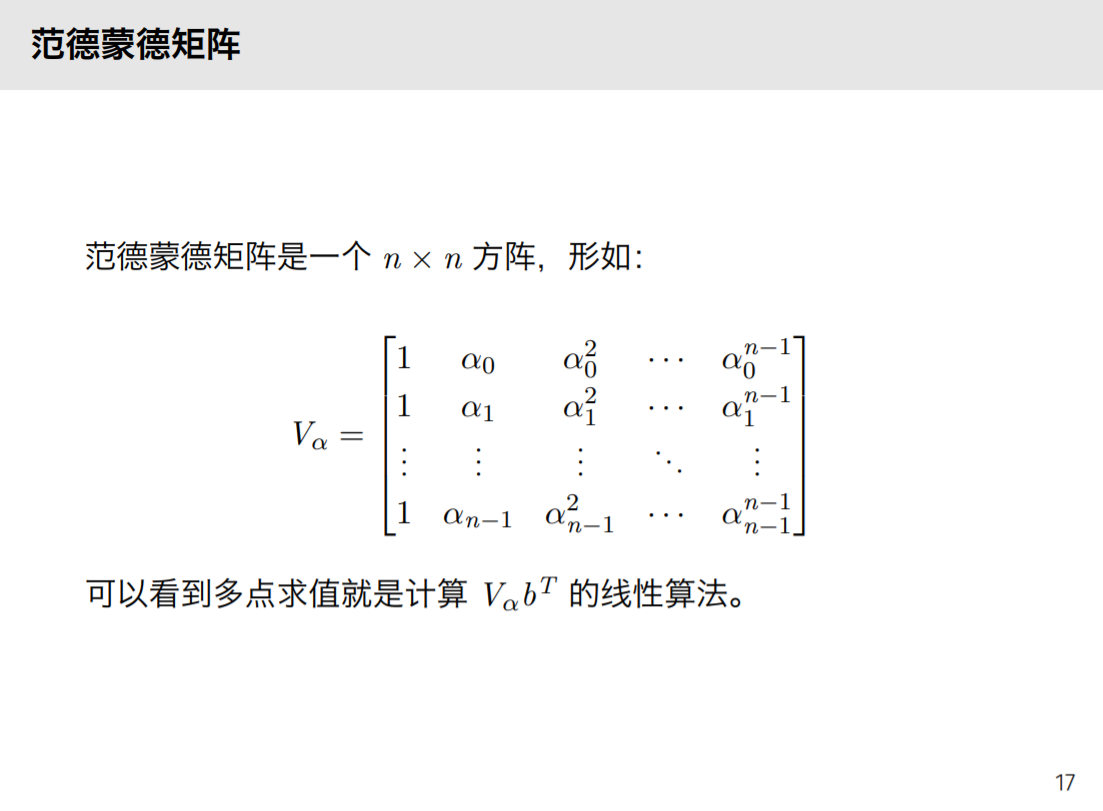

It is not convenient to print the matrix here, so the PDF is cut directly:

We put

∑

j

=

0

n

−

1

b

j

1

−

a

j

x

\sum_{j=0}^{n-1}\frac{b_j}{1-a_jx}

Σ j=0n − 1 − 1 − aj xbj becomes

b

0

(

1

−

a

1

x

)

.

.

.

(

1

−

a

n

−

1

x

)

+

(

1

−

a

0

x

)

b

1

.

.

.

(

1

−

a

n

−

1

x

)

+

.

.

.

+

(

1

−

a

0

x

)

.

.

.

(

1

−

a

n

−

2

x

)

b

n

−

1

(

1

−

a

0

x

)

(

1

−

a

1

x

)

.

.

.

(

1

−

a

n

−

1

x

)

\frac{b_0(1-a_1x)...(1-a_{n-1}x)+(1-a_0x)b_1...(1-a_{n-1}x)+...+(1-a_0x)...(1-a_{n-2}x)b_{n-1}}{(1-a_0x)(1-a_1x)...(1-a_{n-1}x)}

(1−a0x)(1−a1x)...(1−an−1x)b0(1−a1x)...(1−an−1x)+(1−a0x)b1...(1−an−1x)+...+(1−a0x)...(1−an−2x)bn−1

This thing can obviously be maintained by the line segment tree. We set two polynomials at each node of the line segment tree

F

l

,

r

F_{l,r}

Fl,r , and

T

l

,

r

T_{l,r}

Tl,r , represents the numerator and denominator of the answer in the interval, then the transfer we want to do is

T

l

,

r

=

T

l

,

m

i

d

∗

T

m

i

d

+

1

,

r

F

l

,

r

=

F

l

,

m

i

d

∗

T

m

i

d

+

1

,

r

+

F

m

i

d

+

1

,

r

∗

T

l

,

m

i

d

T_{l,r}=T_{l,mid}*T_{mid+1,r}\\ F_{l,r}=F_{l,mid}*T_{mid+1,r}+F_{mid+1,r}*T_{l,mid}

Tl,r=Tl,mid∗Tmid+1,rFl,r=Fl,mid∗Tmid+1,r+Fmid+1,r∗Tl,mid

In particular,

F

l

,

l

=

b

l

,

T

l

,

l

=

1

−

a

l

x

F_{l,l}=b_l,T_{l,l}=1-a_lx

Fl,l=bl,Tl,l=1−alx. The last thing we ask is

F

0

,

n

−

1

T

0

,

n

−

1

{F_{0,n-1}\over T_{0,n-1}}

T0,n − 1, F0,n − 1, need to use the first-order polynomial to find the inverse.

The above method is the transpose of the original multi-point evaluation, so using the transpose principle, we can transpose the above calculation process to obtain the normal multi-point evaluation.

According to the law, we reverse the process, that is, recursion from top to bottom on the line segment tree. Since there can only be one variable (a vector or polynomial) in the transpose principle, we might as well calculate all variables in advance normally

T

T

T. Then treat it as a constant, so that our operation when recursing from top to bottom is

F

l

,

m

i

d

′

=

(

F

l

,

r

′

∗

T

m

i

d

+

1

,

r

)

T

F

m

i

d

+

1

,

r

′

=

(

F

l

,

r

′

∗

T

l

,

m

i

d

)

T

F'_{l,mid}=(F'_{l,r}*T_{mid+1,r})^T\\ F'_{mid+1,r}=(F'_{l,r}*T_{l,mid})^T

Fl,mid′=(Fl,r′∗Tmid+1,r)TFmid+1,r′=(Fl,r′∗Tl,mid)T

When recursing to the bottom,

F

′

F'

There is only one value in F ', which is the answer to this position.

According to the principle, the top F ′ F' F 'should be the sum of the polynomials you want to evaluate at multiple points 1 T 0 , n − 1 \frac{1}{T_{0,n-1}} T0,n − 1 − 1 is the result of "subtraction convolution".

But what we ask for F F The number of times F should be n − 1 n-1 n − 1, and the original polynomial is n − 1 n-1 n − 1 time, T 0 , n − 1 T_{0,n-1} T0,n − 1 yes n n n times, direct subtraction convolution, obviously the number is not right.

Think again, our original process F 0 , n − 1 T 0 , n − 1 {F_{0,n-1}\over T_{0,n-1}} T0,n − 1, F0,n − 1 are equivalent, so they are removed after multiplication n n For the parts n times and later, removing these parts is equivalent to multiplying by the constant 0. After the transposition of this instruction, it remains unchanged, or the original position is multiplied by 0. But the original polynomial is n − 1 n-1 n − 1 time, its n n After n times, it will be 0, so you only need to expand its size to 2 n − 1 2n-1 2n − 1 time, and then do subtraction convolution.

code

It is said that the constant of this method is much smaller, which just makes up for the constant defect of vector.

#define ll long long

const ll MOD=998244353;

#define arr vector<ll>

inline ll ksm(ll a,ll b,ll mo){//Counting

ll res=1;

for(;b;b>>=1,a=a*a%mo)if(b&1)res=res*a%mo;

return res;

}

//NTT begin

#define g 3ll

int rev[MAXN<<1];//MAXN should be set large enough

ll omg[MAXN<<1];

inline int NTT(arr&a,int inv){

int n=a.size(),bit=1;

while((1<<bit)<n)bit++;

n=(1<<bit);

if((int)a.size()<n)a.resize(n);

for(int i=0;i<n;i++){

rev[i]=(rev[i>>1]>>1)|((i&1)<<(bit-1));

if(i<rev[i])swap(a[i],a[rev[i]]);

}ll tmp,x,y;omg[0]=1;

for(int m=1,mi=(MOD-1)>>1,om;m<n;m<<=1,mi>>=1){

tmp=ksm(g,inv<0?MOD-1-mi:mi,MOD),om=0;

for(int i=1;i<m;i++)omg[i]=omg[i-1]*tmp%MOD;

for(int i=0;i<n;i+=(m<<1),om=0)

for(int j=i;j<i+m;j++,om++)

x=a[j],y=a[j+m]*omg[om]%MOD,

a[j]=(x+y)%MOD,a[j+m]=(x-y+MOD)%MOD;

}if(inv<0){

ll iv=ksm(n,MOD-2,MOD);

for(int i=0;i<n;i++)a[i]=a[i]*iv%MOD;

}return n;

}

#undef g

//NTT end

//pre-functions begin

inline void getinv(arr&a){//Polynomial inversion (independent of polynomial multiplication function and reducing constant)

arr f,h;

int n=a.size(),m=1,N=0;

h.push_back(ksm(a[0],MOD-2,MOD));

while(m<n){

m<<=1,f=a,f.resize(m);

f.resize(m<<1),h.resize(m<<1);

N=NTT(f,1),NTT(h,1);

for(int i=0;i<N;i++)h[i]=(MOD+2-f[i]*h[i]%MOD)*h[i]%MOD;

NTT(h,-1),h.resize(m);

}a=h,a.resize(n);

}

inline arr mul(arr a,arr b){//Returns the new array polynomial multiplication

int n=a.size(),m=b.size();

int N=NTT(a,n+m-1,1);NTT(b,N,1);

for(int i=0;i<N;i++)a[i]=a[i]*b[i]%MOD;

NTT(a,-1),a.resize(n+m-1);

return a;

}

inline arr demul(arr a,arr b){//Subtractive convolution

int n=a.size(),m=b.size();

if(n<m)return arr();

reverse(b.begin(),b.end());

a.resize(n+m-1),b.resize(n+m-1);

int N=NTT(a,1);NTT(b,1);

for(int i=0;i<N;i++)a[i]=a[i]*b[i]%MOD;

NTT(a,-1);

for(int i=0;i<=n-m;i++)a[i]=a[i+m-1];

a.resize(n-m+1);

return a;

}

//pre-functions end

//segment tree begin

arr t[MAXN<<2];

ll ans[MAXN];

inline void build(int x,int l,int r,const arr&a){//Seek T

if(l==r){

t[x].clear(),t[x].push_back(1);

t[x].push_back((MOD-a[l])%MOD);

return;

}int mid=(l+r)>>1;

build(x<<1,l,mid,a),build(x<<1|1,mid+1,r,a);

t[x]=mul(t[x<<1],t[x<<1|1]);

}

inline void godown(int x,int l,int r,arr F){//Transpose F '

if(l==r){ans[l]=F[0];return;}

int mid=(l+r)>>1;

godown(x<<1,l,mid,demul(F,t[x<<1|1]));

godown(x<<1|1,mid+1,r,demul(F,t[x<<1]));

}

//segment tree end

inline void solve(arr&b,arr&a){

int n=max(b.size(),a.size());

b.resize(n<<1),a.resize(n);

build(1,0,n-1,a);

getinv(t[1]);

godown(1,0,n-1,demul(b,t[1]));

}